SD RESOURCE GOLDMINE

character limit hit, move here for the next version, this will no longer be updated: https://rentry.org/sdupdates2

Warnings:

- Ckpts/hypernetworks/embeddings are not interently safe as of right now. They can be pickled/contain malicious code. Use your common sense and protect yourself as you would with any random download link you would see on the internet.

- Monitor your GPU temps and increase cooling and/or undervolt them if you need to. There have been claims of GPU issues due to high temps.

Links are dying. If you happen to have a file listed in https://rentry.org/sdupdates#deadmissing or that's not on this list, please get it to me.

There is now a github for this rentry: https://github.com/questianon/sdupdates. This should allow you to see changes across the different updates. There is also a WIP embedding directory here: https://github.com/questianon/sdupdates/wiki

If you know how to do stuff in markdown and html/can make a webpage easily/want to contribute in any way, contact me

Quicklinks:

- News: https://rentry.org/sdupdates#newsfeed

- Prompting: https://rentry.org/sdupdates#prompting

- Models, Embeddings, and Hypernetworks: https://rentry.org/sdupdates#models-embeddings-and-hypernetworks

- Embeddings: https://rentry.org/sdupdates#embeddings

- Hypernetworks: https://rentry.org/sdupdates#hypernetworks

- Aesthetic Gradients: https://rentry.org/sdupdates#aesthetic-gradients

- Models, embeds, and hypernetworks that might be sus: https://rentry.org/sdupdates#polar-resources

- DEAD/MISSING: https://rentry.org/sdupdates#deadmissing

- Training: https://rentry.org/sdupdates#training

- Datasets: https://rentry.org/sdupdates#datasets

- FAQ: https://rentry.org/sdupdates#common-questions-ctrlcmd-f

- Link Dump: https://rentry.org/sdupdates#rentrys-link-dump-will-sort

- Confirmed Drama: https://rentry.org/sdupdates#confirmed-drama

- Unconfirmed Drama: https://rentry.org/sdupdates#unconfirmed-drama

- Fed Bait Information: https://rentry.org/sdupdates#fed-bait-information

- Hall of Fame: https://rentry.org/sdupdates#hall-of-fame

- Miscellaneous: https://rentry.org/sdupdates#misc

- Github: https://github.com/questianon/sdupdates/

NEWSFEED

Don't forget to git pull to get a lot of new optimizations + updates, if SD breaks go backward in commits until it starts working again

Instructions:

- If on Windows:

- go to the webui directory

git pullpip -r install requirements.txt

- If on Linux:

- go to the webui directory

source ./venv/bin/activate

a. if this doesn't work, runpython -m venv venvbeforehandgit pullpip -r install requirements.txt

10/31

- You might be able to get more performance on windows by disabling hardware scheduling

- New inpainting options added

- Extensions manager added for AUTOMATIC1111's webui

- Pixiv adding AI art filter: https://www.pixiv.net/info.php?id=8729

- VAE selector PR: https://github.com/AUTOMATIC1111/stable-diffusion-webui/pull/3986

- Open sourced, AI-powered creator released

- https://github.com/carefree0910/carefree-creator#webui--local-deployment

- Can run local and through their servers

- Copied from their github:

- An infinite draw board for you to save, review and edit all your creations.

- Almost EVERY feature about Stable Diffusion (txt2img, img2img, sketch2img, variations, outpainting, circular/tiling textures, sharing, ...).

- Many useful image editing methods (super resolution, inpainting, ...).

- Integrations of different Stable Diffusion versions (waifu diffusion, ...).

- GPU RAM optimizations, which makes it possible to enjoy these features with an NVIDIA GeForce GTX 1080 Ti

- ERNIE-ViLG 2.0 (new open source text to image generator developed by Baidu): https://arxiv.org/abs/2210.15257

- https://github.com/PaddlePaddle/ERNIE

- Supposedly has benefits over SD?

- (old news) Google AI video showcase: https://imagen.research.google/video/

- (old news) Facebook Img2video: https://makeavideo.studio/

- (Info by anon) A look into better trainings: https://arxiv.org/pdf/2210.15257.pdf

train multiple denoisers, use one for the starting few steps to form rough shapes, use one for the last few steps to finalize detail

while training, use a image classifier to mark regions corresponding to subjects in the text descriptor. If text descriptor doesn't exist, add it to the prompt

modify attention function to increase the attention weight between subjects found by the classifier

modify loss function to give regions marked by the classifier more weight - PaintHua.com - New GUI focusing on Inpainting and Outpainting

- Training a TI on 6gb: https://pastebin.com/iFwvy5Gy

- Have xformers enabled.

This diff does 2 things.

- enables cross attention optimizations during TI training. Voldy disabled the optimizations during training because he said it gave him bad results. However, if you use the InvokeAI optimization or xformers after the xformers fix it does not give you bad results anymore.

This saves around 1.5GB vram with xformers - unloads vae from VRAM during training. This is done in hypernetworks, and idk why it wasn't in the code for TI. It doesn't break anything and doesn't make anything worse.

This saves around .2 GB VRAM

After you apply this, turn on Move VAE and CLIP to RAM and Use cross attention optimizations while training

- enables cross attention optimizations during TI training. Voldy disabled the optimizations during training because he said it gave him bad results. However, if you use the InvokeAI optimization or xformers after the xformers fix it does not give you bad results anymore.

- Have xformers enabled.

- Google AI demonstration: https://youtu.be/YxmAQiiHOkA

- Deconvolution and Checkerboard Artifacts: https://distill.pub/2016/deconv-checkerboard/

10/30

- (oldish news) Mubert, text to music released: https://github.com/MubertAI/Mubert-Text-to-Music

- app to listen: https://apps.apple.com/app/apple-store/id1154429580

- search for music: https://mubert.com/render

- Huggingface demo: https://huggingface.co/spaces/Mubert/Text-to-Music

- Stable diffusion "deepfake" (good with few keyframes)

- Git pull for some updates

- Hypernetwork training fixed (continuing training off old checkpoints for HNs and embeds is still broken)

- shrink the size of ckpts and grow them back to their original size: https://github.com/bmaltais/dehydrate

- not sure if safe, but it seems to work

- Blender camera animations to deforum released: https://github.com/micwalk/blender-export-diffusion

- New Windows based Dreambooth solution with Adam8bit support (should run on 8gb and 12gb cards): https://github.com/bmaltais/kohya_ss

- instructions: https://note.com/kohya_ss/n/n61c581aca19b

- new, so not sure if pickled

- Img2music (fun): https://huggingface.co/spaces/fffiloni/img-to-music

- GUI helper for manual tagging and cropping released: https://github.com/arenatemp/sd-tagging-helper

- Dreambooth PR: https://github.com/AUTOMATIC1111/stable-diffusion-webui/pull/3995

- Video diffusion models: https://video-diffusion.github.io/

- Dataset shuffling should be fixed now so that it actually shuffles.

10/29

- SD multiplayer: https://huggingface.co/spaces/huggingface-projects/stable-diffusion-multiplayer

- kind of like r/place

- Big inpainting updated released (composition stays the same but style changes)

- Unreal engine 5 plugin released

- Hires broken on the latest commit

- (old news) new hypernetwork training added

10/28

- Largest Korean hypernetwork/embedding sharing forum post with a ton of hypernetworks/embeddings + images (highly recommended)

- https://arca.live/b/hypernetworks/60940948

- has an English explanation of some stuff at the top

- koreanon requests for good embeddings to be posted in the comments with artist name

Rumor on /g/ that AUTOMATIC1111 was conscripted into the russian armyFalse rumor, AUTOMATIC1111 said that he's fine and is just resting from Stable Diffusion and will probably:- work on PRs soon

- "make a tab for extensions for list and easy install from URL"

- Custom poseable doll released

- Note for training: You can set a learning rate of "0.1:500, 0.01:1000, 0.001:10000" in textual inversion and it will follow the schedule

- Parseq released

- parameter sequencer

- "Generate videos with tight control and flexible interpolation over many Stable Diffusion parameters (such as seed, scale, prompt weights, denoising strength...), as well as input processing parameter (such as zoom, pan, 3D rotation...)"

- https://github.com/rewbs/sd-parseq

- Img2tiles script released

- Stable Diffusion Prompt Book released

- AI Pictionary released

- CIO statement from a few days ago

- (old news) Imagic running with Stable Diffusion

- (old news) government letter to Stability AI: https://eshoo.house.gov/sites/eshoo.house.gov/files/9.20.22LettertoNSCandOSTPonStabilityAI.pdf

- (old news) Deviant Art CEO supports ai (?)

- https://www.deviantart.com/wannabby, check their posts about AI

- (old news) imagic: img2img but better

10/27

- hypernetwork training is currently broken (unsure if fixed now)

10/26

- Created https://github.com/questianon/sdupdates

- Rentry backup for now

- Features people might like:

- Commit history so you know what's new

- Watch so you can get notifications

- The formatting might be nicer

- New generative models, supposedly faster than diffusers

- https://github.com/Newbeeer/Poisson_flow

- More info: https://www.assemblyai.com/blog/an-introduction-to-poisson-flow-generative-models/

- electrodynamics inspired (the current diffusion model is thermodynamics/statistical physics inspired)

- 10-20x faster

- https://colab.research.google.com/drive/1neY6OovzZELul9t2OTdThUitptNVnuHR?usp=sharing

- Automatic1111's webui supports subfolders and symlinks

- saves space + allows for organization

- https://www.reddit.com/r/StableDiffusion/comments/ye2fwh/tip_automatic1111_supports_model_subfolders/

- Stable Diffusion plugin for Krita and Photoshop (not much info, so not sure if safe)

10/21 - 10/25 (big news bolded, big thanks to asuka-test-imgur-anon-who-also-made-the-speedrun-tutorial for some info)

- Latest git pull can break SD (windows)

- https://github.com/AUTOMATIC1111/stable-diffusion-webui/issues/3688

- update with "git pull origin master" instead of "git pull" until the branch is deleted on the github side

- gaming cock flower arrangement club (Japanese lore)

- Deforum (video animation) extension released

- Many new VAE's (finetunes) released

- Check https://rentry.org/sdmodels for most of them

- NovelAI explanation of all their implemations

- Infinite outpainting: https://github.com/lkwq007/stablediffusion-infinity

- Safer pickleless (unpickleable) format, still needs to be implemented

- https://github.com/huggingface/safetensors

- "This repository implements a new simple format for storing tensors safely (as opposed to pickle) and that is still fast (zero-copy)."

- Temp folder storing generations, space issues (might be fixed now)

- Dreambooth training (now with gui https://github.com/smy20011/dreambooth-gui ), referenced via prompt (?)

- Guided inpainting (video inpainting with keyframes)

- If you build Hydrus from source, someone made a fork to import the tags and other metadata automatically.

- AUTOMATIC1111's history tab now an extension:

- Imagic Stable Diffusion training in 11 GB VRAM

- Interpolate script for AUTOMATIC1111's webui

- Text2LIVE: Text-Driven Layered Image and Video Editing

- AUTOMATIC1111's webui has an api

- StabilityAI released a new VAE

- Improves eyes, hands, colors, and img2img

- https://huggingface.co/stabilityai

- Tutorial + how to use on ALL models (applies for the NAI vae too): https://www.reddit.com/r/StableDiffusion/comments/yaknek/you_can_use_the_new_vae_on_old_models_as_well_for/

- Aesthetic Gradients released

- voldy's announcement https://desuarchive.org/g/thread/89343235/#89345163

- breakdown of new interface https://desuarchive.org/g/thread/89343235/#89345258

- more explanation https://desuarchive.org/g/thread/89343235/#89345322

- https://github.com/AUTOMATIC1111/stable-diffusion-webui/wiki/Extensions

- https://github.com/AUTOMATIC1111/stable-diffusion-webui-aesthetic-gradients

- Lama Cleaner released with v1.5 support

- https://github.com/Sanster/lama-cleaner

- Good at watermark removal

- https://www.reddit.com/r/StableDiffusion/comments/y90hzz/lama_cleaner_add_runwaysd15inpainting_support_the

- Mini tutorial in the comments

- Dance Diffusion (AI Music) released by HarmonAI

- Discord: https://discord.gg/MunJTXwk

- AI Music by Google

- 8-10gb Dreambooth for AUTOMATIC1111's webui WIP

- hlky’s/sd-webui rebranded as Sygil.dev

- Working on Project Nataili, a common Standard Diffusion backend

- Goal is to centralize all resources

- https://www.reddit.com/r/StableDiffusion/comments/yd5p5s/hlkyssdwebui_announcing_sygildev_project_nataili/

- visualise.ai

- Account required

- Free unlimited 512x512/64 step runs

- Optimized dreambooth

- train under 10 minutes without class images on multiple subjects, retrainable-ish model

- Tutorial: https://www.reddit.com/r/StableDiffusion/comments/yd9oks/new_simple_dreambooth_method_is_out_train_under/

- Github: https://github.com/TheLastBen/fast-stable-diffusion

- Many sites banned AI art

- Hypernetwork structures added

- more numbers = more vram needed = deeper hypernetwork = better results (?)

- Deep hypernetworks are suited for training with large datasets

- Waifu Diffusion 1.4 roadmap:

- https://gist.github.com/harubaru/313eec09026bb4090f4939d01f79a7e7

- Release date: December 1

- Discord: https://discord.gg/SqrKhArt

- Extensions added to AUTOMATIC1111's webui

- Test embeddings before you download them

- UMI AI, a wildcard engine, released

- Free

- Tutorial: https://www.patreon.com/posts/umi-ai-official-73544634

- Discord (SFW and NSFW): https://discord.gg/9K7j7DTfG2

- More info in https://rentry.org/sdupdates#prompting

- 3D AI stuff

- Pose Estimation

10/20

- SD v1.5 released by RunwayML

- Uncensored, legitimate 1.5

- Huggingface: https://huggingface.co/runwayml/stable-diffusion-v1-5

- Tweet: https://twitter.com/runwayml/status/1583109275643105280

- https://nitter.it/runwayml/status/1583109275643105280#m

- https://rentry.org/sdmodels

- Reddit thread: https://www.reddit.com/r/StableDiffusion/comments/y91pp7/stable_diffusion_v15/

- Drama recap: https://www.reddit.com/r/StableDiffusion/comments/y99yb1/a_summary_of_the_most_recent_shortlived_so_far/

- https://rentry.org/sdupdates#confirmed-drama for recap + links

10/19

- Git pull for a lot of new stuff

- theme argument: https://github.com/AUTOMATIC1111/stable-diffusion-webui/commit/665beebc0825a6fad410c8252f27f6f6f0bd900b

- A lot of optimizations

- Layered hypernetworks

- Time left estimation (if jobs take more than 60 sec)

- Minor UI changes

- Runway released new SD inpainting/outpainting model

- Stability AI event recap

- https://www.reddit.com/r/StableDiffusion/comments/y6v0v9/stability_event_happening_now_news_so_far/

- Animation API next week

- DreamStudio Pro in progress (automatic gen of video from music + latent space exploration)

- will fund 100 PHDs this year

- Their cluster is 4000 A100s on AWS and plans to grow 5x-10x next year

- will reduce price of Dreamstudio by half

- Game universes created with AI: https://twitter.com/Plinz/status/1582202096983498754

- Dreambooth GUI: https://github.com/smy20011/dreambooth-gui

- NAI possibly tinkering with their backend based on tests by touhou anons

- better hands

- Unreal Engine 5 SD plugin: https://github.com/albertotrunk/UE5-Dream

- Underreported: You can highlight a part of your prompt and ctrl + up/down to change weights

10/18

- Clarification on censoring SD's next model by the question asker

- https://rentry.org/sdupdates#confirmed-drama

- TLDR: SD will probably release a censored model before releasing their 1.5 model because of legal issues (like with CP)

10/17

- $101 million in funding from Stability AI for opensource and free AI

- xformers degrading quality

- https://github.com/AUTOMATIC1111/stable-diffusion-webui/pull/2967

- It's a bug that causes the variance with --xformers

- New trinart model

- Discovered hi-res generations are affected by the video card used

- https://desuarchive.org/g/thread/89259005/#89260871

- TLDR: 3000s series are similar, 2000s and 1000s will vary

10/16

-

Remote code execution exploit discovered 2 days ago

- AUTOMATIC pushed an update to deal with this. Use the hide_ui_dir_config if you plan on using --share after updating. Set a password.

- Gradio fix in progress: https://github.com/gradio-app/gradio/issues/2470

- https://github.com/AUTOMATIC1111/stable-diffusion-webui/issues/2571

- https://github.com/AUTOMATIC1111/stable-diffusion-webui/discussions/920

- https://github.com/AUTOMATIC1111/stable-diffusion-webui/issues/1576

- https://www.reddit.com/r/StableDiffusion/comments/y56qb9/security_warning_do_not_use_share_in/

- Deforum script released for AUTOMATIC1111's webui

- Google open sourced their prompt-to-prompt method

10/15

- Embeddings now shareable via images

- No need to download .pt files anymore

- To use, finish training an embedding, download the image of the embedding (the one with the circles at the edges), and place it in your embeddings folder. The name at the top of the image is the name you use to call the embedding.

- https://www.reddit.com/r/StableDiffusion/comments/y4tmzo/auto1111_new_shareable_embeddings_as_images/

- Example (2nd and 3rd image): https://www.reddit.com/gallery/y4tmzo

- Stability AI update pipeline (https://www.reddit.com/r/StableDiffusion/comments/y2x51n/the_stability_ai_pipeline_summarized_including/)

- This week:

- Updates to CLIP (not sure about the specifics, I assume the output will be closer to the prompt)

- Clip-guidance comes out open source (supposedly)

- Next week:

- DNA Diffusion (applying generative diffusion models to genetics)

- A diffusion based upscaler ("quite snazzy")

- A new decoding architecture for better human faces ("and other elements")

- Dreamstudio credit pricing adjustment (cheaper, that is more options with credits)

- Discord bot open sourcing

- Before the end of the year:

- Text to Video ("better" than Meta's recent work)

- LibreFold (most advanced protein folding prediction in the world, better than Alphafold, with Havard and UCL teams)

- "A ton" of partnerships to be announced for "converting closed source AI companies into open source AI companies"

- (Potentially) CodeCARP, Code generation model from Stability umbrella team Carper AI (currently training)

- (Potentially) Gyarados (Refined user preference prediction for generated content by Carper AI, currently training)

- (Potentially) CHEESE (some sort of platform for user preference prediction for generated content)

- (Potentially) Dance Diffusion, generative audio architecture from Stability umbrella project HarmonAI (there is already a colab for it and some training going on i think)

- This week:

- Animation Stable Diffusion:

- Stable Diffusion in Blender

- https://airender.gumroad.com/l/ai-render

- Uses Dreamstudio for now

- DreamStudio will now use CLIP guidance

- Stable Diffusion running on iPhone

- Cycle Diffusion: https://github.com/ChenWu98/cycle-diffusion

- txt2img > img2img editors, look at github to see examples

- Information about difference merging added to FAQ

- Distributed model training planned

- SD Training Labs server

- Gradio updated

- Optimized, increased speeds

- Git pulling should be safe

10/14

- Fed bait claims

- You can generate forever by right clicking on the generate button

- Can now load checkpoint, clip skip, and hypernet from infotext for AUTO's webui

- Advanced Prompt Tuning, minimizes prompt typing and optimzes output quality

- https://github.com/7eu7d7/APT-stable-diffusion-auto-prompt

- planned to be PR on AUTO's repo once updated

- 3D photo inpainting

- Beginner's guide released:

- New method for merging models on AUTOMATIC1111's UI

- Double model merging + difference merging using a third model

10/13

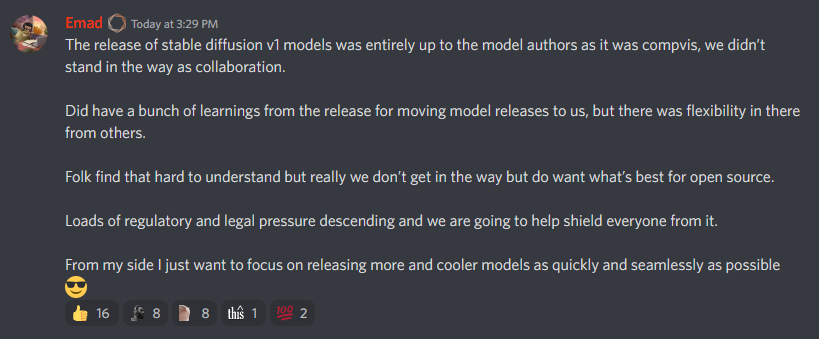

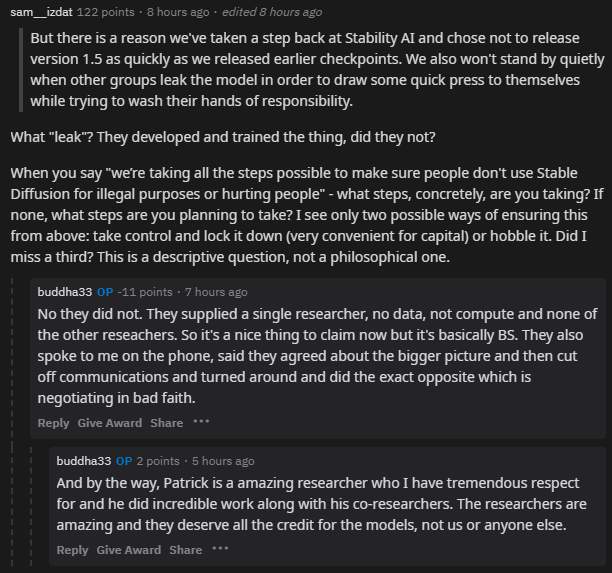

- Emad QnA Summary

- Image animation

- Motion Diffusion available (text to a video of human motion)

- Text to video available for everyone

- VR SD in the works

- Emad's statement on censoring SAI's next model: https://desuarchive.org/g/thread/89182040#89182584

- NSFW model is hard to train right now, meaning the next release will have:

- No more nudity

- Violence allowed

- Opt-out tool coming for artists who do not want their art to be trained

- NSFW model is hard to train right now, meaning the next release will have:

- New method for training styles that doesn't require as many computing resources

- Method for faster and low step count generations

10/12

- StabilityAI is only releasing SFW models from now on

10/11

- Training embeddings and hypernetworks are possible on --medvram now

- Easy to setup local booru by booru anon, might be pickled (NOW OPEN SOURCE, HIGHLY RECOMMENDED): https://github.com/demibit/stable-toolkit

- Planned to be open source in about a week

- Can now train hypernetworks, git pull and find it in the textual inversion tab

- Sample (bigrbear): https://files.catbox.moe/wbt30i.pt

- Anon (might be wrong): xformers now works on a lot of cards natively, try a clean install with --xformers

- Early Anime Video Generation, trained by dep

10/10

- New unpickler for new ckpts: https://rentry.org/safeunpickle2

HENTAI DIFFUSION MIGHT HAVE A VIRUSconfirmed to be safe by some kind people- github taken down because of nude preview images, hf files taken down because of complaints, windows defender false positive, some kind anons scanned the files with a pickle scanner and and it came back safe

- automatic's repo has security checks for pickles

- anon scanned with a "straced-container", safe

- NAI's euler A is now implemented in AUTOMATIC1111's build

- git pull to access

- New open-source (?) generation method revealed making good images in 4 steps

- Supposedly only 64x64, might be wrong

- Discovered that hypernetworks were meant to create anime using the default SD model

10/9

- Full NAI frontend + backend implementation: https://desuarchive.org/g/thread/89095460#89097704 (PICKLE??, careful might actually be pickled)

- 1:1 recreation, is NAI ran locally (offline NAI)

- 8GB VRAM required

- has danbooru tag suggestions, past generation history, and mobile support (from anon)

- Unlimited prompt tokens

- NAI 1:1 Recreation for Euler (ASUKA, https://desuarchive.org/g/thread/89097837#89098634 https://boards.4chan.org/h/thread/6887840#p6888020)

- detailed setup guide: https://github.com/AUTOMATIC1111/stable-diffusion-webui/discussions/2017

- xformers working for 30s series and up, anything below needs tinkering (https://rentry.org/25i6yn)

- Use --xformers to enable for 30s series, --force-enable-xformers for others

- Deepdanbooru integrated: Use --deepdanbooru as an argument to webui-user.bat and find the interrogation change in img2img

- CLIP layer thing integrated, check settings after update

- v2.pt working

- VAE working

- Full models working

Prompting

Google Docs with a prompt list/ranking/general info for waifu creation:

https://docs.google.com/document/d/1Vw-OCUKNJHKZi7chUtjpDEIus112XBVSYHIATKi1q7s/edit?usp=sharing

Anon's prompt collection: https://mega.nz/folder/VHwF1Yga#sJhxeTuPKODgpN5h1ALTQg

Tag effects on img: https://pastebin.com/GurXf9a4

- Anon says that "8k, 4k, (highres:1.1), best quality, (masterpiece:1.3)" leads to nice details

Japanese prompt collection: http://yaraon-blog.com/archives/225884

GREAT CHINESE TOME OF PROMPTING KNOWLEDGE AND WISDOM 101 GUIDE: https://docs.qq.com/doc/DWHl3am5Zb05QbGVs

- Site: https://aiguidebook.top/

- Backup: https://www105.zippyshare.com/v/lUYn1pXB/file.html

- translated + download: https://mega.nz/folder/MssgiRoT#enJklumlGk1KDEY_2o-ViA

- another backup? https://note.com/sa1p/n/ne71c846326ac

GREAT CHINESE SCROLLS OF PROMPTING ON 1.5: HEIGHTENED LEVELS OF KNOWLEDGE AND WISDOM 101: https://docs.qq.com/doc/DWGh4QnZBVlJYRkly

GREAT CHINESE ENCYCLOPEDIA OF PROMPTING ON GENERAL KNOWLEDGE: SPOOKY EDITION: https://docs.qq.com/doc/DWEpNdERNbnBRZWNL

GREAT JAPANESE TOME OF MASTERMINDING ANIME PROMPTS AND IMAGINATIVE AI MACHINATIONS 101 GUIDE https://p1atdev.notion.site/021f27001f37435aacf3c84f2bc093b5?p=f9d8c61c4ed8471a9ca0d701d80f9e28

- author: https://twitter.com/p1atdev_art/

Japenese wiki: https://seesaawiki.jp/nai_ch/d/

Database of prompts: https://publicprompts.art/

Krea AI prompt database: https://github.com/krea-ai/open-prompts

Prompt search: https://www.ptsearch.info/home/

Another search: http://novelai.io/

4chan prompt search: https://desuarchive.org/g/search/text/masterpiece%20high%20quality/

Japanese prompt generator: https://magic-generator.herokuapp.com/

Build your prompt (chinese): https://tags.novelai.dev/

NAI Prompts: https://seesaawiki.jp/nai_ch/d/%c8%c7%b8%a2%a5%ad%a5%e3%a5%e9%ba%c6%b8%bd/%a5%a2%a5%cb%a5%e1%b7%cf

Japanese wiki: https://seesaawiki.jp/nai_ch/

Korean wiki: https://arca.live/b/aiart/60392904

Korean wiki 2: https://arca.live/b/aiart/60466181

NAI to webui translator (not 100% accurate): https://seesaawiki.jp/nai_ch/d/%a5%d7%a5%ed%a5%f3%a5%d7%a5%c8%ca%d1%b4%b9

Tip Dump: https://rentry.org/robs-novel-ai-tips

Tips: https://github.com/TravelingRobot/NAI_Community_Research/wiki/NAI-Diffusion:-Various-Tips-&-Tricks

Info dump of tips: https://rentry.org/Learnings

Outdated guide: https://rentry.co/8vaaa

Tip for more photorealism: https://www.reddit.com/r/StableDiffusion/comments/yhn6xx/comment/iuf1uxl/

- TLDR: add noise to your img before img2img

SD 1.4 vs 1.5: https://postimg.cc/gallery/mhvWsnx

Model merge comparisons: https://files.catbox.moe/rcxqsi.png

Deep Danbooru: https://github.com/KichangKim/DeepDanbooru

Demo: https://huggingface.co/spaces/hysts/DeepDanbooru

Embedding tester: https://huggingface.co/spaces/sd-concepts-library/stable-diffusion-conceptualizer

Collection of Aesthetic Gradients: https://github.com/vicgalle/stable-diffusion-aesthetic-gradients/tree/main/aesthetic_embeddings

Euler vs. Euler A: https://github.com/AUTOMATIC1111/stable-diffusion-webui/discussions/2017#discussioncomment-4021588

- Euler: https://cdn.discordapp.com/attachments/1036718343140409354/1036719238607540296/euler.gif

- Euler A: https://cdn.discordapp.com/attachments/1036718343140409354/1036719239018590249/euler_a.gif

Seed hunting:

- By nai speedrun asuka imgur anon:

>made something that might help the highres seed/prompt hunters out there. this mimics the "0x0" firstpass calculation and suggests lowres dimensions based on target higheres size. it also shows data about firstpass cropping as well. it's a single file so you can download and use offline. picrel.

>https://preyx.github.io/sd-scale-calc/

>view code and download from

>https://files.catbox.moe/8ml5et.html

>for example you can run "firstpass" lowres batches for seed/prompt hunting, then use them in firstpass size to preserve composition when making highres.

Script for tagging (like in NAI) in AUTOMATIC's webui: https://github.com/DominikDoom/a1111-sd-webui-tagcomplete

Danbooru Tag Exporter: https://sleazyfork.org/en/scripts/452976-danbooru-tags-select-to-export

Another: https://sleazyfork.org/en/scripts/453380-danbooru-tags-select-to-export-edited

Tags (latest vers): https://sleazyfork.org/en/scripts/453304-get-booru-tags-edited

Basic gelbooru scraper: https://pastebin.com/0yB9s338

UMI AI:

- free

- SFW and NSFW

- Goal: ultimate char-gen

- Tutorial: https://www.patreon.com/posts/umi-ai-official-73544634

- Why you should use it: https://www.patreon.com/posts/umi-ai-ultimate-73560593

- Examples:

- Straddling Sluts random prompt:

https://i.imgur.com/eDpRdjj.png

https://i.imgur.com/1mZ0u6q.png

https://i.imgur.com/cOjwAMm.png - Cocksucking Cunts prompt.

https://i.imgur.com/GdVCZuV.png

https://i.imgur.com/i5WTTB5.png

https://i.imgur.com/xj3mp8V.png - Bedded Bitches prompt.

https://i.imgur.com/urwxn6S.png

https://i.imgur.com/5gfC1oP.png - Oneshot H-Manga prompt.

https://i.imgur.com/oBec2uO.jpeg

https://i.imgur.com/UiWYTgr.jpeg

https://i.imgur.com/GuhU0Kz.jpeg

- Straddling Sluts random prompt:

- Discord: https://discord.gg/9K7j7DTfG2

- Author is looking for help filling out and improving wildcards

- Ex: https://cdn.discordapp.com/attachments/1032201089929453578/1034546970179674122/Popular_Female_Characters.txt

- Author: Klokinator#0278

- Looking for wildcards with traits and tags of characters

- Planned updates

- Code will get cleaned up

- the 'species' are rudimentary rn but stuff like 'superior slimegirls' are the direction I want to head with species moving forward

- the genders are still unigender, but I'll be separating m/f very soon

- and finally, I just need to add shitloads more content and scenarios

- Code: https://github.com/Klokinator/UnivAICharGen/

Random Prompts: https://rentry.org/randomprompts

Python script of generating random NSFW prompts: https://rentry.org/nsfw-random-prompt-gen

Prompt randomizer: https://github.com/adieyal/sd-dynamic-prompting

Prompt generator: https://github.com/h-a-te/prompt_generator

- apparently UMI uses these?

http://dalle2-prompt-generator.s3-website-us-west-2.amazonaws.com/

https://randomwordgenerator.com/

funny prompt gen that surprisingly works: https://www.grc.com/passwords.htm

Unprompted extension released: https://github.com/ThereforeGames/unprompted

- Wildcards on steroids

- Powerful scripting language

- Can create templates out of booru tags

- Can make shortcodes

- "You can pull text from files, set up your own variables, process text through conditional functions, and so much more "

Ideas for when you have none: https://pentoprint.org/first-line-generator/

PaintHua.com - New GUI focusing on Inpainting and Outpainting

I didn't check the safety of these plugins, but they're open source, so you can check them yourself

Photoshop/Krita plugin (free): https://internationaltd.github.io/defuser/ (kinda new and currently only 2 stars on github)

Photoshop: https://github.com/Invary/IvyPhotoshopDiffusion

Photoshop plugin (paid, not open source): https://www.flyingdog.de/sd/

Krita plugins (free):

- https://github.com/sddebz/stable-diffusion-krita-plugin (listed in the OP, outdated? dead?)

- https://github.com/Interpause/auto-sd-krita (a fork from above, more improvement)

- https://www.flyingdog.de/sd/en/ (https://github.com/imperator-maximus/stable-diffusion-krita)

GIMP:

https://github.com/blueturtleai/gimp-stable-diffusion

Blender:

https://github.com/carson-katri/dream-textures

https://github.com/benrugg/AI-Render

Script collection: https://github.com/AUTOMATIC1111/stable-diffusion-webui/wiki/Custom-Scripts

Prompt matrix tutorial: https://gigazine.net/gsc_news/en/20220909-automatic1111-stable-diffusion-webui-prompt-matrix/

Animation Script: https://github.com/amotile/stable-diffusion-studio

Animation script 2: https://github.com/Animator-Anon/Animator

Video Script: https://github.com/memes-forever/Stable-diffusion-webui-video

Masking Script: https://github.com/dfaker/stable-diffusion-webui-cv2-external-masking-script

XYZ Grid Script: https://github.com/xrpgame/xyz_plot_script

Vector Graphics: https://github.com/GeorgLegato/Txt2Vectorgraphics/blob/main/txt2vectorgfx.py

Txt2mask: https://github.com/ThereforeGames/txt2mask

Prompt changing scripts:

- https://github.com/yownas/seed_travel

- https://github.com/feffy380/prompt-morph

- https://github.com/EugeoSynthesisThirtyTwo/prompt-interpolation-script-for-sd-webui

- https://github.com/some9000/StylePile

Interpolation script (img2img + txt2img mix): https://github.com/DiceOwl/StableDiffusionStuff

img2tiles script: https://github.com/arcanite24/img2tiles

Script for outpainting: https://github.com/TKoestlerx/sdexperiments

Img2img animation script: https://github.com/Animator-Anon/Animator/blob/main/animation_v6.py

- Can use in txt2img mode and combine with https://film-net.github.io/ for content aware interpolation

Giffusion tutorial:

Img2img megalist + implementations: https://github.com/AUTOMATIC1111/stable-diffusion-webui/discussions/2940

Runway inpaint model: https://huggingface.co/runwayml/stable-diffusion-inpainting

Inpainting Tips: https://www.pixiv.net/en/artworks/102083584

Rentry version: https://rentry.org/inpainting-guide-SD

Extensions:

Artist inspiration: https://github.com/yfszzx/stable-diffusion-webui-inspiration

- https://huggingface.co/datasets/yfszzx/inspiration

- delete the 0 bytes folders from their dataset zip or you might get an error extracting it

History: https://github.com/yfszzx/stable-diffusion-webui-images-browser

Collection + Info: https://github.com/AUTOMATIC1111/stable-diffusion-webui/wiki/Extensions

Deforum (video animation): https://github.com/deforum-art/deforum-for-automatic1111-webui

- Math: https://docs.google.com/document/d/1pfW1PwbDIuW0cv-dnuyYj1UzPqe23BlSLTJsqazffXM/edit

- Blender camera animations to deforum: https://github.com/micwalk/blender-export-diffusion

- Tutorial: https://www.youtube.com/watch?v=lztn6qLc9UE

Aesthetic Gradients: https://github.com/AUTOMATIC1111/stable-diffusion-webui-aesthetic-gradients

Aesthetic Scorer: https://github.com/tsngo/stable-diffusion-webui-aesthetic-image-scorer

Autocomplete Tags: https://github.com/DominikDoom/a1111-sd-webui-tagcomplete

Prompt Randomizer: https://github.com/adieyal/sd-dynamic-prompting

Wildcards: https://github.com/AUTOMATIC1111/stable-diffusion-webui-wildcards/

Clip interrogator: https://colab.research.google.com/github/pharmapsychotic/clip-interrogator/blob/main/clip_interrogator.ipynb

2: https://github.com/pharmapsychotic/clip-interrogator

Inpaint guide: https://archived.moe/h/thread/6930399/#6930453

Anon:

By request, a very quick inpainting guide:The key to good inpainting is understanding how "Inpaint at full resolution" actually works. The linked guides are obsolete and old at this. So I will tell you.

Inpaint at full resolution first determines the minimum rectangular box that fits all your mask. Then, it resizes the base image within that box into whatever your setting is for resolution. Note that when inpainting at full resolution, the resolution sliders determine THIS INPAINTING SPACE. In other words, you can inpaint for a 2048 by 2048 pixel image while having your sliders set for 256 by 256 pixels. There are some bugs with inpainting at full resolution with height > width https://pythontechworld.com/issue/automatic1111/stable-diffusion-webui/2524 so my recommendation is to just set it to 512 by 512 always.

Next, whatever is in your base image that would fit into the bounding box is rescaled and put into the inpainting space whose size is determined by your height and width sliders.

The padding for full resolution inpainting option ADDS ADDITIONAL PIXELS FROM THE BASE IMAGE TO YOUR BOUNDING BOX. It is extremely important to set this correctly. Essentially, it adds surrounding context from BEYOND your bounding box to the inpainting space. It MUST be set to a nonzero value if you want to match anything not interior to your mask. Set it very high if you want high context. The total input to the inpainting space is your window.

Next, what happens is essentially an img2img transformation on your window: which is the scaled image taken from the mask + original image bounding box. Set your prompts accordingly! Close-up is a very valuable tag to use in inpainting. Don't include prompts that are only relevant outside your window. DO include prompts that can determine composition within your window EVEN IF THEY AREN'T IN YOUR INPAINTING MASK.

Krita guide by anon:

- Get

https://krita.org/en/

https://github.com/Interpause/auto-sd-krita/wiki/Quick-Switch-Using-Existing-AUTOMATIC1111-Install

https://github.com/Interpause/auto-sd-krita/wiki/Install-Guide#plugin-installation - then you can run prompts in th app or pull one in then to inpaint like a boss, you add a new layer

https://files.catbox.moe/xy6z32.png - then use a white brush to brush the bits you want to change

https://files.catbox.moe/esdqk7.png - Turn off the layer off by hitting the eye icon but leave it selected

https://files.catbox.moe/wzaiw9.png - if you set everythign up right you have this section

https://files.catbox.moe/n43yrh.png - type what your after hit inpaint

Positive:

Biggest tip: just write what you want. the AI will generally understand and create it

- NAI's default (generally good) positive prompts to add at the beginning of all prompts: masterpiece, best quality

- can swap best for highest, high, etc.

- Group the things that you want that are similar together (e.g. things relating to body type, things relating to clothing, etc.), and put these groups in order of most important to least important

- Anon's order:

the picture's quality

the picture's subject

their physical appearance

their emotion

their clothing

their pose

the picture's setting

- Anon's order:

- "Anime screencap" creates scenes from an anime

- from anon: to use character (franchise/series/show/etc.), you have to format it as character \(franchise\)

- the tokenizer struggles to parse underscores, ymmv

- img2img -> prompt gets you more consistency

Negative:

- NAI's default (remove "nsfw" if you want nsfw outputs): nsfw, lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry

Tags: https://danbooru.donmai.us/tags

Tag Groups: https://danbooru.donmai.us/wiki_pages/tag_groups

Most Popular Tags: https://danbooru.donmai.us/tags?commit=Search&search%5Bhide_empty%5D=yes&search%5Border%5D=count

Faces and heads:

Expressions:

Camera Angles:

Hair Styles:

Hands

- Hands: writing "in the style of Serpieri" increased hand quality in SD v1.4

Colors:

Posture

Posing

- https://app.posemy.art/

- https://figurosity.com/figure-drawing-poses

- https://terawell.net/index.php

- https://manikin.app/

- https://app.justsketch.me/

- https://webapp.magicposer.com/

- Daz3d

- https://www.artstation.com/marketplace/p/VOAyv/stable-diffusion-3d-posable-manekin-doll?utm_source=artstation&utm_medium=referral&utm_campaign=homepage&utm_term=marketplace

-

faces and heads: http://www.relativitybook.com/CoolStuff/facebank.html

download FaceGen Modeller demo (or """purchase"" full version >/ptg/. the demo is still full featured with a shoopably manageable watermark):

https://facegen.com/modeller_demo.htm

install it, load the .fg files from the first link into the app, or make your own, the app does this easily with 1 or 2 photos.

fuck around with the expression/camera angle/lighthing.

printscreen or use the app's built-in render if you want custom resolution (File > Image > Custom > Save).

use it as a base for img2img outpaint/inpaint.

Locations

Clothes

VAE:

- SD 1.4 Anime styled: https://huggingface.co/hakurei/waifu-diffusion-v1-4/blob/main/vae/kl-f8-anime.ckpt

Sex pose

https://litter.catbox.moe/las83s.txt

Booru tag scraping:

- https://sleazyfork.org/en/scripts/451098-get-booru-tags

- script to run in browser, hover over pic in Danbooru and Gelbooru

- https://rentry.org/owmmt

- another script

- https://pastecode.io/s/jexs5p9c

- another script, maybe pickle

- press tilde on dan, gel, e621

- https://textedit.tools/

- if you want an online alternative

- https://github.com/onusai/grab-booru-tags

- works with e621, dev will try to get it to work with rule34.xxx

- https://pastecode.io/s/jexs5p9c

- https://pastecode.io/s/61owr7mz

- Press ] on the page you want the tags from

- Another script: https://pastecode.io/s/q6fpoa8k

- Another: https://pastecode.io/s/t7qg2z67

- Github for scraper: https://github.com/onusai/grab-booru-tags

Wildcards:

- https://desuarchive.org/g/thread/89006003#89007479

- https://rentry.org/sdWildcardLists

- Guide (ish): https://is2.4chan.org/h/1665343016289442.png

- A few wildcards: https://cdn.lewd.host/EtbKpD8C.zip

- https://github.com/Lopyter/stable-soup-prompts/tree/main/wildcards

- https://github.com/Lopyter/sd-artists-wildcards

- Allows you to split up the artists.csv from Automatic by category

- Another wildcard script: https://raw.githubusercontent.com/adieyal/sd-dynamic-prompting/main/dynamic_prompting.py

- wildcardNames.txt generation script: https://files.catbox.moe/c1c4rx.py

- Another script: https://files.catbox.moe/hvly0p.rar

- Script: https://gist.github.com/h-a-te/30f4a51afff2564b0cfbdf1e490e9187

- UMI AI: https://www.patreon.com/posts/umi-ai-official-73544634

- Wildcard dump:

- faces https://rentry.org/pu8z5

- focus https://rentry.org/rc3dp

- poses https://rentry.org/hkuuk

- times https://rentry.org/izc4u

- views https://rentry.org/pv72o

- Clothing: https://pastebin.com/EyghiB2F

- Another dump: https://github.com/jtkelm2/stable-diffusion-webui-1/tree/master/scripts/wildcards

- Big NAI Wildcard List: https://rentry.org/NAIwildcards

- 316 colors list: https://pastebin.com/s4tqKB8r

- 82 colors list: https://pastebin.com/kiSEViGA

- Backgrounds: https://pastebin.com/FCybuqYW

- More clothing: https://pastebin.com/DrkG1MRw

- Dump: https://www.dropbox.com/s/oa451lozzgo7sbl/wildcards.zip?dl=1

- 483 txt files, huge dump (for Danbooru trained models): https://files.catbox.moe/ipqljx.zip

- old 329 version: https://files.catbox.moe/qy6vaf.zip

- old 314 version: https://files.catbox.moe/11s1tn.zip

- Styles: https://pastebin.com/71HTfsML

- Word list (small): https://cdn.lewd.host/EtbKpD8C.zip

- Emotions/expressions: https://pastebin.com/VVnH2b83

- Clothing: https://pastebin.com/cXxN1fJw

- More clothing: https://files.catbox.moe/88s7bf.zip

- Cum: https://rentry.org/hoom5

- Dump: https://www.mediafire.com/file/iceamfawqhn5kvu/wildcards.zip/file

- Locations: https://pastebin.com/R6ugwd2m

- Clothing/outfits: https://pastebin.com/Xhhnyfvj

- Locations: https://pastebin.com/uyDJMnvC

- Clothes: https://pastebin.com/HaL3rW3j

- Color (has nouns): https://pastebin.com/GTAaLLnm

- Dump: https://files.catbox.moe/qyybik.zip

- Artists: https://pastebin.com/1HpNRRJU

- Animals: https://pastebin.com/aM4PJ2YY

- Food: https://pastebin.com/taFkYwt9

- Characters: https://files.catbox.moe/xe9qj7.txt

- Backgrounds: https://pastebin.com/gVue2q8g

- WIP random h-manga scene generator: https://files.catbox.moe/ukah7u.jpg

- Collection from https://rentry.org/NAIwildcards: https://files.catbox.moe/s7expb.7z

- Outfits: https://files.catbox.moe/y75qda.txt

- Collection: https://cdn.lewd.host/4Ql5bhQD.7z

- Settings + Minerals: https://pastebin.com/9iznuYvQ

- Hairstyles: https://pastebin.com/X39Kzxh7

- Hairstyles 2: https://pastebin.com/bRWu1Xvv

- subject filewords: https://pastebin.com/XRFhwXj8

- subject filewords but less emphasis on filewords: https://pastebin.com/LxZGkzj1

- subject filewords v3: https://pastebin.com/hL4nzEDW

- Danbooru Poses: https://pastebin.com/RgerA8Ry

- Character training text template: https://files.catbox.moe/wbat5x.txt

- Outfits: https://pastebin.com/Z9aHVpEy

- Danbooru tag group wildcard dump organized into folders: https://files.catbox.moe/hz5mom.zip

- by uploader anon: "I recommend using Dynamic Prompting rather than the normal Wildcards extension. It does everything the Wildcards extension does and then some, * being a thing is especially great and so is |"

- Poses: https://rentry.org/m9dz6

Wildcard extension: https://github.com/AUTOMATIC1111/stable-diffusion-webui-wildcards/

Some artists (may or may not work with NAI):

- SD 1.5 artists (might lag your pc): https://docs.google.com/spreadsheets/d/1SRqJ7F_6yHVSOeCi3U82aA448TqEGrUlRrLLZ51abLg/htmlview#

- pre-modern art: https://www.artrenewal.org/Museum/Search#/

- SD 1.4 artists: https://rentry.org/artists_sd-v1-4

- Link list: https://pastebin.com/HD7D6pnh

- Artist comparison grids: https://files.catbox.moe/y6bff0.rar

- Artist Comparison: https://reddit.com/r/NovelAi/comments/y879x1/i_made_an_experiment_with_different_artists_here/

- Site: https://sdartists.app/

- Comparison: https://imgur.com/a/hTEUmd9

- Comparison: https://proximacentaurib.notion.site/e28a4f8d97724f14a784a538b8589e7d?v=ab624266c6a44413b42a6c57a41d828c

- Comparison: https://imgur.com/a/ADPHh9q

- List: https://mpost.io/midjourney-and-dall-e-artist-styles-dump-with-examples-130-famous-ai-painting-techniques/

- List: https://arthive.com/artists

- Extension: https://github.com/yfszzx/stable-diffusion-webui-inspiration

- Huge comparison of artists (3gb, 90x90 different artist combinations on untampered WD v1.3.)

- Huge tested list: https://proximacentaurib.notion.site/e28a4f8d97724f14a784a538b8589e7d?v=42948fd8f45c4d47a0edfc4b78937474

- artists and themes: https://dict.latentspace.observer/

- SD 1.5 artist study: https://docs.google.com/spreadsheets/d/1SRqJ7F_6yHVSOeCi3U82aA448TqEGrUlRrLLZ51abLg/edit#gid=2005893444

- Artist comparisons for NAI: https://www.reddit.com/r/NovelAi/comments/y879x1/i_made_an_experiment_with_different_artists_here/

- Artist rankings: https://www.urania.ai/top-sd-artists

Anon's list of comparisons:

- Stable Diffusion v1.5, Waifu Diffusion v1.3, Trinart it4

- Berry Mix, CLIP 2:

- Berry Mix, CLIP 1:

- Artist + Artist, WD v1.3 (incomplete):

https://mega.nz/file/ACtigCpD#f9zP9h1AU_0_4DPsBnvdhnUYdQmIJMb4pyc6PJ4J-FU

Creating fake animes:

1:1 NAI/Novel AI Cheatsheet:

-

1:1 NAI cheatsheet by anon:

- Use unpruned/full model

- Load with ema weights (use .yaml config from base stable-diffusion, set use_ema to true) (minor)

- Doubles ram

-

Anon: "I copied the one from this path (which is what voldy defaults to if one isn't specified):

/repositories/stable-diffusion/configs/stable-diffusion/v1-inference.yamlAnd then on line 18 I set use_ema to True, and put that copy into the models folder with the correct name (name of model.yaml)."

- CLIP layer = 2

- Reset sigma noise / strength to the default value of 1 (no need to use 0.69 / 0.67)

- Set eta noise seed delta to 31337

- If using Euler a, eta noise seed delta = 31337

- If prompt has weights, manually adjust the weight accordingly (voldy uses 1.1, NAI uses 1.05)

- Use --no-half argument (minor)

Adding Variety

https://www.reddit.com/r/StableDiffusion/comments/yaziws/how_to_get_more_variety_in_your_ai_images_tutorial/

Photoshop Workflow Example

https://www.reddit.com/r/StableDiffusion/comments/wyduk1/show_rstablediffusion_integrating_sd_in_photoshop/

Tips to find a good img

anon: like i mentioned before, if using a non-ancestral sampler such as euler that trends to converge on the same image after a bunch of steps, you can roll seeds with 20 or less steps and very small resolution, then stop when you find a good seed and generate again with higher quality. saves scads of time when trying to make some really complicated 125 token prompt

so get a good image first with 512 rerolls

use the same seed but now with highres enabled

Anon's best output settings

[txt2img]

Positive: none

Negative: lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, 3 legs, 3 arms

Sampling Steps - 47/51

Euler a

Width - 512 normal/768 2 characters or better landscapes

Height - 512 normal/768 full body

CFG Scale: 12/12,5/18

[img2img]

Positive: complete image/none

Negative: lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, 3 legs, weird

Sampling Steps - 51

Euler a

Width - 512 normal/768 2 characters or better landscapes

Height - 512 normal/768 full body

CFG Scale: 18

Denoising strength: 0,5/0,68

Anon's workflow:

Artist list: https://rentry.org/anime_and_titties

Expressions and (STYLE): https://rentry.org/faces-faces-faces

Anon's order:

the picture's quality

the picture's subject

their physical appearance

their emotion

their clothing

their pose

the picture's setting

Lazy Prompting

go to *booru of your choice

pick an image you really like

click tags -> plain editor

copypaste to prompt

profit

Anon's Refinement Technique:

- generate a picture with the prompt that you want, be very precise. I personally generate pictures that are 512x512 initially.

- once you get a decent picture to come out of the generation, it will be used as the base "sketch" to feed to img2img.

- if you want to, increase the resolution but if you do so set the denoising to about .60

- once you have the resolution you want and everything, keep reprocessing the image with a denoising of about 0.2 - 0.3.

- if something on the image bothers you, work on it in an image editor, for example using a brush of the same colour of the what's adjacent to the detail you want to remove, or if you want to add something (like refining fingers), make sure to use the pencil with a contrasted colour (I generally use black).

- after editing, always reprocess the image with a denoising of about 0.3

- once the result satisfies you enough, use the "R-ESRGAN 4x+ Anime6B" upscaler if you want the image upscaled.

Models, Embeddings, and Hypernetworks

Models, embeddings, and hypernetworks can be pickled. Download at your own risk. Use https://rentry.org/safeunpickle2 to unpickle

Models (WIP)

Organized list: https://rentry.org/sdmodels

RISKY (MAJOR PICKLE WARNING) BUT A LOT OF MODELS HERE: https://bt4g.org/search/.ckpt/1

Collection: https://docs.google.com/spreadsheets/d/1fzsjGDlmbbPEnJWY9V2q5a99ySrK1Rj37sk7YJz8fCc/edit#gid=0

Organized list: https://cyberes.github.io/stable-diffusion-models/

Groups (add more later):

- Waifu Diffusion (https://huggingface.co/hakurei/waifu-diffusion-v1-4)

- Stability AI

- Zeipher (https://ai.zeipher.com/)

Upcoming models:

- Waifu Diffusion v1.4: https://huggingface.co/hakurei/waifu-diffusion-v1-4

Stable Diffusion v1.4

- Torrent: magnet:?xt=urn:btih:3A4A612D75ED088EA542ACAC52F9F45987488D1C&tr=udp://tracker.opentrackr.org:1337

- HuggingFace: https://huggingface.co/CompVis/stable-diffusion-v-1-4-original

- Login required

- Google Drive: https://drive.google.com/file/d/1wHFgl0ivCmIZv88hVZXkb8oy9qCuaBGA/view

Waifu Diffusion VAE (250k images)

- https://huggingface.co/hakurei/waifu-diffusion-v1-4/blob/main/vae/kl-f8-anime.ckpt

- https://twitter.com/haruu1367/status/1579286947519864833

- "finetuned the SD 1.4 vae on a bunch of anime-styled images"

- Supposedly improves eyes and fingers

- To load, rename .ckpt to .vae.pt

Waifu Diffusion v1.3

- https://huggingface.co/hakurei/waifu-diffusion-v1-3

- Click "Files and versions" to view all epochs

- 600,000 high-resolution Danbooru images, 10 Epochs

- Release Notes: https://gist.github.com/harubaru/f727cedacae336d1f7877c4bbe2196e1

Waifu Diffusion v1.2

Pruned Torrent:

Full EMA Torrent:

Trinart2

- https://huggingface.co/naclbit/trinart_stable_diffusion_v2/tree/main

- Uses dropouts, 10k more images than Trinart1, new tagging strategy, and trained for longer

Trinart1

- https://huggingface.co/naclbit/trinart_stable_diffusion/tree/main

- 3.5 epochs, 30k images

- Obsolete

gg1342_testrun1_pruned

- 280 NSFW nude solo women + 80 SFW fiction characters

Hentai Diffusion

- https://huggingface.co/Deltaadams/Hentai-Diffusion/tree/main

- Based on Waifu Diffusion 1.2, trainede on 150k images from rule34 and gelbooru, focused training on hands and poses

- Updated weekly

RD1412

- Pruned FP16

- Pruned FP32

RD1212

- Pruned FP16

- Pruned FP32

- Full EMA

Bare Feet / Full Body b4_t16_noadd

- Focused on bare feet and full body nude female images, good for genitalia and photorealistic feet

- Pruned FP16 v3

- Pruned FP32 v3

Lewd Diffusion

- 70k images from Danbooru, based on Waifu Diffusion 1.2

- Dataset: https://drive.google.com/drive/folders/1f_BYi88LLTZUzBHkUz8PDgw6l7M7swkd?usp=sharing

- Dataset stats: https://docs.google.com/spreadsheets/d/1BzNSXyT4fhiM64DwIJSCyAXuhRQ9fkxqcr-t1frIYkc/edit

- 2 epochs

- 1 epoch

- 0 epochs, 40k images

Yiffy

- During training explicit was misspelled as explict

- Tags:

- 18 epochs (210k images from e621):

- 15 epochs (210k images from e621):

- https://sexy.canine.wf/file/yiffy-ckpt/yiffy-e15.ckpt

- https://pixeldrain.com/u/qkRKKpqg

- https://iwiftp.yerf.org/Furry/Software/Stable%20Diffusion%20Furry%20Finetune%20Models/Finetune%20models/yiffy-e15.ckpt

-

13 epochs

- first 4 epochs were trained on ~70k images with lama infilling (the cause of all of our headaches, because the network found a pattern in the edges and started replicating it everywhere)

- next 6 epochs were trained on ~120k images with random cropping and a lower LR

- last epochs were done on a different dataset, not bigger than 150k

- https://iwiftp.yerf.org/Furry/Software/Stable%20Diffusion%20Furry%20Finetune%20Models/Finetune%20models/yiffy-e13.ckpt

Furry

- 300k images from e621

- Tags:

- Epoch 4

- https://pixeldrain.com/u/dtYiYN7g

- https://iwiftp.yerf.org/Furry/Software/Stable%20Diffusion%20Furry%20Finetune%20Models/Finetune%20models/furry_epoch4.ckpt

- Epoch 1

- https://iwiftp.yerf.org/Furry/Software/Stable%20Diffusion%20Furry%20Finetune%20Models/Finetune%20models/furry_epoch1.ckpt

- https://iwiftp.yerf.org/Furry/Software/Stable%20Diffusion%20Furry%20Finetune%20Models/Finetune%20models/furry_epoch1.ckpt

- Epoch 0

Zack3D_Kinky-v1

- Over 100k images, filtered aesthetics, NSFW, trained on SD v1.4, good for furry, specializes in kinks like transformation, latex, tentacles, goo, ferals, bondage, etc.

- Uses e621 tags with underscores

Anal Vore AVHumanFurryPony7

- 7 epochs, continued from Zack3D_Kinky-v1

- Tags

Gape Model

Pony Models

Purplesmart.AI's Pony v1:

https://mega.nz/file/ZT1xEKgC#Xxir5udMmU_mKaRZAbBkF247Yk7DqCr01V0pDzSlYI0

CookieSD's sfw/nsfw model:

https://drive.google.com/drive/folders/14JyQE36wYABH-0TSV_HBEsBJ3r8ZITrS

Pussy Diffusion 10/14 (only use for inpainting)

- trained on sankaku+e621 on gaping_anus,gaping_pussy,large_penetration,fisting, prolapse

- https://gofile.io/d/Viv9CJ

- https://bbs.kfpromax.com/read.php?tid=963487

- 链接: https://pan.baidu.com/s/1sC69cgSTWGuXCY79K5C_DA 提取码: 7qdp

a merged model of 80 NAI 20 TRIN120k

- https://mega nz/file/jB5lwa6J#ciSArZnJQLszvhatiMK2NTKFNjKYUhHJlXt9At3WRss

Berrymix Recipe

Rentry: https://rentry.org/berrymix

Make sure you have all the models needed, Novel Ai, Stable Diffusion 1.4, Zeipher F111, and r34_e4. All but Novel Ai can be downloaded from HERE

Open the Checkpoint Merger tab in the web ui

Set the Primary Model (A) to Novel Ai

Set the Secondary Model (B) to Zeipher F111

Set the Tertiary Model (C) to Stable Diffusion 1.4

Enter in a name that you will recognize

Set the Multiplier (M) slider all the way to the right, at "1"

Select "Add Difference"

Click "Run" and wait for the process to complete

Now set the Primary Model (A) to the new checkpoint you just made (Close the cmd and restart the webui, then refresh the web page if you have issues with the new checkpoint not being an option in the drop down)

Set the Secondary Model (B) to r34_e4

Ignore Tertiary Model (C) (I've tested it, it wont change anything)

Enter in the name of the final mix, something like "Berry's Mix" ;)

Set Multiplier (M) to "0.25"

Select "Weighted Sum"

Click "Run" and wait for the process to complete

Restart the Web Ui and reload the page just to be safe

At the top left of the web page click the "Stable Diffusion Checkpoint" drop down and select the Berry's Mix.ckpt (or whatever you named it) it should have the hash "[c7d3154b]"

- Berry + Novelai VAE (Might be malicious): https://mega.nz/folder/8HUikarD#epAOm3l2hltC_s_oiSC9dg

- Another berry + vae: https://anonfiles.com/Rdq7j7F0ye/Berry_zip

- Dump of ckpt merges, might be pickled, uploader anon says to download at your own risk, could also be a fed bait or something: https://droptext.cc/bfxwb

- NAI + Tri + Tri:

Fruit Salad Mix (might not be worth it to make)

Fruit Salad Guide

Recipe for the "Fruit Salad" checkpoint:

Make sure you have all the models needed, Novel Ai, Stable Diffusion 1.5, Trinart-11500, Zeipher F111, r34_e4, Gape_60 and Yiffy.

Open the Checkpoint Merger tab in the web ui

Set the Primary Model (A) to Novel Ai

Set the Secondary Model (B) to Yiffy e18

Set the Tertiary Model (C) to Stable Diffusion 1.4

Enter in a name that you will recognize

Set the Multiplier (M) slider to the left, at "0.1698765"

Select "Add Difference"

Click "Run" and wait for the process to complete

Now set the Primary Model (A) to the new checkpoint you just made (Close the cmd and restart the webui, then refresh the web page if you have issues with the new checkpoint not being an option in the drop down)

Set the Secondary Model (B) to r34_e4

Set the Tertiary Model (C) to Zeipher F111 (I've tested it, it changes EVERYTHING)

Set Multiplier (M) to "0.56565656"

Select "Weighted Sum"

Click "Run" and wait for the process to complete

Restart the Web Ui and reload the page just to be safe

Now download a previous version of WebUI, which still contains the "Inverse Sigmoid" option for checkpoint merger.

Now set the Primary Model (A) to the new checkpoint you just made

Set the Secondary Model (B) to Trinart-11500

Set Multiplier (M) to "0.768932"

Select "Inverse Sigmoid"(this is kind of like Sigmoid but inverted)

Click "Run" and wait for the process to complete

Restart the Web Ui and reload the page just to be safe

Now set the Primary Model (A) to the new checkpoint you just made.

Set the Secondary Model (B) to SD 1.5

Set the Tertiary Model (C) to Gape_60

Set the name of the final mix to something you will remember, like "Fruit's Salad" ;)

Set Multiplier (M) to "1"

Select "Weighted Sum"

Click "Run" and wait for the process to complete

Restart the Web Ui and reload the page just to be safe

At the top left of the web page click the "Stable Diffusion Checkpoint" drop down and select the Fruit's Salad.ckpt (or whatever you named it)

- Older files you need uploaded by anon (which means it might be pickled): https://codeload.github.com/AUTOMATIC1111/stable-diffusion-webui/zip/f7c787eb7c295c27439f4fbdf78c26b8389560be

- NAI + hypernetworks (uploaded by UMI AI dev, I didn't audit the files myself for changes/pickles):

- DL 1: https://anonfiles.com/U5Acl7F0y2/Novel_AI_Hypernetworks_zip

- DL 2: https://pixeldrain.com/u/FMJ4TQbM

- https://cdn.discordapp.com/attachments/1034551880220672181/1036386279597822082/unknown.png

- https://cdn.discordapp.com/attachments/1034551880220672181/1036386279207731262/unknown.png

- https://cdn.discordapp.com/attachments/1034551880220672181/1036386278813474857/unknown.png

- Scarlett's Mix (good at cute witches): https://rentry.org/scarlett_mix

- Mega mixing guide (has a different berry mix): https://rentry.org/lftbl

Dreambooth Models:

Links:

- https://huggingface.co/waifu-research-department

- https://huggingface.co/jinofcoolnes

- For preview pics/descriptions:

- https://huggingface.co/nitrosocke

- Toolkit anon: https://huggingface.co/demibit/

- https://rentry.org/sdmodels

- Big collection: https://publicprompts.art/

- Big collection of sex models (Might be a large pickle, so be careful): https://rentry.org/kwai

- Collection: https://cyberes.github.io/stable-diffusion-dreambooth-library/

- Nami: https://mega.nz/file/VlQk0IzC#8MEhKER_IjoS8zj8POFDm3ZVLHddNG5woOcGdz4bNLc

- https://huggingface.co/IShallRiseAgain/StudioGhibli/tree/main

- Jinx: https://huggingface.co/jinofcoolnes/sksjinxmerge/tree/main

- Arcane Vi: https://huggingface.co/jinofcoolnes/VImodel/tree/main

- Lucy (Edgerunners): https://huggingface.co/jinofcoolnes/Lucymodel/tree/main

- Gundam (full ema, non pruned): https://huggingface.co/Gazoche/stable-diffusion-gundam

- Starsector Portraits: https://huggingface.co/Severian-Void/Starsector-Portraits

- Evangelion style: https://huggingface.co/crumb/eva-fusion-v2

- Robo Diffusion: https://huggingface.co/nousr/robo-diffusion/tree/main/models

- Arcane Diffusion: https://huggingface.co/nitrosocke/Arcane-Diffusion

- Archer: https://huggingface.co/nitrosocke/archer-diffusion

- Wikihow style: https://huggingface.co/jvkape/WikiHowSDModel

- 60 Images. 2500 Steps. Embedding Aesthetics + 40 Image Embedding options

- Their patreon: https://www.patreon.com/user?u=81570187

- Lain girl: https://mega.nz/file/VK0U0ALD#YDfGgOu8rquuR5FbFxmzKD5hzxO1iF0YQafN0ipw-Ck

- Wikiart: https://huggingface.co/valhalla/sd-wikiart-v2/tree/main/unet

- diffusion_pytorch_model.bin, just rename to whatever.ckpt

- Megaman zero: https://huggingface.co/jinofcoolnes/Zeromodel/tree/main

- Cyberware: https://huggingface.co/Eppinette/Cyberware/tree/main

- taffy (keyword: champi): https://drive.google.com/file/d/1ZKBf63fV1Zm5_-a0bZzYsvwhnO16N6j6/view?usp=sharing

- Disney (3d?): https://huggingface.co/nitrosocke/modern-disney-diffusion/

- El Risitas (KEK guy): https://huggingface.co/Fictiverse/ElRisitas

- Cyberpunk Anime Diffusion: https://huggingface.co/DGSpitzer/Cyberpunk-Anime-Diffusion

- Kurzgesagt (called with "kurzgesagt! style"): https://drive.google.com/file/d/1-LRNSU-msR7W1HgjWf8g1UhgD_NfQjJ4/view?usp=sharing

- SHA-256: d47168677d75045ae1a3efb8ba911f87cfcde4fba38d5c601ef9e008ccc6086a

- Robodiffusion (good outputs for "meh" prompting): https://huggingface.co/nousr/robo-diffusion

- 2D Illustration style: https://huggingface.co/ogkalu/hollie-mengert-artstyle

- Rebecca (edgerunners, by booru anon, info is in link): https://huggingface.co/demibit/rebecca

- Kiwi (by booru anon): https://huggingface.co/demibit/kiwi

- Ranni (Elden Ring): https://huggingface.co/bitspirit3/SD-Ranni-dreambooth-finetune

- Cloud: https://huggingface.co/jinofcoolnes/cloud/tree/main

- Comics: https://huggingface.co/ogkalu/Comic-Diffusion

- Modern Disney style (modi, mo-di): https://huggingface.co/nitrosocke/mo-di-diffusion

- Silco: https://huggingface.co/jinofcoolnes/silcomodel/tree/main

- Lara: https://huggingface.co/jinofcoolnes/Oglaramodel/tree/main

- theofficialpit bimbo (26 pics for 2600 steps, Use "thepit bimbo" in prompt for more effect): https://mega.nz/file/wSdigRxJ#WrF8cw85SDebO8EK35gIjYIl7HYAz6WqOxcA-pWJ_X8

- DCAU (Batman_the_animated_series): https://huggingface.co/IShallRiseAgain/DCAU/blob/main/DCAUV1.ckpt

- https://www.reddit.com/r/StableDiffusion/comments/yf2qz0/initial_version_of_dcau_model_im_making/

- hand captioning 782 screencap, 44,000 steps, training set for the regularization images

- NSFW: https://megaupload.nz/N7m7S4E7yf/Magnum_Opus_alpha_22500_steps_mini_version_ckpt

- Hardcore: https://pixeldrain.com/u/Stk98vyH

- Trained on 3498 images and around 250K steps

- porn, sex acts of all sorts: anal sex, anilingus, ass, ass fingering, ball sucking, blowjob, cumshot, cunnilingus, dick, dildo, double penetration, exposed pussy, female masturbation, fingering, full nelson, handjob, large ass, large tits, lesbian kissing, massive ass, massive tits, o-face, sixty-nine, spread pussy, tentacle sex (try also oral/anal tentacle sex and tentacle dp), tit fucking, tit sucking, underboob, vaginal sex, long tongue, tits

- Example grid from training (single shot batch): https://cdn.discordapp.com/attachments/1010982959525929010/1035236689850941440/samples_gs-995960_e-000046_b000000.png

- Trained on 3498 images and around 250K steps

- disney 2d animation style: https://huggingface.co/nitrosocke/classic-anim-diffusion

- Kim Jung Gi: https://drive.google.com/drive/folders/1uL-oUUhuHL-g97ydqpDpHRC1m3HVcqBt

- Pyro's Blowjob Model: https://rentry.org/pyros-sd-model

Embeddings

If an embedding is >80mb, I mislabeled it and it's a hypernetwork

Use a download manager to download these. It saves a lot of time + good download managers will tell you if you have already downloaded one

- Text Tutorial: https://github.com/AUTOMATIC1111/stable-diffusion-webui/wiki/Textual-Inversion

- Make sure to use pictures of your subject in varied areas, it gives more for the AI to work with

- Tutorial 2: https://rentry.org/textard

- Another tutorial: https://imgur.com/a/kXOZeHj

- Test embeddings: https://huggingface.co/spaces/sd-concepts-library/stable-diffusion-conceptualizer

- Collection: https://huggingface.co/sd-concepts-library

- Collection 2: https://mega.nz/folder/fVhXRLCK#4vRO9xVuME0FGg3N56joMA

- Collection 3: https://cyberes.github.io/stable-diffusion-textual-inversion-models/

- Korean megacollection:

- https://arca.live/b/hypernetworks?category=%EA%B3%B5%EC%9C%A0

- (includes mega compilation of artists): https://arca.live/b/hypernetworks/60940948

- Original: https://arca.live/b/hypernetworks/60930993

- Large collection of stuff from korean megacollection: https://mega.nz/folder/sSACBAgC#kNiPVzRwnuzs8JClovS1Tw

- Large Vtuber collection dump (not sure if pickled, even linker anon said to be careful, but a big list anyway): https://rentry.org/EmbedList

- Waifu Diffusion collection: https://gitlab.com/cattoroboto/waifu-diffusion-embeds

Found on 4chan:

- Embeddings + Artists: https://rentry.org/anime_and_titties (https://mega.nz/folder/7k0R2arB#5_u6PYfdn-ZS7sRdoecD2A)

- Random embedding I found: https://ufile.io/c3s5xrel

- Embeddings: https://rentry.org/embeddings

- Anon's collection of embeddings: https://mega.nz/folder/7k0R2arB#5_u6PYfdn-ZS7sRdoecD2A

- Collection: https://gitgud.io/ZeroMun/stable-diffusion-tis/-/tree/master/embedding

- Collection: https://gitgud.io/sn33d/stable-diffusion-embeddings

- Collection from anon's "friend" (might be malicious): https://files.catbox.moe/ilej0r.7z

- Collection from anon: https://files.catbox.moe/22rncc.7z

- Collection: https://gitlab.com/rakurettocorp/stable-diffusion-embeddings/-/tree/main/

- Collection: https://gitlab.com/mwlp/sd

- Senri Gan: https://files.catbox.moe/8sqmeh.rar

- Collection: https://gitgud.io/viper1/stable-diffusion-embeddings

- Henreader embedding, all 311 imgs on gelbooru, trained on NAI: https://files.catbox.moe/gr3hu7.pt

- Kantoku (NAI, 12 vectors, WD 1.3): https://files.catbox.moe/j4acm4.pt

- Asanagi (NAI): https://files.catbox.moe/xks8j7.pt

- Asanagi trained on 135 images augmented to 502 for 150296 steps on NAI Anime Full Pruned with 16 vectors per token with init word as voluptuous

- training imgs: https://litter.catbox.moe/2flguc.7z

- DEAD LINK Asanagi (another one): https://litter.catbox.moe/g9nbpx.pt

- Imp midna (NAI, 80k steps): mega.nz/folder/QV9lERIY#Z9FXQIbtXXFX5SjGf1Ba1Q

- imp midna 2 (NAI_80K): mega.nz/file/1UkgWRrD#2-DMrwM0Ph3Ebg-M8Ceoam_YUWhlQWsyo1rcBtuKTcU

- inverted nipples: https://anonfiles.com/300areCby8/invertedNipples-13000_zip (reupload)

- Dead link: https://litter.catbox.moe/wh0tkl.pt

- Takeda Hiromitsu Embedding 130k steps: https://litter.catbox.moe/a2cpai.pt

- Takeda embedding at 120000 steps: https://filebin.net/caggim3ldjvu56vn

- Nenechi embedding: https://mega.nz/folder/E0lmSCrb#Eaf3wr4ZdhI2oettRW4jtQ

- Touhou Fumo embedding (57 epochs): https://birchlabs.co.uk/share/textual-inversion/fumo.cpu.pt

- Abigail from Great Pretender (24k steps): https://workupload.com/file/z6dQQC8hWzr

- Naoki Ikushima (40k steps): https://files.catbox.moe/u88qu5.pt

- Abmayo: https://files.catbox.moe/rzep6d.pt

- Gigachad: https://easyupload.io/nlha2m

- Kusada Souta (95k steps): https://files.catbox.moe/k78y65.pt

- Yohan1754: https://files.catbox.moe/3vkg2o.pt

- Repo for some: https://git.evulid.cc/wasted-raincoat/Textual-Inversion-Embeds/src/branch/master/simonstalenhag

- automatic's secret embedding list: https://gitlab.com/16777216c/stable-diffusion-embeddings

- Niro: https://take-me-to.space/WKRY9IE.pt

- Kaneko Kazuma (Kazuma Kaneko): https://litter.catbox.moe/6glsh1.pt

- Senran Kagura (850 CGs, deepdanbooru tags, 0.005 learning rate, 768x768, 3000 iterations): https://files.catbox.moe/jwiy8u.zip

- Abmono (14.7k): https://www.mediafire.com/file/id2uh4gkzvavsbc/abmono-14700.pt/file

- DEAD LINK Deadflow (190k, "bitchass"(?)): https://litter.catbox.moe/03lqr6.pt

- Aroma Sensei (86k, "aroma"): https://files.catbox.moe/wlylr6.pt

- Zun (75:25 weighted sum NAI full:WD): https://www.fluffyboys.moe/sd/zunstyle.pt

- Kurisu Mario (20k): https://files.catbox.moe/r7puqx.pt

- creator anon: "I suggest using him for the first 40% of steps so that the AI draws the body in his style, but it's up to you. Also, put speech_bubble in the negative prompt, since the training data had them"

- ATDAN (33k): https://files.catbox.moe/8qoag3.pt

- Valorant (25k): https://files.catbox.moe/n7i9lq.pt

- Takifumi (40k, 153 imgs): https://freeufopictures.com/ai/embeddings/takafumi/

- 40hara (228 imgs, 70k, 421 after processing): https://freeufopictures.com/ai/embeddings/40hara/

- Tsurai (160k, NAI): https://mega.nz/file/bBYjjRoY#88o-WcBXOidEwp-QperGzEr1qb8J2UFLHbAAY7bkg4I

- Wagashi (12k, shitass(?)), no associated pic or replies so might be pickled: https://litter.catbox.moe/ktch8r.pt

- jtveemo (150k): https://a.pomf.cat/kqeogh.pt

- Creator anon: "I didn't crop out any of the @jtveemo stuff so put twitter username in the negatives."

- Nahida (Genshin Impact): https://files.catbox.moe/nwqx5b.zip

- Arcane (SD 1.4): https://files.catbox.moe/z49k24.pt

- People say this triggered the pickle warning, so it might be pickled.

- Gothica: https://litter.catbox.moe/yzp91q.pt

- Mordred: https://a.pomf.cat/ytyrvk.pt

- 100k steps tenako (mugu77): https://www.mediafire.com/file/1afk5fm4f33uqoa/tenako-mugu77-100000.pt/file

- erere-26k (fuckass(?)): https://litter.catbox.moe/cxmll4.pt

- Great Mosu (44k): https://files.catbox.moe/6hca0u.pt

- no idea what this embedding is, apparently it's an artist?: https://files.catbox.moe/2733ce.pt

- Dohna Dohna, Rance remakes (305 images (all VN-style full-body standing character CGs). 12000 steps): https://files.catbox.moe/gv9col.pt

- trained only on dohna dohna's VN sprites

- Onono imoko

- Raita: https://files.catbox.moe/mhrvmk.pt

- Senri Gan: https://files.catbox.moe/8sqmeh.rar

- 2 hypernetworks and 5 TI

- Anon: "For the best results I think using hyper + TI is the way. I'm using TI-6000 and Hyper-8000. It was trained on CLIP 1 Vae off with those rates 5e-5:100, 5e-6:1500, 5e-7:10000, 5e-8:20000."

- om_(n2007): https://files.catbox.moe/gntkmf.zip

- Kenkou Cross: https://mega.nz/folder/ZYAx3ITR#pxjhWOEw0IF-hZjNA8SWoQ

- Baffu (~47500 steps): https://files.catbox.moe/l8hrip.pt

- Biased toward brown-haired OC girl (Hitoyo)

- Danganronpa: https://files.catbox.moe/3qh6jb.pt

- Hifumi Takimoto: https://files.catbox.moe/wiucep.png

- 18500 steps, prompt tag is takimoto_hifumi. Trained on NAI + Trinart2 80/20, but works fine using just NAI

- Power (WIP): https://files.catbox.moe/bzdnzw.7z

- shiki_(psychedelic_g2): https://files.catbox.moe/smeilx.rar

- Akari: https://files.catbox.moe/b7jdng.pt

- Embeddings using the old version of TI

- Takeda Hiromitsu reupload: https://www.mediafire.com/file/ljemvmmtz0dqy0y/takeda_hiromitsu.pt/file

- Takeda Hiromitsu (another reupload): https://a.pomf.cat/eabxqt.pt

- Pochi: https://files.catbox.moe/7vegvg.rar

- Author's notes: Smut version was trained on a lot of doujins and it looks more like her old style from the start of smut version of Ane doujin (compare to chapter 1 and you can see that it worked). 200k version is looking a bit more like her recent style but I can see it isn't going to work the way I hoped.

- By accident I started with 70 pics where half of them were doujins to give reference for smut. Complete data is 200 with again those same 35 doujins for smut. I realized that I used half smut instead of full set so I went back to around 40k steps and then gave it complete 200 picture set hoping it would course correct since non smut is more recent art style. Now it looks like it didn't course correct and will never do that. On the other hand recent iterations are less horny.

- Power (Chainsaw Man): https://files.catbox.moe/c1rf8w.pt

- ooyari:

- 70k (last training): https://litter.catbox.moe/gndvee.pt

- 20k (last stable loss trend): https://litter.catbox.moe/i7nh3x.pt

- 60k (lowest loss rate state in trending graph): https://litter.catbox.moe/8wot9a.pt

- Kunaboto (195 images. 16 vectors per token, default learning rate of 0.005): https://files.catbox.moe/uk964z.pt

- Erika (Shadowverse): https://files.catbox.moe/y9cgr0.pt

- Luna (Shadowverse): https://files.catbox.moe/zwq5jz.pt

- Fujisaka Lyric: https://files.catbox.moe/8j6ith.pt

- Hitoyo (maybe WIP?): https://files.catbox.moe/srg90p.pt

- Hitoyo (58k): https://files.catbox.moe/btjsfg.pt

- kunaboto v2 (Same dataset, just a different training rate of 0.005:25000,0.0005:75000,0.00005:-1, 70k): https://files.catbox.moe/v9j3bz.pt

- Hitoyo (another, final vers?) (100k steps, bonnie-esque): https://files.catbox.moe/l9j1f4.pt

- Fatamoru: https://litter.catbox.moe/pn9xep.pt

- Dead link: https://litter.catbox.moe/xd2ht9.pt

- Zip of Fatamoru, Morgana, and Kaneko Kazuma: https://litter.catbox.moe/9bf77l.zip

- Tekuho (NAI model, Clip Skip 2, VAE unloaded, Learning rate 0.002:2000, 0.0005:5000, 0.0001:9000): https://mega.nz/folder/VB5XyByY#HLvKyIJ6U5nMXx6i3M__VQ

- manually cropped about 150 images, making sure that all of them have a full body shot, a shot from torso and up, and if applicable a closeup on the face

- Images not from Danbooru

- Best results around 4000 steps

- Reine: https://litter.catbox.moe/saav38.zip

- Embed of a girl anon liked (2500 steps, keyword "jma"): https://files.catbox.moe/1qlhjf.pt

- Carpet Crawler: https://anonfiles.com/i3a2o0E5y0/carpetcrawlerv2-12500_pt

- Embedding trained on nai-final-pruned at 8 vectors up to 20k steps. Turned into ugly overtrained garbage over 125000 steps so this is the one I'm releasing. Not good for much other than eldritch abominations.

- https://www.deviantart.com/carpet-crawler/gallery

- recommend using it in combination with other horror artist embeddings for best results.

- nora higuma (Fuckass, 0.0038, 24k, 1000+ dataset, might be pickle): https://litter.catbox.moe/tkj61z.pt

- dead link: https://litter.catbox.moe/25n10h.pt