Building Your Own Proxy List: A Step-by-Step Guide

In today's internet-driven world, the ability to browse the web anonymously and reliably has become increasingly important. Whether you are scraping the web for data analysis or simply looking to protect your online privacy, a reliable proxy list is essential. A well-built proxy list not only improves your browsing experience but also helps hide your identity and bypass geographical restrictions.

This guide takes you through the process of building your own proxy list from scratch. We will explore various tools and techniques, including proxy scrapers and checkers, so that you have access to quality proxies suited to your needs. You will learn how to collect proxies for free, verify their speed and anonymity, and distinguish between proxy types such as HTTP, SOCKS4, and SOCKS5. By the end of this guide, you will have the knowledge needed to create a robust and dependable proxy list for your projects.

Understanding Proxies

Proxy servers act as intermediaries between a user's device and the web, letting users connect to websites and services through a different IP address. This offers several benefits, including improved security, access to geo-restricted content, and better privacy. By routing internet traffic through a proxy, users can hide their original IP address, which helps maintain anonymity during online activities.

There are several types of proxy servers, including HTTP, HTTPS, and SOCKS proxies. HTTP proxies are commonly used for web browsing and handle only HTTP traffic, while HTTPS proxies add a layer of security by encrypting the data in transit. SOCKS proxies, on the other hand, are more flexible and can handle many kinds of traffic, which makes them suitable for applications beyond web browsing, such as online games or peer-to-peer file sharing.
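In practice, a proxy's type is usually recorded as the scheme of a proxy URL. The sketch below shows one way to validate such URLs before adding them to a list. The function name `parse_proxy` and the set of accepted schemes are assumptions made for illustration, and the addresses come from reserved documentation ranges.

```python
from urllib.parse import urlsplit

# Schemes this sketch accepts; chosen to mirror the proxy types named above.
SUPPORTED_SCHEMES = {"http", "https", "socks4", "socks5"}


def parse_proxy(url):
    """Split a proxy URL such as 'socks5://203.0.113.7:1080' into parts.

    Returns a (scheme, host, port) tuple, or raises ValueError when the
    scheme is unsupported or the host or port is missing.
    """
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {scheme!r}")
    if parts.hostname is None or parts.port is None:
        raise ValueError(f"proxy URL needs a host and a port: {url!r}")
    return scheme, parts.hostname, parts.port
```

Storing the scheme alongside the address makes it straightforward to filter the list by protocol later.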

When deploying proxies, it is essential to understand the difference between private and public proxy servers. Private proxies are reserved for a single user and offer better performance and anonymity, while public proxies are shared among many users, which often leads to lower speeds and a greater chance of IP bans. Choosing the appropriate type of proxy depends on your needs, especially for tasks like web scraping or automation.

Setting Up Your Proxy Scraper

To set up your proxy scraper, the first step is to select a tool that fits your needs. Several choices are available, ranging from basic online tools to more sophisticated software that requires some technical expertise. When selecting a proxy scraper, consider factors such as speed, reliability, and the ability to filter proxies by type, such as HTTPS or SOCKS. Free proxy scrapers can be a decent starting point, but if you need faster performance and higher quality, investing in a premium tool could yield better results.

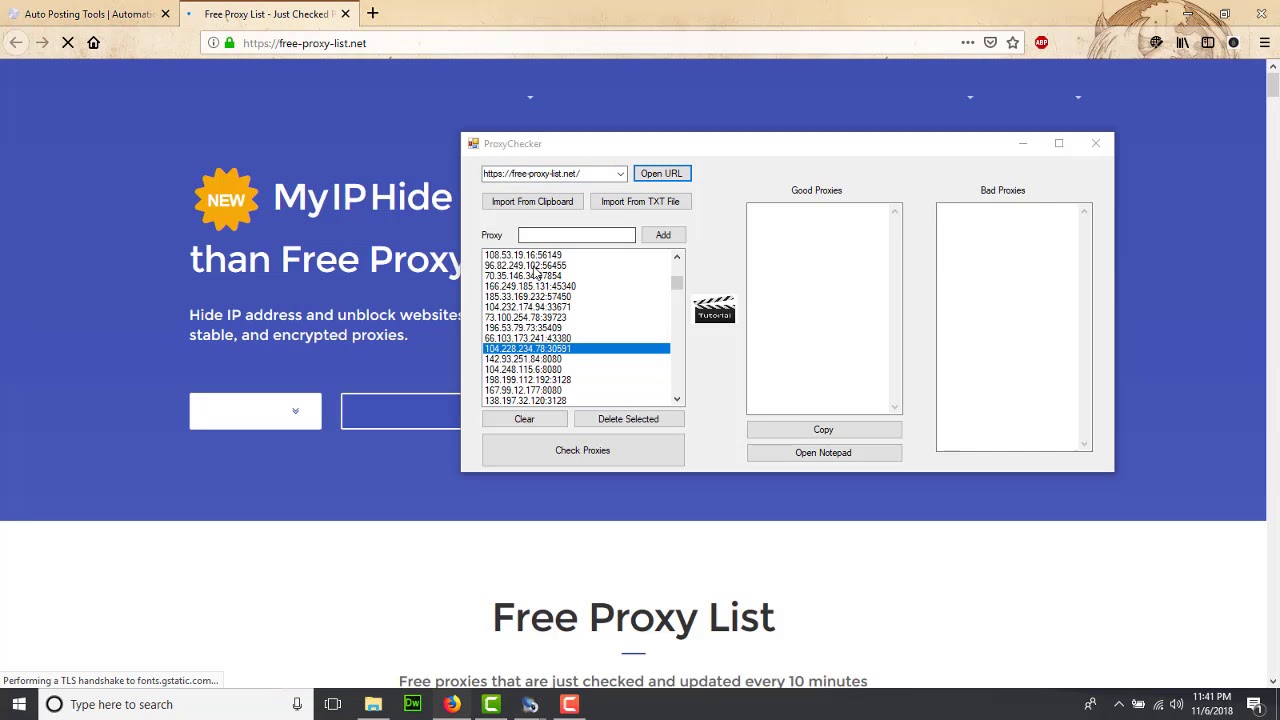

Once you have picked a proxy scraper, it is important to configure it properly for optimal performance. Depending on the tool, you may need to enter parameters such as the target websites, the scraping interval, and the type of proxies you intend to gather. Most scrapers let you adjust these settings so that you can target specific regions or proxy types. You should also familiarize yourself with the interface and options to make sure you are using the tool to its full capacity.

After configuring the tool, run a test scrape to assess its performance. This initial run will help you detect any problems with the proxy sources or settings. Pay close attention to the response times and success rates of the proxies gathered. Make adjustments based on the results, such as refining your proxy sources or tuning the settings for better performance. This iterative process will help you establish a solid proxy list tailored to your scraping needs.
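At its core, a scraping run reduces to pulling `ip:port` pairs out of whatever text a source returns. The following is a minimal sketch of that step, assuming IPv4 addresses only. The name `extract_proxies` is hypothetical, and fetching the page itself is left out.

```python
import re

# Matches IPv4:port pairs such as 203.0.113.7:8080 inside arbitrary text.
PROXY_PATTERN = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):(\d{1,5})\b")


def extract_proxies(text):
    """Return unique 'ip:port' strings found in text, in first-seen order."""
    seen = set()
    found = []
    for ip, port in PROXY_PATTERN.findall(text):
        # Reject syntactically matching but impossible addresses and ports.
        octets_ok = all(0 <= int(octet) <= 255 for octet in ip.split("."))
        port_ok = 1 <= int(port) <= 65535
        candidate = f"{ip}:{port}"
        if octets_ok and port_ok and candidate not in seen:
            seen.add(candidate)
            found.append(candidate)
    return found
```

Running this on the raw output of a test scrape gives a quick count of how many distinct candidates a source actually yields.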

Finding Free Proxy Sources

When searching for free proxy sources, it helps to explore a range of websites and forums dedicated to sharing proxy lists. Websites that specialize in free proxies often keep their lists current and organized by criteria such as connection speed, type (HTTP or SOCKS), and anonymity level. Taking part in online communities can also yield useful recommendations and newly discovered sources. Sites like GitHub and Reddit can be good places to find user-posted proxy lists that are refreshed frequently.

Another effective method is to use proxy scraping tools designed to harvest proxies from many locations across the web. These tools simplify the task and save you the effort of hunting for proxies manually. Both free and paid versions exist, and many of the free tools offer substantial features. Look for scrapers that let you filter results by criteria like speed and anonymity, as this will help you build a high-quality proxy list.

Additionally, consider checking web scraping websites and SEO tools that list proxy sources. Some websites publish curated proxy lists along with reviews of their performance. They often include tutorials on how to collect proxies for free, describing the most effective methods and strategies. By using these resources, you can build a varied and reliable proxy list, which is crucial for effective web scraping and automation.
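Filtering by speed and anonymity can be sketched as a simple pass over records. The `ProxyRecord` fields, the default thresholds, and the sample values used here are illustrative assumptions, not the output format of any particular scraper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProxyRecord:
    address: str       # "ip:port"
    protocol: str      # "http", "socks4", "socks5", ...
    latency_ms: float  # measured response time in milliseconds
    anonymity: str     # "transparent", "anonymous" or "elite"


def filter_proxies(records, max_latency_ms=1500.0,
                   allowed_anonymity=("anonymous", "elite"), protocols=None):
    """Keep records that meet the criteria, sorted fastest first."""
    kept = [
        r for r in records
        if r.latency_ms <= max_latency_ms
        and r.anonymity in allowed_anonymity
        and (protocols is None or r.protocol in protocols)
    ]
    return sorted(kept, key=lambda r: r.latency_ms)
```

For example, `filter_proxies(records, protocols={"socks5"})` would keep only SOCKS5 entries that also pass the latency and anonymity limits.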

Assessing Proxy Quality

When building a list of proxy servers, verifying their quality is crucial to ensure consistent access for your needs. A reliable proxy should offer fast response times, stability, and security. A good proxy checker can help you evaluate these criteria and filter out proxies that do not meet them. Automated proxy checking tools also save effort by running bulk checks on your list, so you can quickly identify the proxies that work.
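A bulk check can be sketched with a thread pool from the standard library. Here `check` stands for whatever test you use (a placeholder supplied by the caller, not a real library function), and the worker count of 20 is an arbitrary assumption.

```python
from concurrent.futures import ThreadPoolExecutor


def check_all(proxies, check, max_workers=20):
    """Run check(proxy) for every proxy in parallel; return the working ones.

    `check` is any callable that returns True for a working proxy. An
    exception raised by `check` is treated as a failed proxy.
    """
    proxies = list(proxies)

    def safe_check(proxy):
        try:
            return bool(check(proxy))
        except Exception:
            return False

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with proxies.
        results = list(pool.map(safe_check, proxies))
    return [proxy for proxy, ok in zip(proxies, results) if ok]
```

Threads suit this job because each check spends most of its time waiting on the network rather than computing.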

Speed is one of the most important factors in proxy quality. You can measure how quickly a proxy responds to requests, which directly affects the performance of your web scraping or automation tasks. Be sure to check latency, as a high-latency proxy can slow operations down considerably. Some proxy checkers specifically measure latency, which lets you build a list ordered from the fastest proxy to the slowest.

Another essential part of assessing proxy quality is checking anonymity. This means making sure the proxy does not reveal your real IP address, which is crucial for maintaining privacy. You can use various online services to find out whether your proxies are transparent, anonymous, or elite. The best proxies maintain a high level of privacy while remaining reliable. By testing these aspects thoroughly, you can create a robust proxy list suited to your particular applications.
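One rough way to compare latency is to time how long a TCP connection to each proxy takes. This is a sketch under that assumption: connect time is only a first approximation and does not confirm that the proxy actually relays requests. The function names are hypothetical.

```python
import socket
import time


def tcp_latency(host, port, timeout=5.0):
    """Return the seconds taken to open a TCP connection, or None on failure."""
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return time.perf_counter() - start
    except OSError:
        # Covers refused connections, timeouts and DNS failures.
        return None


def rank_by_latency(proxies, measure=tcp_latency):
    """Return (latency, host, port) tuples for reachable proxies, fastest first."""
    timed = []
    for host, port in proxies:
        latency = measure(host, port)
        if latency is not None:
            timed.append((latency, host, port))
    return sorted(timed)
```

The `measure` parameter lets you swap in a fuller test, such as timing a complete request through the proxy, without changing the ranking logic.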

Testing Proxy Anonymity

When using proxies, understanding their level of anonymity is crucial for privacy and security during online activities. Proxies are commonly grouped into transparent, anonymous, and elite proxies, and knowing the differences will help you select the right one. Transparent proxies reveal your IP address to websites, while anonymous proxies conceal it. Elite proxies provide the highest level of anonymity by not identifying themselves as proxies at all, which makes them well suited to sensitive tasks.

To check the anonymity of a proxy, you can use online tools and services that show how your IP address appears when you connect through the proxy. These tools usually indicate whether your real IP is exposed or hidden. A recommended approach is to compare results from several testing sites to confirm that the reported anonymity level is consistent. It is also advisable to check for DNS leaks, which can accidentally expose your real IP address even when you are using a proxy.

If you discover that a proxy does not offer the required level of privacy, you may need to switch to a different type. Free proxy checkers and verification tools available in 2025 can help identify elite proxies that offer stronger anonymity. Always remember to research and use trustworthy sources for proxy scraping to improve the security of your data collection activities.
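The logic such testing tools apply can be sketched as follows, assuming you already know what a test server saw when you connected through the proxy. The header list is illustrative rather than exhaustive, and `classify_anonymity` is a hypothetical helper, not the method of any specific service.

```python
import re

# Headers that proxies commonly add; an illustrative list, not a complete one.
PROXY_HEADERS = ("via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection")


def classify_anonymity(real_ip, seen_ip, seen_headers):
    """Classify a proxy from what a test server observed.

    real_ip      -- your own public IP address
    seen_ip      -- the client IP the test server saw
    seen_headers -- dict of request headers the test server received
    """
    headers = {name.lower(): str(value) for name, value in seen_headers.items()}
    # Split header values into tokens so that '198.51.100.5' does not
    # accidentally match inside a longer address.
    tokens = set()
    for value in headers.values():
        tokens.update(re.split(r"[,;=\s\"]+", value))
    if seen_ip == real_ip or real_ip in tokens:
        return "transparent"
    if any(name in headers for name in PROXY_HEADERS):
        return "anonymous"
    return "elite"
```

A proxy that forwards your address in `X-Forwarded-For` is classed as transparent, one that adds only a `Via` header as anonymous, and one that adds neither as elite.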

Using Proxies for Data Extraction

When you engage in web scraping, using proxies is key to maintaining anonymity and avoiding IP bans. Proxies act as intermediaries between your scraping tool and the target website, letting you send requests without disclosing your real IP address. This is especially important when scraping large amounts of data or accessing the same site frequently, as many websites have methods to detect and restrict automated scraping.
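One common way to spread requests across a list is round-robin rotation, dropping proxies that fail repeatedly. The class below is a minimal sketch of that idea. The name `ProxyRotator` and the default failure threshold are assumptions, not part of any scraping library.

```python
class ProxyRotator:
    """Hand out proxies round-robin and drop ones that keep failing."""

    def __init__(self, proxies, max_failures=3):
        # dict.fromkeys removes duplicates while keeping the original order.
        self._proxies = list(dict.fromkeys(proxies))
        self._failures = {proxy: 0 for proxy in self._proxies}
        self._max_failures = max_failures
        self._index = 0

    def next(self):
        """Return the next proxy in rotation."""
        if not self._proxies:
            raise RuntimeError("no working proxies left")
        proxy = self._proxies[self._index % len(self._proxies)]
        self._index += 1
        return proxy

    def report_failure(self, proxy):
        """Count a failure; remove the proxy once it reaches the limit."""
        if proxy not in self._failures:
            return
        self._failures[proxy] += 1
        if self._failures[proxy] >= self._max_failures:
            self._proxies.remove(proxy)
            del self._failures[proxy]

    def report_success(self, proxy):
        """Reset the failure count after a successful request."""
        if proxy in self._failures:
            self._failures[proxy] = 0
```

A scraper would call `next()` before each request and report the outcome afterwards, so that blocked or dead proxies fall out of the rotation on their own.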

Several types of proxies can be used for web scraping, including HTTP, SOCKS4, and SOCKS5 proxies. HTTP proxies are suitable for web requests, while SOCKS proxies are more flexible and can handle different types of traffic. The right proxy type depends on your particular scraping needs. For instance, if you require high anonymity and support for multiple protocols, SOCKS5 proxies are a strong choice.

To improve your scraping efficiency, consider using a proxy scraper to gather a list of free proxies. Be sure to also test them with a proxy checker to assess their speed and anonymity levels. Combining a fast proxy scraper with a reliable proxy checker will help you build a dependable proxy list, so that your web scraping tasks run smoothly and without interruptions.
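For HTTP proxies, Python's standard library is enough to route requests. The sketch below uses `urllib.request.ProxyHandler`, with a placeholder address from a documentation range. Note that `urllib` has no built-in SOCKS support, so SOCKS4 and SOCKS5 proxies need a third-party library instead.

```python
import urllib.request


def build_opener_for(proxy_url):
    """Return an opener that sends http:// and https:// requests via proxy_url.

    proxy_url is an HTTP proxy URL such as 'http://203.0.113.7:8080'.
    """
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)
```

With a working proxy, `build_opener_for("http://203.0.113.7:8080").open("https://example.com", timeout=10)` would fetch the page through it. The address shown is only a placeholder.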

Best Practices for Proxy Management

When managing your proxy list, it is crucial to update it regularly to keep it performing well. This means checking the proxies periodically to evaluate their response times and anonymity levels. A trustworthy proxy checker will help you spot inactive proxies and filter out unusable ones. This approach not only improves your scraping efficiency but also helps you avoid blocks from target websites.
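Regular maintenance can be sketched as a refresh pass that re-checks only the entries that have gone stale. The one-hour default, the dict layout, and the `check` callable are assumptions chosen for illustration.

```python
import time


def refresh_proxy_list(entries, check, max_age_seconds=3600, now=None):
    """Re-check entries older than max_age_seconds and drop the dead ones.

    entries -- dict mapping a proxy address to the time it last passed a check
    check   -- callable returning True when a proxy still works
    """
    now = time.time() if now is None else now
    refreshed = {}
    for proxy, last_ok in entries.items():
        if now - last_ok <= max_age_seconds:
            refreshed[proxy] = last_ok  # checked recently; keep as-is
        elif check(proxy):
            refreshed[proxy] = now      # still alive; update the timestamp
        # otherwise the proxy failed its re-check and is dropped
    return refreshed
```

Running a pass like this on a schedule keeps the list small and current without re-testing every proxy each time.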

Additionally, it is essential to understand the differences between HTTP, SOCKS4, and SOCKS5 proxies. Each type has its own applications and advantages. By choosing the right proxy type for your project, whether for web scraping or automation, you can improve efficiency and reduce connection and compatibility problems. Knowing these distinctions will help you make informed choices when building and refining your proxy list.

Lastly, consider the source of your proxies carefully. While free proxies can be tempting, they often come with poor speed and reliability. Investing in private proxies or a reputable provider can significantly improve your scraping results. Regularly evaluating the quality of your proxies will also help you find the best-performing ones and make your data extraction more effective. By adopting these best practices, you can keep your proxy management efficient and productive.