Hello! If you're here, it's probably because I'm reviewing a model. Anyway, I created this rentry page so I don't have to keep repeating my setup. Besides that, I'm still kind of an amateur in these topics, so do correct me if I'm wrong or missing something. Also, I use GIFs, so open them in a new tab if you come across any, as they may not loop.

Roleplay Setup

I've been roleplaying with LLMs for a few years now, since back when Character.AI was the popular thing. I was frustrated with its lack of NSFW capabilities, so I did some research here and there and discovered SillyTavern and Hugging Face. Below is the setup I settled on after a lot of testing and reading guides. My reasons for this setup are explained in a later section. I only use a few parts of the SillyTavern card system: the Description, the Character Note and the Author Note. Nothing else is used.

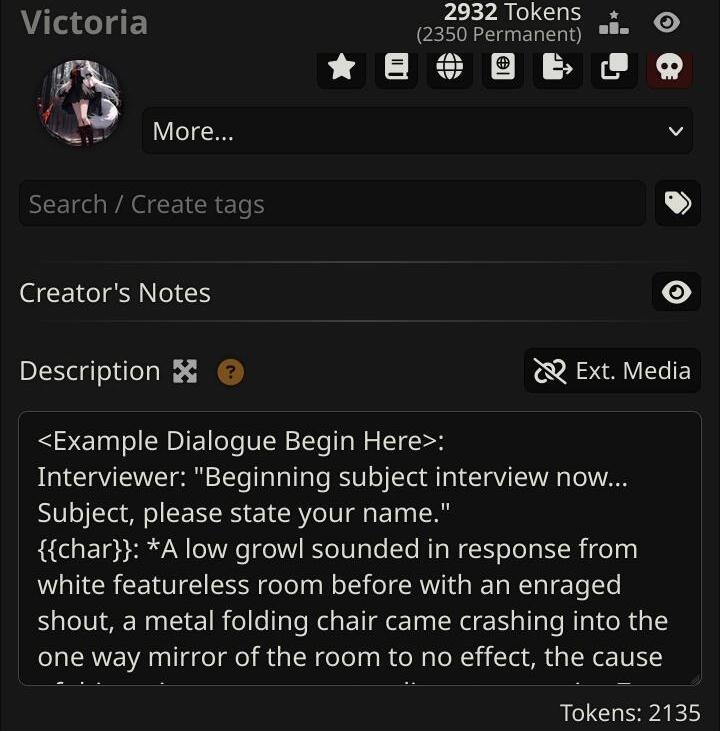

Description

To start off, I'll use the card 'Victoria' by ignosum as an example. The key content in this section is usually example dialogue. Since this card already uses the Alichat format, it needs minimal modification. If a card has no example dialogue, I usually copy and paste some from its greetings. The format is usually:

<Example Dialogue begins here>

{{char}}: “Example Dialogue”

{{char}}: “Stuff Stuff”

<Example Dialogue Ends here>

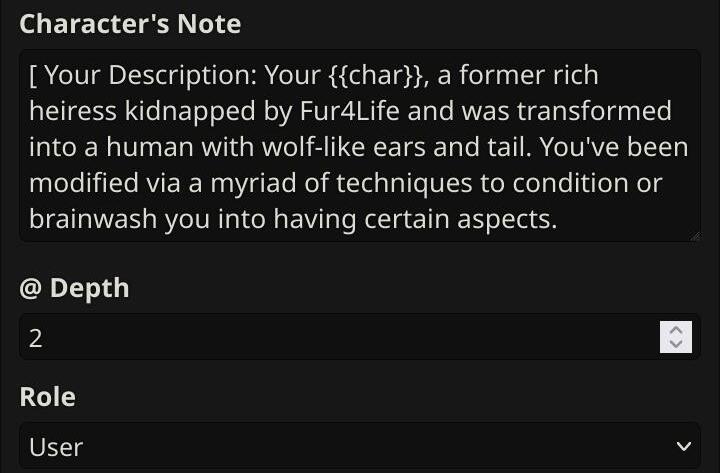

Character Note

This is where all the attributes, info and other descriptors of the character are listed. Its role is set to User, at depth 2. The format is usually plain text that sorts the information into categories, written in the 2nd person.

Author Note

This holds the personality or other very important info. Its role is set to System, at depth 2. The format is the same as the Character Note.

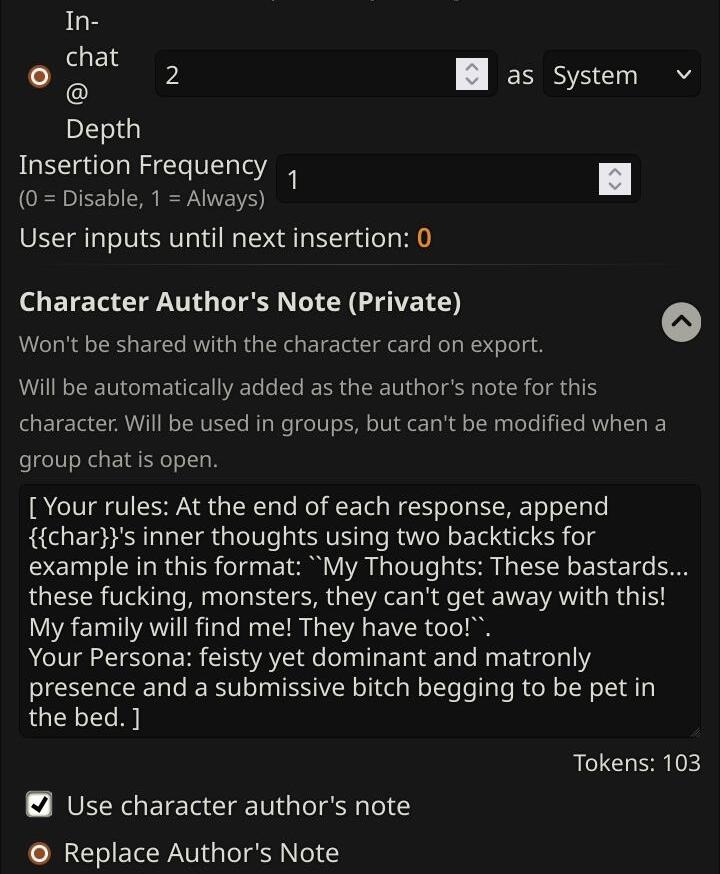

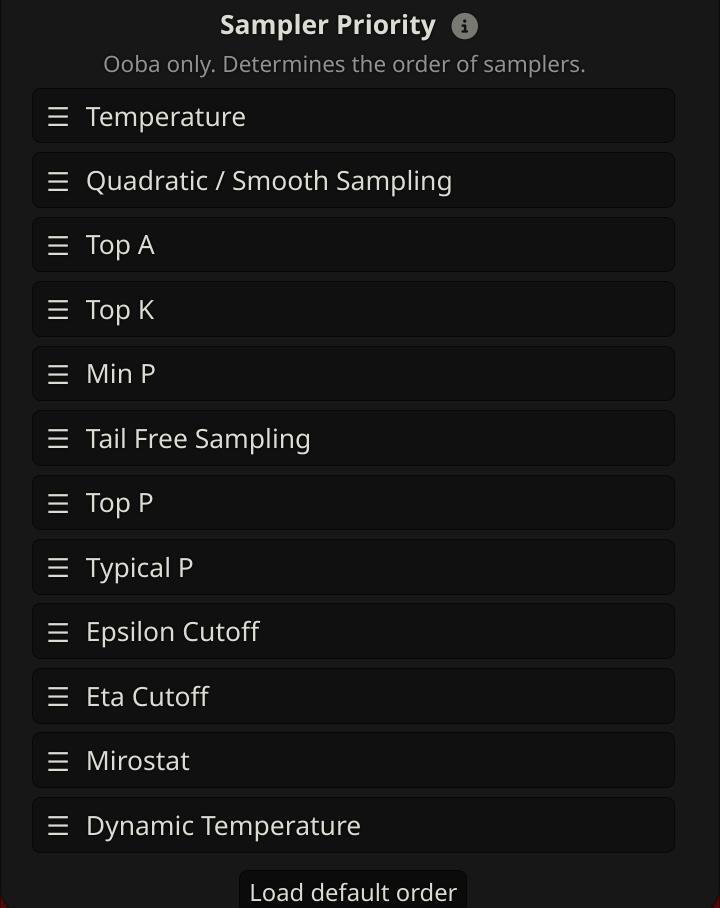

Sampler Settings

The settings really differ depending on the model and its parameter count. I'll specify them whenever I use a specific model. The sampler settings I use consist of only Temperature, Top_A and Smoothing Factor. Together with their variants, they're what I call the Pandora's Box series. I also put Temperature at the top of the sampling order and use the DRY repetition penalty.

Backend

The backend I use is the Colab notebook provided with Oobabooga's Text-Generation-WebUI. I use these launch flags:

When using exllamav2_hf:

--n-gpu-layers 128 --loader exllamav2_hf --cache_4bit --max_seq_len 8192 --alpha_value 2.5 --no_inject_fused_attention --no_use_cuda_fp16 --disable_exllama --no_flash_attn

When using llamacpp_hf:

--n-gpu-layers 128 --loader llamacpp_hf --n_ctx 8192 --alpha_value 2.5 --no_inject_fused_attention --no_use_cuda_fp16 --disable_exllama --disable_exllamav2 --streaming-llm --no_flash_attn

Frontend

The latest staging branch of SillyTavern on Android, running in Termux.

Rationale

This section covers the reasoning behind my setup.

Rationale on Description

Putting example dialogue in the Description was mostly inspired by the Alichat format. I put it there instead of in SillyTavern's example dialogue box because:

- In my experience some time ago, the model would sometimes regurgitate the example dialogue, and it wasn't consistent.

- Something has to occupy Description.

The XML-style tags are there to tell the model that this is indeed example dialogue.

Rationale on Character Note

Several guides recommended the Author Note as a reliable way to repeatedly and consistently remind the model of necessary information. The same principle can be applied through the Character Note. I prefer the Character Note over the traditional Description for one key reason, 'information consistency', and also because of the way context piles up.

If I used the Description to store character descriptors, there is, in my experience, a good chance the model would take 'creative liberties' with the provided info.

Since the model is easily influenced by whatever is most recent, I prefer the Character Note, especially its in-chat depth option. I set it at depth 2. That means the information is inserted before the 2 most recent messages, and it is re-inserted at that spot with every new message. The lower the depth, the more impact the information has on the model, and the firmer the model's grip on that specific info. At that point, the model no longer decides to take 'creative liberties'.

I use 2 because depth 1 messes up the model's sense of 3rd person narration. It starts speaking in the 2nd person, confused about who is the user, who is the mysterious 'you' and who is the character.

I rewrote the character's attributes in the 2nd person because of a reddit post by u/shrinkedd about an alternative concept for system prompts. I noticed their example system prompt used the 2nd person and decided to try it, since commenters on the post claimed it improved natural immersion in roleplay. Does it work? I'm not exactly sure, but there are some noticeable tidbits here and there. It's also useful for spotting when the model goes OOC in the middle of a roleplay session.

The Character Note's role is User. That's a preference u/shrinkedd stated in a comment on a different reddit post, and I adopted it because their reasoning makes sense. The model pays more attention to information coming from the user, since the user is both an actor in the roleplay and its initiator. It also pairs well with the info being written in the 2nd person.
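To make 'depth' concrete, here's a minimal Python sketch of how an in-chat note at depth N ends up in the prompt. It is not SillyTavern's actual code. `Message`, `inject_at_depth` and `build_prompt` are hypothetical names. The order of two notes that share the same depth is also my own assumption.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str     # "system", "user" or "assistant"
    content: str


def inject_at_depth(history, note, depth):
    """Return a copy of history with note placed before the last `depth` messages.

    depth=0 would put the note at the very end of the chat.
    """
    index = max(0, len(history) - depth)
    return history[:index] + [note] + history[index:]


def build_prompt(history, character_note, author_note, depth=2):
    """Rebuilt on every turn, so both notes always stay `depth` messages from the end."""
    # Character Note: role User, written in the 2nd person.
    prompt = inject_at_depth(history, Message("user", character_note), depth)
    # Author Note: role System. Placing it after the Character Note is an
    # arbitrary choice in this sketch, not confirmed SillyTavern behaviour.
    return inject_at_depth(prompt, Message("system", author_note), depth)


chat = [
    Message("assistant", "Greeting..."),
    Message("user", "Hi!"),
    Message("assistant", "Reply..."),
    Message("user", "What next?"),
]
for m in build_prompt(chat, "You are Victoria...", "Personality: ..."):
    print(m.role, "|", m.content)
```

Because the prompt is rebuilt every turn, the notes slide forward with the chat instead of getting buried at the top like the Description does.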

Rationale on Author Note

To my surprise, while testing I discovered you can use at least 2 different author notes at the same time: the main Author Note and the Character Note. Just make sure to set their roles separately. Here, the Author Note's role is set to System at depth 2. Usually only the most important information, such as the personality, goes here. This is efficient, because the System role can be used to reinforce the model's behaviour.

Rationale on Sampler Settings

This was the biggest cog to figure out, and it took me the longest. Before Artefact's tool appeared, I was forced to use sampler settings recommended by other people and generalize them to an extent. Even then, I wasn't sure whether they were optimal for the model I was using. As an amateur with only a rough idea of how the various samplers work, I had to test them repeatedly and judge the results by feel. Now, with Artefact's tool, I have more control over how I set and test sampler settings.

Pandora’s Box Series

When I was messing with the Smoothing Factor, I noticed something different: the results shared similar characteristics, so I decided to set it at a smoothing factor of 0.01.

It turns out that using Temperature Last (applying Temperature at the end of the sampling order) makes Temperature too stable regardless of its value. The token cutters trim the pool before Temperature is applied, so the set of candidate tokens no longer changes with the Temperature value. It doesn't sit right with me that the randomness factor (Temperature), a key component of every roleplay session, is frozen into an unchanging state of stability. Sure, that's useful for non-roleplay purposes such as objectivity and other tasks, but I like some creativity from the LLM that visibly scales. So the Pandora's Box series puts Temperature first in the sampling order.

The series focuses on the top 48 tokens, using the avocado prompt from Artefact's tool as a reference. The name Pandora's Box comes from how wildly the results varied during testing, since the temperature scaling is so sensitive. The goal of this series is to push Temperature's creativity to the edge while keeping a minimum of stability and dynamism.
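Here's a toy Python sketch of why the sampling order matters. It assumes Top_A keeps tokens whose probability is >= a * (top probability)^2. The logits are made up and the helper names are hypothetical. The point is only that with Temperature first, the number of surviving tokens moves with the temperature, while with Temperature last it stays fixed.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities after dividing by temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def top_a_keep(probs, a):
    """Indices kept by Top_A: probability >= a * (top probability) ** 2."""
    threshold = a * max(probs) ** 2
    return [i for i, p in enumerate(probs) if p >= threshold]


def surviving_tokens(logits, temperature, a, temperature_first):
    if temperature_first:
        # Temperature reshapes the distribution before Top_A cuts it.
        return top_a_keep(softmax(logits, temperature), a)
    # Temperature last: Top_A cuts the unscaled distribution, so which tokens
    # survive never depends on the temperature value.
    return top_a_keep(softmax(logits), a)


logits = [5.0, 4.0, 3.0, 2.0, 1.0, 0.0]  # made-up toy logits
for t in (0.7, 1.0, 1.5):
    first = len(surviving_tokens(logits, t, 0.2, temperature_first=True))
    last = len(surviving_tokens(logits, t, 0.2, temperature_first=False))
    print(f"temp {t}: {first} tokens (temp first) vs {last} (temp last)")
```

With these toy numbers, Temperature first keeps 2, 3 and 4 tokens at 0.7, 1.0 and 1.5. Temperature last keeps 3 every time.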

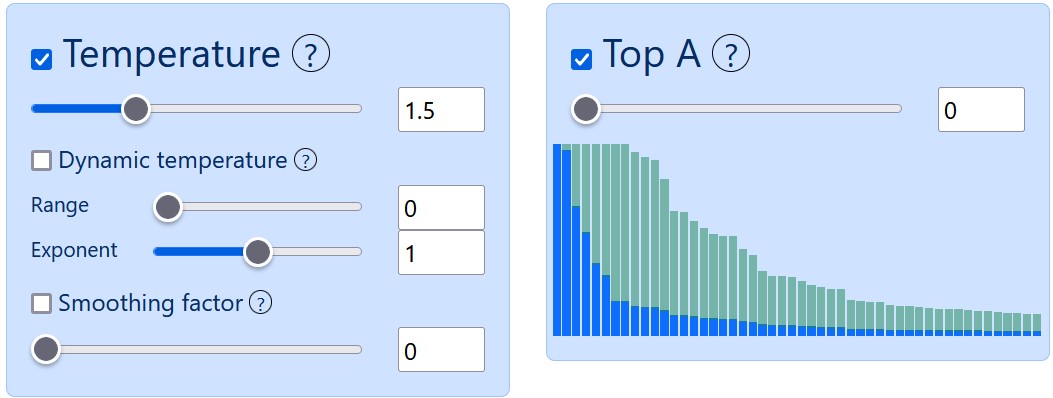

Temperature

The only temperature-related settings I use are Temperature and Smoothing Factor. I like Smoothing Factor because of how it 'smoothens' the pool of candidate tokens into a more even descending curve, from the most likely token down to the least likely one. Compared to using only Temperature, it gives tokens on the lower end a fairer chance of being chosen. The most likely tokens no longer hog most of the probability, and the least likely tokens no longer all end up with nearly identical probabilities.

[Image: using only Temperature]

[Image: using both Temperature and Smoothing Factor]

The lower the Smoothing Factor, the more 'creative' the model becomes. The higher it is, the more 'deterministic' the model becomes. When testing, I usually adjust both of them together. Also note that the Temperature values for Pandora's Box may differ from model to model. A pattern I noticed is that larger models tend to have more leeway for higher creativity, while smaller models need a stricter Temperature. It's probably because the candidate pool for smaller models is so small that the chance of 'fancy' words appearing scales sharply with Temperature.
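Here's a minimal Python sketch of the basic quadratic form of Smoothing Factor (Kalomaze's quadratic sampling), as I understand it. Each logit is lowered by smoothing_factor times its squared distance from the top logit. Newer text-generation-webui versions also have a smoothing curve parameter, which isn't modelled here. This sketch also assumes temperature is applied before the transform, so check your backend's actual sampler order.

```python
import math


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def quadratic_smoothing(logits, smoothing_factor):
    """Pull every logit down by smoothing_factor * (distance from the top logit)^2.

    Small factors squeeze the logits together (flatter, more 'creative');
    large factors push low logits far down (sharper, more 'deterministic').
    A factor of exactly 0 would make every logit equal, i.e. a uniform pick.
    """
    max_logit = max(logits)
    return [max_logit - smoothing_factor * (x - max_logit) ** 2 for x in logits]


def smoothed_probs(logits, smoothing_factor, temperature=1.0):
    # Assumption for this sketch: temperature first, then the quadratic transform.
    scaled = [x / temperature for x in logits]
    return softmax(quadratic_smoothing(scaled, smoothing_factor))


logits = [2.0, 1.0, 0.0, -1.0]  # toy logits, most to least likely
print("temp only:", [round(p, 3) for p in softmax(logits)])
for sf in (0.1, 0.27, 1.0):
    print(f"sf {sf}:", [round(p, 3) for p in smoothed_probs(logits, sf)])
```

The token ranking never changes; only how evenly the probability is shared out does, which matches the 'more equal descending curve' described above.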

Other than that, I can't really use Dynamic Temperature, because it conflicts heavily with Smoothing Factor, which is designed around a single base Temperature value. Smoothing Factor is also really sensitive, especially at lower values. A reddit post by u/sophosympatheia discussing the viability of Smoothing Factor confirms this. I was one of the commenters there and helped out a bit.

Token Cutters

The token cutters I'm referring to are Top_K, Top_A, Tail-Free Z, Top_P, Min_P and Typical_P. Each cuts tokens based on a certain criterion. The key thing I considered when choosing one was 'token diversity', meaning I wanted to keep as many tokens as I could, to a certain extent. Why? To expand the LLM's vocabulary with possible ideas. So I excluded Top_K, because it keeps a fixed number of tokens regardless of whether they are suitable, which makes it not dynamic enough for token diversity.

[Image: using Top_K with an objective prompt]

[Image: using Min_P with an objective prompt]

I also excluded Top_P and Typical_P. Top_P is essentially similar to Top_K, except that it keeps top tokens until their summed probability reaches a set value, which isn't dynamic enough. That leaves Min_P, Tail-Free Z and Top_A. Which of the three to use really depends on how much of the token pool you want to cut off, so here's an analogy.

Imagine the whole token pool the model chooses from as a giant slab of meat. You want a nice dinner, but too much can be unhealthy or ruin the experience. You have these tools to cut the slab with: a scalpel (Top_A), a large regular knife (Min_P) and a big cleaver (Tail-Free Z). Personally, I like to eat as much as possible, so I use the scalpel to trim just a bit.
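To show the difference between the knives, here's a Python sketch of four of the cutters on a toy distribution, using their commonly described rules:

- Top_K keeps a fixed count.
- Top_P keeps tokens until their cumulative probability reaches p.
- Min_P keeps tokens with probability >= min_p * top probability.
- Top_A keeps tokens with probability >= a * (top probability)^2.

Tail-Free Z and Typical_P are left out for brevity. The function names are my own, not any backend's API.

```python
def ranked(probs):
    """Token indices from most to least likely."""
    return sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)


def top_k(probs, k):
    # Fixed count: the k most likely tokens, however (un)suitable they are.
    return sorted(ranked(probs)[:k])


def top_p(probs, p):
    # Keep the most likely tokens until their summed probability reaches p.
    kept, total = [], 0.0
    for i in ranked(probs):
        kept.append(i)
        total += probs[i]
        if total >= p:
            break
    return sorted(kept)


def min_p(probs, m):
    # Cutoff scales linearly with the top token's probability.
    threshold = m * max(probs)
    return [i for i, q in enumerate(probs) if q >= threshold]


def top_a(probs, a):
    # Cutoff scales with the square of the top token's probability, so at the
    # same setting its cutoff is lower than Min_P's whenever the top token is below 100%.
    threshold = a * max(probs) ** 2
    return [i for i, q in enumerate(probs) if q >= threshold]


probs = [0.5, 0.2, 0.1, 0.08, 0.05, 0.04, 0.02, 0.01]  # toy distribution
print("Top_K 3:   ", len(top_k(probs, 3)))
print("Top_P 0.75:", len(top_p(probs, 0.75)))
print("Min_P 0.15:", len(min_p(probs, 0.15)))
print("Top_A 0.15:", len(top_a(probs, 0.15)))
```

On this toy distribution, Top_A 0.15 keeps 6 of the 8 tokens while Min_P 0.15 keeps 4, which is the scalpel-versus-knife difference in miniature.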

Repetition Penalties

Repetition penalties are, in my opinion, mostly useful for models that suffer from samey word usage. The classic Repetition Penalty is great, but it's flawed to an extent. According to Kalomaze (u/kindacognizant):

... more of a bandaid fix than a good solution to preventing repetition...

So I didn't really use any kind of repetition penalty until I came across an interesting pull request for text-generation-webui called DRY. It fixes most of the problems of traditional repetition penalties and has been well received by many, so I decided to use it together with Pandora's Box.
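Here's a simplified Python sketch of the core DRY idea as I understand it from the pull request. Suppose the end of the context repeats an earlier sequence of n tokens. Once n reaches allowed_length, the token that followed that earlier sequence gets penalty = multiplier * base^(n - allowed_length), subtracted from its logit. The numbers below (0.8 / 1.75 / 2) are example values, so double-check the PR for the real defaults. The real implementation also handles sequence breakers and other details that this slow O(N^2) toy skips, and the function names are hypothetical.

```python
def dry_penalties(context, multiplier=0.8, base=1.75, allowed_length=2):
    """Return {token: penalty} for tokens that would extend a repeated sequence.

    Simplified sketch: no sequence breakers, no cap on match length.
    """
    penalties = {}
    n = len(context)
    # j marks the end of an earlier stretch of text; context[j + 1] is the
    # token that came right after it.
    for j in range(n - 1):
        # Count how many tokens ending at j match the tokens ending the context.
        length = 0
        while length <= j and context[j - length] == context[n - 1 - length]:
            length += 1
        if length >= allowed_length:
            follower = context[j + 1]
            penalty = multiplier * base ** (length - allowed_length)
            # Keep the strongest penalty if a token follows several matches.
            penalties[follower] = max(penalties.get(follower, 0.0), penalty)
    return penalties


def apply_dry(logits, context, **kwargs):
    """Subtract each DRY penalty from that token's logit (logits: dict token -> float)."""
    adjusted = dict(logits)
    for token, penalty in dry_penalties(context, **kwargs).items():
        if token in adjusted:
            adjusted[token] -= penalty
    return adjusted


context = "the cat sat on the mat and the cat".split()
print(dry_penalties(context))  # {'sat': 0.8}
```

Unlike the classic penalty, ordinary words that merely appear often aren't punished. Only the token that would continue an already-repeated run is, and the penalty grows exponentially the longer that run gets.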

Rationale on Backend and Frontend

Well... I don't have the financial buying power for a high-spec NASA computer, so I settled on Colab after extensively searching for free alternatives. I also have access to a few different Google accounts, so the usage hours I'm given aren't a bother. I prefer Text-Gen-WebUI because of its ease of use, its context extending feature, and ExLlamaV2's model compression, which helps greatly with the 15 GB VRAM limit of Colab's free T4 GPU. Here's a rundown of the launch flags I use:

- --n-gpu-layers 128 is set so the whole model runs in VRAM, since running from Colab's system RAM is very slow. This flag only matters for the llama.cpp loaders.

- --loader exllamav2_hf for 20B models, and for smaller models when an EXL2 version is available. Otherwise, I create my own repositories for the GGUF versions, which are the predominant format on Hugging Face, and use --loader llamacpp_hf.

- --cache_4bit lets a 20B model at 4.25 bpw fit with 8k context on free Colab. Quite a steal.

- --max_seq_len 8192 for exllamav2 and --n_ctx 8192 for llamacpp.

- --alpha_value 2.5: alpha_value is explained in the Miscellaneous section. The actual value depends on the model's config.json and its native context length.

- --no_inject_fused_attention --no_use_cuda_fp16 --disable_exllama to save on VRAM, as I don't use those features. I also add --disable_exllamav2 when using llamacpp.

- --no_flash_attn because Colab's T4 is a Turing GPU, which isn't compatible with flash attention. That's a shame, because if it worked I could fit another 2k of context.

- --streaming-llm is used with llamacpp to speed up context processing. It avoids re-evaluating the whole prompt when old messages are cut from the context.
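Here's some rough arithmetic behind the --cache_4bit point, as a Python sketch. Quantized weights take about (parameters * bits per weight / 8) bytes. This treats '20b' as exactly 20 billion parameters. It also ignores the KV cache, activations and CUDA overhead, which all have to fit in whatever is left, so it's a ballpark figure only.

```python
def weight_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of quantized weights in GB (10**9 bytes).

    Billions of parameters * bits per weight / 8 bits per byte.
    Ignores KV cache, activations and CUDA overhead.
    """
    return params_billions * bits_per_weight / 8


VRAM_GB = 15.0  # free Colab T4 limit as stated above
weights = weight_size_gb(20, 4.25)  # assumes "20b" means exactly 20 billion
print(f"weights ~{weights:.3f} GB, leaving ~{VRAM_GB - weights:.3f} GB for cache and overhead")
```

So the weights alone take roughly 10.6 GB. That leaves only a little over 4 GB for everything else, which is why quantizing the KV cache matters at 8k context.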

Note that I use the 'hf' versions of the loaders. I did some digging around and heard that these versions, which go through the transformers samplers, are better because they're compatible with more sampling parameters and process faster. For the frontend, it's more of a preference.

Miscellaneous

This section covers some extra stuff.

Table for testing Pandora’s Box

During testing, I created these tables with Artefact's tool, based on the avocado prompt. They make it easier to find the optimal Pandora's Box settings for each specific model.

Top_A version

Low Probabilities

| Temperature | Top_A | Smoothing Factor | Token |
|---|---|---|---|
| 1 | 0.01 | 0.27 | 28th token: functioning 0.035% |
| 0.98 | 0.01 | 0.25 | 30th token: improvement 0.031% |
| 0.99 | 0.01 | 0.22 | 32nd token: synthesis 0.026% |
| 0.95 | 0.01 | 0.2 | 33rd token: strengthening 0.025% |
| 0.98 | 0.01 | 0.21 | 34th token: creation 0.025% |
| 0.95 | 0.01 | 0.19 | 35th token: best 0.024% |
| 0.96 | 0.01 | 0.19 | 37th token: suppression 0.023% |
| 0.97 | 0.01 | 0.19 | 38th token: absorption 0.023% |
| 0.98 | 0.01 | 0.19 | 40th token: nervous 0.021% |
| 0.99 | 0.01 | 0.19 | 43rd token: supply 0.021% |
| 0.95 | 0.01 | 0.17 | 46th token: optimum 0.019% |
| 0.99 | 0.01 | 0.18 | 48th token: loss 0.019% |

High Probabilities

| Temperature | Top_A | Smoothing Factor | Token |
|---|---|---|---|
| 0.95 | 0.02 | 0.25 | 23rd token: integrity 0.081% |
| 1 | 0.03 | 0.23 | 28th token: functioning 0.080% |
| 0.96 | 0.02 | 0.2 | 30th token: improvement 0.080% |
| 1 | 0.04 | 0.18 | 32nd token: synthesis 0.080% |
| 0.96 | 0.04 | 0.16 | 34th token: creation 0.080% |
| 0.99 | 0.05 | 0.16 | 35th token: best 0.084% |
| 1 | 0.05 | 0.16 | 37th token: suppression 0.080% |
| 0.97 | 0.05 | 0.15 | 38th token: absorption 0.075% |
| 0.96 | 0.06 | 0.14 | 39th token: support 0.086% |
| 1 | 0.06 | 0.15 | 40th token: nervous 0.084% |
| 0.97 | 0.06 | 0.14 | 42nd token: stability 0.082% |
| 0.98 | 0.06 | 0.14 | 43rd token: supply 0.084% |
| 0.95 | 0.06 | 0.13 | 45th token: right 0.076% |
| 0.96 | 0.06 | 0.13 | 46th token: optimum 0.081% |
| 0.97 | 0.06 | 0.13 | 48th token: loss 0.078% |

Top_K version

Low Probabilities

| Temperature | Top_K | Smoothing Factor | Token |
|---|---|---|---|
| 0.95 | 30 | 0.25 | 30th token: improvement 0.022% |
| 0.95 | 31 | 0.21 | 31st token: digestive 0.022% |
| 0.95 | 32 | 0.21 | 32nd token: synthesis 0.021% |
| 0.95 | 33 | 0.2 | 33rd token: strengthening 0.025% |
| 0.95 | 34 | 0.2 | 34th token: creation 0.023% |
| 0.97 | 35 | 0.2 | 35th token: best 0.022% |
| 0.95 | 36 | 0.19 | 36th token: building 0.021% |
| 0.96 | 37 | 0.19 | 37th token: suppression 0.023% |
| 0.97 | 38 | 0.19 | 38th token: absorption 0.023% |
| 0.95 | 39 | 0.18 | 39th token: support 0.023% |
| 0.96 | 40 | 0.18 | 40th token: nervous 0.023% |
| 0.96 | 41 | 0.18 | 41st token: structural 0.022% |
| 0.96 | 42 | 0.18 | 42nd token: stability 0.021% |
| 0.97 | 43 | 0.18 | 43rd token: supply 0.022% |
| 0.98 | 44 | 0.18 | 44th token: efficient 0.021% |
| 0.99 | 45 | 0.18 | 45th token: right 0.023% |
| 0.99 | 46 | 0.18 | 46th token: optimum 0.023% |
| 1 | 47 | 0.18 | 47th token: preservation 0.022% |
| 1 | 48 | 0.18 | 48th token: loss 0.021% |

High Probabilities

| Temperature | Top_K | Smoothing Factor | Token |
|---|---|---|---|
| 0.99 | 30 | 0.21 | 30th token: improvement 0.084% |
| 0.97 | 31 | 0.17 | 31st token: digestive 0.080% |
| 0.97 | 32 | 0.17 | 32nd token: synthesis 0.079% |
| 0.96 | 33 | 0.16 | 33rd token: strengthening 0.084% |
| 0.96 | 34 | 0.16 | 34th token: creation 0.080% |
| 0.95 | 35 | 0.15 | 35th token: best 0.078% |
| 0.97 | 36 | 0.15 | 36th token: building 0.083% |
| 0.97 | 37 | 0.15 | 37th token: suppression 0.081% |
| 0.95 | 38 | 0.14 | 38th token: absorption 0.084% |
| 0.95 | 39 | 0.14 | 39th token: support 0.079% |
| 0.96 | 40 | 0.14 | 40th token: nervous 0.079% |
| 0.97 | 41 | 0.14 | 41st token: structural 0.083% |
| 0.97 | 42 | 0.14 | 42nd token: stability 0.082% |
| 0.97 | 43 | 0.14 | 43rd token: supply 0.077% |
| 0.99 | 44 | 0.14 | 44th token: efficient 0.079% |
| 1 | 46 | 0.14 | 46th token: optimum 0.083% |
| 0.97 | 47 | 0.13 | 47th token: preservation 0.078% |
| 0.97 | 48 | 0.13 | 48th token: loss 0.078% |

alpha_value

One of the reasons I favor Text-Gen-WebUI over koboldcpp is its RoPE-based context scaling. In my experience, koboldcpp's automatic context system was quite buggy. I'm not sure whether that's been fixed by now, but I'm quite biased towards this feature. According to oobabooga in the text-generation-webui wiki:

alpha_value: Used to extend the context length of a model with a minor loss in quality. I have measured 1.75 to be optimal for 1.5x context, and 2.5 for 2x context. That is, with alpha = 2.5 you can make a model with 4096 context length go to 8192 context length.

Using this information, I came up with a formula for alpha_value: (0.25 * x) + y. Here, x is the number of 2k chunks added on top of the model's original context length, and y is the resulting context multiplier. For example, to extend a model's 4k context to 6k (4k x 1.5 = 6k), x is 1, since only one 2k chunk is added, and y is 1.5, so (0.25 * 1) + 1.5 = 1.75. The formula also reproduces the wiki's value for 4k to 8k: (0.25 * 2) + 2 = 2.5.
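Here's the formula above as a small Python sketch, so both data points from the wiki can be checked. The function name and the 2048-token chunk parameter are just how I've written it here. The formula itself is a personal rule of thumb, not something from the text-generation-webui docs.

```python
def alpha_value(original_ctx: int, target_ctx: int, chunk: int = 2048) -> float:
    """Rule of thumb: alpha = 0.25 * x + y.

    x = number of 2k chunks added on top of the original context
    y = target context / original context (the multiplier)
    """
    x = (target_ctx - original_ctx) / chunk
    y = target_ctx / original_ctx
    return 0.25 * x + y


for original, target in [(4096, 6144), (4096, 8192)]:
    print(original, "->", target, "alpha =", alpha_value(original, target))
# 4096 -> 6144 alpha = 1.75
# 4096 -> 8192 alpha = 2.5
```

For a different base, such as 8k to 16k, the formula gives (0.25 * 4) + 2 = 3.0. That goes beyond the two measured points from a 4k base, so treat it as a starting guess rather than a tested value.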

References and Supplementary Links

https://www.reddit.com/r/SillyTavernAI/comments/1anku9d/thoughts_about_roleplaying_system_promptsan/

https://www.reddit.com/r/SillyTavernAI/comments/1d08xvq/comment/l5lpm2d/

https://artefact2.github.io/llm-sampling/index.xhtml

https://gist.github.com/kalomaze/4473f3f975ff5e5fade06e632498f73e

https://gist.github.com/kalomaze/4d74e81c3d19ce45f73fa92df8c9b979

https://github.com/oobabooga/text-generation-webui/wiki/04-%E2%80%90-Model-Tab

https://github.com/oobabooga/text-generation-webui/pull/5677