DSR enhanced security and "formal verification" course

C builds the foundation of the free world!

Note: C is the only 'human understandable' and processor-independent programming language that is close to machine code, so you don't have to learn x86-64, AArch64 or RISC-V 64-bit assembler. That's also why C will never go away!

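Famous programs written in C are: Linux, Minix, seL4, the GNOME GTK desktop, GNOME Builder, GIMP, CPython, Lua, LuaJIT, TCC, QuickJS, Graphviz ... The GCC compiler suite also started out in C (it has been built as C++ since GCC 4.8), and CompCert is a formally verified C compiler, itself written in Coq and OCaml.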

- C builds the foundation of the free world!

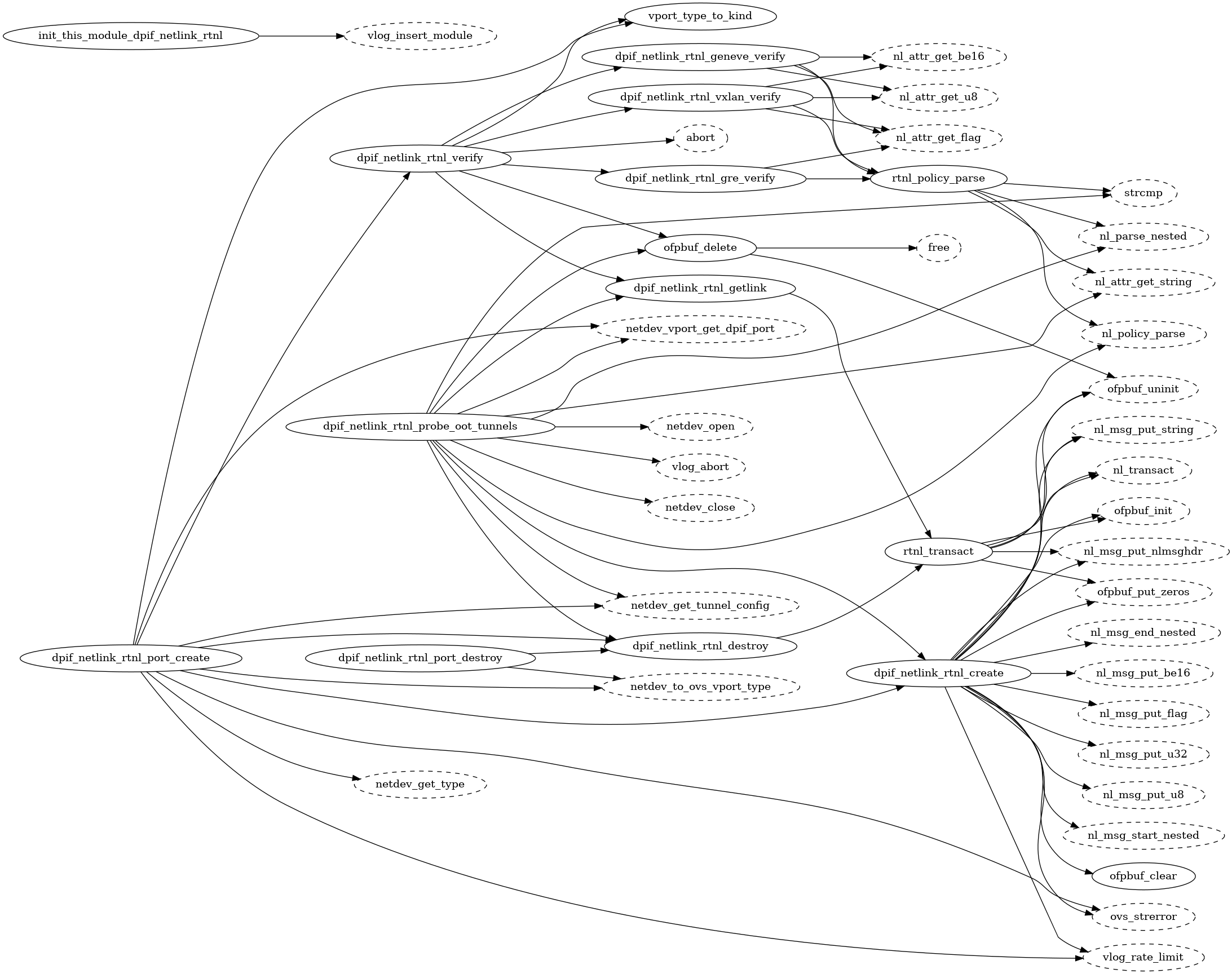

- A simple C program and its "call graph"

- How can this be done with "printf debugging"?

- Working in Gnome Builder

- Generated machine code x86 assembler

- What are we seeing here?

- What are these "movq" and "movl" instructions?

- How fast are computers and how can I tune them?

- Algorithmica.org - best homepage for modern algorithms of all time!!!

- How complex is it to write a webserver in C?

- How do I know if my code is secure?

- Why I Love Using Vim To Write Code

- Say hello to KCachegrind

- How to generate your own call graph from C sources

- What's the System V Unix ABI (Application Binary Interface)?

- All you need to know about the Intel/AMD x86-64 architecture

- The "billion dollar mistake" undone

- Analyzing Linux "System.map"

- Fabrice Bellard's artwork

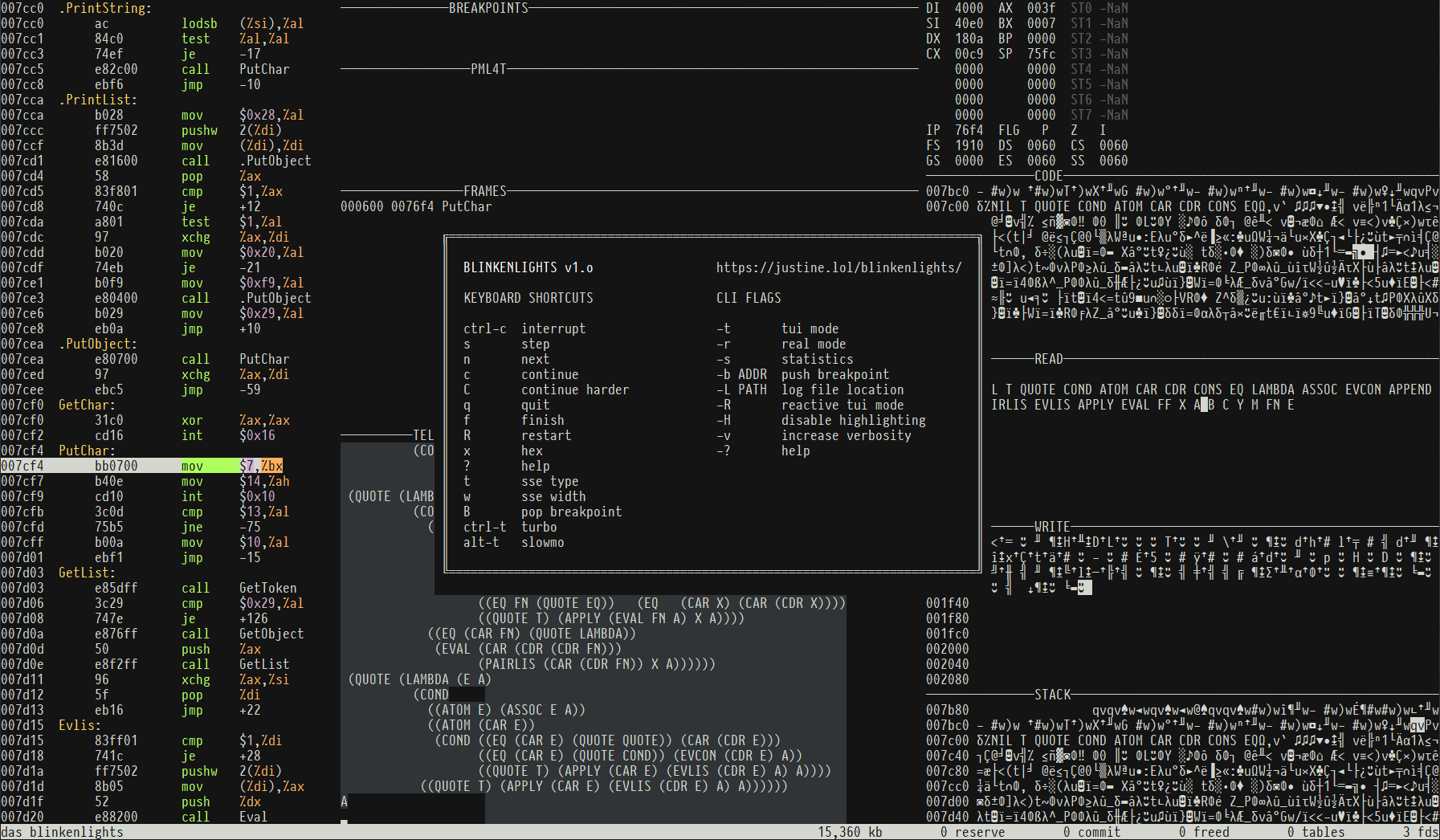

- Justine Tunney's artwork

- Lots of brackets - what's the idea behind "bra" and "ket"?

- The "movfuscator"

- Gource, see how your project advanced over time

- Self hosted languages, no longer implemented in C

- Programmer's thoughts for the weekend ...

A simple C program and its "call graph"

How can this be done with "printf debugging"?

"The most effective debugging tool is still careful thought, coupled with judiciously placed print statements."

— Brian Kernighan, "Unix for Beginners" (1979)

How would you transform this into Graphviz format?

https://graphviz.org/doc/info/command.html

https://www.graphviz.org/pdf/dotguide.pdf

How would you toggle or remove these lines with sed?

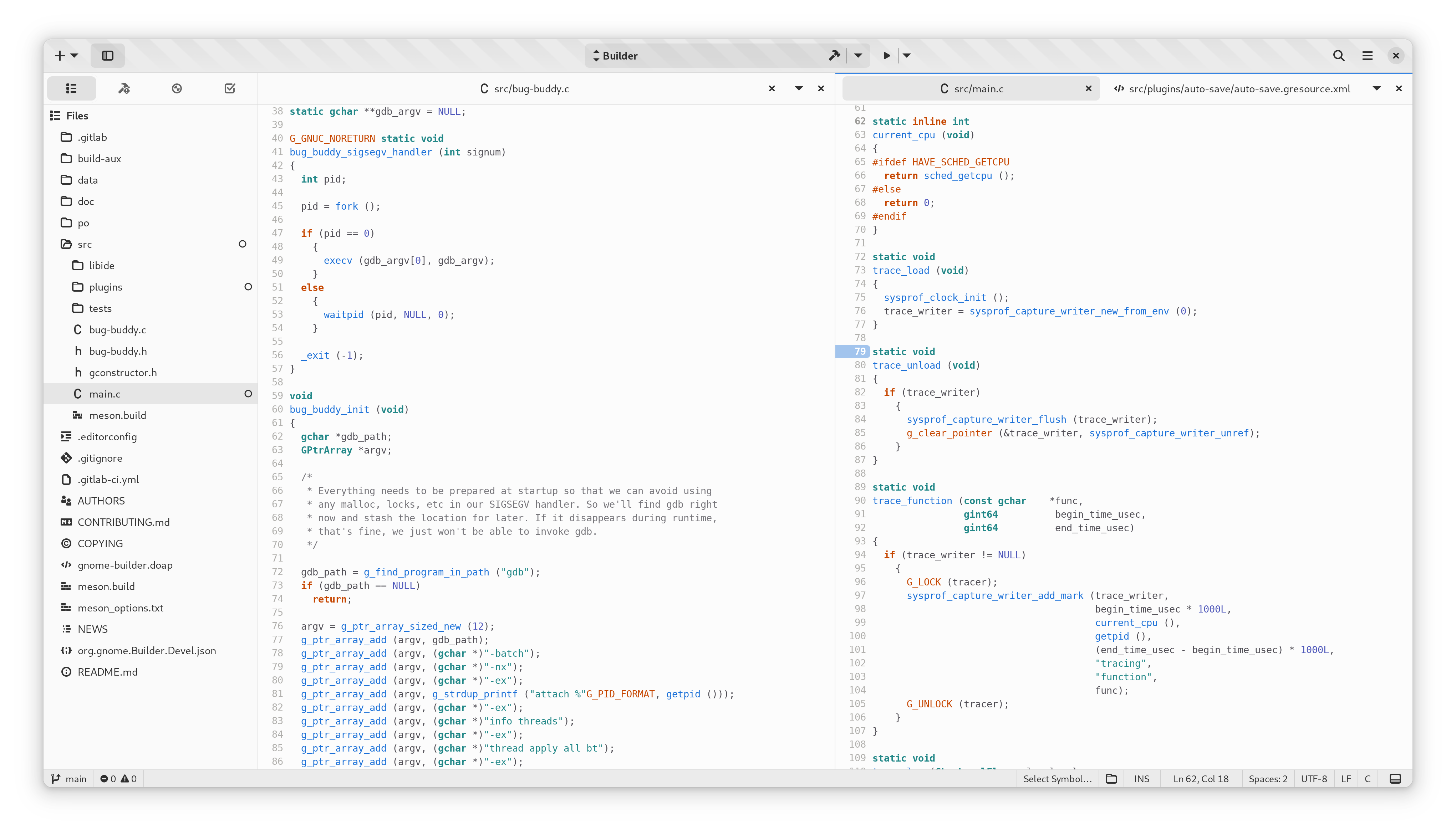

Working in Gnome Builder

Made by Christian Hergert and these were his motivations: https://wiki.gnome.org/ChristianHergert/Builder

A simplistic IDE

A simple IDE that makes writing applications that require the following dead simple:

- Serialization of Data Objects to SQLite or DQLite

- Serialization of objects to JSON

- Adding plugins to your application in additional languages

- Writing applications in C, Vala, JavaScript, or Python

- Adding objects to your project in any of the above languages

- Writing documentation for your project

- Writing help files for your project

- Translating your project

- Handling building your project with automake

- Communicating over DBus to a Daemon (better use Unix IPC!)

- Making your program listen on DBus

- Generating release builds of your project (make dist)

- Profiling your application (integration with Perfkit)

- Debugging your application (integration with Nemiver/GDB)

- Checking that your application maintains ABI (for C and Vala)

- Find memory leaks using Clang (better use GCC -fanalyzer!)

- Integrated documentation from Devhelp, show as you type. (use Unix man pages!)

- Adding settings to your application with GSettings

- glib-genmarshal, glib-mkenums support

- Status of files in Git.

What's missing is what the new GCC -fanalyzer can do for you:

https://developers.redhat.com/articles/2024/04/03/improvements-static-analysis-gcc-14-compiler

Generated machine code x86 assembler

Converted with: https://www.codeconvert.ai/assembly-code-generator

What are we seeing here?

First, let me note something: one important register of the CPU is rarely mentioned explicitly in the assembler listing. It's the instruction pointer (IP, called RIP on x86-64), which holds the address of the next instruction to be executed. The CPU advances it automatically after each instruction, and jumps, calls and returns overwrite it. (It does show up in RIP-relative addressing such as "leaq .LC0(%rip), %rdi".)

On a 64-bit machine, memory is divided up by the MMU into protected areas: kernel space and user space. Every process gets its own virtual address space. That space is again divided into segments: the text (code) segment, the data and BSS segments for global and static variables, the heap, and the stack with one frame per active function call. Virtual machines, containers and nested containers add further layers of isolation on top. Programming languages mirror this with scopes, namespaces, and global or local variables, which all come with their own individual rules and limitations.

In addition, every process inherits a set of environment variables, which often have to be set. You might know them from bash or Python programming (e.g. $PATH or $HOME). Sometimes such values are "hard coded" instead of being read from the environment, which causes many troubles. What resources a process is allowed to use (memory, open files, CPU time ...) is not stored in environment variables, though. It is stored in resource limits kept by the kernel (see ulimit and setrlimit()).

Whenever the instruction pointer jumps into another function, there are strict rules to fulfill. On x86-64 Unix systems they come from the System V AMD64 ABI calling convention (on 32-bit i386 the corresponding convention is called CDECL). Tools such as the GDB debugger and ptrace are adapted to these conventions. The ABI defines:

- which registers carry the arguments and the return value,
- which registers the called function may overwrite (caller-saved),
- and which ones it must restore before returning (callee-saved), so that the caller finds its own state intact when control jumps back.

Back again to the code: What is that strange "pushq %rbp"?

rbp is the frame pointer (also called base pointer) on x86-64. Local variables and function parameters are addressed relative to it, with small constant offsets such as -8(%rbp). The names of the 64-bit registers all begin with an "r". 32-bit code for the 80386 ("i386") and 80486 processors uses the ebp frame pointer; the 32-bit register names all begin with an "e" (for "extended"). The 16-bit 8086 CPU only has "bp", without the "e".

In the generated code, rbp gets a snapshot of the stack pointer (rsp). Later adjustments to rsp (e.g. reserving space for local variables or pushing values onto the stack) therefore don't disturb it, and local variables and function parameters stay accessible at a constant offset from rbp.

For more, read: https://files.osdev.org/mirrors/geezer/osd/libc/index.htm

A typical Assembler fragment:

What are these "movq" and "movl" instructions?

These are variations of the more general mov instruction. It copies data between registers, or between a register and memory, or stores a constant. The instruction is commonly used for tasks such as copying values, initializing variables, and passing arguments to functions.

The movb instruction moves a single byte, movw moves a 16-bit word, the *movl instruction* moves 32 bits (4 bytes), and the movq instruction moves a quad word (4 words of 16 bits = 64 bits or 8 bytes) from the source operand to the destination. Indirect addressing (like dereferencing a pointer in C) is often used for the memory operand.

What does movq (%rsp), %rsp do here with the assembly stack pointer?

This particular instruction grabs the quadword pointed to by the current stack pointer, and loads it into the stack pointer, overwriting it. The syntax with (%rsp) is called indirect addressing.

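How long a mov takes depends on the CPU. In the simplified, non-pipelined CPU model used by older assembly textbooks, the mov instruction takes between five and eleven cycles. The exact number depends on its operands and their alignment (starting address) in memory. For a "mov memory, reg" instruction, for example, the CPU first fetches the instruction byte from memory (one clock cycle), and so on. On a modern x86-64 core, many register-to-register movs are eliminated during register renaming ("move elimination"). A load that hits the L1 cache takes about 4 to 5 cycles, and many instructions overlap in the pipeline.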

I highly recommend reading the "cheatsheet" and going through the tutorial:

https://cs.brown.edu/courses/cs033/docs/guides/x64_cheatsheet.pdf

https://cons.mit.edu/fa18/x86-64-architecture-guide.html

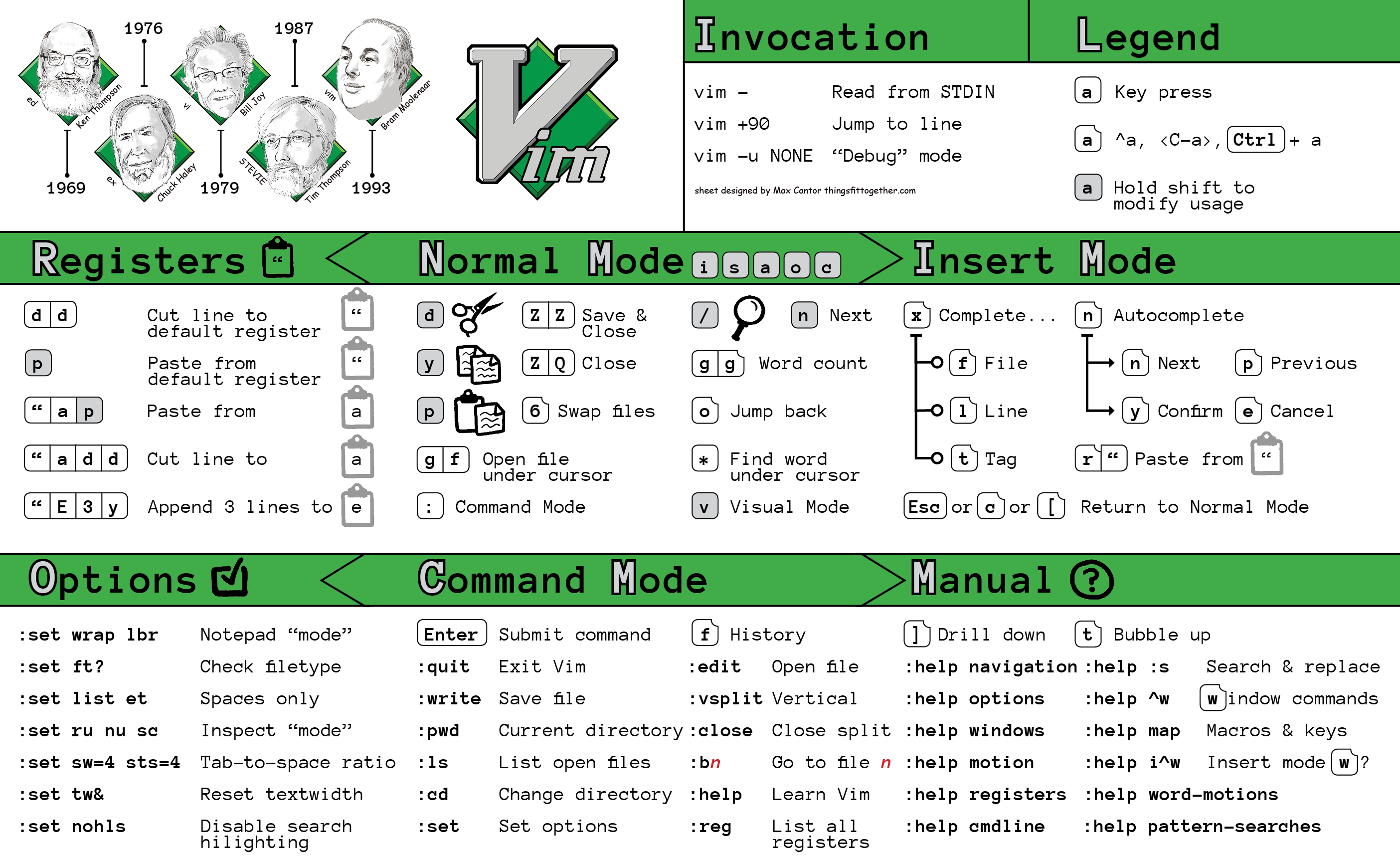

Are there cheat sheets for Python, C, Bash, Awk, Sed and Vi programming as well?

Python: https://automatetheboringstuff.com/#toc

C: https://www.codewithharry.com/blogpost/c-cheatsheet/

Bash: https://devhints.io/bash

Awk: https://linux-audit.com/cheat-sheets/awk/

Sed: https://gist.github.com/ssstonebraker/6140154 and https://quickref.me/sed.html

Vi: https://devhints.io/vim

Neovim has the same capabilities as VS Code: https://neovim.io

Neovim setup for Python: https://www.playfulpython.com/configuring-neovim-as-a-python-ide/

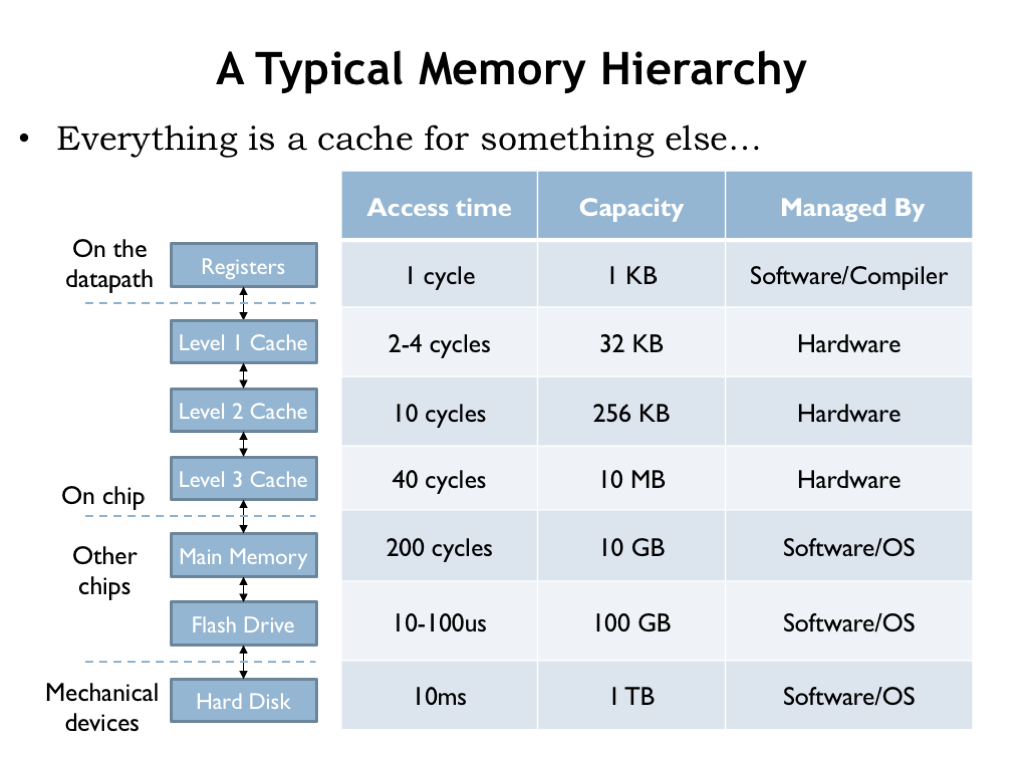

How fast are computers and how can I tune them?

From: https://computationstructures.org/lectures/info/info.html

From this you can see that keeping everything (data and code) in the 1st level cache can dramatically improve performance. That cache is typically 32 KB per core (48 KB on some newer cores). So carefully choose your algorithm, reduce code size and operate on small amounts of data at a time. Divide up "BIG-DATA" into small packages of small data! Let a second processor core (or thread) preload data from the SSD or hard drive in the meantime. You might have noticed that many performance-critical Python modules are implemented in C or C++ under the hood (NumPy, for example). Why? Compiled C code is compact and, due to its nature (close to assembler), mindblowingly faaaaast!

What algorithms to choose best?

- In general: Use "branchless" computation algorithms

- Use jump tables

- Use register variables for inner loops

- Always keep the 4 ALUs/core filled

- Split up tasks so that each one fits into the 32 KB 1st level cache

- Avoid all kinds of locking, choose lock-free algorithms

- Use wait-free algorithms, let idle threads help others

- When fetching more data from DDR5 RAM, think about RAS and CAS latencies

- Use CXL: Directly connect your motherboards over PCIe 5.0 cables for AI

- Make sure that you can pull data in parallel over all 12 memory channels (roughly 25-50 GB/s each) of a typical EPYC server, to use the full theoretical bandwidth

- Use fastest PCIe 5.0 NVMe 2.0 drives as swap

- Use the LMAX "disruptor pattern"

- Use event-driven algorithms

- Use the highly flexible Entity Component System (ECS pattern) from the game industry

- Use CQRS

- Use virtual memory addressing (memory-mapped files with mmap(), as LMDB does) with Big Data

- Recompile with collected profiler information

- Always adapt your algorithms

https://martinfowler.com/articles/lmax.html

https://en.wikipedia.org/wiki/Memory_timings

https://en.wikipedia.org/wiki/CAS_latency

https://people.freebsd.org/~lstewart/articles/cpumemory.pdf

https://docs.kernel.org/driver-api/cxl/memory-devices.html

https://www.phoronix.com/review/crucial-t705-linux/2

https://www.flecs.dev/flecs/

https://en.wikipedia.org/wiki/Command_Query_Responsibility_Segregation

http://www.lmdb.tech/doc/

https://ftp.gnu.org/old-gnu/Manuals/gprof-2.9.1/html_mono/gprof.html

Algorithmica.org - best homepage for modern algorithms of all time!!!

Algorithmica.org is the most advanced collection of modern algorithms for the massively parallel "multicore" computing age. Donald E. Knuth's famous books https://en.wikipedia.org/wiki/The_Art_of_Computer_Programming use the MIX/MMIX assembly languages. On Algorithmica, by contrast, the algorithms are described, implemented, and even benchmarked in C and C++:

Example: the S-tree, blindingly fast, even faster than Google's famous Abseil B-tree (absl::btree): https://en.algorithmica.org/hpc/data-structures/s-tree/

Google's famous Abseil hash table (Swiss table): https://youtube.com/watch?v=JZE3_0qvrMg

Compare to Strager's Hash benchmark - 340 million RPS! https://youtube.com/watch?v=DMQ_HcNSOAI&t=32m20s

All about CPUs: https://computationstructures.org

The one and only: The C programming language - by Kernighan and Ritchie:

https://colorcomputerarchive.com/repo/Documents/Books/The%20C%20Programming%20Language%20(Kernighan%20Ritchie).pdf

Also watch (highly recommended):

Jacob Sorber: Understanding and implementing a Hash Table (In C, of course!): https://youtube.com/watch?v=2Ti5yvumFTU

10x Faster than Rust and C++ libraries: the PERFECT hash table:

Strager's excellent analysis and tuning: https://youtube.com/watch?v=DMQ_HcNSOAI

Second best Algorithm homepage:

https://www.partow.net/programming/hashfunctions/

How complex is it to write a webserver in C?

Not very! C and especially the Unix environment provide you with everything you will ever need. Every Unix comes with its documentation installed on your hard drive: manual pages for the Unix commands as well as for the system calls and C library functions. Here you can watch a programmer using these preinstalled man pages for:

"Making minimalist web server in C": https://youtube.com/watch?v=2HrYIl6GpYg

https://github.com/nir9/welcome/blob/master/lnx/minimalist-web-server/server.c

Why is it so complicated in C, with "bind", "listen" and "accept"? With "bind" you attach the socket to a local address and port, so the kernel knows which incoming connections belong to your program. With "listen" you turn it into a passive listening socket. Its backlog argument tells the kernel how many connections may wait in its queue until your program accepts them. The handshakes are done by the TCP/IP stack in the kernel, and no thread is started per connection. And finally "accept": it takes the next connection from that queue (waiting until one arrives) and returns a new file descriptor. You read the request from it and write the answer to it. Note that in this example the program exits after one GET request, so you have to wrap it into a while (true) { ... } endless loop. Inside that loop you should either use threads or simply fork() the process for each connection.

On Linux the "old-fashioned" forking (most "experts" erroneously think so!) is cheap because of copy-on-write. The child does not get a physical copy of the memory. Thanks to the MMU, the same physical pages are mapped into both virtual address spaces, and a page is only copied when one of the processes writes to it. The program code is never written to, so it stays shared in memory, whether 1 or 1000 child processes are running. Copying the page tables still costs some CPU cycles, but far fewer than copying the memory would. (KSM, Kernel Samepage Merging, is a different mechanism: it merges identical pages that applications mark as mergeable, and is mainly used for virtual machines.)

And here is the generated assembler for the minimalist web server:

How do I know if my code is secure?

That's what the GCC -fanalyzer option is for. The analyzer searches for many common bugs, especially those that typically happen to beginners: NULL dereferences, memory leaks, double free, use after free and more. It performs a very complex, path-sensitive analysis and brings C code, typically known to be critical, much closer to the safety of Rust code:

It does part of what a "formal verifier" or a security code reviewer typically does. Unlike a formal verifier, however, it cannot guarantee that all bugs have been found. Unluckily, the analyzer is not yet ready for C++ code! :-(

Why I Love Using Vim To Write Code

You can't forget the commands! It's intuitive human language. You can build complete sentences, with subject, predicate, object, attributes and even relative clauses, that describe exactly what you intend to do. Watch this eye-opener:

https://youtube.com/watch?v=wlR5gYd6um0

Say hello to KCachegrind

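"printf debugging" only shows you the calls you instrumented by hand. Callgrind (valgrind --tool=callgrind) records every executed call without touching the source, and KCachegrind shows them all, together with their costs.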

Excellent tool for analysis!

How to generate your own call graph from C sources

https://github.com/chaudron/cally

What's the System V Unix ABI (Application Binary Interface)?

https://wiki.osdev.org/System_V_ABI#x86-64

All you need to know about the Intel/AMD x86-64 architecture

https://web.stanford.edu/class/archive/cs/cs107/cs107.1196/guide/x86-64.html

https://cons.mit.edu/fa18/x86-64-architecture-guide.html

The "billion dollar mistake" undone

You might have heard about it: https://www.infoq.com/presentations/Null-References-The-Billion-Dollar-Mistake-Tony-Hoare/

In C you have "Null References" everywhere! Often a pointer cannot be set to point to anything meaningful yet, because the information is not (yet) available, so it starts out as NULL. This happens mostly in JIT engines, but also in databases, video players, "gif" animation routines or when loading a binary module with dlopen(). Worse, a local pointer declared without an initializer does not even hold NULL but an indeterminate garbage value; only static and global pointers start out as NULL. Sometimes pointers are declared as void pointers and cast later in the code to fulfill their purpose. So null pointers generally can't be avoided.

Programming language makers who claim to have solved this problem with their "secure language" are liars. We will always need runtime checks in computing!!!

Very often we have so-called "dangling pointers". They point to nothing useful, or to a structure that does not correspond to their type, or to memory that has already been released with free(). For large blocks, free() may in turn return the memory to the kernel with munmap(). Using such released memory is the so-called "use after free" problem:

Look, it's a nightmare: https://cve.mitre.org/cgi-bin/cvekey.cgi?keyword=use+after+free

You can only find those bugs with a thorough "code review" by C experts, or with better concepts such as those of the Rust programming language: "ownership" together with a "borrow checker" and "lifetimes".

Rust has a user forum to discuss those problems there: https://users.rust-lang.org/t/dereferencing-raw-pointer-causes-use-after-free/72876/4

Ownership: https://doc.rust-lang.org/1.8.0/book/ownership.html

Borrowing: https://doc.rust-lang.org/1.8.0/book/references-and-borrowing.html

Lifetimes: https://doc.rust-lang.org/1.8.0/book/lifetimes.html

Luckily, GCC is able to find many of those bugs (and a whole lot more!) and warn you:

https://gcc.gnu.org/onlinedocs/gcc/Static-Analyzer-Options.html#index-fanalyzer

Of course C, as a multi-paradigm language close to assembler, can emulate all those concepts. We'll discuss them in detail later.

Analyzing Linux "System.map"

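The file "System.map" is the symbol table of a Linux kernel build. Each line lists an address, a symbol type and a symbol name. The types are the letters printed by nm, e.g. "T" or "t" for code in the text section and "D" or "d" for data. The file is used e.g. to translate raw addresses in kernel crash messages into function names. Unlike a header.h declaration file for the GCC or TCC C compiler, it does not record types or arity. It lists the functions and variables inside the Linux kernel, including the system call entry points (names containing e.g. sys_openat or sys_socket). The POSIX C library functions such as fopen() and fclose() live in user space in libc, so they are not listed there. (POSIX itself was revised in 2024, btw.)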

https://raw.githubusercontent.com/raspberrypi/firmware/master/extra/System.map

Now you can ask e.g.: "How many sort functions are there in the Linux kernel?"

Simply open the above file in your browser and type "sort" into the "Find in page" search box. The browser counts the matches, i.e. every symbol name containing "sort", including data symbols:

It's 28!

Wasn't that easy?

Fabrice Bellard's artwork

Fabrice Bellard's QEMU, and User Mode Linux

He is the original author of the machine emulator and virtualizer QEMU and of its Tiny Code Generator (TCG). KVM uses QEMU for device emulation:

https://en.wikipedia.org/wiki/QEMU

https://en.wikipedia.org/wiki/Kernel-based_Virtual_Machine

Also see: https://play.google.com/store/apps/details?id=com.cuntubuntu&hl=de

and Colinux: http://www.colinux.org/

The idea is similar to User Mode Linux (UML). There you compile Linux (ARCH=um) without hardware drivers as an ordinary program, and it hands system calls such as open() over to the underlying host kernel.

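Alpine Linux can be run this way too, here inside a Docker container. Note that Docker containers themselves do not use UML: they share the host kernel via namespaces and cgroups.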

https://gist.github.com/Lakshmipathi/7cb1b1074b84445d27ce3196a3658c1f

Linux Server running in browser

https://bellard.org/jslinux/vm.html?url=alpine-x86.cfg&mem=192

Within it you can use Linux commands such as bash, awk, sed, tr, uniq, more, ls, tcc, strip, ldd, objdump, vi ... You can also use tcc as a "just in time" compiler: tcc -run compiles into memory and runs the result immediately. That way you can run scripts written in C instead of bash scripts, and even write your build scripts in C instead of CMake files.

C as a scripting language

#!/usr/local/bin/tcc -run

https://wiki.gentoo.org/wiki/Tcc#Inability_to_run_tcc_as_a_script

https://ciesie.com/post/tinycc_dynamic_compilation/

QuickJS is Fabrice Bellard's own JavaScript engine (comparable to V8, the engine inside Node.js)

It has remarkable features that even Node.js does not have:

QuickJS is a small and embeddable Javascript engine. It supports the ES2023 specification including modules, asynchronous generators, proxies and BigInt.

It optionally supports mathematical extensions such as big decimal floating point numbers (BigDecimal), big binary floating point numbers (BigFloat) and operator overloading.

Main Features:

- Small and easily embeddable: just a few C files, no external dependency, 210 KiB of x86 code for a simple hello world program.

- Fast interpreter with very low startup time: runs the 76000 tests of the ECMAScript Test Suite in less than 2 minutes on a single core of a desktop PC. The complete life cycle of a runtime instance completes in less than 300 microseconds.

- Almost complete ES2023 support including modules, asynchronous generators and full Annex B support (legacy web compatibility).

- Passes nearly 100% of the ECMAScript Test Suite tests when selecting the ES2023 features. A summary is available at Test262 Report.

- Can compile Javascript sources to executables with no external dependency.

- Garbage collection using reference counting (to reduce memory usage and have deterministic behavior) with cycle removal.

- Mathematical extensions: BigDecimal, BigFloat, operator overloading, bigint mode, math mode.

- Command line interpreter with contextual colorization implemented in Javascript.

- Small built-in standard library with C library wrappers.

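It is not a drop-in replacement for Node.js, though: npm packages that depend on Node's own APIs do not run unmodified, and TypeScript has to be compiled to JavaScript first.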

Justine Tunney's artwork

Blinkenlights is a complete PC debugging environment

It combines an x86-64 emulator with a visual debugger, so for debugging it can stand in for an emulator like QEMU and a debugger like GDB at the same time:

Here you can see it live: https://justine.lol/blinkenlights/

A lambda calculus engine in 550 lines ...

It's a functional programming language with what a modern language needs, e.g. tail recursion and a garbage collector. It fits in only 550 lines, which even include the documentation, a manual and tiny examples: https://justine.lol/lambda/

It's a Church-Krivine-Tromp machine, a stack-based abstract machine, reminiscent of the PUSH and POP instructions of the old x86 CPUs. That's why Justine Tunney needed so little code to make things work. The same goes for Fabrice Bellard's OTCC.

The three kinds of notations for stack machines

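Prefix: op *; op +; 7 push; 5 push; 6 push, i.e. * + 7 5 6, to calculate (7+5)*6 like (* (+ 7 5) 6) in Lisp

Infix: 7 push; op +; 5 push; op *; 6 push; <execute> to calculate (7+5)*6 like in C, where it is written (7 + 5) * 6. Infix needs brackets (or precedence rules), because 7 + 5 * 6 means 7 + 30 = 37

Postfix: 6 push; 7 push; 5 push; op +; op *, i.e. 6 7 5 + *, to calculate (7+5)*6 like in Forth, on HP calculators or on the x87 floating point unit of x86 CPUs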

These notations can easily be transformed into each other by regrouping operators and operands. That's what "the compiler" does: it brings the syntax of your "programming language of choice" into a form the CPU is capable of executing. That's also why OTCC, self-compiling btw., is so short, with only 2048 characters! Just as C has its calling convention (rules for the use of registers), stack machines have rules for how to feed them with operators and operands. One of them is called λ.

The prefix version is called "Polish notation", the postfix version "reverse Polish notation" and the infix version "algebraic notation", which we all know from math! As an "infix language", C comes very close to math notation, and many later languages adopted its syntax. That's why we speak of a whole "C family of languages".

And here you can also see why fully bracketed prefix notation, as in (* (+ 7 5) 6), is useful. Every bracket pair groups one operator with its operands, so no precedence rules are needed, and with the closing of the last open bracket the computation can start. Internally, Lisp stores such a list as a chain of CONS cells, each holding one element and a pointer to the rest of the list. Both Lisp and its dialect Scheme allow more than two operands, e.g. (+ 1 2 3 4), which gives the same result as (+ (+ 1 2) (+ 3 4)).

Getting rid of these (()((()(((() in Lisp

If those masses of (()((()()))) in Lisp disturb you: https://www.draketo.de/software/wisp

Understanding the idea of λ

Lambda Calculus with Graham Hutton - Computerphile: https://youtube.com/watch?v=eis11j_iGMs (Also see: https://en.wikipedia.org/wiki/Church–Turing_thesis)

Lambda Calculus: PyCon 2019 Tutorial (Screencast) with David Beazley:

https://m.youtube.com/watch?v=5C6sv7-eTKg

Sector Lisp within 512 bytes

Here's Justine's solution in 512 bytes (the size of a PC boot sector). It can run the 114-line lisp.lisp, a LISP interpreter written in LISP itself:

https://github.com/jart/sectorlisp

https://github.com/jart/sectorlisp/blob/main/lisp.lisp

Justine's "sed" interpreter

Lots of brackets - what's the idea behind "bra" and "ket"?

https://www.mathsisfun.com/physics/bra-ket-notation.html

It's a notation for handling operands, expectations and measured states up to very high dimensionality. A notation like < ... | ... > combines a "bra" < ... | on the left with a "ket" | ... > on the right into a single number, their inner product. Informally, the left side holds the expected values and the right side what we have measured or observed. Bra and ket stand in a dual (covariant and contravariant) relationship: a bra is the conjugate transpose of a ket.

In AI we train the left side, and after having done that we feed in the measured states and then we compare a multitude of states by building the vector or dot product:

https://en.wikipedia.org/wiki/Dot_product

What do the two vectors (0, 1) and (1, 0) have in common?

Their dot product is 0*1 + 1*0 = 0: nothing! The < | expectations on the left side didn't match the "observed states" | > on the right side. NPUs built into modern 2024 chips, such as the SpacemiT K1 on the Banana Pi BPI-F3 board, can compute dot products of very long vectors in almost no time. Training with BFloat16, then inference in INT8 only. Works perfectly!

https://youtube.com/results?search_query=Banana+pi+F3

I can feed the output value, the <scalar> of a previous vector comparison, into a new vector, then compare it with another vector, and so on:

Within e.g. PyTorch or TensorFlow, the framework builds a computation graph out of these cascaded <vector product> machines and computes the gradients automatically ("autograd" in PyTorch). That way the optimizer can bring down your error rate as quickly as possible. TensorFlow's "AutoGraph" additionally rewrites Python control flow into graph operations, and graph compilers such as XLA or torch.compile rearrange and fuse the graph for optimal performance.

You can export the optimized graph and its trained parameters from TF or PyTorch into the ONNX format.

That's what Nvidia is selling for plenty of money: Hot air! Welcome to the "age of AI".

The "movfuscator"

It converts a C program into 32-bit x86 mov instructions, and mov instructions only. None of the other x86 assembler instructions (see the Intel ISA) are used!

Stephen Dolan's paper "mov is Turing-complete", on which the movfuscator is based, shows why this is possible. A related idea is found in the documentation of Justine's Lambda engine: "The Lambda Calculus is a mathematical language with 1 keyword"

Imagine how much you could reduce the number of transistors on a chip that only has to execute mov. And the movfuscated programs still run unmodified on any normal Intel/AMD CPU!?

https://github.com/xoreaxeaxeax/movfuscator/

Surprisingly you can program even games with this "single instruction" compiler:

Q: What kind of execution engine is this? (Hint: It's not any of the stack based machines!)

The answer you find in: https://esolangs.org/wiki/List_of_ideas

Gource, see how your project advanced over time

https://youtube.com/watch?v=aZf2g__6DkY

Self hosted languages, no longer implemented in C

As you can see, e.g. OCaml has left its Caml C roots behind. Its compiler is now completely "self-hosted", i.e. implemented in OCaml itself; its runtime system is still written in C.

https://github.com/ocaml/ocaml/blob/trunk/asmcomp/riscv/emit.mlp

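The same happened to Go (since Go 1.5), Rust (whose first compiler was written in OCaml), Java (OpenJDK's javac compiler is written in Java) and C++ (Cfront was itself written in C++). Becoming self-hosted, i.e. implementing the programming language in itself, is a dream of most compiler builders.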

Programmer's thoughts for the weekend ...

Back to last lesson - https://rentry.co/DSRsecuritycoursepart10 Next lesson: https://rentry.co/DSRsecuritycoursepart12