DSR enhanced security and "formal verification" course

Fundamental ideas in algorithms make the world of now!

- The long history of the "0"

- AI math and logical reasoning

- What is the logical AND telling me?

- What is OR telling me?

- What is XOR telling me?

- What's the XNOR telling me?

- Therefore ∴ and because ∵

- Logical implications: → ⟹ ⊃ ⊢ ⊨

- What does P⇐Q mean?

- What does P⇔Q mean?

- What does P ¬ Q mean?

- What do ≜, ∀, ∃, ∧, ∨, ⇒, ⇔, ⇐ mean?

- What does + mean?

- What does relevance in a binary system mean?

- What does * in a binary system mean?

- What does the ÷ operator mean?

- What does - then mean?

- What does the dot product < · | • > mean?

- What does matrix multiplication A², A³ mean?

The long history of the "0"

“Zero has had a long history. The Babylonians invented the concept of zero; the ancient Greeks debated it in lofty terms (how could something be nothing?); the ancient Indian scholar Pingala paired Zero with the numeral 1 to get double digits; and both the Mayans and the Romans made Zero a part of their numeral systems. But Zero finally found its place around AD 498, when the Indian astronomer Aryabhatta sat up in bed one morning and exclaimed, "Sthanam sthanam dasa gunam" — which translates, roughly as, "place to place in ten times in value". With that, the idea of decimal based place value notion was born. Now Zero was on a roll: It spread to the Arab world, where it flourished; crossed the Iberian Peninsula to Europe (thanks to the Spanish Moors); got some tweaking from the Italians; and eventually sailed the Atlantic to the New World, where zero ultimately found plenty of employment (together with the digit 1) in a place called Silicon Valley.”

Dan Ariely: "Predictably Irrational: The Hidden Forces That Shape Our Decisions"

AI math and logical reasoning

AI is all about finding relationships ("repeating patterns") in data within a high-dimensional state space, and then reducing that state space step by step until you can conclude, with high probability: "It's a banana!" (yellow, longish, hand-sized, curved).

A couple of elementary math functions and logical symbols are used: ∧ (conjunction), ∨ (disjunction), AND, OR, XOR, NOT, +, -, *, ÷, %, the dot product < · | · >, and above all matrix multiplication.

Symbols used e.g. by TLA+: https://lamport.azurewebsites.net/tla/summary-standalone.pdf

Based on: https://en.wikipedia.org/wiki/Truth_table

Computer Science Metanotation talk by Guy Steele: https://youtube.com/watch?v=dCuZkaaou0Q&t=3060s

Slides: https://groups.csail.mit.edu/mac/users/gjs/6.945/readings/Steele-MIT-April-2017.pdf

Metanotation for the advanced: https://www.csl.sri.com/papers/csfw99/csfw99.pdf

Simple proof system in Hoare's Semantic Triple notation:

https://daltron.de/posts/hoare-prover/

What is the logical AND telling me?

AND tells us something about the "purity" of signals

| P | Q | AND | pure? |
|---|---|---|---|
| True | True | True | pure, keep! |
| True | False | False | throw away! |
| False | True | False | throw away! |
| False | False | False | throw away! |

In a (time) sequence, a group, or a set of information or "states": if there is even one "black sheep", throw away the whole lot! AND preserves the purity of a signal: "All or nothing!"
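The "all or nothing" reading can be sketched in a few lines of Python. This is only an illustration: the function name `is_pure` and the sample signals are made up for this example.

```python
def is_pure(signals):
    """Logical AND over a whole group: True only if every signal is True."""
    return all(signals)

clean = [True, True, True, True]
one_black_sheep = [True, True, False, True]  # hypothetical sample data

print(is_pure(clean))            # True
print(is_pure(one_black_sheep))  # False
```

A single `False` is enough to reject the whole group, exactly as in the truth table.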

What is OR telling me?

If there is at least some truth in a set of information (or states), keep it! Another interpretation is how well two people complement each other:

| P | Q | OR | match? |
|---|---|---|---|
| True | True | True | harmonic |
| True | False | True | either |
| False | True | True | either |
| False | False | False | no |

It can also be seen as "elimination of states": https://daltron.de/posts/hoare-prover/

Or simply as a 32/64-bit parallel disjunction: ∨
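Both readings of OR can be tried out in Python. The sketch below assumes nothing beyond the built-in `any()` and the integer `|` operator; the names and bit patterns are made up for illustration.

```python
def any_truth(signals):
    """Logical OR over a group: True if at least one signal is True."""
    return any(signals)

print(any_truth([False, False, True]))  # True
print(any_truth([False, False]))        # False

# Bitwise OR works on all bit positions of a machine word in parallel.
a = 0b1010_0000
b = 0b0000_0101
combined = a | b
print(f"{combined:08b}")  # 10100101
```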

What is XOR telling me?

| P | Q | XOR | change? |
|---|---|---|---|
| True | True | False | (no change) |
| True | False | True | (change) |
| False | True | True | (change) |
| False | False | False | (no change) |

It notifies you when there is a change in an information signal or flow! With this I can build a differential encoding to vectorize information, making e.g. the pixels in a convolution window independent of size (scale invariant, like zooming in on or out from an object) and also independent of the object's position on the x-y axes, which is called translation invariant. Also see scale invariance.

Let's take the famous MNIST example: a bigger or bold O has the same encoding as a smaller italic O. Here are the first lines of a convolution window scanning over a set of pixels, together with their "differential encoding". Repeated 0s, where nothing changes when shooting an arrow through from left to right, are deleted directly.

This means I can encode or recognize the letter "O" independent of its pixel size or its thinness/boldness. The rest you can do with a simple RegExp!
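One possible reading of this differential encoding is sketched here in Python. It is an interpretation rather than the author's actual code: the function names and the two tiny bitmaps are invented, and collapsing repeated neighbouring rows is an extra assumption made to handle vertical scaling.

```python
def row_changes(row):
    """XOR each pixel with its left neighbour: 1 marks a change, 0 no change."""
    return [a ^ b for a, b in zip(row, row[1:])]

def row_signature(row):
    """Drop the 0s (no change) and keep only the change markers."""
    return "".join("1" for bit in row_changes(row) if bit)

def shape_signature(image):
    """Signature per row; blank rows and repeated neighbouring rows are removed
    (the vertical collapsing is an assumption of this sketch)."""
    result = []
    for row in image:
        sig = row_signature(row)
        if sig and (not result or result[-1] != sig):
            result.append(sig)
    return result

small_o = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 0, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]

bold_o = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]

print(shape_signature(small_o))  # ['11', '1111', '11']
print(shape_signature(bold_o))   # ['11', '1111', '11']
```

Both bitmaps reduce to the same short signature, which could then be matched with a regular expression.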

This encoding allows your computer to recognize objects immediately, independent of their size or boldness. It can tremendously reduce the size of your artificial neural network, making it portable to tiny or even embedded machines.

Example for kids. Digit & Letter Recognition by https://snap.berkeley.edu/user?username=avi_shor

This little trick makes the new Chinese $50 Banana Pi F3 board, with the 8 cores of its SpacemiT chip, as capable as a 56-core Intel Xeon server running TensorFlow or PyTorch, even one accelerated by an NVIDIA card. Look at Tesla's "Full Self Driving computer", which is still in the "alpha" stage despite Elon Musk's billion-dollar investment in a new NVIDIA computing center: https://youtube.com/results?search_query=Tesla+fsd+fail+compilation

Mercedes Drive Pilot has been licensed for a year now on German roads, in cities and even on the Autobahn (130 km/h): https://youtube.com/watch?v=1gjweWq8qAc

Banana Pi F3 object recognition: https://youtube.com/watch?v=Ym-VcJgaGIY

It can do this and even play 9 1080p videos at 4.7 watts power consumption: https://youtube.com/watch?v=cHx1i--X1y4

Canon EOS booting Linux: https://youtube.com/watch?v=IcBEG-g5cJg

Casio cameras at 1000fps: http://arch.casio-intl.com/asia-mea/en/dc/ex_fh20/

Immersive experience of driverless Robotaxi in China's capital Beijing https://youtube.com/watch?v=NZLVjAjjCIg

What's the XNOR telling me?

Looking into https://en.wikipedia.org/wiki/Truth_table again:

| P | Q | XNOR | no change? |
|---|---|---|---|
| True | True | True | no change! |
| True | False | False | (change) |
| False | True | False | (change) |
| False | False | True | no change! |

It is the opposite of XOR: it is set to one when there is no change (in state or over time).
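As a small Python sketch (the function names are made up for illustration), XNOR is simply an equality test, and on whole machine words it marks the bit positions where two values agree:

```python
def xnor(p, q):
    """True when both inputs are equal, i.e. when nothing changed."""
    return p == q

def matching_bits(a, b, width=8):
    """Count the bit positions (within `width` bits) where a and b agree."""
    mask = (1 << width) - 1
    return bin(~(a ^ b) & mask).count("1")

print(xnor(True, True), xnor(True, False))    # True False
print(matching_bits(0b11110000, 0b11111111))  # 4
```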

Therefore ∴ and because ∵

The "because" symbol:∵

The "therefore" symbol: ∴

Example:

∵ All men are mortal.

∵ Socrates is a man.

∴ Socrates is mortal.

Logical implications: → ⟹ ⊃ ⊢ ⊨

What does e.g. P⇒Q mean?

From true facts (a "truthful statement"), true conclusions can be drawn.

"Ex falso quodlibet": from a falsehood, anything can be concluded.

x > y ⟹ x² > y² (implies), but is that always true? No: 2 > -3, yet 4 > 9 is false.

It has rained ⇒ the earth must be wet

It has rained ⇒ it's not possible that the earth is not wet

It hasn't rained ⇒ the earth can nevertheless be wet (for other reasons)

It hasn't rained ⇒ the earth can be dry
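The truth table of P⇒Q, and the search for a counterexample to the squares claim, can be sketched in Python. The helper name `implies` and the search range are choices made for this example only.

```python
def implies(p, q):
    """Material implication: false only when p is true and q is false."""
    return (not p) or q

# Full truth table of P => Q
for p in (True, False):
    for q in (True, False):
        print(p, q, implies(p, q))

# Search a small range for counterexamples to:  x > y  =>  x*x > y*y
counterexamples = [
    (x, y)
    for x in range(-3, 4)
    for y in range(-3, 4)
    if not implies(x > y, x * x > y * y)
]
print((2, -3) in counterexamples)  # True
```

One counterexample is enough to show that an implication does not hold in general.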

Propositions as Types - Computerphile

What does P⇐Q mean?

Can I conclude backwards that:

Can the earth be wet even though it has not rained? Yes, that can be true! (e.g. a cleaning robot at work)

Can the earth (e.g. somewhere under a car) be dry even though it has rained? Yes!

Can the earth (looking everywhere) be not wet if it has rained? No!

Can I conclude from it not having rained that the earth is still dry (somewhere)? Yes!

Is every fish a herring? No!

Is every herring a fish? Yes!

Is everything swimming in water a fish? No! (can be a whale!)

Do fish live in water? Yes!

Do whales live in water? Yes!

Can I conclude from this that whale and fish are identical? No!

Claim: "If pigs had wings, they could fly!" Your opinion?
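The herring, fish and whale questions above are subset questions, so they can be checked with Python sets. The extra species names are invented to fill the example.

```python
# Hypothetical mini "world" of animal kinds
fish = {"herring", "salmon", "shark"}
herring = {"herring"}
swims_in_water = fish | {"whale"}

print(herring <= fish)         # True:  every herring is a fish
print(fish <= herring)         # False: not every fish is a herring
print(swims_in_water <= fish)  # False: the whale swims but is not a fish
print("whale" in fish)         # False
```

`A <= B` asks whether "x in A" implies "x in B"; swapping the two sides gives the converse, which is a different question.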

What does P⇔Q mean?

One cannot be true without the other. (No human without god? Divinity?) Also see iff (if and only if!!!)

What does P ¬ Q mean?

The two states (or events) cannot both be true (or both happen) at the same time.

What do ≜, ∀, ∃, ∧, ∨, ⇒, ⇔, ⇐ mean?

Here "sometimes", "many times", "every time", "always" and "often" play an important role in determining under what conditions assumptions can be made:

The "equal by definition" symbol: ≜

The universal quantifier ∀ - in all situations, under all conditions, for all states and/or times

The existential quantifier ∃ - there exists a situation where

Conjunction ∧ - both events or states coincide

Disjunction ∨ - at least one of the events (states) happens (is true)

Negation ¬ - the opposite always happens (or is true)

Logically follows ⇒ - same conditions -> same results

Both mean the same ⇔ - same results? Then the conditions must have been the same

Can be concluded from ⇐ - when you reason back from the results to the conditions

Person killed => Person dead (always true!)

Person dead => must have been killed? No, could have been old!

Note that killing is an action and being dead is a state(ment).
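For finite collections, ∀ and ∃ map directly onto Python's built-in `all()` and `any()`. A minimal sketch with made-up sample values:

```python
readings = [3, 8, 5, 12]  # hypothetical sample data

for_all = all(x > 0 for x in readings)             # ∀x: x > 0
exists = any(x > 10 for x in readings)             # ∃x: x > 10
none_negative = not any(x < 0 for x in readings)   # ¬∃x: x < 0

print(for_all, exists, none_negative)  # True True True
```

The last line also shows the usual duality: "there is no x with x < 0" says the same as "for all x, x ≥ 0".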

What does + mean?

More equal states, more relevance! The more often an event occurs, the more relevant the number gets, linearly.

What does relevance in a binary system mean?

It's a convention: information (or state) further to the left is more important than information further to the right:

00010000 is more relevant than 00000100

From left to right: with each step further to the right, the relevance of the information halves.
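The weight of each position can be made visible with a short Python sketch; the helper name `place_values` is made up for this example.

```python
def place_values(bits):
    """Return the weight contributed by each set bit, most significant first."""
    n = len(bits)
    return [2 ** (n - 1 - i) for i, b in enumerate(bits) if b == "1"]

print(place_values("00010000"))  # [16]
print(place_values("00000100"))  # [4]
print(int("00010000", 2) > int("00000100", 2))  # True
```

Moving a bit one position to the right halves its weight; moving it one position to the left doubles it.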

What does * in a binary system mean?

It's the measure you get when comparing two differently weighted states, where events noted further left are more relevant than events noted further right:

Here I compare two states with more or less relevant information in them:

2ⁿ ... 2⁴ 2³ 2² 2¹ 2⁰

0000 1000 * 0000 0100 = 8 * 4 = 32 = 0010 0000

So here two weights have a (strengthening) crosswise impact on each other. That's what the result of a multiplication measures.

Having combined weights is also possible:

0000 1010 * 0000 0101 = 10 * 5 = 50 = 0011 0010
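The "crosswise" effect becomes visible when multiplication is written as shift-and-add: every set bit of one factor shifts the whole other factor to the left. A minimal Python sketch (the function name is made up) that reproduces both examples:

```python
def shift_and_add(a, b):
    """Multiply two non-negative integers using only shifts and additions."""
    result = 0
    shift = 0
    while b:
        if b & 1:                 # this bit of b is set ...
            result += a << shift  # ... so add a, moved left by its weight
        b >>= 1
        shift += 1
    return result

print(f"{shift_and_add(0b1000, 0b0100):08b}")  # 00100000
print(f"{shift_and_add(0b1010, 0b0101):08b}")  # 00110010
```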

What does the ÷ operator mean?

If multiplication means a crosswise strengthening impact, then division is the opposite: it has a weakening impact.

The bigger the divisor, the lower the quotient, and the further the initial dividend comes down. It's a quantized, weighted weakening operator.
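A short Python sketch of this weakening effect, using integer division on a sample value chosen for illustration:

```python
dividend = 0b0010_0000  # 32

for divisor in (2, 4, 8):
    quotient = dividend // divisor
    print(f"{dividend} / {divisor} = {quotient:2d} = {quotient:08b}")

# Dividing by 2**k is the same as shifting right by k places.
print(dividend >> 2 == dividend // 4)  # True
```

Each doubling of the divisor moves the set bit one more position to the right, into a less relevant place.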

What does - then mean?

It's a linear weakening operator, not weighted by halving its relevance from left to right. The more often a "counter-event" happens, the less relevant the number gets. It's force and counterforce, in opposite directions.

What does the dot product < · | • > mean?

It measures how much one linear list of distinguishable (weighted 2ⁿ ... 2⁰) states has in common with another linear list of different (weighted) states:

(0,4)·(5,0) = 0*5+4*0 = 0 -> the (measured) state on the right has nothing in common with the (precalculated, expected) state on the left.
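The same calculation as a Python sketch; the function name `dot` and the second pair of vectors are added for illustration.

```python
def dot(u, v):
    """Dot product: multiply matching positions and add everything up."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))

print(dot((0, 4), (5, 0)))  # 0  -> nothing in common
print(dot((0, 4), (0, 3)))  # 12 -> both have weight in the same position
```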

In quantum mechanics the two halves are called < · | (bra) and | • > (ket), which together form the bra-ket < · | • >

https://en.wikipedia.org/wiki/Bra–ket_notation

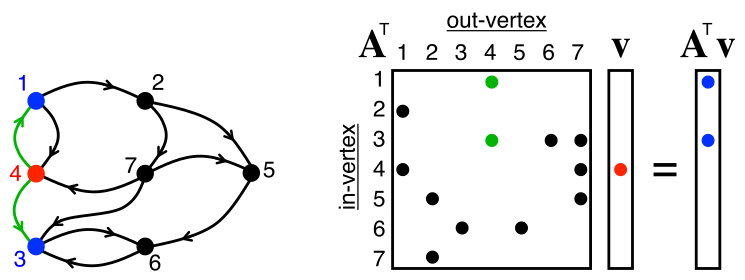

What does matrix multiplication A², A³ mean?

Also see https://en.algorithmica.org/hpc/algorithms/matmul/

In the last decade somebody noticed that there is a connection between graphs and matrices. A² counts the connections over one indirection (walks of two hops) and A³ those over two indirections (three hops), showing how people indirectly know each other, e.g. on LinkedIn or Xing. This makes it possible to find clusters in social networks.

It also made it possible to detect the "inner dependencies" within complex relationships, as AI does. While AI is based on Bayesian probability and tends toward the famous "hallucinations", using matrices to encode knowledge (e.g. adjacency matrices, RDF semantic triples) and backtracing by building A⁻¹, the inverse of matrix A, allows precise reasoning about facts. This is the new way of doing AI, without "hallucinations"!

The Applications of Matrices | What I wish my teachers told me way earlier

https://youtube.com/watch?v=rowWM-MijXU&t=15m48s

Introduction to GraphBLAS with Python

https://youtube.com/watch?v=JUbXW_f03W0

GraphBLAS discovers hidden relationships within big data

GraphBLAS operations are all operations on mathematical semirings that fulfill special properties. Also compare with: 'abelian group', 'rings', 'semifields', 'idempotency'
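To give a feeling for what "a different semiring" means, here is a sketch in plain Python (deliberately not the GraphBLAS API): the usual matrix product with + replaced by `min` and * replaced by +. This is the well-known min-plus semiring, which turns matrix multiplication into a shortest-path step. The weighted graph is invented for the example.

```python
INF = float("inf")

def min_plus(x, y):
    """Matrix 'product' over the (min, +) semiring instead of (+, *)."""
    n = len(x)
    return [
        [min(x[i][k] + y[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]

# Hypothetical weighted graph: INF means "no direct edge", 0 on the diagonal.
W = [
    [0,   4,   INF],
    [4,   0,   1],
    [INF, 1,   0],
]

W2 = min_plus(W, W)
print(W[0][2])   # inf: no direct edge from node 0 to node 2
print(W2[0][2])  # 5:   cheapest route with at most two hops (0 -> 1 -> 2)
```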

To the official homepage: https://graphblas.org/

Practical use of GraphBLAS or OpenBLAS

What building A² reveals

In the videos above you could see that multiplying a matrix by itself, i.e. building A² from a simple adjacency matrix, reveals something about its inner structure: hidden logical connections that cannot be seen otherwise. In the case of A² you see who is connected with whom over one indirection.

Watch! https://en.wikipedia.org/wiki/Adjacency_matrix

Calculating A³ reveals clusters in community structures or social networks

By computing A³ you see who is connected with whom over 2 indirections. With this you slowly begin to see e.g. community structures in your enterprise, just by looking at the internal mail metadata (logs of who communicates with whom), clearly showing who is relevant (or can be laid off). Extract the mail logs, give every employee a number (1-500) and then build the adjacency matrix.

Or use metadata logs provided by Twitter/X or Telegram, which by the way have become highly relevant for our secret services in finding "terrorist clusters" in social networks (or just a harmless community of people interested in ornithology, i.e. birds).
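Building A² and A³ from an adjacency matrix can be tried out in plain Python without any library. The four-person chain of acquaintances below is a made-up example, and `matmul` is a helper written for this sketch.

```python
def matmul(x, y):
    """Plain matrix product of two square lists of lists."""
    n = len(x)
    return [
        [sum(x[i][k] * y[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]

# Hypothetical chain of acquaintances: 0 - 1 - 2 - 3
A = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]

A2 = matmul(A, A)
A3 = matmul(A2, A)

print(A2[0][2])                     # 1: persons 0 and 2 are linked via person 1
print(A[0][3], A2[0][3], A3[0][3])  # 0 0 1: persons 0 and 3 need two indirections
```

Entry (i, j) of A² counts the two-hop walks from i to j, and entry (i, j) of A³ counts the three-hop walks, so the first power in which an entry becomes non-zero tells you how far apart two people are.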

Building neural networks with GraphBLAS

https://arxiv.org/abs/1708.02937

https://people.engr.tamu.edu/davis/GraphBLAS_files/HPEC19.pdf

No TensorFlow, no PyTorch, nothing!

So next time you look at those lines of code, you might think differently about what they mean:

https://github.com/AndrewCarterUK/mnist-neural-network-plain-c/blob/master/neural_network.c

https://github.com/karpathy/llm.c

https://github.com/karpathy/llama2.c

Have fun!

Back to last lesson - https://rentry.co/DSRsecuritycoursepart12 Next lesson: https://rentry.co/DSRsecuritycoursepart14