ComfyUI for A1111 retards

Introduction

This is meant to help retards who use A1111 to switch to Comfy and feel comfy. As such, I will use terminology from A1111 to help you get familiar. Note however that you should try and move away from it as it is often misleading and hinders your understanding of underlying mechanisms in the long run.

Installation

If you're a casual user, here you can find the comfyest way of installing an SD UI: either a Windows installer that guides you through the entire process, or a self-contained portable package.

If you're a power user, you don't need my help. Clone the repo, set up the venv, run main.py.

The orange button and UI basics

- In ComfyUI the orange button is called "Run" (1). You can queue as many runs as you want and go shitpost while they are executing (2). The runs will execute the state of the workflow as it was when you pressed that button, meaning you can keep making edits and queue them while genning.

- You can pan around by holding left mouse button in empty space and dragging the mouse. There are more camera controls in the bottom right corner, including a handy minimap (3).

- To get someone's workflow, just drag the image into the ComfyUI window. This also works on other types of metadata, like A1111, creating a basic analogous workflow out of it.

- ComfyUI does not execute unchanged parts of the workflow. This can be a huge time saver sometimes.

- Double click an empty space to search for nodes.

- Right click empty space -> "Add node" to look at the categorized list.

- Hold Ctrl to select multiple nodes or drag select.

- Hold Shift to move multiple nodes at once.

- Pasting nodes with Shift+Ctrl+V keeps their inputs.

- You can turn any input widget (like a text box or a number input) into an input for a corresponding noodle and back. This lets you, for example, give multiple nodes the same input without copy pasting every time you make a change.

- You can have several workflows open at the same time (or even the same one with different settings) in different tabs (4). The runs from all of them can be added to the queue, so you can work on several different gens or have a multi-step workflow with ease.

- Custom nodes make UI better, we'll get to that. ComfyUI Manager is the best way to get them (5).
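The "does not execute unchanged parts" point from the list above is easier to picture with a toy sketch. This is not ComfyUI's actual executor: `FUNCS`, `signature` and `run_node` are made-up names, and the only idea shown is "reuse a node's output when neither its settings nor anything upstream of it changed".

```python
import json

# Toy node implementations. All names here are hypothetical.
FUNCS = {
    "load": lambda name: f"model<{name}>",
    "encode": lambda model, text: f"cond<{model}|{text}>",
    "sample": lambda cond, seed: f"latent<{cond}|{seed}>",
}

cache = {}      # node id -> (signature, output)
executed = []   # log of the nodes that actually ran


def signature(workflow, node_id):
    """Describe a node by its function, its literal inputs and its upstream signatures."""
    func, inputs = workflow[node_id]
    parts = {k: signature(workflow, v[0]) if isinstance(v, list) else v
             for k, v in inputs.items()}
    return json.dumps([func, parts], sort_keys=True)


def run_node(workflow, node_id):
    sig = signature(workflow, node_id)
    if node_id in cache and cache[node_id][0] == sig:
        return cache[node_id][1]          # nothing changed upstream: reuse the output
    func, inputs = workflow[node_id]
    # An input written as [node_id] is a noodle coming from another node.
    args = {k: run_node(workflow, v[0]) if isinstance(v, list) else v
            for k, v in inputs.items()}
    out = FUNCS[func](**args)
    executed.append(node_id)
    cache[node_id] = (sig, out)
    return out


workflow = {
    "1": ("load", {"name": "some_checkpoint"}),
    "2": ("encode", {"model": ["1"], "text": "a cat"}),
    "3": ("sample", {"cond": ["2"], "seed": 1}),
}
run_node(workflow, "3")                   # first run: everything executes
first_run = list(executed)

executed.clear()
workflow["3"] = ("sample", {"cond": ["2"], "seed": 2})   # only the seed changed
run_node(workflow, "3")                   # second run: only the sampler executes
second_run = list(executed)
```

In the second run the loader and the prompt encoding come straight from the cache, which is the same reason re-queueing after a small tweak at the end of a big workflow is quick.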

txt2img

This process actually consists of multiple parts, which A1111 hides from you, but ComfyUI doesn't. Just drag an A1111 gen into the window to get a basic one. I'm gonna use a random one I saved. Here's a full image of the workflow with metadata stored in it, but I encourage you to try and build it out yourself to get some practice.

Loaders

What do these colorful noodles do?

- Model, CLIP, VAE - just plug them in wherever they are required. If a node has both an input and an output, chain them together. The observant among you will notice that this is how loras are loaded. Don't put them in your prompt, that's just an A1111 hack. Set both strengths to the same value - that's your lora strength.

Note

Contrary to what you might think from A1111's UI, you always use a VAE, but checkpoints include one which is used by default in A1111.

- Latent - this is the image you're generating, encoded in a special way to allow SD to do its magic. VAE is what encodes and decodes it. An empty latent (created by the corresponding node) is the starting point of txt2img workflows. This is also where you set the size of your gen.

- Finally, a CLIP Set Last Layer node is a more appropriately named CLIP SKIP. Just use whatever value you would in A1111 (except it's negative here). This is a good example of how a lot of A1111's settings boxes become nodes that are applied to the right part of the workflow.

- On a related note, v-prediction models like EasyFluff do not need a yaml to function. Simply plug in a ModelSamplingDiscrete node into the chain and set it to v-prediction sampling.
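For those who prefer reading a graph as text, here is a rough sketch of the loader chain described above, written as a Python dict in the shape ComfyUI uses for API-format workflow exports (`[node_id, output_index]` is a noodle). The file names are placeholders, `clip_skip_to_layer` is a made-up helper, and the input names are written from memory, so check them against your own export if anything looks off.

```python
def clip_skip_to_layer(a1111_clip_skip: int) -> int:
    """Hypothetical helper: A1111 'Clip skip: 2' becomes stop_at_clip_layer = -2."""
    return -abs(a1111_clip_skip)


lora_strength = 0.8  # one number, used for both strength fields

loaders = {
    # Outputs of the checkpoint loader: 0 = MODEL, 1 = CLIP, 2 = VAE
    "1": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "example_model.safetensors"}},
    # Only needed for v-prediction models; drop this node otherwise.
    "2": {"class_type": "ModelSamplingDiscrete",
          "inputs": {"model": ["1", 0], "sampling": "v_prediction", "zsnr": False}},
    # The lora sits in the chain: model and CLIP go in, patched model and CLIP come out.
    "3": {"class_type": "LoraLoader",
          "inputs": {"model": ["2", 0], "clip": ["1", 1],
                     "lora_name": "example_lora.safetensors",
                     "strength_model": lora_strength,
                     "strength_clip": lora_strength}},
    # CLIP skip, applied to the CLIP that already went through the lora.
    "4": {"class_type": "CLIPSetLastLayer",
          "inputs": {"clip": ["3", 1],
                     "stop_at_clip_layer": clip_skip_to_layer(2)}},
}
```

Everything downstream then takes its MODEL from node 3 and its CLIP from node 4 instead of going back to the checkpoint loader.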

Generation

Noodles:

- Conditioning - this includes everything that tells SD what to do. Prompts, controlnets, masks, local version of prompt editing.

- Image - this is the image in the pixel space, ready to be viewed on your monitor, saved on your disk and posted on vietnamese basket weaving forums.

Nodes:

- CLIP Text Encode - this is how you do prompts.

- KSampler - this is what does the actual generation. Has a lot of the familiar settings - seed, steps, CFG, sampler, denoise. Karras* is hidden under scheduler, because that's what it is. Set control_after_generate to "fixed" if you don't want to change the seed.

- VAE Decode - decodes the gen from latent space into pixel space, which is how all of your generations will end (stfu adetailers and upscalers, we'll get there). If you don't have enough VRAM it will automatically switch to the tiled version, which is slower but needs less memory. You can force this by using VAE Decode (Tiled), but I recommend testing whether this is actually faster for you.

- Save Image - self explanatory. There's also Preview Image which saves it in a temporary folder.

That's it. That's how txt2img works.
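The same txt2img graph can be written down as an API-format workflow, which is a handy way to check that you understand which noodle goes where. The sketch below is an assumption-laden illustration: `build_txt2img` and `queue_prompt` are made-up helper names, the checkpoint name is a placeholder, and `queue_prompt` assumes a default local server at `http://127.0.0.1:8188` accepting a POST to `/prompt`.

```python
import json
import urllib.request


def build_txt2img(positive, negative, seed=0, width=512, height=768):
    """Return a minimal txt2img graph; [node_id, output_index] is a noodle."""
    return {
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": "example_model.safetensors"}},
        "2": {"class_type": "CLIPTextEncode",          # positive prompt
              "inputs": {"text": positive, "clip": ["1", 1]}},
        "3": {"class_type": "CLIPTextEncode",          # negative prompt
              "inputs": {"text": negative, "clip": ["1", 1]}},
        "4": {"class_type": "EmptyLatentImage",        # gen size is set here
              "inputs": {"width": width, "height": height, "batch_size": 1}},
        "5": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["2", 0], "negative": ["3", 0],
                         "latent_image": ["4", 0], "seed": seed, "steps": 25,
                         "cfg": 7.0, "sampler_name": "euler_ancestral",
                         "scheduler": "karras", "denoise": 1.0}},
        "6": {"class_type": "VAEDecode",               # latent -> pixels
              "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
        "7": {"class_type": "SaveImage",
              "inputs": {"images": ["6", 0], "filename_prefix": "ComfyUI"}},
    }


def queue_prompt(workflow, server="http://127.0.0.1:8188"):
    """Send the graph to a running ComfyUI instance (not called in this sketch)."""
    data = json.dumps({"prompt": workflow}).encode("utf-8")
    request = urllib.request.Request(f"{server}/prompt", data=data,
                                     headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


workflow = build_txt2img("a cat sitting on a windowsill", "blurry, lowres")
```

Seven nodes, and every setting you know from the A1111 txt2img tab lives in exactly one of them.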

Prompting

Prompt weights work differently in ComfyUI than in A1111. The weights have way more impact, so you don't need to go crazy with them. Prompt without weights first, then add or subtract 0.1 for things that you want to (de)emphasize. I almost never have to go beyond 1.2. You can read about the difference in more depth here; there you can also grab a custom node that lets you use A1111-style weights.
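To get a feel for why the same number hits harder, here is a toy sketch of the two behaviours as they are commonly described. Treat both formulas as assumptions rather than code lifted from either project: `comfy_weight` and `a1111_weight` are made-up names, and the "embeddings" are tiny fake vectors.

```python
def comfy_weight(token, empty, weight):
    """ComfyUI-style (as commonly described): push the token embedding
    away from the empty-prompt embedding by the given weight."""
    return [e + (t - e) * weight for t, e in zip(token, empty)]


def a1111_weight(tokens, weights):
    """A1111-style (as commonly described): scale each token embedding,
    then rescale the whole prompt so its mean matches the unweighted one."""
    scaled = [[x * w for x in tok] for tok, w in zip(tokens, weights)]
    mean_before = sum(x for tok in tokens for x in tok) / sum(len(t) for t in tokens)
    mean_after = sum(x for tok in scaled for x in tok) / sum(len(t) for t in scaled)
    factor = mean_before / mean_after
    return [[x * factor for x in tok] for tok in scaled]


# Two fake 2-dimensional "token embeddings"; the second one gets weight 1.5.
tokens = [[1.0, 2.0], [3.0, 4.0]]
weights = [1.0, 1.5]
empty = [0.5, 0.5]          # fake embedding of the empty prompt

a1111_result = a1111_weight(tokens, weights)
comfy_result = comfy_weight(tokens[1], empty, weights[1])
```

In the A1111-style version the final rescale pulls everything back toward the original mean, so part of the emphasis is cancelled out and the unweighted tokens shrink a little. The ComfyUI-style version has no such correction, which is one way to see why small steps like 0.1 already do a lot.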

img2img

It's almost the same, except you take an Image (the blue noodle), plug it into a VAE Encode node and then plug the resulting Latent into the input of KSampler instead of the Empty Latent. That's it. Don't forget to adjust the denoise.
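As a rough sketch in the API-format dict shape, the swap described above looks like this, with a Load Image node standing in as the image source. File names are placeholders and the input names are written from memory, so treat them as assumptions and compare with your own export.

```python
img2img = {
    "1": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "example_model.safetensors"}},
    "2": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "a cat sitting on a windowsill", "clip": ["1", 1]}},
    "3": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "blurry, lowres", "clip": ["1", 1]}},
    # The image comes in as pixels...
    "10": {"class_type": "LoadImage",
           "inputs": {"image": "example.png"}},
    # ...gets encoded into a latent by the VAE...
    "11": {"class_type": "VAEEncode",
           "inputs": {"pixels": ["10", 0], "vae": ["1", 2]}},
    # ...and that latent replaces the Empty Latent on the sampler.
    "12": {"class_type": "KSampler",
           "inputs": {"model": ["1", 0], "positive": ["2", 0], "negative": ["3", 0],
                      "latent_image": ["11", 0], "seed": 0, "steps": 25,
                      "cfg": 7.0, "sampler_name": "euler_ancestral",
                      "scheduler": "karras",
                      "denoise": 0.5}},   # below 1.0, or the input image is ignored
    "13": {"class_type": "VAEDecode",
           "inputs": {"samples": ["12", 0], "vae": ["1", 2]}},
    "14": {"class_type": "SaveImage",
           "inputs": {"images": ["13", 0], "filename_prefix": "img2img"}},
}
```

Compared to txt2img, the only structural difference is that the sampler's latent input comes from VAE Encode instead of an Empty Latent node.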

Oh, where do you get the Image you ask? Use a Load Image node (put the image file in the "input" folder) or just use the one you got from VAE Decode in the previous section directly. Observe:

Here I also demonstrate built-in ways you can manipulate conditioning. In this case, I added some stuff to the negative prompt via a Conditioning (Concat) node. It also acts the same way the BREAK keyword does in A1111.

At this point you can test whether you're thinking like a real ComfyUI proompter by identifying a glaring mistake in the above workflow:

You can plug the latent directly into the second KSampler, no need to Encode or Decode in between. Not only are you wasting time, VAE operations are also lossy, meaning you lose information/details by doing them an extra time. You can still Decode the intermediate latent separately if you want to save or preview it.
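The fix can even be expressed mechanically. The sketch below works on an API-format style dict (other sampler inputs omitted for brevity) and uses two made-up helpers, `redundant_roundtrips` and `bypass`. It only catches the simple case where a VAE Encode is fed straight from a VAE Decode; if you do something to the pixels in between, the round trip is not redundant.

```python
def redundant_roundtrips(workflow):
    """Find (decode_id, encode_id) pairs where VAEEncode is fed directly by VAEDecode."""
    pairs = []
    for node_id, node in workflow.items():
        if node["class_type"] != "VAEEncode":
            continue
        source_id = node["inputs"]["pixels"][0]
        if workflow[source_id]["class_type"] == "VAEDecode":
            pairs.append((source_id, node_id))
    return pairs


def bypass(workflow, decode_id, encode_id):
    """Point consumers of the re-encoded latent at the original latent instead."""
    original_latent = workflow[decode_id]["inputs"]["samples"]
    for node in workflow.values():
        for name, value in node["inputs"].items():
            if value == [encode_id, 0]:
                node["inputs"][name] = list(original_latent)
    del workflow[encode_id]   # the decode node stays, so you can still preview


two_pass = {
    "5": {"class_type": "KSampler", "inputs": {"latent_image": ["4", 0]}},
    "6": {"class_type": "VAEDecode", "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
    "7": {"class_type": "VAEEncode", "inputs": {"pixels": ["6", 0], "vae": ["1", 2]}},
    "8": {"class_type": "KSampler", "inputs": {"latent_image": ["7", 0]}},
}

found = redundant_roundtrips(two_pass)
for decode_id, encode_id in found:
    bypass(two_pass, decode_id, encode_id)
```

After the rewiring, the second sampler reads the first sampler's latent directly, and the leftover VAE Decode is exactly the "decode separately for a preview" branch.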

"Hires fix"

It's the same thing as above, but with an Upscale latent node in between. Or Upscale Image (using model) if you want to use a model. Note that for the latter you will need to decode it into the pixel space (as evidenced by Image input noodle). You can then encode it again if you want to do another pass with KSampler at low denoise for example. Flexibility is the name of the game here.
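Both routes, sketched as API-format style fragments. Assumptions: node "5" is the first KSampler and nodes "1" to "3" are the checkpoint loader and the two prompt encodes from a basic txt2img graph, `scaled` and `ksampler` are made-up helpers, the upscaler file name is a placeholder, and the input names are written from memory.

```python
def scaled(size, factor):
    """Scale a dimension and round down to a multiple of 8."""
    return int(size * factor) // 8 * 8


def ksampler(latent_ref, denoise):
    """Second-pass sampler reusing model and prompts from nodes 1-3."""
    return {"class_type": "KSampler",
            "inputs": {"model": ["1", 0], "positive": ["2", 0], "negative": ["3", 0],
                       "latent_image": latent_ref, "seed": 0, "steps": 20,
                       "cfg": 7.0, "sampler_name": "euler_ancestral",
                       "scheduler": "karras", "denoise": denoise}}


base_width, base_height, factor = 512, 768, 1.5

# Route 1: stay in latent space the whole time.
latent_route = {
    "20": {"class_type": "LatentUpscale",
           "inputs": {"samples": ["5", 0], "upscale_method": "nearest-exact",
                      "width": scaled(base_width, factor),
                      "height": scaled(base_height, factor),
                      "crop": "disabled"}},
    "21": ksampler(["20", 0], denoise=0.5),
}

# Route 2: upscale with a model, which works on pixels, so decode first
# and encode again afterwards for the low-denoise pass.
model_route = {
    "30": {"class_type": "UpscaleModelLoader",
           "inputs": {"model_name": "example_upscaler.pth"}},
    "31": {"class_type": "VAEDecode",
           "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
    "32": {"class_type": "ImageUpscaleWithModel",
           "inputs": {"upscale_model": ["30", 0], "image": ["31", 0]}},
    "33": {"class_type": "VAEEncode",
           "inputs": {"pixels": ["32", 0], "vae": ["1", 2]}},
    "34": ksampler(["33", 0], denoise=0.3),
}
```

The denoise values are just starting points; the latent route usually needs a noticeably higher denoise than the model route to clean up the blur from latent scaling.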

Saving images to a custom path

Contrary to popular belief, you don't need any special custom nodes for this. The Save Image node accepts a parametrized path. For instance, "Gens/%date:yyyy-MM-dd%/%Load Checkpoint.ckpt_name%/Upscaled" will save the image as ComfyUI/output/Gens/<date>/<checkpoint name>/Upscaled_00001.png
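To make the placeholder syntax less magical, here is a small imitation of the expansion. ComfyUI does the real substitution itself; `expand_prefix` is a made-up function that only handles the two placeholder kinds from the example above (a date with `yyyy`, `MM`, `dd`, and `Node title.widget name`), and the widget values are passed in by hand.

```python
import re
from datetime import datetime


def expand_prefix(prefix, widgets, now):
    """Imitate the %...% expansion for the two placeholder kinds shown above."""
    def replace(match):
        token = match.group(1)
        if token.startswith("date:"):
            fmt = token[len("date:"):]
            # Translate the date tokens into strftime codes.
            for source, target in (("yyyy", "%Y"), ("MM", "%m"), ("dd", "%d")):
                fmt = fmt.replace(source, target)
            return now.strftime(fmt)
        # Otherwise treat it as "<node title>.<widget name>".
        node_title, _, widget_name = token.partition(".")
        return str(widgets[(node_title, widget_name)])

    return re.sub(r"%([^%]+)%", replace, prefix)


prefix = "Gens/%date:yyyy-MM-dd%/%Load Checkpoint.ckpt_name%/Upscaled"
widgets = {("Load Checkpoint", "ckpt_name"): "example_model"}   # placeholder value
expanded = expand_prefix(prefix, widgets, datetime(2024, 1, 31))
```

The part before the dot is the node's title as shown in the UI, so if you rename the node, the placeholder has to change with it.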

Custom nodes

This is a big topic, so I will just leave a couple of recommendations for now.

First of all, install ComfyUI Manager. It's fantastic and is how you get the other nodes. Whenever you see red nodes failing to load on someone else's workflow, you can now simply hit "Install Missing Custom Nodes" in the Manager menu and it will list everything you need (provided they are in the Manager's database, which all mainstream nodes will be).

You should mostly install things based on your needs to avoid bloat, but I broadly recommend:

rgthree node pack - improves execution model and has a ton of useful nodes, including more flexible Reroutes.

pythongosssss custom scripts - this boosts the UI in various ways

workspace manager - very useful if you plan to have more than 1 workflow

various prompt encoding methods - this was already mentioned in the prompting section, with it you can try different math for prompt weights, including switching to A1111 style to keep your existing prompts

prompt control/prompt editing - adds prompt editing (stuff like [red:blue:0.5]) syntax. Note that this is already possible with built in nodes (ConditioningSetTimestepRange for example).
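For the built-in route mentioned in the last item, something like `[red:blue:0.5]` can be approximated with two prompts, each limited to its own part of the sampling schedule and then combined. A rough sketch in the API-format dict shape; `prompt_edit_nodes` is a made-up helper, node "1" is assumed to be the checkpoint loader, and the input names are written from memory.

```python
def prompt_edit_nodes(before, after, switch_at, clip_ref):
    """Build nodes that use `before` until `switch_at` (0..1) and `after` from then on."""
    return {
        "40": {"class_type": "CLIPTextEncode",
               "inputs": {"text": before, "clip": clip_ref}},
        "41": {"class_type": "CLIPTextEncode",
               "inputs": {"text": after, "clip": clip_ref}},
        # First prompt is active from the start of sampling up to the switch point.
        "42": {"class_type": "ConditioningSetTimestepRange",
               "inputs": {"conditioning": ["40", 0], "start": 0.0, "end": switch_at}},
        # Second prompt takes over from the switch point to the end.
        "43": {"class_type": "ConditioningSetTimestepRange",
               "inputs": {"conditioning": ["41", 0], "start": switch_at, "end": 1.0}},
        # Combine both so the sampler receives a single positive conditioning.
        "44": {"class_type": "ConditioningCombine",
               "inputs": {"conditioning_1": ["42", 0], "conditioning_2": ["43", 0]}},
    }


nodes = prompt_edit_nodes("red dress", "blue dress", 0.5, clip_ref=["1", 1])
positive_ref = ["44", 0]   # plug this into the KSampler's positive input
```

It takes five nodes to do what one bracket does in A1111, which is why the custom node exists, but it is also a good illustration of what prompt editing actually is under the hood.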

Subgraphs

In short, you can collapse any part of your workflow into a single node by using one of the buttons that appear above your selected nodes.

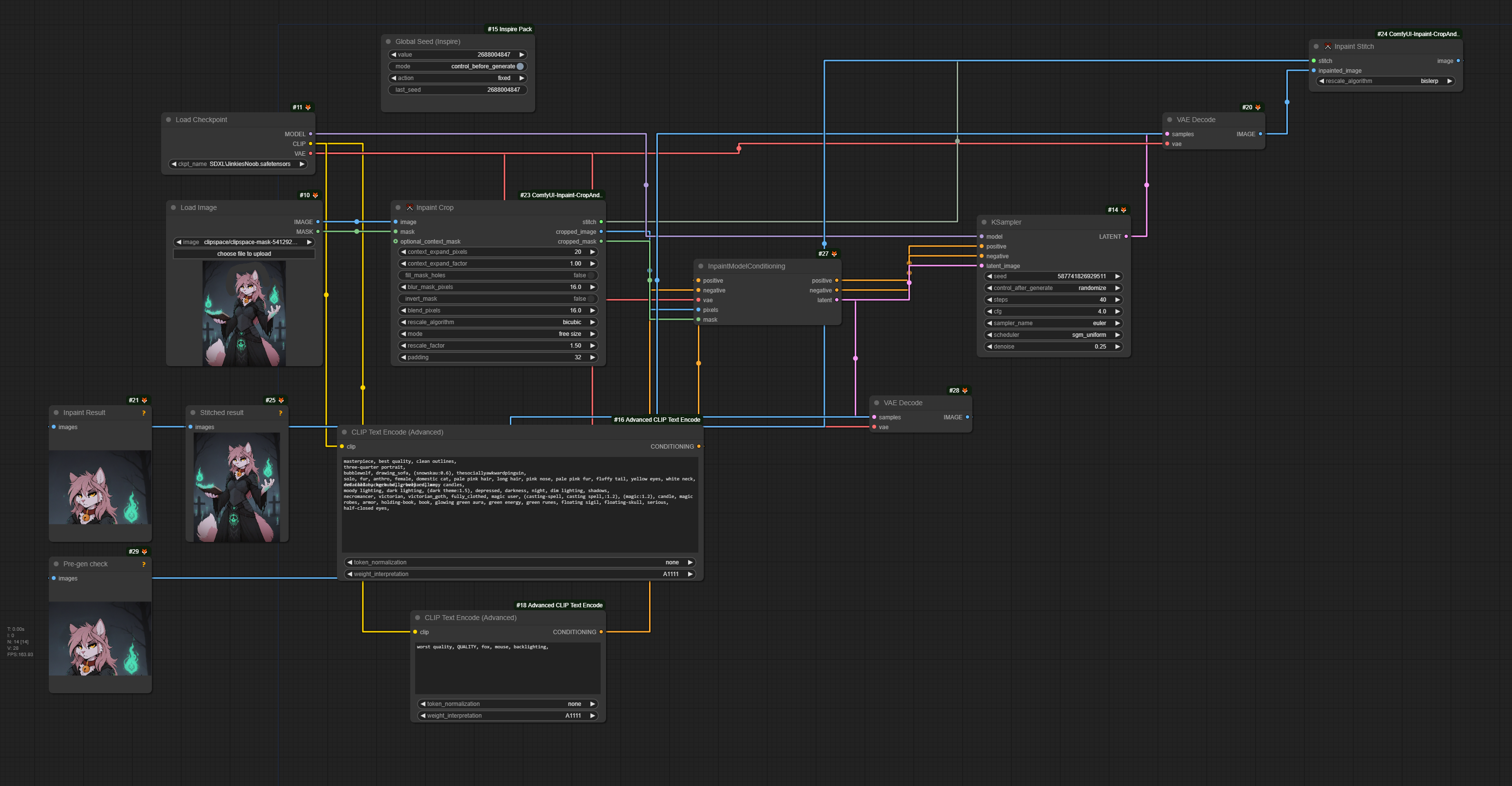

Below is anon's workflow demonstrating subgraphs and inpainting where "Inpaint" is a subgraph (evident by the clickable icon in the top right).

Inpainting

There are several ways to accomplish this, but the best one I found is the CropAndStitch custom node. As you can tell from its name, it cuts the selected region out of the image, upscales it, then pastes it back into the original image. This way you get good detail and hopefully a decent transition. Below is a workflow posted by Anonymous, but I have used it very successfully myself.

Adetailer

ImpactPack has FaceDetailer node which performs the same function. Supply it with whichever detection model you wish, for that you will likely also need the Subpack.

Wildcards

There are several custom nodes that do it, but ImpactPack from the previous section comes in handy as well. The default location for your wildcard files is ComfyUI\custom_nodes\ComfyUI-Impact-Pack\wildcards.
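If you have never looked inside a wildcard system, the idea is simple: a placeholder in the prompt is swapped for a random line from a text file with the same name. The sketch below imitates that with an in-memory dict instead of files. `expand_wildcards` is a made-up function, and the `__name__` placeholder syntax is my assumption about the convention Impact Pack follows, so check its docs for the exact rules.

```python
import random
import re


def expand_wildcards(prompt, wildcards, seed):
    """Replace each __name__ with a random entry from wildcards[name]."""
    rng = random.Random(seed)          # seeded, so the same seed gives the same prompt

    def pick(match):
        return rng.choice(wildcards[match.group(1)])

    return re.sub(r"__([A-Za-z0-9_-]+?)__", pick, prompt)


# Stand-in for files like wildcards/hair_color.txt, one option per line.
wildcards = {
    "hair_color": ["black hair", "blonde hair", "red hair"],
    "place": ["beach", "forest", "city street"],
}

prompt = "1girl, __hair_color__, standing in a __place__"
expanded = expand_wildcards(prompt, wildcards, seed=1)
```

Tying the random choice to a seed is what lets you reproduce a gen later even though the prompt text itself was randomized.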

Anons share their knowledge

General workflow

A nicely laid out workflow with notes to explain some tricks for prompting, advanced samplers, color matching and controlnet tile.

https://files.catbox.moe/9te335.png

Regional prompting

for the comfyui anons out there, after a few days of looking for how to reproduce the a1111 regional prompter output, I finally managed to do it. tried to tidy the workflow as much as I could, it's still a mess, but it should be fairly intuitive. the relevant custom nodes are

https://github.com/ramyma/A8R8_ComfyUI_nodes

the main nodes for regional prompting

https://github.com/pamparamm/ComfyUI-ppm

provides the latent to mask node which is pretty useful. it also has a regional prompting node but it seems you can't adjust weights, or I'm too dumb to figure it out

comfyui workflow

https://files.catbox.moe/xtt2rg.png

a1111 result

https://files.catbox.moe/ivylnp.jpg

you can use as many regions as you like (up to 11).

[OUTDATED] Group nodes

Outdated

Use Subgraphs instead

You can collapse parts of your workflow into a single node for easy replication and tidier workflows.

- Select the nodes (ctrl+click or ctrl+drag select)

- Right click on one of them

- Select Convert to group node

- Input the name of the group node

- The group node is now available as any other from Add node or node search.

You may subsequently edit the appearance (disabling or renaming widgets or inputs/outputs for example) of group nodes by right-clicking anywhere and selecting Manage group nodes.

Example

Below is my group node that replicates "Prompt is more important" behavior (it uses Advanced ControlNet custom node).


Components

If you're using ComfyUI Manager (you should if you use custom nodes at all), you have access to Components, which are group nodes sharable in json format between workflows and users. A sort of mini-workflow if you will. Here's a demo with 2 group nodes which you can paste into your workflow. And here's the component json of the group node from the example above.