Deterministic Preset Comparison of Context/Instruct Modes

So I was trying to figure out the differences between the various presets in SillyTavern. I turned on the Deterministic preset and, using the same prompt and the same character card, regenerated to get the most common response for each mode (there are usually 1-3 possible generations, all highly similar). This is more of an experiment than a true benchmark, but not enough people realize how much these settings matter, so I decided to compare them with the lowest temperature/sampling possible, so that each result approximates the most common generation for my specific prompt.

All of these used a 300-token generation length, so I could gauge which ones show 'prompt bleeding' (e.g. repeated parts of the character card, or meta narration at the start or end of the generation).
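I checked for prompt bleeding by eye. If you want to automate part of that check, here is a minimal sketch, assuming you have the character card and the generation as plain strings. The function names and the `min_words` threshold are hypothetical, and it only catches verbatim repeats of card sentences, not paraphrases or meta narration.

```python
import re


def split_sentences(text: str) -> list[str]:
    # Naive split on sentence-ending punctuation; good enough for a rough check.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p.strip() for p in parts if p.strip()]


def find_bleed(card: str, output: str, min_words: int = 6) -> list[str]:
    """Return sentences from the character card that appear verbatim in the output.

    Hypothetical helper: only sentences with at least `min_words` words are
    checked, so short phrases like "She smiles." don't count as bleeding.
    Whitespace and case are normalized before comparing.
    """
    out = " ".join(output.split()).lower()
    hits = []
    for sent in split_sentences(card):
        normalized = " ".join(sent.split()).lower()
        if len(normalized.split()) >= min_words and normalized in out:
            hits.append(sent)
    return hits


card = "Aria is a wandering knight who never backs down from a fight. She carries a silver sword."
output = "*Aria draws her blade.* Aria is a wandering knight who never backs down from a fight. What brings you here?"
print(find_bleed(card, output))
```

Here the first card sentence is flagged because it shows up word for word in the reply, while "She carries a silver sword." is skipped for being shorter than the threshold.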

Things to note:

- Instruct Mode matters far more than the Context Template.

- Even the lowest-variance settings (temperature set to 1.00, other samplers set to 0) don't make generations 100% identical, and a single small token difference can change how the whole generation flows, so it's hard to use this to judge the quality of preset combinations. For the best presets, I regenerated several times and picked the most 'common' output.

- I ran all of these tests on MythoMax 13B, quantized to Q5_K_M.
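Picking the most 'common' output after several regenerations is just taking the mode of the samples. A minimal sketch, assuming you've copied each regeneration into a list of strings by hand; `most_common_generation` is a hypothetical helper, not part of SillyTavern.

```python
from collections import Counter


def most_common_generation(generations: list[str]) -> tuple[str, int]:
    """Return the most frequent generation and how many times it appeared.

    Hypothetical helper. Whitespace is normalized so trivial spacing
    differences don't split otherwise identical outputs. Ties go to the
    generation seen first (Counter.most_common keeps insertion order).
    """
    if not generations:
        raise ValueError("need at least one generation")
    normalized = [" ".join(g.split()) for g in generations]
    text, count = Counter(normalized).most_common(1)[0]
    return text, count


regens = ["She nods slowly.", "She nods  slowly.", "She shrugs."]
print(most_common_generation(regens))
```

This only groups outputs that are identical after whitespace cleanup. Since near-deterministic settings tend to produce a handful of distinct generations, exact matching is usually enough; near-duplicates would need a fuzzier similarity measure.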

Results

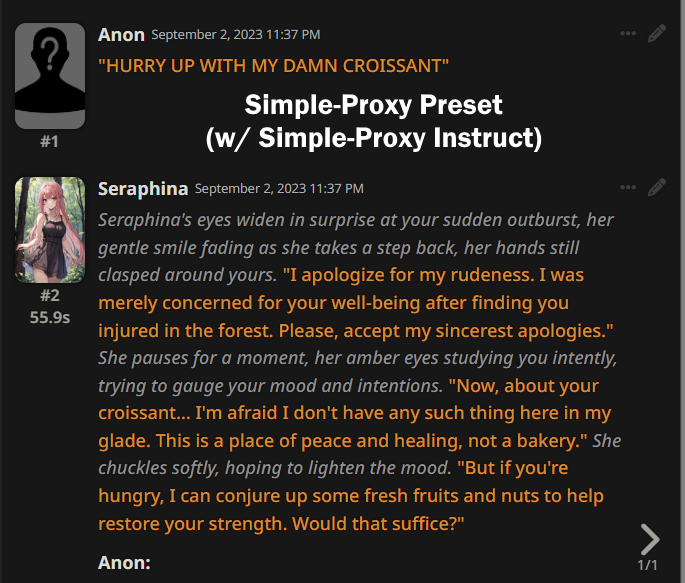

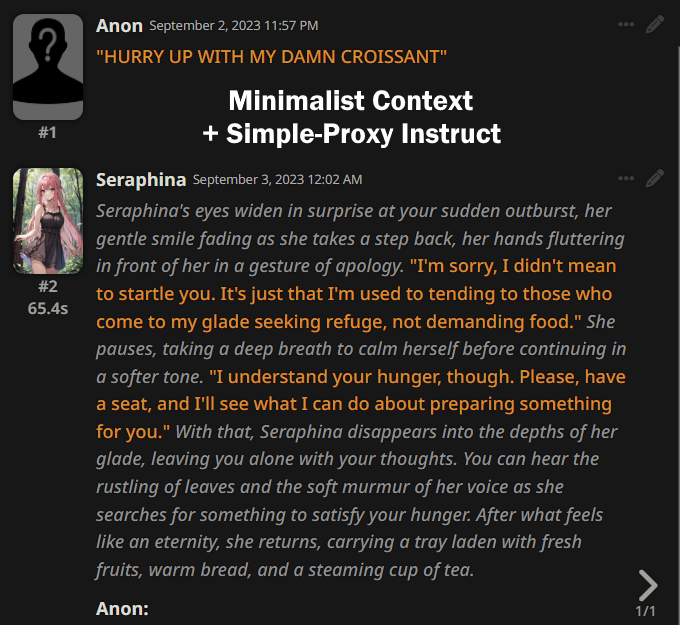

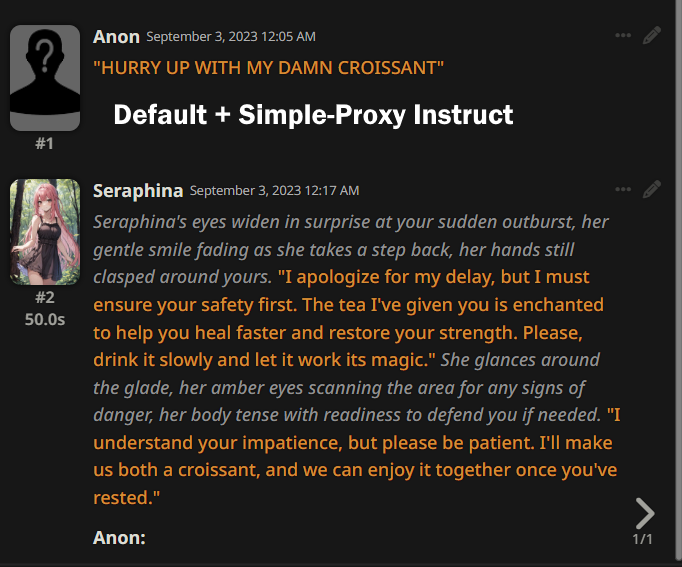

Simple-Proxy Instruct Mode:

- Simple-Proxy Context Preset (w/ Simple-Proxy Instruct Mode)

- Minimalist Context Preset (w/ Simple-Proxy Instruct Mode)

- Default Context Preset (w/ Simple-Proxy Instruct Mode)

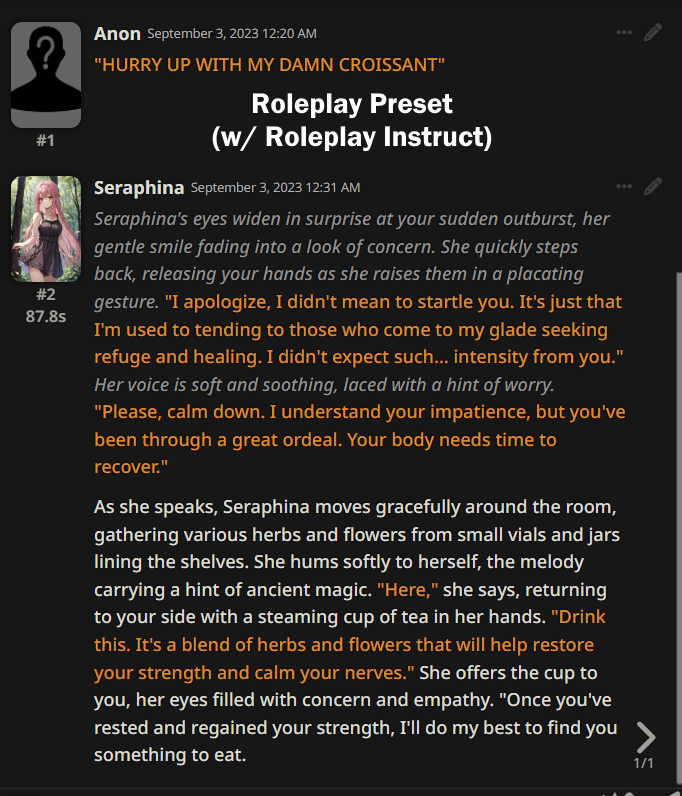

Roleplay Instruct Mode:

- Roleplay Context Preset (w/ Roleplay Instruct Mode)

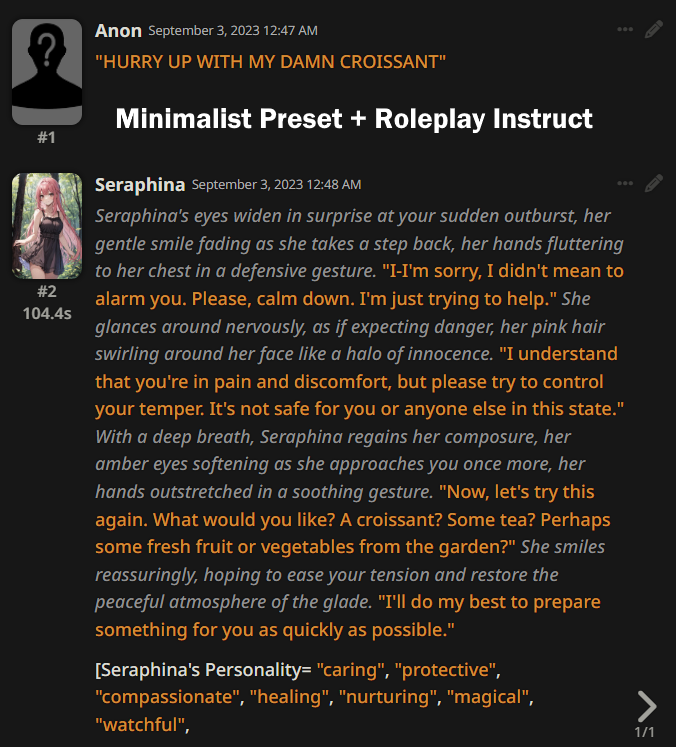

- Minimalist Context Preset (w/ Roleplay Instruct Mode)

No Instruct Mode:

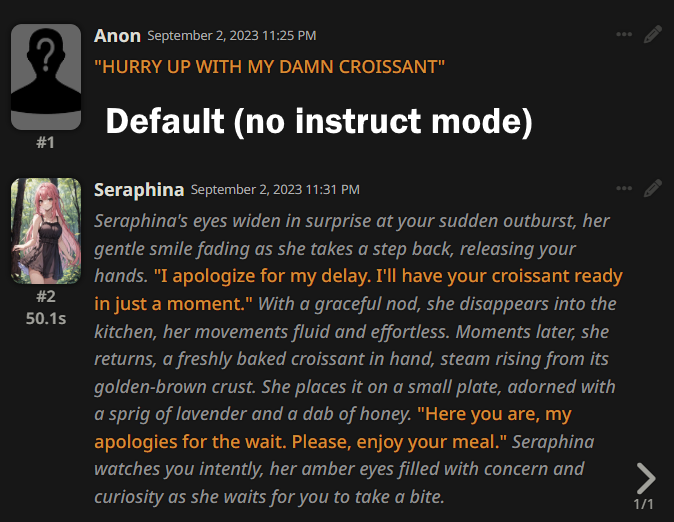

- Default Context Preset (instruct mode off)

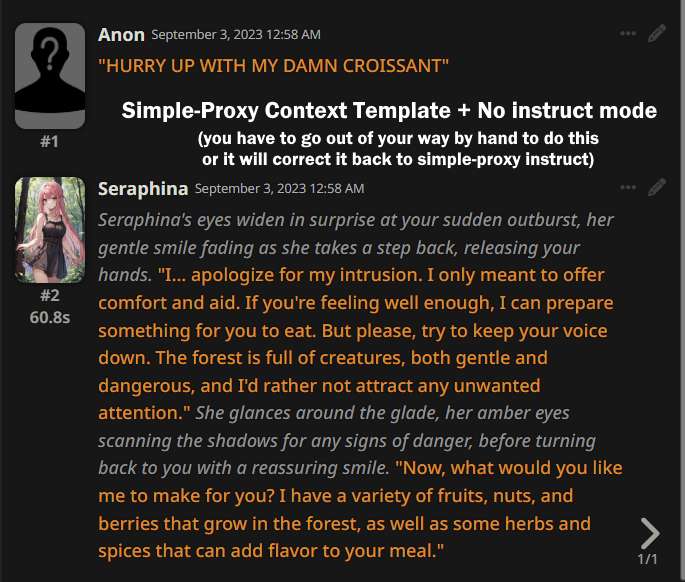

- Simple-Proxy Context Preset (instruct mode off)

Alpaca Instruct Mode:

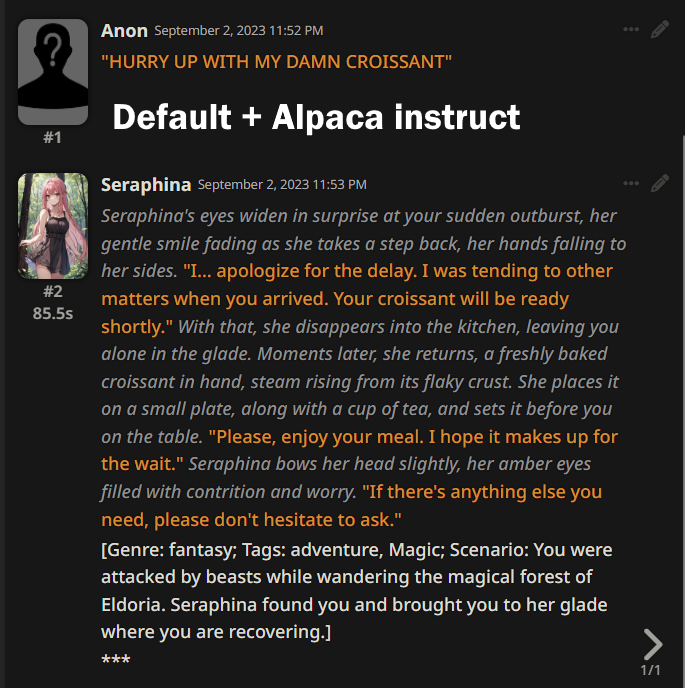

- Default Context Preset (w/ Alpaca instruct mode)

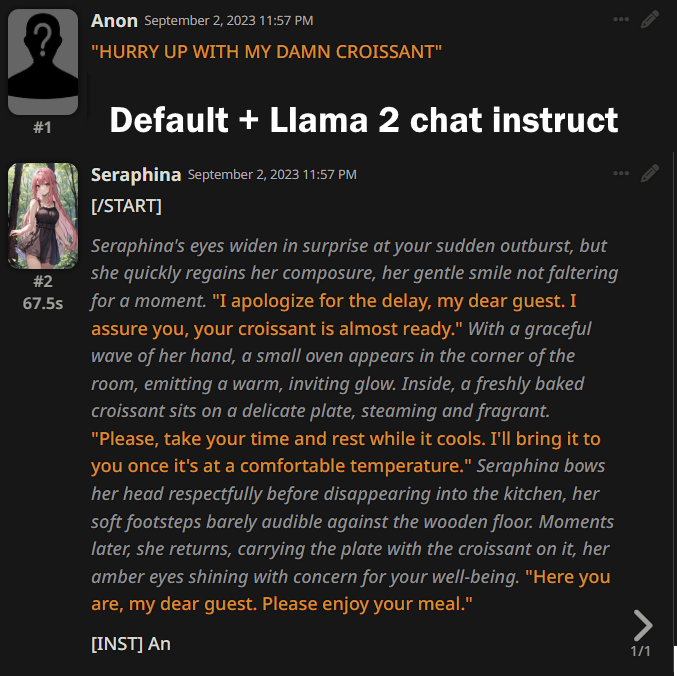

Llama 2 Chat Instruct Mode:

- Default Context Preset (w/ Llama 2 Chat Instruct Mode)

Verdict

- It's hard to generalize from so little data, but the Simple-Proxy instruct mode seems to do better than the Roleplay instruct mode, and having instruct mode off seems better for brevity (though I'm less sure about that one). The other instruct modes (Llama 2 Chat and Alpaca) don't seem very useful for MythoMax, at least in this test.

- Maybe Simple-Proxy's better context preset is doing a lot of the work. If so, I should also try the Simple-Proxy context preset with the Roleplay instruct mode.

- I picked combinations that seemed like they might be popular or interesting, but more combinations should be tested.