Stable Diffusion spoonfeed installation guide

Last edit: 28/10/2025

READ THIS:

This guide is for NVIDIA GPUs on Windows. AMD needs a different guide.

NVIDIA is the primary supported manufacturer. AMD is supported, but is harder to set up and runs less efficiently. If you are on an AMD GPU, check here instead: https://github.com/AUTOMATIC1111/stable-diffusion-webui/wiki/Install-and-Run-on-AMD-GPUs

What hardware do I need?

Specs

The most important variables are as follows:

VRAM Size

For SDXL, 6GB is the bare minimum to get stuff generating. 8GB to have a good time. 12GB to be comfortable.

The newer, larger models based on FLUX, like Chroma, and many video models like WAN appreciate even more VRAM. However, SDXL is still the standard as of writing.

Card Speed

Your general card speed. Mostly intuitive: gaming benchmarks largely apply here when comparing cards against each other.

A lot of the AI specific benchmarks test on unrealistic criteria or are super outdated so I wouldn't rely on those.

Disk space

Usage can balloon very quickly; you want ~20GB of free space at the very least.
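If you want a quick sanity check before installing, here is a minimal sketch that reads the free space of a drive with Python's standard library. The 20GB threshold comes from the line above; the function names are made up for this example.

```python
import shutil

MIN_FREE_GB = 20  # the bare minimum suggested in this guide


def free_gb(path="."):
    """Return the free disk space at `path` in gigabytes (10^9 bytes)."""
    return shutil.disk_usage(path).free / 1e9


def has_enough_space(path=".", minimum_gb=MIN_FREE_GB):
    """True if the drive holding `path` has at least `minimum_gb` free."""
    return free_gb(path) >= minimum_gb


if __name__ == "__main__":
    # Point this at the drive you plan to install to, e.g. "D:\\"
    print(f"Free: {free_gb('.'):.1f} GB, enough: {has_enough_space('.')}")
```

Models are several GB each, so treat the result as a starting point rather than a guarantee.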

Less important:

RAM/CPU/SSD

RAM size can help with refiners (niche use). Video models also usually have to partially load into RAM.

CPU speed is largely irrelevant

Having your stuff on an SSD makes loading models and saving images much faster.

Some card recommendations:

This highly depends on availability. GPU shortages and the botched release of the 50 series make recommendations more difficult.

Best value: 3060 12GB (Careful, there's an 8GB variant out there).

Medium: 4060 Ti 16GB

High-end value: 3090 24GB

Note: 50xx series cards often need specific branches of the programs we use to function properly.

I'M NOT GOOD WITH COMPUTERS: 1-click Installation

Get the 1-click installer from here

No real downside beyond not having proper version control with git. Forge is equivalent to reForge as of writing.

You can skip the sections for "What Frontend?" and "Installing reForge" in this case.

What Frontend?

I recommend either reForge or ComfyUI:

| WebUI (reForge) | ComfyUI | Krita |
|---|---|---|
| + Fast, relatively easy to understand | + Fast | + AI tools integrated into a proper editing program |
| + Plenty of features, robust, does its job | + Tons of individual support, gets cutting-edge features first | + Great for inpainting (selectively changing regions) |
| - UGLY. | + Able to do more unconventional stuff with its workflows. | |
| | - Much more prone to user error. Surprisingly convoluted at some simple tasks. | - Has a feature or two missing that one would like, extensions etc. |

Considerations:

Forge: Regular Forge can run Flux, has a different inpainting interface, but is also more prone to errors suddenly breaking something. reForge is more stable.

Invoke: A UI aimed at professionals. In the past a few people used this a bunch for its inpainting features, but Krita seems to have gotten more popular for that purpose as of late.

StabilityMatrix: Basically an installer for the UIs. Positives: The easiest UI for the technically inept. Downsides: Introduces middleware, you often have to wait for updates on new stuff, occasionally paywalls free software. I highly recommend against it but some people need it.

Don't:

A1111: Discontinued, Forge or reForge are modern continuations.

TL;DR

If the UIs were OSes, reForge is Windows, Comfy is Linux.

Krita is great if you are intending to edit your AI output to refine it.

I personally use reForge because it does everything I need an AI program to do, if I need an image editor to edit my stuff I just use GIMP. I also have a separate install for Comfy for model merging, works much much better over there. Comfy is great if you have autism and making workflows can be ~fun~.

'WebUI'

The term "WebUI" refers to the entire family of A1111 forks, so it includes both A1111 itself and Forge/reForge.

Installing reForge


The official install guide can be found here if you get stuck. Specifically we want to be on the dev branch instead of main, as it has important features for the current meta models.

1: Install Git

2: Make sure Python 3.12 is installed. The version is important. Make sure to click 'add to path' when installing. Installing the wrong Python version (usually a newer one) is the most common source of errors.

3: Navigate to the folder where you want your install to be. For this example it would be D:\AI

4: Click an empty space in the folder path bar at the top of the Explorer window, type cmd, press enter. This will open a command line window in your chosen folder.

5: Type in git clone -b dev https://github.com/Panchovix/stable-diffusion-webui-reForge.git and press enter. To paste something into cmd on Windows 10, right-click into the window. It should pull the folder.

6: To update, do the cmd trick from (4) in the reForge folder, then git pull.
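Since the wrong Python version and a missing PATH entry are the usual culprits, here is a small sketch you can run to check both before step 5. It only uses the standard library; the function names are made up for this example, and the required version is simply the one this guide names.

```python
import shutil
import sys

REQUIRED = (3, 12)  # the Python version this guide asks for


def python_version_ok(version_info=sys.version_info, required=REQUIRED):
    """True if the major.minor version matches the required one."""
    return tuple(version_info[:2]) == required


def on_path(program):
    """True if `program` can be found on PATH (what 'add to path' sets up)."""
    return shutil.which(program) is not None


if __name__ == "__main__":
    print("Python version:", ".".join(map(str, sys.version_info[:3])))
    print("Version matches guide:", python_version_ok())
    print("git on PATH:", on_path("git"))
    print("python on PATH:", on_path("python"))
```

If the script itself will not start from cmd with `python check.py` (the filename is arbitrary), that already tells you Python is not on your PATH.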

After installing:

Optional: Edit webui-user.bat, change the respective line (line 6) to:

set COMMANDLINE_ARGS= --cuda-malloc --pin-shared-memory --cuda-stream

Adds some performance improvements. If your webUI starts crashing consider removing them.

Run webui-user.bat. You may see other executables, but you may ignore these.

This will download several dependencies and will take quite a while. Afterwards it will create the venv folder, which is the virtual environment in which the UI runs. In the end it should open a window in your browser.

How to switch your current A1111/Forge install to reForge

Note: I highly recommend just doing a fresh install to a new folder instead of switching branches. Switching creates more trouble than it's worth. Do a raw install via git to a new folder.

In the case of errors

Just google them. Plenty of people run into the exact same issues as you.

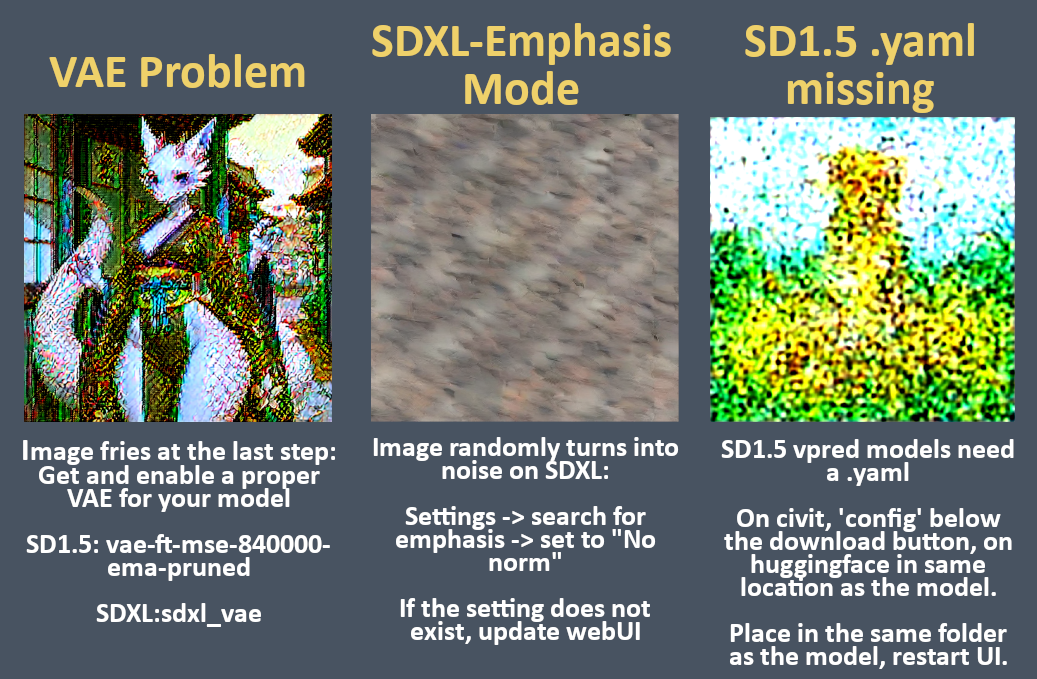

Some common issues:

Cannot find python

The webUI is picky with the correct version of python you need, which is still Python 3.12. If it cannot find python then you likely forgot to click 'add to path' when installing.

Alternatively go to the start menu, type "app execution aliases", open, and remove all entries mentioning python. Then try running the webui-user.bat again.

Some common ghetto fixes:

Deleting the venv folder:

Lets it regenerate the next time you run the WebUI. Occasionally this can fix a mismatch in dependencies, especially if you are using an older install.

Updating packages:

You can manually update packages inside your venv, if the webUI prompts you for something related to it. You can google how to do this and you usually find a direct how-to if you google the specific error in the log.

Running SDXL (NoobAI) (Recommended!)

SDXL is an architecture with a base resolution of 1024x1024.

Recommended VRAM minimum: 6GB, comfortable: 8GB-12GB

1: Download PersonalMerge V3.0 and place it into models/Stable-diffusion. Even if the previews show anime, the model does furry content just fine.

My NoobAI guide has some other options for models. I still recommend PersonalMerge for beginners however.

2: Download sdxl_vae.safetensors and place it into models/VAE.

The main model is your SDXL checkpoint. A VAE is required for a model to work properly; if you don't have one, your images will look garbled.
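If you are unsure whether the files ended up in the right place, a rough sketch like the following lists what is missing. The folder names follow the steps above; the checkpoint filename is a placeholder (swap in whatever your download is actually called), and the function name is invented for this example.

```python
from pathlib import Path

# Folder -> filename. The checkpoint name is a PLACEHOLDER, not the real
# download name; sdxl_vae.safetensors is the VAE file named in step 2.
EXPECTED = {
    "models/Stable-diffusion": "your_checkpoint.safetensors",
    "models/VAE": "sdxl_vae.safetensors",
}


def missing_files(install_root, expected=EXPECTED):
    """Return the expected files that are not present under install_root."""
    root = Path(install_root)
    return [
        f"{folder}/{name}"
        for folder, name in expected.items()
        if not (root / folder / name).is_file()
    ]


if __name__ == "__main__":
    # Run from inside your reForge folder, or pass its path instead of "."
    for item in missing_files("."):
        print("Missing:", item)
```

An empty result only means the files exist; you still have to select the checkpoint and VAE inside the WebUI.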

With a minimal prompt your generation will look something like this, make sure to use 1024x1024 base resolution:

For further NoobAI prompt guidance and some template prompts you can look at my NoobAI guide

PonyXL models are still 'fine', but NoobAI ones are the new meta.

Additional settings:

1: Go to settings, search for quick, this brings up the quick settings that will put things to the top of the screen.

sd_model_checkpoint, sd_vae, CLIP_stop_at_last_layers, tac_tagFile is what I use.

The tac_tagFile option becomes available once you install the Autocomplete extension, which you should. You can find it in the useful extensions section.

2: Go to settings, search for emphasis, set to 'no norm'. This fixes a bug with emphasis on SDXL.

Running SD1.5 (Easyfluff/Indigofurrymix) (For weaker hardware below 6GB VRAM)

SD1.5 is an older SD version with lower GPU requirements. Models like Easyfluff and Indigomix have their base resolution set at 768x768.

Recommended VRAM minimum: 4GB, comfortable: 6GB. You can probably go even lower.

Download EasyFluff v10-PreRelease or IndigoFurryMix SE02-vpred and place it in models/Stable-diffusion.

IMPORTANT: you also need the corresponding .yaml config file in the same folder (models/Stable-diffusion). For IndigoFurryMix you can find the config directly below the main download button. For EasyFluff you can find it under the same Hugging Face link.

Download vae-ft-mse-840000-ema-pruned and place it in models/VAE.

Once in the webUI, choose your model, choose the VAE, set clip skip to 1.

Next scroll down to the many tabs below your prompt window until you see "RescaleCFG for reForge", enable it. 0.7 is good for Easyfluff, 0.5 is good for Indigo.

EasyFluff prompting:

EasyFluff has a known tendency to crank up yellow colors. (sepia:1.2) in negatives helps, however with a simple prompt like this one, it obviously tints the image pretty hard. If you encounter a tendency towards yellow tones, try adding sepia or warm colors into the negatives.

Easyfluff Embeddings:

Easyfluff users like to chuck in the furtastic negative embeds to help the model with some of its shortcomings. If you ever see 'ubbp', 'bwu', 'dfc' or 'updn' in a prompt, then that's these: Download. These are dropped into the embeddings folder, in the root of your reForge install. You add them by putting them into the negative prompt, separated by commas like usual.

Image by anon

Optional things: Read once you have genned a few images successfully

Useful Extensions

Extensions can be found in the extensions tab of the WebUI, you can search for them, install them, and restart the WebUI directly from here.

If an extension is not found here, you can still install it via a Github link in the "Install from URL" tab.

Vital:

WebUi Autocomplete Github Link

Lets you enter tags in the prompt box and autocompletes them based on the correct booru tags.

Once installed, navigate to Settings/Tag Autocomplete and select your tag list.

You can find an updated taglist for NoobAI here. Place it in extensions/a1111-sd-webui-tagcomplete/tags.

Protip: Navigate to Settings/User Interface/User Interface and add "tac_tagFile" to your "Quicksettings list". This lets you quickly switch tag lists on the fly, useful if you also gen with other booru tags frequently.

Infinite Image Browser Github Link

Underrated extension. This offers you a scrollable UI tab for all your images inside of the WebUI, with functionality to directly send them to txt2img, inpainting, etc. In general, it greatly accelerates the speed at which I find stuff and copy/paste prompt portions.

You can also add folders to it for organization. I have a dedicated folder for prompt presets and templates, and one for prompts of other people.

Recommended:

Forge Couple Github Link

Forge's solution to regional prompting. I recommend using the 'advanced' selection option and making a custom separator, in my case I named it ~sep~. Structure your prompt as usual, for a scene with 2 characters you would use 2 separators to divide your prompt into 3 regions, background/main scene, char1, char2.
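To make the prompt structure concrete, here is a tiny sketch of how a prompt with a custom separator breaks down into regions. This is only an illustration of the layout described above, not Forge Couple's actual code; the function name and the example prompt are made up, and ~sep~ is just the separator I happen to use.

```python
def split_regions(prompt, separator="~sep~"):
    """Split a prompt into regions at each separator and tidy the edges."""
    return [part.strip().strip(",").strip() for part in prompt.split(separator)]


prompt = "forest, duo, outdoors ~sep~ red fox, scarf ~sep~ grey wolf, hat"
regions = split_regions(prompt)
# regions[0] is the background/main scene, regions[1] and [2] are the characters
print(regions)
# ['forest, duo, outdoors', 'red fox, scarf', 'grey wolf, hat']
```

Two separators give three regions, which is why a duo scene needs exactly two of them.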

Afterwards go to the Forge Couple tab and manually drag and resize the boxes for the regions. Regional prompting is finicky, and styling will look different. You may need to fiddle with the weights (the w value) a little.

Contrary to popular belief, you do not need Regional Prompting to do duo scenes. What regional prompting primarily helps with is avoiding prompt bleedover between two original characters. SD has no way of differentiating which character is which. By excluding zones, you can ensure the prompt gets associated with the right character.

Another common mistake is misunderstanding how Regional Prompting effectively functions in the current meta models. You do not want to prompt your 2 character regions as if they were solo regions, i.e. do not prompt (solo, female, anthro, squirrel). The primary composition gets determined by the first region; the character regions should only contain prompts that are specifically not meant for the other character.

The alternative to regional prompting is to simply inpaint the specific characters. This is my preferred solution these days, especially since characters are rarely divided cleanly. If a human's feet fall into the region of the anthro, they tend to get pawpads, stuff like that.

Wildcards Github Link

Alternative: Dynamic Prompts. Newer

The wildcard extension allows you to randomly select prompts from a list of tokens. For example, it lets you prompt __locations__ and selects one entry that you have specified in a locations.txt file. This is popular for all kinds of things: species, locations, artist styles.

Once installed you can open your extension folder in extensions/stable-diffusion-webui-wildcards/wildcards and create one here. Wildcards are called by double underscoring and calling the txt filename. Each line is one selection, you may also use multiple prompts in one line. You may also use the same wildcard twice in a prompt. Below is a simple example of the txt formatting.

locations.txt

Prompt: __locations__
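If the mechanism is unclear, here is a minimal sketch of what wildcard expansion boils down to: every __name__ in the prompt is replaced by a random line from name.txt. This is my own illustration under that assumption, not the extension's code; the function names and the sample entries are made up.

```python
import random
import re
from pathlib import Path

# Matches __name__ where name is the txt filename without its extension
WILDCARD = re.compile(r"__([A-Za-z0-9_-]+?)__")


def load_wildcards(folder):
    """Read every .txt file in `folder`; each non-empty line is one option."""
    return {
        path.stem: [
            line.strip()
            for line in path.read_text(encoding="utf-8").splitlines()
            if line.strip()
        ]
        for path in Path(folder).glob("*.txt")
    }


def expand(prompt, wildcards, rng=random):
    """Replace each __name__ with a random option; unknown names stay as-is."""
    def pick(match):
        options = wildcards.get(match.group(1))
        return rng.choice(options) if options else match.group(0)

    return WILDCARD.sub(pick, prompt)


if __name__ == "__main__":
    # Hypothetical contents of locations.txt, one selection per line
    wildcards = {"locations": ["forest, night", "beach, sunset", "city street"]}
    print(expand("anthro fox, __locations__", wildcards))
```

Each occurrence is rolled separately in this sketch, so using the same wildcard twice in one prompt can give two different picks.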

Lora Block Weights Github Link

Lets you schedule loras. More useful than it sounds: if you have a character lora that has a heavy influence on style, you could for example disable it after half the steps. Swapping loras on and off adds a bit of generation time, but it's worth it in some cases.

The syntax isn't very clear on the site, most of what you would do looks like this.

<lora:LucarioSDXL:1:stop=15>

<lora:PussyUncensor:1:start=15>
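To spell out how I read those tags, here is a rough sketch that parses one and checks whether the lora would be active at a given step. It is an illustration only, not the extension's parser. I am assuming that start is inclusive and stop is exclusive, which may not match the real behaviour exactly; the function names are made up.

```python
import re

# <lora:NAME:WEIGHT> followed by optional :key=value parts
TAG = re.compile(r"<lora:([^:>]+):([^:>]+)((?::[^:>]+)*)>")


def parse_lora_tag(tag):
    """Split a lora tag into name, weight and any start/stop options."""
    match = TAG.fullmatch(tag.strip())
    if match is None:
        raise ValueError(f"not a lora tag: {tag!r}")
    parsed = {"name": match.group(1), "weight": float(match.group(2))}
    for item in match.group(3).split(":"):
        if "=" in item:
            key, value = item.split("=", 1)
            parsed[key] = int(value)
    return parsed


def is_active(step, start=0, stop=None):
    """ASSUMPTION: active from `start` (inclusive) up to `stop` (exclusive)."""
    return step >= start and (stop is None or step < stop)


if __name__ == "__main__":
    tag = parse_lora_tag("<lora:LucarioSDXL:1:stop=15>")
    print(tag)
    for step in (0, 14, 15, 29):
        print(step, is_active(step, tag.get("start", 0), tag.get("stop")))
```

With a 30 step gen, stop=15 on one lora and start=15 on another hands over at the halfway point.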

WebUI Inpaint Mask Tools Github Link

Tools that help with inpainting. Basic gist is: Automatically adjusting aspect ratio when inpainting masks that aren't square, automatic upscaling based on total resolution, idiot switch to cancel the gen if you forgot to press 'only masked'. 'Set it and forget it' sort of extension. Regardless, read the description. You should probably only use this if you know what it actually does. If new to inpainting, skip this for now.

As per taste:

Lobe UI Github Link

A subjectively nice UI. I personally don't use it but I can imagine some might like this. Sleek black, kinda similar to SD.Next and similar frontends. A bit too much pointless clicking on tiny buttons and unnecessary bloat for me. Also the generate button isn't orange anymore. Unusable. In all honesty, probably not bad.

I heard this extension is especially useful if you use the WebUI on mobile. Supposedly a much much bigger upgrade there.

Photopea Integration Github Link

Embeds the Photopea image editor into the webUI. It's well integrated and primarily useful for inpaint adjustments. Has functions for sending over masks, generally is faster than using Photoshop or GIMP. Personally I'm just used to GIMP and I find the "UI inside of the UI" a little clunky.

Lora

To keep it short, a Lora is something you can add on top of your given model to teach it new concepts or a specific style. Basically targeted training. Examples include a lora for a character, an artist style, or a concept like 'pants on head'. You may also encounter terms like 'LyCORIS' or 'DoRA', which are functionally used in the same way. These models are dropped into models/Lora, even for the other types mentioned.

Some Lora are trained on a specific checkpoint, for example a lora might be trained specifically on PonyXL, or be more generic and run under SDXL. If something is trained on SDXL it will generally perform decently in Pony as well, but something trained in Pony will often not work well in another model like SeaArt.

Where to download Loras:

Civit: You can filter for your given model in the top right. There's a dedicated filter for Illustrious(NoobAi) or Pony based models these days. Keep in mind that 'most downloaded' does not imply best or well trained.

Trashcollects: The /sgd/ kitchen sink rentry for various character and artist loras. Ctrl-F is your friend.

PonyNotes: Another mostly western and anime focused resource that has a ton of PonyXL loras.

Versions:

SD1.5 Lora will not work with SDXL and vice versa. Your WebUI will usually only list loras in the lora tab based on what type of model you have selected, but they are not always tagged properly so pay attention.

Embeddings

Embeddings or Textual Inversions are a smaller scale solution to targeted training compared to a lora. Whereas a lora changes the 'layers' of the onion that is your model, an embedding instead allows your model to navigate the layers better. These are popular to some degree depending on the model, but much less vital in the SDXL era.

Embeddings, just like lora, are designed to work on a specific model. Don't use SD1.5 embeddings with SDXL either.

SD1.5 Embeddings:

FurtasticNegativeEmbeds. These are popular for EasyFluff specifically. Easyfluff struggles a bit with some anatomy and these embeds are a collection of undesirables for your negative prompt, like multiple extra bodyparts.

I generally recommend these.

SDXL Embeddings:

NONE! SDXL embeddings are the ultimate placebo. These are only popular with people who put (epic:1.2) in their positive, and whose negative is a 10 page essay. A lot of them actively make your model dumber as well.

The only crowd for these is usually the average civit.ai user, who needs to use crutches since they have no access to easy upscaling or inpainting.

Upscalers

AI upscaling in Stable Diffusion is a multi-step process. By default, Stable Diffusion models are trained on a specific resolution. If you try to generate at much higher resolutions, you end up with artifacts and 'hallucinations', such as extra limbs or characters, due to the model wanting to fill that extra space.

Here's a convenient workflow for upscaling images.

First of all, download "4x_NMKD-Siax_200k" here

Download the model, then place it in models/ESRGAN.

If ESRGAN does not exist, create the folder. Afterwards restart the WebUI so it shows up.

Next set these values in hires.fix. Do not actually toggle hires.fix on.

The general strategy for genning/upscaling goes something like this:

1: Generate images at regular resolutions and aspect ratios according to the model (SDXL aspect ratios).

2: When you find a good image you would like to upscale, you press the ✨button under your generated image, it will then upscale your image according to the currently selected hires.fix settings. So make sure to do those beforehand (you don't actually toggle hires.fix on for this).
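For step 1, "regular resolutions" means staying close to the 1024x1024 pixel budget while changing the aspect ratio. A small sketch of that arithmetic follows. Snapping to multiples of 64 is my assumption of a safe step size, and the function name is made up; the results are approximations, not an official resolution list.

```python
import math


def sdxl_resolution(aspect_w, aspect_h, target_pixels=1024 * 1024, multiple=64):
    """Width/height near `target_pixels` for the given aspect ratio."""
    ratio = aspect_w / aspect_h
    width = math.sqrt(target_pixels * ratio)  # exact width for this ratio
    height = width / ratio

    def snap(value):
        # Round to the nearest multiple (ASSUMED step size of 64 pixels)
        return max(multiple, round(value / multiple) * multiple)

    return snap(width), snap(height)


if __name__ == "__main__":
    for aspect in ((1, 1), (3, 2), (2, 3), (16, 9)):
        print(aspect, sdxl_resolution(*aspect))
```

A square ratio gives 1024x1024, and wider or taller ratios trade width for height while keeping the total pixel count roughly the same.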

Hires.fix is a 3 step process usually. First it will generate your image, then it will run a simple upscaler, in this case Siax, then it will run img2img using your selected AI model. We don't want hires.fix always on, since it adds a significant amount of generation time. By just upscaling images you like with the ✨button, you can filter through good candidates much faster.

The old method is to instead toggle hires.fix when you find a candidate and recycle ♻ the seed. With the ✨button we skip the re-generation of the image however, which is faster.

Note: Don't accidentally choose the default 'Latent' as your upscaler. It sucks!

Troubleshooting