Miqumaxx box

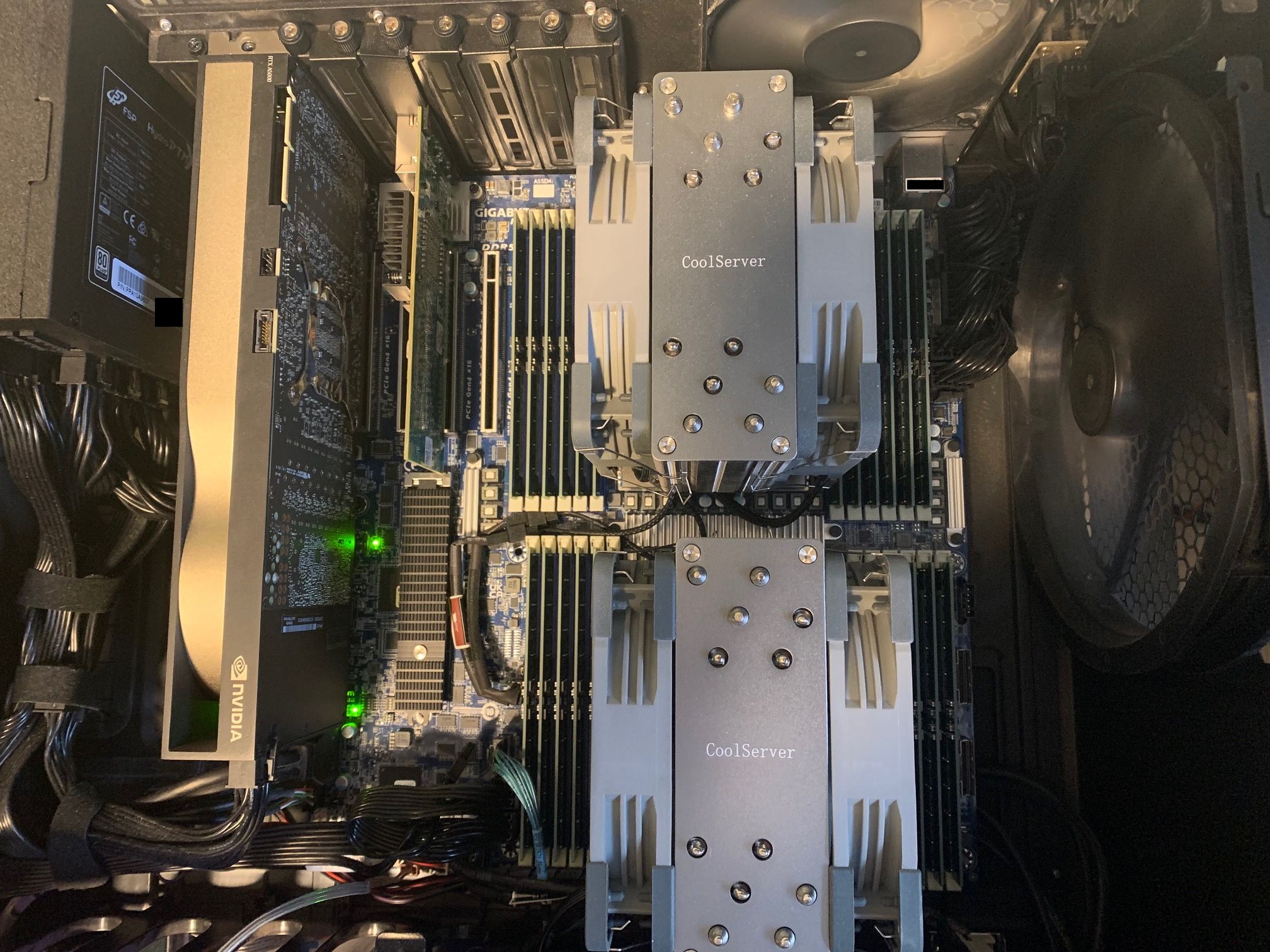

Richfag cpumaxxing rig

The big problem with running really high-parameter large language models is that fast inference requires massive amounts of memory bandwidth.

There are three ways to get it:

- Stack GPUs for sweet, sweet GDDR bandwidth eg. v100maxx

- UMA richfag (Appleshit)

- CPuMAXx NUMA insanity

Dual-socket EPYC Genoa with 24 channels of DDR5-4800 at ~920GB/s (theoretical peak) is what I went with.
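Where the ~920GB/s comes from, as a quick sketch: channels x transfer rate x bytes per transfer. The function name is mine, and the 8 bytes per transfer assumes a standard 64-bit DDR5 channel. This is the theoretical peak, not what you'll measure.

```shell
# Theoretical peak DRAM bandwidth in GB/s.
# $1 = memory channels, $2 = transfer rate in MT/s,
# $3 = bytes per transfer (assumed 8 for a 64-bit DDR5 channel)
peak_bw_gbs() {
  awk -v ch="$1" -v mts="$2" -v bytes="${3:-8}" \
    'BEGIN { printf "%.1f\n", ch * mts * bytes / 1000 }'
}

# Dual-socket Genoa: 12 channels per socket, DDR5-4800
peak_bw_gbs 24 4800   # → 921.6
```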

If you stack GPUs you will eventually:

- Run out of power on a PSU you can run on a standard breaker, so either put in a 20A or bigger breaker and run a 1600W+ PSU, or gang PSUs together. In any case, GPUs are power-hungry

- Need a shit-ton of fans and cooling. Also: noisy, hot hellbox

- Run out of PCIe lanes, slots and literal room in your case. You can use PCIe risers, potentially split larger slots into 1x, use mining frames and boards, etc, but all these things mean your Frankenrig is all over the place and kind of crazy

- Spend as much or more than other approaches. Also, trying to save slots and space by getting more VRAM per card means you're spending orders of magnitude more money. A single 80GB card is $15k+ even if you get a bargain somewhere

- Need to do model training to justify your investment in that many GPUs

- Laugh at anyone trying to do layer offload for any model you can fit in your VRAM. If you can do that, you'll be the fastest game in town

- Realize that even if you can squeeze the model in, you can't fit the model, the context, the tts model and the image gen model all into your VRAM. OOMing due to context can be heartbreaking.

If you go for Appleshit:

- You get a less supported arch. Metal works, but seems to have less performance than should be theoretically available

- Are stuck with exactly what you have for memory. Can't upgrade on-package RAM!

- Have basically no PCIe lanes for GPUs. Might be able to do something with Thunderbolt, but then you're back into heavy jank territory

- Can't actually use all that memory for inference. There are hacks to use more, but you'll never use anywhere close to 100%

- Suck for prompt processing, so even if inference is fast, you'll still be stuck waiting way too long for it to get past the initial prompt processing on each reply

- macos

- Spend as much as, or more than, the other solutions for a less expandable box

- It is stylish, quiet and compact, though

If you CPuMAXx:

- You're spending a pile of cash up front ($6k USD as of the writing of this)

- Need to fill up all RAM slots to get max bandwidth, so an upgrade means swapping all sticks. BUT it's easy to get 384GB/768GB/1.5TB or more of fast RAM. Try doing that with GPUs!

- Have to deal with NUMA bullshit, restricting max performance unless things are coded and balanced just-so

- Have tons of PCIe lanes for expandability

- Have tons of general purpose compute for other things. I run lots of selfhost VMs and labs. `make -j 128` is satisfying

- Leave any GPU you put in completely free for things it does well: Processing context, imagegen, TTS/STT, etc

- If A100 become cheap on eBay, you can still just start stacking them

- If some other tech that drops into a PCIe slot becomes available (Groq but cheap), then you can still stack those

- Can run the whole works on a 1000W PSU easily

- Run relatively cool, but CPU coolers can be hard to find

- My rig is quiet because I run big fans at low speeds. ymmv

- Can upgrade the CPU later when higher-end EPYC inevitably become cheap on eBay when companies start mass-upgrading

- Need a giant case to house the monstrosity. The CPU dies are HUGE, the memory slots take up massive real-estate, and it just doesn't fit nicely in a reasonably sized case

- You likely aren't going to be doing any training, bruh. CPUs ain't GPUs. You can quant and merge pretty well though.

- You can play with frankenmerges and new models at FP16 release time. No llama.cpp integration PR lag!

- You can run Miqu 70b Q5 at 8T/s+ without doing anything special. More speedups likely (theoretically 20T/s+)

- You can play around with Mistral large and other ~120b class models at speeds of around 3T/s

- Big Mixture-of-experts models are a sweet spot, since they can be completely loaded into memory, but only a subset of parameters are active during inference. You can run a giant model like Deepseek v3/R1 600B or Qwen Coder 480b at a reasonable speed. R1 is about 10T/s with empty context. Models with smaller experts like mixtral 8x22 WizardLM and friends are downright fast. Qwen 3 235b is a real sweet spot model balancing speed and smarts. Kimi K2 and GLM4.5 are other modern models that people have been getting great results out of.

- For 405b class dense models you need 424GB+ to even run at a non-braindead quant+context. This build is at least able to run it. Speeds are in the 1T/s range.

- GPT-OSS from OpenAI may be a meme, but it also runs very fast on this setup. Over 50t/s are possible on the 120B using the official ggml-org GGUF files with no additional quanting.
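The "theoretically 20T/s+" figure for Miqu in the list above can be sanity-checked with napkin math. For a dense model, every weight gets read once per generated token, so memory bandwidth divided by model size is a rough ceiling. The function name is mine, and the ~48GB size for a 70b Q5 GGUF is an assumption; check your actual file size.

```shell
# Rough upper bound on tokens/s for a dense model:
# all weights are streamed from RAM once per token.
# $1 = memory bandwidth in GB/s, $2 = model size in GB
max_tps() {
  awk -v bw="$1" -v size="$2" 'BEGIN { printf "%.1f\n", bw / size }'
}

# ~920GB/s peak, 70b Q5 at roughly 48GB (assumed size)
max_tps 920 48   # → 19.2
```

Real numbers land well below the ceiling because of NUMA overhead and compute limits. MoE models only read the active experts per token, so this estimate doesn't apply to them as-is.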

This whole guide is probably huge cope vs. just building a GPU box. I could justify it to myself easier than stacking GPUs because I had lots of other legitimate uses for the CPU cores, memory, PCIe slots, storage capacity and fast networking.

BOM (parts sourced from QVL):

There are newer chips out now. The new cpumaxxing meta is EPYC Turin since it can run DDR5-6000 or possibly even 6400. Fortunately the motherboard from this guide has firmware updates and can support the new chip. Hopefully engineering samples start to leak out of China for a good price. The first ones seen in the wild on eBay were in the $3k+ per socket range.

- eBay auction for "Engineering Sample" gear. I got a Gigabyte MZ73-LM1 with two AMD EPYC Genoa 9334 QS processors (64c/128t total across both sockets)

- 24 sticks of M321R4GA3BB6-CQK Samsung 1x 32GB DDR5-4800 RDIMM PC5-38400R Dual Rank x8 Module from memory.net (cheaper than eBay)

- Good quality PSU (I used a FSP 1000W HydroPTM X Pro because I got a deal. No issues)

- Whatever storage you need. Warning: the M.2 slot is NVMe only. A 4x SATA breakout is included with the MB. You can add up to 16 more drives with SlimSAS 8i breakout cables

- Giant case or whatever ghetto solution you want. I used an Antec 900 I had lying around, but needed to drill and tap new standoff holes. Make sure airflow is good and you can mount some big, slow moving fans to keep air cycling through without a lot of noise

- A couple of good SP5 CPU coolers. I got "CoolServer" brand 4U height coolers and they'll keep things room temperature at idle and only about 30C increase at full load on all cores

- Still worth it to throw a 24GB GPU in the thing. You can use the onboard video for a console so all VRAM is available for other things. I'm running a 24GB A5000, and even though all my inference is CPU, it still gets a shitton of use. eg. You can get fast text-to-speech and have SD renders of the scene as it progresses

Linux Details

- I'm running Linux Debian (Trixie) headless so all possible horsepower is going to inference

- Newer (6.6+) Linux kernels have big speedups on EPYC! 6.12 is the current best available in Debian testing

- I found turning off Transparent Hugepages to be key for remaining stable through high memory pressure situations

- nginx proxy on all services with the actual backend interfaces firewalled off from external interfaces. Who needs shit leaking to the internet? You're running local for a reason I assume

- Newer distros have gcc13/14, so you have to dick around with CUDA devkits to get things to compile (mainly forcing gcc 12/13 to be your compiler and editing apt sources to depend on libtinfo6 instead of libtinfo5 in nvidia packages)
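A minimal sketch of the Transparent Hugepages bit from the list above, assuming the standard sysfs location. The function names and the `THP_DIR` override are mine, not part of any tool.

```shell
# Standard sysfs location for THP controls; overridable for testing.
THP_DIR="${THP_DIR:-/sys/kernel/mm/transparent_hugepage}"

# Print the active THP mode. The kernel marks it with [brackets],
# e.g. "always madvise [never]".
thp_mode() {
  sed -n 's/.*\[\(.*\)\].*/\1/p' "$THP_DIR/enabled"
}

# Turn THP off until the next reboot. Needs root.
thp_off() {
  echo never > "$THP_DIR/enabled"
}
```

Run `thp_off` as root, then `thp_mode` should print `never`. To make it stick across reboots, add `transparent_hugepage=never` to the kernel command line.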

BIOS settings:

- Don't even try to run in BIOS mode. I found it slow and unreliable. UEFI all the way.

- Make sure the xGMI link is running at full speed (4x)

- Turn off extraneous devices to free up PCIe lanes

- Tweak the NUMA layout to work for your workload (NPS0 is single image and is probably best if you don't intend to do any tweaking or multiuser stuff)

MoE Tensor Offload

You can offload just some of the tensors with the new --override-tensor (-ot) flag in llama.cpp, which can speed things up a lot at the expense of some VRAM. With my setup, I can increase generation speed by 50% if I use -ngl 99 -ot exps=CPU, which keeps the expert tensors in system RAM and squeezes all the non-expert tensors into GPU VRAM.
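A sketch of how the `-ngl 99 -ot exps=CPU` combo might be wrapped for llama-server. The function name, the `DRY_RUN` switch and the model path are hypothetical; the binary location assumes you're running from your llama.cpp build directory.

```shell
# Launch llama-server with every layer on the GPU except the MoE
# expert tensors, which stay in system RAM.
# $1 = path to GGUF file, remaining args are passed through.
# Set DRY_RUN=1 to print the command instead of running it.
run_moe() (
  model="$1"; shift
  set -- ./llama-server -m "$model" -ngl 99 -ot "exps=CPU" "$@"
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
)
```

eg. `run_moe model.gguf -c 8192`. If you OOM the GPU, drop the context size first.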

Batch size settings

Increasing your batch and ubatch sizes will increase your overall speed (mostly prompt processing) at the expense of some VRAM. Try using powers of 2 to see how it affects your speeds. eg: -b 2048 -ub 2048.
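One way to do the powers-of-2 sweep, sketched with llama-bench from the llama.cpp build. The function names, the `DRY_RUN` switch and the prompt/generation lengths (`-p 4096 -n 32`) are my assumptions; pick lengths that match your real usage.

```shell
# Print powers of 2 from $1 up to $2 (inclusive).
batch_sizes() {
  n="$1"
  while [ "$n" -le "$2" ]; do
    echo "$n"
    n=$((n * 2))
  done
}

# Benchmark one model at each batch size, with ubatch = batch.
# $1 = path to GGUF, $2 = smallest batch, $3 = largest batch.
# Set DRY_RUN=1 to print the commands instead of running them.
sweep_batches() (
  for b in $(batch_sizes "$2" "$3"); do
    ${DRY_RUN:+echo} ./llama-bench -m "$1" -p 4096 -n 32 -b "$b" -ub "$b"
  done
)
```

eg. `sweep_batches model.gguf 256 4096`, then compare the t/s columns and watch VRAM while it runs.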

Stupid NUMA tricks

You can set NPS to higher than zero and use the --numa isolate and --numa numactl options in llama.cpp to run multiple LLMs, each isolated to its own NUMA node. Each one will run relatively fast (limited by the memory bandwidth of its own socket/CCXs) and not impact the others. It's useful for multi-user or multi-agent setups.

eg.

numactl -N3 -m3 ./llama-cli -m miqu.gguf --no-mmap --numa numactl -t 16 -s 3955 -p "Hello"

will isolate to (zero-based) numa node 3.

You can see your numa map with numactl -H

Use gnu parallel to test simultaneous execution scenarios to get the ideal NPS for your situation.
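A sketch of what that could look like: one llama-cli per NUMA node, each pinned with numactl, all started at once by GNU parallel. The function name and `DRY_RUN` switch are mine; the thread count and `-n 128` are placeholders to tune for your NPS setting.

```shell
# Start one llama-cli per NUMA node, each bound to that node's
# cores (-N) and memory (-m). parallel replaces {} with the node id.
# $1 = path to GGUF, $2 = space-separated node list, e.g. "0 1 2 3".
# Set DRY_RUN=1 to print the parallel command instead of running it.
numa_fanout() (
  model="$1"; nodes="$2"
  ${DRY_RUN:+echo} parallel --tag \
    numactl -N{} -m{} ./llama-cli -m "$model" --no-mmap \
    --numa numactl -t 16 -n 128 -p Hello \
    ::: $nodes
)
```

eg. `numa_fanout miqu.gguf "0 1 2 3"`. Compare the per-instance t/s against a single run at each NPS setting to see which layout wins for your workload.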

Mostly you're going to want to start with empty caches and use mmap so the model pages land with decent memory locality for your backend. It can double your t/s if you get this part right.

echo 3 > /proc/sys/vm/drop_caches; ./llama-cli -m deepseek-coder-v2-instruct-q8.gguf -t 60 --numa distribute -c 65535 -ngl 0 --interactive-first

Warning that doing this will take a long time to warm up the model as it has to fault all the bits of the model into memory as the MoE router hits all the experts. Use the same theory to start llama-server, ooba, kobold or whatever other long running frontend you want

If you just want the cheapest bang for your buck, go for the Mikubox classic or some variation thereof