Proxies for beginners: JLLM Alternatives

Made by FranOFran

Tired of the JLLM shenanigans? This is a total beginner's guide to the alternatives out there. There are probably many more, but these are the ones I found and tried. Remember that all of this is based on my personal opinion, experience and taste, so your experience might differ from mine!

I'm trying to make this guide ADHD- and dyslexia-friendly, so feel free to let me know if I can improve the presentation for that!

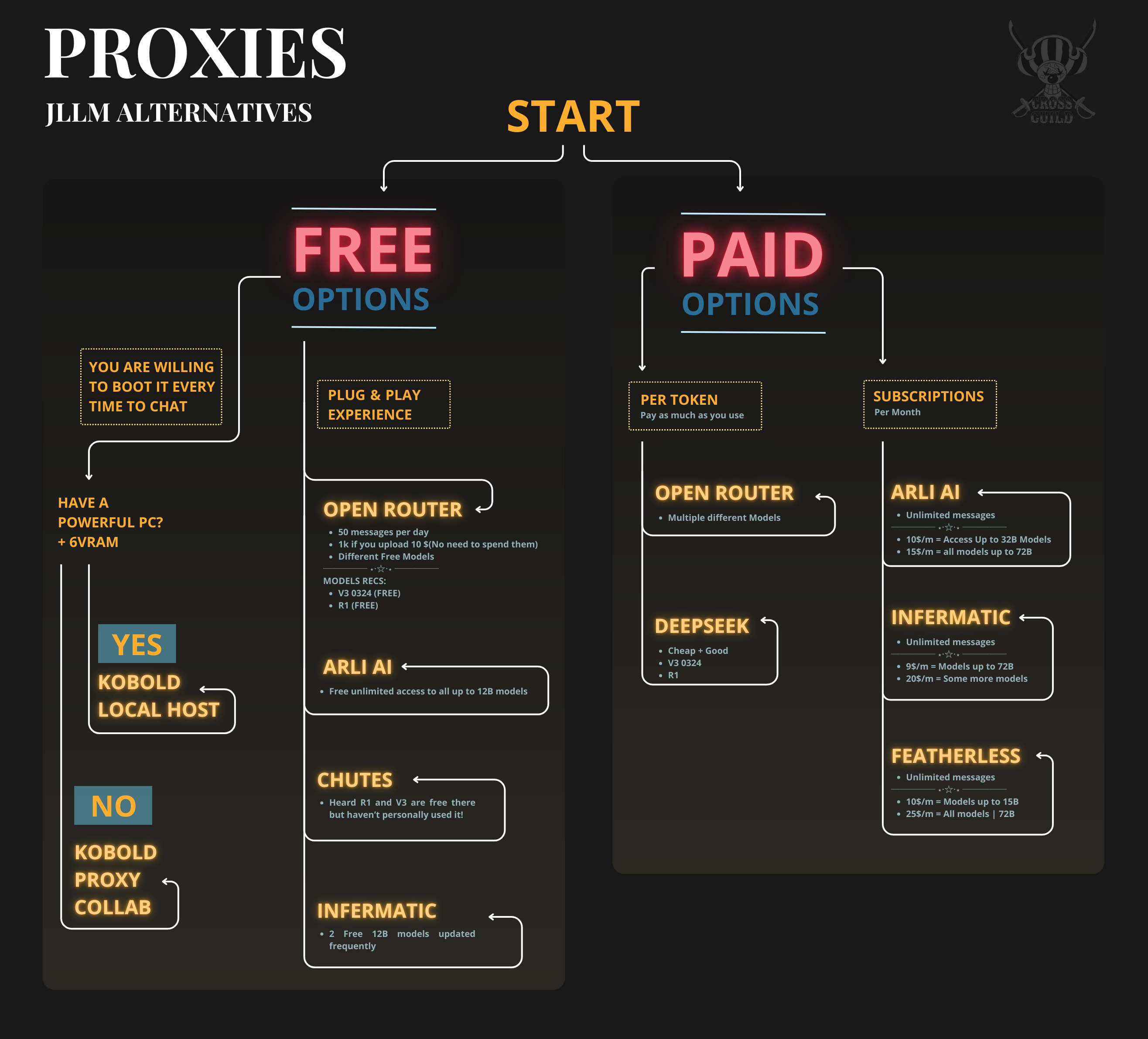

Here is a simplified version; read below for more details!

Updated on 11 April 2025

Basic AI lingo:

- Tokens = A token is a unit of text used by AI models. A single word can be 1-3 tokens depending on its length and complexity.

- Memory Context = How much of your RP the AI remembers, measured in tokens. It always includes the bot's permanent tokens and your persona's tokens; any remaining space is filled with temporary tokens (the first message, dialogue examples and the messages across your RP). The longer the chat goes on, the more of the oldest temporary tokens get forgotten.

- B = Shows up after a number in a model's name, usually like 12B, 32B, 70B, etc. It stands for billions of parameters, which is roughly the model's size. The higher the number, the smarter the model usually is.
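To make the memory-context idea concrete, here is a minimal Python sketch of how a chat frontend might fill the context window. This is an assumption about the general approach, not JLLM's or Jai's actual code: the permanent tokens (bot + persona) always go in, and the remaining budget is filled with the newest messages, so the oldest ones drop out first. `build_context` and all token counts are hypothetical.

```python
from typing import List, Tuple


def build_context(permanent_tokens: int,
                  history: List[Tuple[str, int]],
                  context_limit: int) -> List[str]:
    """Return the chat messages that still fit in memory, oldest first.

    Hypothetical sketch: the permanent tokens (bot + persona) are always
    kept, and temporary messages are added newest-first until the
    remaining budget runs out.
    """
    budget = context_limit - permanent_tokens
    if budget <= 0:
        return []  # the bot and persona alone fill the whole context
    kept: List[str] = []
    for message, tokens in reversed(history):  # newest to oldest
        if tokens > budget:
            break  # this message and everything older is "forgotten"
        kept.append(message)
        budget -= tokens
    kept.reverse()  # back to chronological order
    return kept


# Made-up token counts: a 2,500-token bot + persona in a 4K context.
chat = [("first message", 600), ("reply 1", 400),
        ("reply 2", 500), ("reply 3", 450)]
print(build_context(2500, chat, 4000))   # ['reply 1', 'reply 2', 'reply 3']
print(build_context(2500, chat, 16000))  # everything fits
```

In the 4K case the first message is the first thing to go; with 16K the whole chat still fits, which is why a bigger context feels like "better memory".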

What I look for in an AI model (coming from JLLM):

- Good memory

JLLM only has 3k-7k of context nowadays, and times of high traffic like Christmas lower it a lot. I usually look for something with 16K+ of context.

- Avoiding cliché behaviors and phrases

Examples: "maybe...just maybe", "jolts of electricity", "I'll ruin you for everyone else", etc. You know what I mean.

- Avoiding overly horny behavior triggered by nothing

So you can have slow-burn RPs and/or RPs with plots that don't focus on NSFW.

- More intelligence: staying in character better and being more knowledgeable

(In my case, more knowledgeable about One Piece lore.)

- Better spatial awareness + more logic

This is my personal model overview. Other people might have different experiences and opinions!

| Models | Price | Speed | Intelligence | RP Adaptability | Aggressiveness | Context Memory |
|---|---|---|---|---|---|---|
| JLLM | FREE | ⭐⭐⭐ | ⭐⭐⭐ | ⭐⭐⭐⭐⭐ | ⭐⭐⭐ | ⭐ |
| R1 | FREE or ⭐⭐⭐⭐⭐ | ⭐⭐ | ⭐⭐⭐⭐⭐ | ⭐⭐ | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ |
| V3 0324 | FREE or ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐ | ⭐⭐⭐⭐⭐ |
| Claude Sonnet | ⭐ | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ | ⭐⭐⭐ | ⭐⭐⭐⭐⭐ |

Updated on 11 April 2025

FREE PROXY OPTIONS:

You can opt for free options or paid options. There are a lot of free options; however, the paid ones are usually better in quality and easier to set up.

Kobold proxy Colab

Pros:

- A wide variety of small models from 7B to 22B, mostly 12B

- 24K of memory context on 12B models, and even 16K on 14B models

- Fast generation

- Moderately easy to integrate with Jai

Cons:

- A hassle to set up: every time you want to use a chat, you have to manually set the URL in the proxy settings. Additionally, whenever you want to activate the proxy, it takes about 5 minutes to boot, then you have to set it up, and only then can you chat. Also, if you close the Colab browser page, it kills your API key and you'll have to set it up again (and wait through the 5-minute boot again) before you can use it. I don't recommend this if your Wi-Fi drops a lot.

- I'm not sure about this one, but I heard there is a daily time limit, maybe 3 hours; I don't remember exactly. I didn't use it much because of the setup hassle.

Feel free to explore, but here are some cool models I enjoyed:

- EVA-Tissint (14B) | my personal favorite from there

- Starcannon (12B) | personally, I liked it

- Magnum (12B) | people say it's good

- Mag-Mell (12B) | people say it's good

Here is the Google Colab link (made by Hibikiass)

Kobold local host

You can run your own LLM on your own PC.

Pros:

- You don't have to share the machine with anyone; I heard this gives you better responses, even with the same model

- You can choose any model you want and it's free; there are thousands of options

Cons:

- Hard to install and set up at first

- Like the Kobold Colab, you'll have to boot it every time you want to use it

- You need a powerful PC with at least 6GB of VRAM to run even a small 7B model.
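Why does a 7B model need around 6GB of VRAM? A common back-of-the-envelope estimate is the model weights (parameters × bits per weight ÷ 8) plus the KV cache that holds your context. The Python sketch below uses assumed numbers: about 4.5 bits per weight for a 4-bit quantized file, and a Mistral-7B-like shape (32 layers, 8 KV heads, head size 128, 16-bit cache) at 8K context. Real usage adds runtime overhead on top, so treat the result as a lower bound.

```python
def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (10^9 bytes)."""
    return params_billion * 1e9 * bits_per_weight / 8 / 1e9


def kv_cache_gb(n_layers: int, n_kv_heads: int, head_dim: int,
                context_tokens: int, bytes_per_value: int = 2) -> float:
    """Approximate KV-cache size in GB: one key and one value vector
    per layer, per KV head, per token of context."""
    total_bytes = (2 * n_layers * n_kv_heads * head_dim
                   * context_tokens * bytes_per_value)
    return total_bytes / 1e9


# Assumed: 7B model, ~4.5 bits/weight, Mistral-7B-like shape, 8K context.
w = weights_gb(7, 4.5)
kv = kv_cache_gb(n_layers=32, n_kv_heads=8, head_dim=128, context_tokens=8192)
print(f"weights ~ {w:.2f} GB, KV cache ~ {kv:.2f} GB, total ~ {w + kv:.2f} GB")
```

That lands at roughly 5 GB before overhead, which is why about 6GB of VRAM is a sensible minimum. Bigger models or longer contexts grow both terms.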

Arli AI

They have free small 12B models!

Pros:

- Easy to set up. Once it's set up, you don't need to do anything else. Plug-and-play experience!

Cons:

- Slow response times. In my previous experience, I was waiting 30 s to 1 min per generation before the first word appeared.

- I liked the models, but I still felt they lacked what I look for in long-term/complex RP, especially knowledge of canon characters. However, this point is totally personal preference, and you might find a model you really like!

OpenRouter

Pros:

- Has a few different free models. Most of them aren't made specifically for RP, but they do a pretty good job!

- Most models have an extremely large context

- Easy to set up. Once you do it, you don't have to touch it anymore

Cons:

- A lot of the free models aren't tailored to RP, so you will probably have to write a custom prompt to suit your taste and play with the temperature ("temp"), at least in my experience

- As of April 2025, free models are limited to 50 messages per day per account (buying at least $10 of credits raises this to 1,000 per day).
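"Temp" (temperature) controls how random the model's word choice is. Here is a minimal Python sketch of the usual idea, assuming the standard softmax-with-temperature formulation: the model's raw scores (logits) for candidate next words are divided by the temperature before being turned into probabilities. The logits are made-up numbers.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw scores into probabilities, scaled by a positive temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next words.
logits = [2.0, 1.0, 0.5]
for t in (0.5, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 2) for p in probs])
```

A low temp makes the top word dominate (focused, but can get repetitive); a high temp flattens the odds (more variety, but more likely to drift).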

Model recs:

- DeepSeek R1 (free) | Read more about it below in the paid OpenRouter section.

- DeepSeek V3 (free) | Read more about it below in the paid OpenRouter section.

PAID PROXY OPTIONS:

There are generally two kinds of paid proxies: subscriptions, or pay per token (basically, you pay for as much as you use).

Subscriptions:

Arli AI:

Pricing:

$10/month = access to models up to 32B

$15/month = all models up to 72B

Pros:

- Unlimited messages

- Multiple diverse models up to 72B with high context!

- They keep adding more models!

- Easy to set up. Once it's done you don't need to touch it anymore unless you want to change models. Plug and play experience.

Cons:

- Slow replies: expect 30 s to 1 min before the first word starts generating

My personal favorite models from what I tried with my bots, as of January 2025:

- Llama-3.3-70B-DeepSeek-R1-Distill

- Qwen2.5-72B-Evathene-v1.3

- Llama-3.3+3.1-70B-Euryale-v2.2

- Qwen2.5-72B-EVA-v0.2

Other subscriptions I know exist but didn't try:

Infermatic: Link

Essential = $9/month

Plus = $20/month

Featherless: Link

Feather Basic = $10/month | Max. 15B models, unlimited messages

Feather Premium = $25/month | All models up to 72B, unlimited messages

Pay per token:

You pay for as much as you use. The price depends on the model.

OpenRouter

Pros:

- A huge variety of models across price ranges and intelligence levels; most have huge context memory

Cons:

- If you are a reroll maniac, you can end up with a huge bill. Fortunately, you can set a limit on how much you want to spend!

LLM recs notes:

- Take these with a grain of salt. I recommend exploring other LLMs on your own, but these are some I personally liked, so if you're completely lost, feel free to give them a try.

- Price labels like "expensive", "affordable", etc. are relative to the subscription services at $10 and $15 per month.

- Pricing in $/M means the dollar amount per 1 million tokens.

- Some models have several providers with different prices. If you want to use a model at its lowest price, block the providers that charge more for it.
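If you ever call OpenRouter directly instead of through Jai's proxy settings, provider blocking can also be requested per message. This Python sketch only builds the request and sends nothing. It assumes OpenRouter's OpenAI-style chat completions endpoint and its `provider` routing options (`ignore` to skip providers, `sort` to prefer the cheapest); the API key, model ID and provider name are placeholders, so double-check all of it against OpenRouter's provider-routing docs.

```python
import json
import urllib.request
from typing import List

# Assumed endpoint; verify against OpenRouter's documentation.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_openrouter_request(api_key: str, model: str, user_message: str,
                             blocked_providers: List[str]) -> urllib.request.Request:
    """Build (but do not send) a chat request that skips blocked providers."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "provider": {
            "ignore": blocked_providers,  # never route to these providers
            "sort": "price",              # prefer the cheapest remaining one
        },
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


# Placeholder key, model ID and provider name, for illustration only.
req = build_openrouter_request("sk-or-PLACEHOLDER", "deepseek/deepseek-r1",
                               "Hello!", ["ExampleExpensiveProvider"])
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

With a real key, `urllib.request.urlopen(req)` would actually send it. Inside Jai you would instead block providers in your OpenRouter account settings, as described above.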

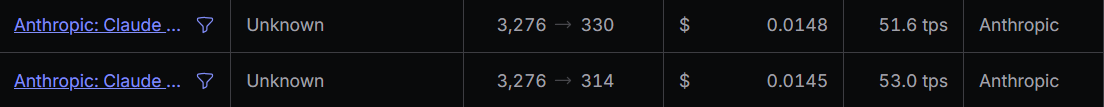

Claude Sonnet 3.5 (self-moderated) | Best quality | Expensive

Price: $3/M input tokens | $15/M output tokens | Total $18/M

example of model use on 29/01/2025

Pros:

- For me, Sonnet is king

- Has a lot of general lore for canon characters

- Characters feel in character a lot

- Stable

- Good plot and creativity

Cons:

- Expensive if you use Jai a lot. With 16k of memory context, a message can cost 1-6 cents (from my experience), so only use this regularly if you're an oil prince/ess!

- Censored. There are ways around it with prompts and prefills, but if you want to use it uncensored, you need to boot the Colab page and run it every time you want to chat (like the Kobold Colab)

Extra notes: Wanna go bankrupt? You can also use Claude Opus; I heard it's really good!
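Why does a 16k context make Sonnet expensive? Each new message resends the whole context as input tokens, so input dominates the bill. This rough Python estimate uses the Sonnet prices listed above ($3/M input, $15/M output) and assumes a 300-token reply; real costs vary with reply length and how full the context is.

```python
def message_cost_usd(input_tokens: int, output_tokens: int,
                     input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost of one message: input and output are each billed per million tokens."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


# Sonnet prices from this guide; the 300-token reply length is an assumption.
for context in (2_000, 8_000, 16_000):
    cost = message_cost_usd(context, 300, 3.0, 15.0)
    print(f"{context:>6} tokens of context -> ${cost:.4f} (~{cost * 100:.2f} cents)")
```

A full 16k context comes out at about 5 cents per message, which lines up with the 1-6 cents range mentioned above.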

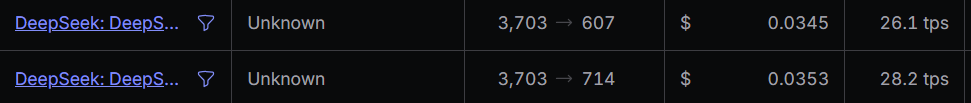

DeepSeek R1 | Good quality for the price

Price: $0.75/M input tokens | $2.4/M output tokens | Total $3.15/M

- If you want to stick with paid R1, try DeepSeek's official API! It's cheaper and more stable there.

example of model use on 29/01/2025

Pros:

- Characters stay in character very accurately

- Good body language and mannerisms

- Good spatial awareness and a really good brain, especially with powers that require some logic, which some LLMs struggle to grasp

- As good as Sonnet for a much smaller fraction of the price (in my personal experience, but it needs a good custom advanced prompt)

- A lot of canon character lore

- Uncensored, no need for prefills/jailbreaks

Cons:

- Slow to generate (it thinks before answering, hence its smartness). Still faster than Arli (back when I tested Arli)

- Issues with text formatting, but a good prompt can mostly get rid of them

- Struggles with character development. It sticks to characters too rigidly, not allowing much room for different behaviors. This can be improved with prompts, but it's still a high-maintenance model that needs hand-holding

- Sometimes gives errors due to server overloads (if you're using it on OpenRouter; the official API doesn't do this)

- Not sure if it's a con, but it's really aggressive compared to other LLMs and WILL sometimes be mean and cruel, even without reason. No positive bias
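To put "good quality for the price" into numbers, this Python sketch estimates how many messages $10 (the price of Arli AI's cheapest monthly tier) would buy on R1 versus Sonnet, using the per-million-token prices listed in this guide. It assumes every message sends a full 16k context and gets a 300-token reply, and it ignores R1's "thinking" tokens, which are billed as output and would lower the R1 count.

```python
def message_cost_usd(input_tokens: int, output_tokens: int,
                     input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost of one message: input and output are each billed per million tokens."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


def messages_for_budget(budget_usd: float, input_tokens: int, output_tokens: int,
                        input_price_per_m: float, output_price_per_m: float) -> int:
    """How many whole messages a budget covers at a fixed per-message size."""
    per_message = message_cost_usd(input_tokens, output_tokens,
                                   input_price_per_m, output_price_per_m)
    return int(budget_usd // per_message)


# Prices (input $/M, output $/M) as listed in this guide.
prices = {"DeepSeek R1": (0.75, 2.4), "Claude Sonnet 3.5": (3.0, 15.0)}
for name, (in_price, out_price) in prices.items():
    print(name, messages_for_budget(10.0, 16_000, 300, in_price, out_price))
```

Under these assumptions a 16k-context message on R1 costs roughly a quarter of what it costs on Sonnet.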

DeepSeek V3 0324 | Best quality for the price

- If you want to stick with paid V3, try DeepSeek's official API!

Pros:

- A lot faster than R1

- Good at being in character

- Good price

- Good character development and good prose

- Wide knowledge of canon characters

Cons:

- Might have a little repetition, but in my experience, with my prompts I barely get any

- While it's uncensored, anything that would typically be censored tends to happen off-screen, and it doesn't dwell on graphic content like NSFW or violence. However, a good prompt can get around that

- I think it has a bit of positive bias

Honorable mentions

- GPT-4o mini (cheap, works well with canon characters, stable, censored).

- Mistral: Mistral Nemo (Almost free, nice quality for its price, uncensored).

DeepSeek API

- You can choose between V3 and R1 (read about them in the OpenRouter section!). Their models are a lot more stable here than on OpenRouter, and cheaper (the standard price, without other providers' marked-up prices)

Pros:

- The lowest R1 and V3 prices! No errors like on OpenRouter, even compared to the paid version there. Some hours offer a 75% discount on R1 (good for Europeans)!

- Really cheap

- Faster than on OpenRouter
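As far as I know, DeepSeek's discounted hours in early 2025 run daily from 16:30 to 00:30 UTC (75% off R1, 50% off V3). Treat those numbers as an assumption to re-check on DeepSeek's pricing page, since they can change. The window crosses midnight UTC, which is the easy part to get wrong, so here is a small Python sketch that checks whether a given time falls inside it.

```python
from datetime import datetime, time, timedelta, timezone

# Assumed from DeepSeek's early-2025 pricing; re-check before relying on it.
OFF_PEAK_START = time(16, 30)  # 16:30 UTC
OFF_PEAK_END = time(0, 30)     # 00:30 UTC the next day


def is_off_peak(moment: datetime) -> bool:
    """Return True if a timezone-aware datetime falls in the assumed window."""
    t = moment.astimezone(timezone.utc).time()
    # The window wraps past midnight, so it is "after the start OR before the end".
    return t >= OFF_PEAK_START or t < OFF_PEAK_END


cest = timezone(timedelta(hours=2))  # Central European Summer Time
print(is_off_peak(datetime(2025, 4, 11, 19, 0, tzinfo=cest)))  # 17:00 UTC -> True
print(is_off_peak(datetime(2025, 4, 11, 11, 0, tzinfo=cest)))  # 09:00 UTC -> False
```

For someone in Central Europe, the assumed window covers the evening and the first part of the night, which is when a lot of people RP.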

Cons:

- The same issues as the models on OpenRouter (or more), since the issues come from the models themselves. However, I THINK the models perform better here
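The official DeepSeek API is OpenAI-compatible. As far as I know, the chat endpoint is `https://api.deepseek.com/chat/completions`, V3 is the model `deepseek-chat` and R1 is `deepseek-reasoner`. Please verify these against DeepSeek's API docs before pasting them anywhere. This Python sketch only builds the request (nothing is sent) so you can see which pieces go where; the API key is a placeholder.

```python
import json
import urllib.request

# Assumed values; verify against DeepSeek's API documentation.
DEEPSEEK_URL = "https://api.deepseek.com/chat/completions"
MODEL_IDS = {"V3": "deepseek-chat", "R1": "deepseek-reasoner"}


def build_deepseek_request(api_key: str, which: str,
                           user_message: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat request for V3 or R1."""
    body = {
        "model": MODEL_IDS[which],  # KeyError if `which` is not "V3" or "R1"
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        DEEPSEEK_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


req = build_deepseek_request("sk-PLACEHOLDER", "R1", "Hi!")
print(req.full_url)
print(json.loads(req.data)["model"])  # deepseek-reasoner
```

In Jai's proxy settings, the same three pieces roughly map to the model name, the proxy URL and the API key. With a real key, `urllib.request.urlopen(req)` would actually send the request.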