RAIPFAQ

You've read the getting started guide, right?

https://rentry.co/SDXL-gettingstarted

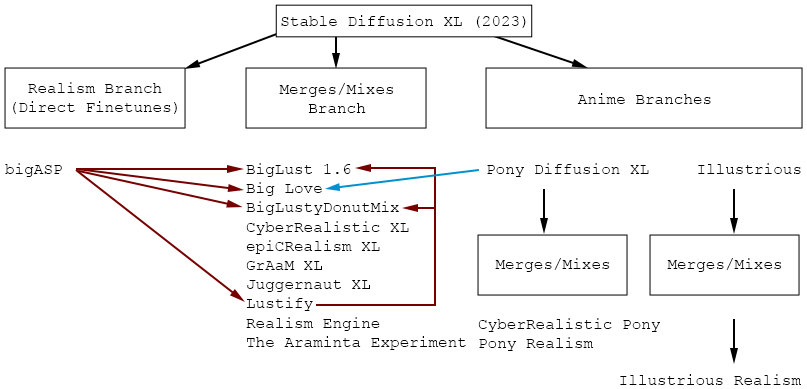

SDXL Family Tree

My rough understanding of the models' ancestry relevant to realistic NSFW SDXL. I didn't draw any connections I wasn't 90%+ certain about.

https://civitai.com/user/nutbutter/models = bigASP

https://civitai.com/user/coyotte/models = Lustify

https://civitai.com/user/waterdrinker/models = Big Lust mix

https://civitai.com/user/PurpleSmartAI/models = Pony XL finetunes

https://civitai.com/user/DonutsDelivery/models = Donut mixes

What's a LoRA?

I think of a LoRA as a patch or mod that adds something to a checkpoint/base model that the lora creator felt was missing or lackluster. There are style loras, character loras, loras that change brightness, contrast, or color temperature, etc. Celeb loras are character loras.

https://huggingface.co/blog/lora = big brain blog post
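For the curious, here's roughly how the "patch" idea maps onto the math in the blog post above. A lora file doesn't store a new copy of a weight matrix W (size d×k). It stores two thin matrices B and A whose product is a low-rank update added on top. α is the alpha and r is the rank (dim) the lora was trained with, and s is the strength you set when you apply it (e.g. the 0.8 in `<lora:name:0.8>` in a1111/forge). This is the standard LoRA formulation as I understand it; individual trainers and UIs may scale things slightly differently.

```latex
W' = W + s \cdot \frac{\alpha}{r} \, B A,
\qquad B \in \mathbb{R}^{d \times r}, \quad A \in \mathbb{R}^{r \times k}, \quad r \ll \min(d, k)
```

This is also why lora files are small. For a hypothetical 1280×1280 layer at rank 32, B and A hold 2 · 1280 · 32 = 81,920 numbers versus 1,638,400 for the full matrix, about 5%.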

Can I Use BL16 lora with non-BL16 Checkpoint?

YES.

I used the same prompt (except for lora and celeb name) and seed across all gens. interpretations of results are like assholes, everyone has their own.

this sdxl based lora retains a good likeness across all but 1 checkpoints tested:

this biglust 1.6 lora is similar:

so yeah... sdxl or biglust lora and checkpoint doesn't matter that much for facial likeness imo. Pony is different enough that the likeness probably won't be kept using sdxl and biglust based checkpoints and i'm just gonna assume the same is true for Illustrious since that also has a more anime/illustration focused basis. Bad character loras that don't consistently output a good likeness exist in all flavors. I've probably used 1000 or more different character loras at this point and the morena and kristen loras used as examples above are both pretty damn good for keeping the likeness in a variety of prompted scenarios. the dataset (source pictures and captions/tags) and/or methodology used to create the loras is more important than the checkpoint the lora was trained on within this niche.

TL;DR don't be afraid to just try things instead of relying on anon's opinions, you might be pleasantly surprised.

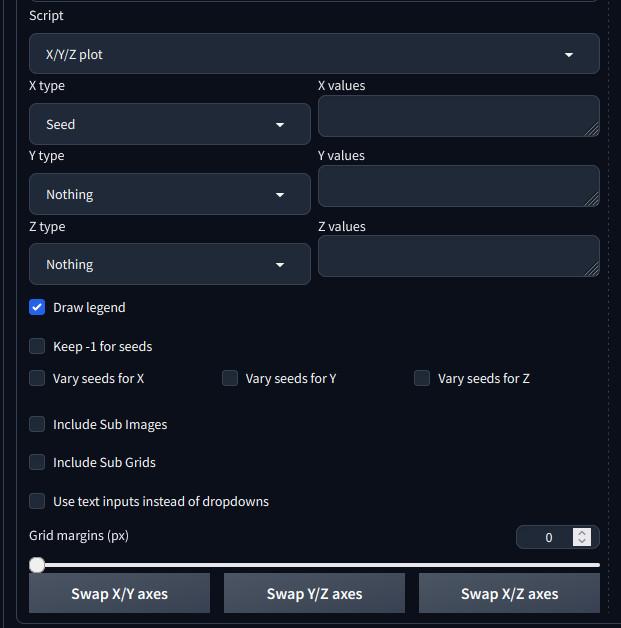

How did you make those grids?

That's been a built-in feature of a1111/forge since the early days. Under Scripts, select X/Y/Z plot and have fun.

There is a way to do these in comfy as well but I'm not much help there.
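For the comfy folks or anyone curious what the script is doing: an X/Y plot is basically a Cartesian product. Every X value gets paired with every Y value while everything else (prompt, seed, steps) stays fixed, one image per cell. Below is a minimal Python sketch of just the job list under that assumption. It's not forge's actual code, and the helper name, setting names, and values are made up for illustration.

```python
from itertools import product


def xy_plot_jobs(base, x_name, x_values, y_name, y_values):
    """Return one settings dict per grid cell, row by row.

    Hypothetical helper illustrating the X/Y plot idea (Y values = rows,
    X values = columns); this is not forge/a1111 code.
    """
    jobs = []
    for y, x in product(y_values, x_values):  # outer loop = rows, inner = columns
        job = dict(base)  # copy, so every cell starts from the same fixed settings
        job[x_name] = x
        job[y_name] = y
        jobs.append(job)
    return jobs


# Made-up example: same prompt and seed, vary CFG across columns, checkpoint down rows.
base = {"prompt": "photo of a red barn at sunset", "seed": 12345, "steps": 30}
jobs = xy_plot_jobs(base, "cfg_scale", [3, 5, 7], "checkpoint", ["modelA", "modelB"])
for job in jobs:
    print(job["checkpoint"], job["cfg_scale"], job["seed"])
```

Keeping the seed fixed is what makes the grid a fair comparison: the only things changing between cells are the values on the axes.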

Why Civitai No Have Celeb Loras?

Civitai doesn't allow them anymore because credit card companies:

https://www.unite.ai/civitai-tightens-deepfake-rules-under-pressure-from-mastercard-and-visa/

civarchive was born as a result

https://civarchive.com/

CivArchive is a cross-platform search engine for AI models. We don't host files ourselves — instead, we crawl various sources and index the models we find, using the file's SHA256 hash as its unique, permanent ID.

CivArchive was created in direct response to models being suddenly removed from CivitAI. Our core mission is to be a resilient, community-driven archive that preserves access to these valuable resources, ensuring the community's work remains accessible.

hugging face will remove celeb name loras too, i've seen a lot of dead hf lora links on civarchive. If you have the means to do so, save any character loras you find so they can be shared some other way.

Shareanon's database:

https://gofile.io/d/BhUUAU

As of December 2025 there is only one repo of celeb loras for z-image I know of and, for better and worse, all 1000+ loras are made by the same guy using the same techniques:

https://huggingface.co/malcolmrey/zimage/tree/main

https://civitai.com/user/malcolmrey/ = same guy on civit

https://huggingface.co/malcolmrey/flux/tree/main = flux loras

https://huggingface.co/malcolmrey/klein9/tree/main = new flux version loras

How do I know if the lora I have is SDXL, BL16, or something else?

Hopefully the base model a lora was trained on is noted in the filename. Regardless, you can use this LoRA Metadata Viewer to find out which model it was trained on, its tags, and more:

https://xypher7.github.io/lora-metadata-viewer/

You can run this metadata viewer locally and offline too; just grab it from the GitHub repo linked at the bottom of the page.

As of December 2025 that metadata viewer shows nothing for z-image turbo loras.
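The metadata that viewer shows comes from the safetensors header itself, which you can also read with a few lines of stdlib Python. What follows is a minimal sketch, not the viewer's code. A .safetensors file starts with an 8-byte little-endian length followed by a JSON header, and trainers can stash free-form strings under its `__metadata__` key. The `ss_*` key names below are the ones kohya-style trainers typically write; that's an assumption, since other trainers may use different keys or none at all, in which case you just get an empty result.

```python
import json
import struct


def read_safetensors_metadata(path):
    """Return the free-form "__metadata__" dict stored in a .safetensors header.

    Layout: 8-byte little-endian unsigned length N, then N bytes of UTF-8 JSON.
    Only the header is read; the tensor data is never loaded.
    """
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))
        header = json.loads(f.read(header_len).decode("utf-8"))
    return header.get("__metadata__", {})


# Keys commonly written by kohya-style trainers (assumption: other trainers
# may use different names or write no metadata at all).
INTERESTING = ("ss_base_model_version", "ss_sd_model_name", "ss_network_dim", "ss_network_alpha")


def summarize(path):
    """Pick out just the base-model/rank keys, skipping any that are missing."""
    meta = read_safetensors_metadata(path)
    return {k: meta[k] for k in INTERESTING if k in meta}

# e.g. print(summarize("my_lora.safetensors"))  (hypothetical filename)
```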

Want to Train a Character Lora?

https://rentry.co/sdxl-lora-training - malcomrey's method/guide

https://web.archive.org/web/20251228192142/https://rentry.co/biglust-training-and-loras - has advice and example loras as magnet links.

https://www.youtube.com/@ostrisai/videos - the creator of ai toolkit has videos on how to use that tool to create loras.

https://github.com/spacepxl/demystifying-sd-finetuning - interesting read

https://simpcity.cr/threads/betty-gilpin-sdxl-1-0-lora-how-to-train-an-sdxl-lora-in-about-an-hour.1362806/ - someone using onetrainer with example lora linked

Other Lora Related Notes I've Collected

Lora Tokens, Trigger Words, Tags, etc.

How is a name better than a token?

i'm not the anon you're talking to, but an actual name is 'loaded up' with prior knowledge. if you prompt z turbo for 'scarlett johansson', you'll get a temu version of her, but it's unmistakably trying to gen the correct person. you can use this prior knowledge and 'hijack' the tokens to train the model further on it, until you get enough likeness.

back in the old days, some tutorials recommended finding someone the model already knew and using that to train your lora. say you wanted to train a lora on a middle-aged white male with brown hair. then you could train a lora using the tokens 'tom cruise' or 'christian bale' or whatever, since the model wouldn't have to learn from scratch what you were trying to train.

back on topic: using 'sks woman' tries to hijack the 'woman' token, which is too generic, so you get no real prior guidance, and it uses 'sks' as a 'free' token that's not being used for anything in particular in the model, in order to teach your model by building on no prior knowledge of what your subject looks like.

personally, i am in favor of using correct names, but let's say your subject is named 'beyonce', but not the actual beyonce, just a 25 year old white model with blonde hair. would you train the lora using the token 'beyonce'? probably not, since you don't want the model to pick up on what little it knows about 'beyonce'.

in short: it's not an exact science, but in some situations it might be better to use actual names, specially if you want your lora to be able to gen multiple subjects without bleeding concepts (like genning 2girls and it's just clones of the same person since the model learned 'woman' = that subject).

How is a name better than a token?

because you can make use of what the model already associates with names, like gender and race, why throw that away? Gen a few images with just the name and if there isn't a strong association already in the model with something unwanted use it.

Using just "woman" makes it bleed harder to other subjects in the image

Captioning Images in Your Lora's Dataset

What's the best option (local VL models) for the end of 2025?

JoyCaption for NSFW, Qwen3-VL 8B for SFW. For booru tags it's still WD tagger iirc.

JoyCaption can do booru tags, but it's not that good.

Qwen3-VL, JoyCaption, Florence-2-large-PromptGen-v2.0. Or perhaps the new pixai-tagger or camiev2, or still wd-eva02/vit v3.

There are no ideal choices.

nutbutter, the creator behind bigASP, also gave us JoyCaption:

https://huggingface.co/fancyfeast

https://huggingface.co/spaces/fancyfeast/joy-caption-alpha-two = live demo

https://github.com/fpgaminer/joycaption

https://civitai.com/articles/7697/joycaption-alpha-two-release

Catbox?

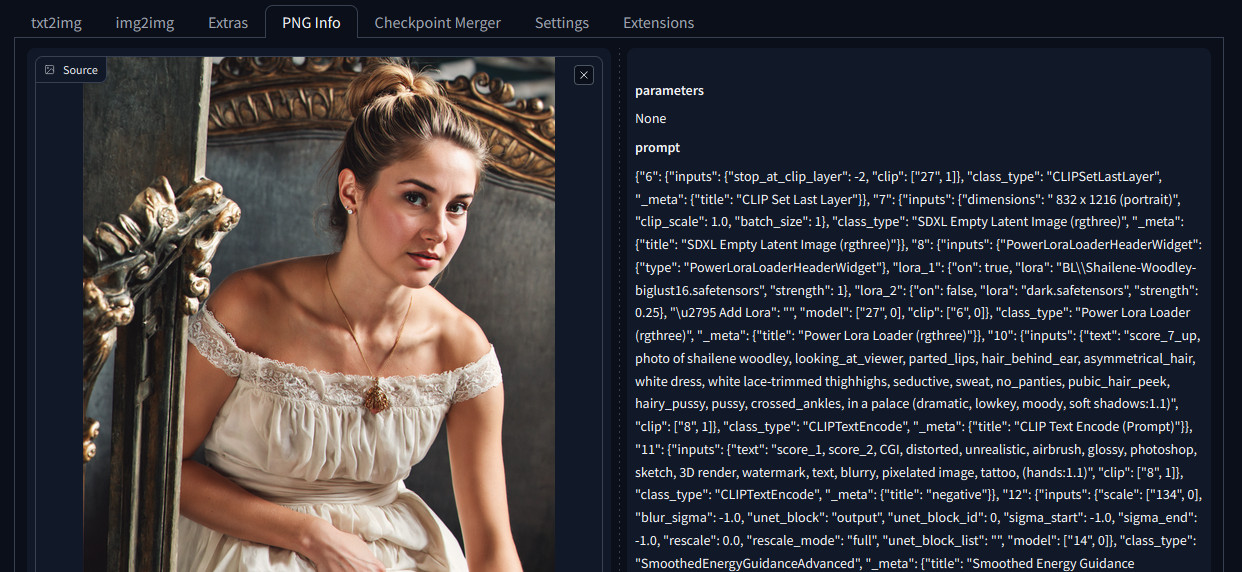

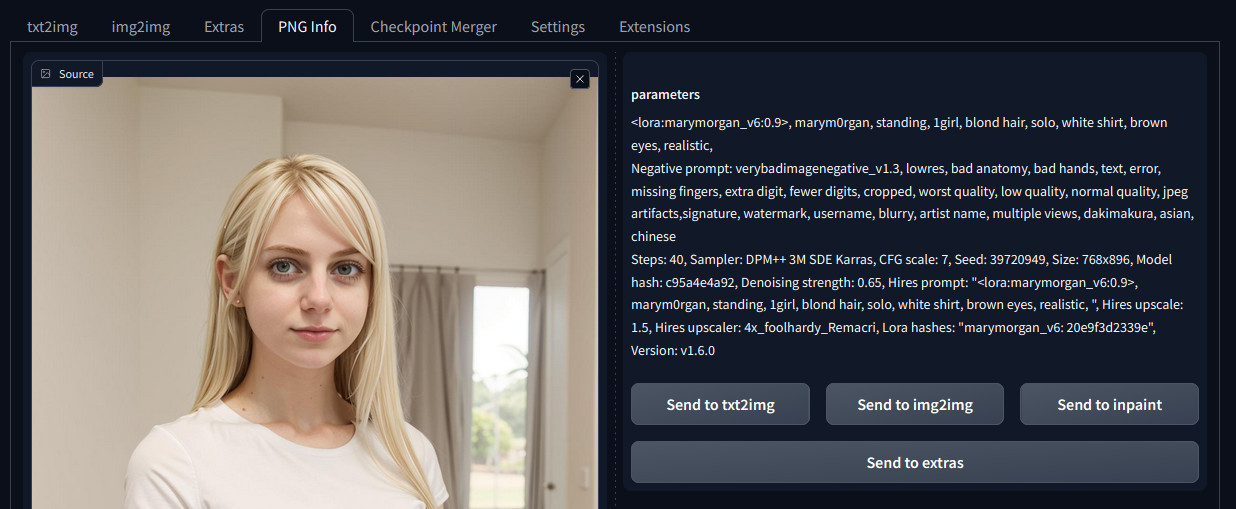

4chan and many other image hosts strip metadata, because people have doxxed themselves in the past by uploading pictures that still had GPS coordinates from their personal tracking device embedded in the metadata. https://catbox.moe/ does not strip it. Someone asking for a catbox wants that metadata so they can learn more about how the image was created than what you've already shown and said. This can be extremely helpful when you're having a problem and can't figure out why: someone else looking at your metadata or workflow might find the one stupid thing you missed. The linked example image shows a1111/forge-style metadata. You might notice it's a JPEG; forge will put metadata in both PNG and JPEG files.

Here's a link to an image with ComfyUI-style metadata. If you drag this file into forge's PNG Info tab you'll get the metadata in JSON format. If you drag it into ComfyUI you get the whole workflow with all the nodes and shit.
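If you're curious what "metadata" actually means here: in a PNG it's just text chunks sitting next to the image data, which is exactly what image hosts strip and catbox keeps. Below is a minimal stdlib-only Python sketch that dumps those chunks. It assumes the common conventions (a1111/forge write a `parameters` chunk, ComfyUI writes `prompt` and `workflow` chunks holding JSON). It only handles PNG; as far as I know the metadata forge puts in JPEGs lives in EXIF instead and isn't covered here.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text(path):
    """Return {keyword: text} from a PNG's tEXt, zTXt and iTXt chunks.

    Assumed conventions: a1111/forge store generation info under "parameters";
    ComfyUI stores its graph under "prompt" and "workflow" as JSON strings.
    """
    out = {}
    with open(path, "rb") as f:
        if f.read(8) != PNG_SIGNATURE:
            raise ValueError("not a PNG file")
        while True:
            head = f.read(8)
            if len(head) < 8:
                break  # truncated file or end of data
            length, ctype = struct.unpack(">I4s", head)  # big-endian length + 4-byte type
            data = f.read(length)
            f.read(4)  # skip the CRC
            if ctype == b"tEXt":
                key, _, text = data.partition(b"\x00")
                out[key.decode("latin-1")] = text.decode("latin-1")
            elif ctype == b"zTXt":
                key, _, rest = data.partition(b"\x00")
                # rest[0] is the compression method byte (0 = zlib)
                out[key.decode("latin-1")] = zlib.decompress(rest[1:]).decode("latin-1")
            elif ctype == b"iTXt":
                key, _, rest = data.partition(b"\x00")
                compressed = rest[0] == 1  # compression flag
                # skip the method byte, then the language tag and translated keyword
                _lang, _, rest = rest[2:].partition(b"\x00")
                _tkey, _, text = rest.partition(b"\x00")
                if compressed:
                    text = zlib.decompress(text)
                out[key.decode("latin-1")] = text.decode("utf-8")
            elif ctype == b"IEND":
                break
    return out
```

Usage would look like `print(read_png_text("00001.png").get("parameters"))`, where the filename is a placeholder for whatever image you downloaded.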