How to Create Your Own Proxy Scraper from Scratch

In today's digital world, where data is king, the ability to gather information quickly and efficiently is paramount. For marketers, proxy scrapers have become essential data extraction tools. A proxy scraper collects proxies from multiple sources, which can then be used for web scraping, automation, and improving online anonymity. Not every proxy is equal, however, and learning how to build a reliable proxy scraper from scratch can markedly boost your data gathering skills.

In this guide, we will walk you through the steps to create your own proxy scraper, covering key ideas such as proxy types, validation, and speed testing. Whether you're a seasoned programmer or a beginner looking to expand your skills, you will learn how to build a trustworthy tool that collects free proxies, verifies that they work, and checks that they meet your web scraping needs. From the differences between HTTP, SOCKS4, and SOCKS5 proxies to the top sources for premium proxies, this article provides the insights you need to improve your data extraction efforts.

Understanding Proxies

Proxy servers act as intermediaries between your device and the web, letting you send requests and receive responses without directly revealing your IP address. When you use a proxy, your connection is routed through the proxy server, which can bring benefits such as enhanced privacy, better security, and the ability to get around geo-restrictions. This makes proxies essential tools for tasks like web scraping, anonymous browsing, and accessing restricted content.

Proxy servers come in several types, including HTTP, HTTPS, and SOCKS, each serving a particular purpose. HTTP proxies handle web traffic, while SOCKS proxies are more versatile: they can relay almost any TCP traffic (and, in SOCKS5, UDP), including FTP and P2P. Understanding the differences between these types is vital for choosing the right proxy for your purposes, whether you're scraping websites or managing online accounts.
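In Python's Requests library, the proxy type is expressed as the URL scheme of the proxy address. The sketch below maps each type to a Requests `proxies` dictionary. The helper name `build_proxies` is hypothetical, treating listed "HTTPS" proxies as HTTP proxies that tunnel TLS via CONNECT is an assumption about how proxy lists label them, and the SOCKS schemes require the optional `requests[socks]` extra.

```python
from __future__ import annotations

# Map each listed proxy type to the proxy URL scheme Requests understands.
# SOCKS schemes need the optional extra: pip install "requests[socks]".
PROXY_SCHEMES = {
    "http": "http",
    "https": "http",  # assumption: "HTTPS" proxies are HTTP proxies that tunnel TLS via CONNECT
    "socks4": "socks4",
    "socks5": "socks5",
}


def build_proxies(ip: str, port: int, kind: str) -> dict[str, str]:
    """Return a Requests-style `proxies` mapping for a single proxy."""
    scheme = PROXY_SCHEMES.get(kind.lower())
    if scheme is None:
        raise ValueError(f"unsupported proxy type: {kind!r}")
    url = f"{scheme}://{ip}:{port}"
    # Route both plain-HTTP and HTTPS target URLs through the same proxy.
    return {"http": url, "https": url}
```

With Requests installed, `requests.get("https://example.com", proxies=build_proxies("203.0.113.5", 1080, "socks5"), timeout=10)` would route the request through that SOCKS5 proxy (203.0.113.5 is a documentation address, not a real proxy).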

Proxies can also be classified as free or paid. Public (free) proxies are available to anyone, but they often come with drawbacks such as slower speeds and potential security risks. Private proxies, on the other hand, require payment and offer better performance, reliability, and privacy. Knowing these differences will help you choose high-quality proxies that meet the specific requirements of your tasks, especially for activities such as data collection and automation.

Creating Your Proxy Scraper

To begin building your own scraper, you need to choose a programming language you are familiar with. Python is a common choice because of its simplicity and its many libraries that make web scraping easier. Libraries such as Requests for HTTP requests and lxml for parsing HTML are especially useful. You might also want to explore Scrapy, a robust framework designed specifically for web scraping. Choosing the right tools will simplify your coding workflow and let you concentrate on features.

Once your environment is set up, start by identifying sites that list free proxies. Many public proxy listing sites offer a wealth of data, but not all of the proxies they list are trustworthy. Your scraper should be robust enough to cope with problems such as page layout changes or CAPTCHAs. Scraping on a regular schedule helps keep your proxy list up to date. Make sure your scraper extracts the key information for each entry: IP address, port, and proxy type (HTTP, SOCKS4, SOCKS5).
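A minimal sketch of the extraction step, using only the standard library's `html.parser`. It assumes a hypothetical listing page whose table columns are IP, Port, and Type in that order; real sites differ, so the column positions and the helper names `parse_proxies` and `fetch_page` are assumptions to adapt. lxml or Scrapy selectors would do the same job more concisely.

```python
from __future__ import annotations

import re
import urllib.request
from html.parser import HTMLParser

IPV4_RE = re.compile(r"^\d{1,3}(?:\.\d{1,3}){3}$")


class ProxyTableParser(HTMLParser):
    """Collect the text of every <td> cell, grouped by <tr> row."""

    def __init__(self) -> None:
        super().__init__()
        self.rows: list[list[str]] = []
        self._row: list[str] | None = None
        self._cell: list[str] | None = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag == "td" and self._cell is not None and self._row is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def parse_proxies(html: str) -> list[tuple[str, int, str]]:
    """Return (ip, port, type) tuples, assuming the columns are IP | Port | Type."""
    parser = ProxyTableParser()
    parser.feed(html)
    proxies = []
    for row in parser.rows:
        # Header rows (<th> only) come out empty; malformed rows fail the checks.
        if len(row) < 3 or not IPV4_RE.match(row[0]) or not row[1].isdigit():
            continue
        proxies.append((row[0], int(row[1]), row[2].lower()))
    return proxies


def fetch_page(url: str, timeout: float = 10.0) -> str:
    """Download a listing page (no retries, no CAPTCHA handling)."""
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Calling `parse_proxies(fetch_page(url))` from a cron job or scheduler is one simple way to refresh the list periodically. Validating rows strictly means a layout change tends to yield an empty result rather than garbage, which is easy to detect and alert on.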

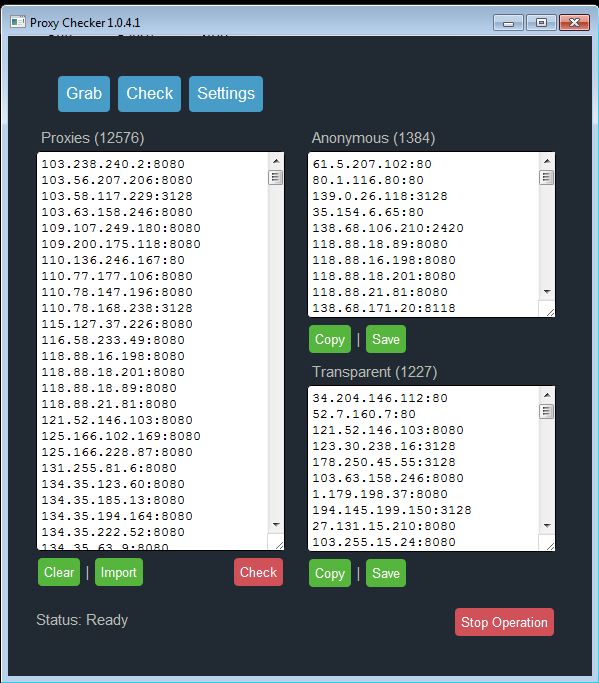

After scraping, the next step is to build a proxy checker that confirms the working status and speed of the collected proxies. It should test each proxy against a reliable endpoint and record response times. A good proxy checker will also assess each proxy's anonymity level, identifying whether it is transparent, anonymous, or elite (high-anonymity). By integrating this checker with your scraper, you can make sure that only high-quality proxies stay in your list, which greatly improves your web scraping efficiency.
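The checker below is a sketch for HTTP proxies only, built on the standard library's `urllib.request.ProxyHandler`. The test endpoint `http://httpbin.org/ip`, the 5-second timeout, and the name `check_proxy` are assumptions; any stable URL that returns a small response will do. SOCKS proxies would need a third-party package such as PySocks (for example via `requests[socks]`).

```python
from __future__ import annotations

import http.client
import time
import urllib.request

# Assumption: any stable endpoint that returns a small response will do.
TEST_URL = "http://httpbin.org/ip"


def check_proxy(ip: str, port: int, url: str = TEST_URL, timeout: float = 5.0) -> float | None:
    """Return the response time in seconds through an HTTP proxy, or None on failure."""
    proxy = f"http://{ip}:{port}"
    # Passing our own ProxyHandler replaces the default one, so environment
    # proxy settings are ignored and traffic goes only through `proxy`.
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    )
    start = time.perf_counter()
    try:
        with opener.open(url, timeout=timeout) as response:
            response.read()
    except (OSError, http.client.HTTPException, ValueError):
        # Non-2xx replies raise HTTPError; it, URLError, and timeouts are all
        # OSError subclasses. Garbled replies from broken proxies raise HTTPException.
        return None
    return time.perf_counter() - start
```

A dead proxy simply yields `None`, so `check_proxy("203.0.113.5", 8080)` can be used directly as a filter over the scraped list.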

Proxy Checking and Verification

Making sure your proxies work correctly is vital for successful web scraping and automation. A dependable proxy checker can confirm not only whether a proxy is operational but also its response time and anonymity level. The process involves sending requests through each proxy and inspecting the responses. Using tools built specifically to test and verify proxies can save you time and make your scraping process more productive.

To measure the speed of your proxies, you can write a simple script that times requests sent through each proxy. This lets you filter out slow proxies and concentrate on the ones that perform best. Many users prefer fast proxy scrapers with built-in verification, which streamlines the process. Understanding the differences between HTTP, SOCKS4, and SOCKS5 proxies also helps, since each has strengths that can affect your scraping operations.
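Because each check mostly waits on the network, timing many proxies in a thread pool is much faster than checking them one at a time. This sketch accepts any `check(ip, port)` function that returns a latency in seconds or `None`, keeps proxies under a latency cutoff, and sorts them fastest first. The name `rank_by_speed`, the 2-second cutoff, and the 20 worker threads are arbitrary assumptions.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, Optional, Tuple

Proxy = Tuple[str, int]


def rank_by_speed(
    proxies: Iterable[Proxy],
    check: Callable[[str, int], Optional[float]],
    max_latency: float = 2.0,
    workers: int = 20,
) -> List[Tuple[Proxy, float]]:
    """Time every proxy in parallel; drop dead or slow ones; fastest first."""
    proxies = list(proxies)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so results line up with `proxies`.
        latencies = list(pool.map(lambda p: check(p[0], p[1]), proxies))
    kept = [
        (proxy, latency)
        for proxy, latency in zip(proxies, latencies)
        if latency is not None and latency <= max_latency
    ]
    return sorted(kept, key=lambda item: item[1])
```

Threads suit this job because the work is I/O-bound; the worker count mainly limits how many simultaneous connections you open.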

Testing proxy anonymity is also essential if you want your web scraping to go undetected. Tools that check whether a proxy reveals your real IP address help protect your privacy. You can also use an online proxy list generator to create and validate high-quality proxies tailored to your scraping needs. By regularly verifying and updating your proxy list, you will improve your web scraping efficiency and reduce the risk of being blocked or flagged.
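One common way to classify anonymity is to send a request through the proxy to a server that echoes back the headers it received (httpbin.org/headers is one such service), then compare them with your real IP address obtained from a direct request. The sketch below covers only the classification step. The function name is hypothetical, the list of proxy-revealing headers is an assumption covering the usual suspects rather than an exhaustive standard, and only IPv4 addresses are matched.

```python
from __future__ import annotations

import re
from typing import Mapping

IPV4_RE = re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b")

# Assumption: headers that proxies commonly add when forwarding a request.
PROXY_HEADERS = ("via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection")


def classify_anonymity(real_ip: str, echoed_headers: Mapping[str, str]) -> str:
    """Classify a proxy from the headers a test server saw on a proxied request."""
    headers = {name.lower(): value for name, value in echoed_headers.items()}
    leaked_ips = {ip for value in headers.values() for ip in IPV4_RE.findall(value)}
    if real_ip in leaked_ips:
        return "transparent"  # your real IP reached the server
    if any(name in headers for name in PROXY_HEADERS):
        return "anonymous"  # IP hidden, but the proxy reveals itself
    return "elite"  # no trace of your IP or of a proxy
```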

Paid vs Free Proxies

When considering proxies for web scraping, one of the first decisions is whether to use free or paid proxies. Free proxies are appealing because they cost nothing; however, they often come with significant drawbacks. Many are unreliable, slow, and prone to going offline. They are also frequently oversubscribed, so performance can degrade severely when many users share the same proxy. Free proxies may also pose security risks, since they can be operated or monitored by malicious parties.

Paid proxies, on the other hand, offer several advantages that can greatly improve your web scraping. They typically provide faster and more stable connections, so your scraping tasks run smoothly. Paid providers often include features such as dedicated IP addresses, stronger anonymity, and higher levels of security. Many reputable providers also offer geographic diversity, letting you scrape data from different regions with less risk of being banned.

Ultimately, the choice between free and paid proxies depends on your specific requirements and goals. If your web scraping tasks are infrequent or low-stakes, free proxies may suffice. For ongoing or critical applications, however, investing in paid proxies can significantly improve both productivity and security, making them a worthwhile consideration for serious web scraping jobs.

Employing Proxies in Automation

In today's digital landscape, automation is crucial for tasks such as data collection and web scraping. Proxies play a vital role here by acting as intermediaries between your automated software and the sites it interacts with. By routing traffic through proxies, you can mask your real IP address, allowing you to bypass geo-restrictions and avoid being blocked by sites that limit access based on request frequency or location.
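Spreading requests across a pool of proxies is the usual way to stay under per-IP rate limits. The hypothetical `ProxyRotator` class below is a minimal round-robin sketch: it cycles through the pool and skips any proxy your code has marked as failed. Production rotators often add cooldowns or weighting, which are left out here.

```python
from __future__ import annotations

import itertools
from typing import Iterable, Set, Tuple

Proxy = Tuple[str, int]


class ProxyRotator:
    """Round-robin over a proxy pool, skipping proxies marked as failed."""

    def __init__(self, proxies: Iterable[Proxy]) -> None:
        self._pool = list(dict.fromkeys(proxies))  # de-duplicate, keep order
        self._cycle = itertools.cycle(self._pool)
        self._bad: Set[Proxy] = set()

    def mark_bad(self, proxy: Proxy) -> None:
        """Stop handing out a proxy, e.g. after a ban or timeout."""
        self._bad.add(proxy)

    def get(self) -> Proxy:
        """Return the next usable proxy in rotation."""
        # One full pass over the pool is enough to find a good proxy if any exists.
        for _ in range(len(self._pool)):
            proxy = next(self._cycle)
            if proxy not in self._bad:
                return proxy
        raise RuntimeError("no working proxies left in the pool")
```

In a scraping loop you would call `rotator.get()` before each request and `rotator.mark_bad(proxy)` when a request through that proxy fails.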

When using proxies for automation, it is essential to choose the right type. HTTP proxies suit web scraping over standard HTTP requests, while SOCKS proxies are more versatile and can handle protocols beyond HTTP. The difference between SOCKS4 and SOCKS5 matters: SOCKS5 adds features such as authentication and UDP support, making it the better choice for complex automated tasks.
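With Requests (plus the `requests[socks]` extra), SOCKS5 authentication is passed as credentials inside the proxy URL. The hypothetical helper below builds such a URL and percent-encodes the username and password so characters like `@` or `:` do not break it. The `socks5h` scheme asks the proxy to resolve hostnames, so DNS lookups do not happen on your machine; whether you want that depends on your setup.

```python
from __future__ import annotations

from urllib.parse import quote


def socks5_url(
    host: str,
    port: int,
    user: str | None = None,
    password: str | None = None,
    remote_dns: bool = False,
) -> str:
    """Build a SOCKS5 proxy URL, optionally with username/password authentication."""
    # "socks5h" tells Requests/PySocks to let the proxy resolve hostnames.
    scheme = "socks5h" if remote_dns else "socks5"
    auth = ""
    if user is not None:
        # Percent-encode so characters such as "@" or ":" cannot break the URL.
        auth = quote(user, safe="")
        if password is not None:
            auth += ":" + quote(password, safe="")
        auth += "@"
    return f"{scheme}://{auth}{host}:{port}"
```

For example, `url = socks5_url("203.0.113.9", 1080, "bob", "p@ss")` followed by `requests.get("https://example.com", proxies={"http": url, "https": url}, timeout=10)`.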

To get the most out of your automation, consider using a proxy checker. It confirms that the proxies you use are working and are not suffering from slow performance or high latency, either of which can stall your automation workflow. A fast proxy scraper can help you find high-quality proxies quickly, giving you a smooth scraping experience without interruptions from blocked requests or slow responses.

Best Tools for Proxy Scraping

If you are developing a custom proxy scraper, taking advantage of existing tools can significantly improve your efficiency. Tools like ProxyHunter offer powerful features that let users quickly gather proxy lists from multiple sources. Such platforms often include extras like proxy verification and speed testing, helping ensure that the proxies you gather are both reliable and fast. Using these tools can save time and streamline your scraping process, enabling effective automation of web scraping tasks.

Another good option is to use free proxy scrapers available online. These tools not only give users access to a wide selection of free proxies but also often include built-in checks for the speed and anonymity of each proxy. When choosing a free proxy scraper, look for options that are updated regularly and have a good reputation in the community, as this can considerably affect the quality and reliability of the proxies you get.

For users interested in bespoke solutions, Python libraries such as Scrapy and lxml can be incredibly useful. These libraries enable developers to create tailored proxy scrapers that fit their specific needs. By writing scripts that leverage such tools, users can effectively scrape proxies from multiple websites, verify their functionality, and build their own proxy lists. This approach not only aids in data extraction but also provides an opportunity to learn and expand your programming skills while working on hands-on applications.
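When a scraper pulls from several sites, the same proxy often appears on more than one list. A small sketch of the merge-and-save step follows; the helper names `merge_sources` and `save_proxy_list` are hypothetical, and the plain-text `ip:port` output format is an assumption.

```python
from __future__ import annotations

from pathlib import Path
from typing import Iterable, List, Tuple

Proxy = Tuple[str, int]


def merge_sources(*sources: Iterable[Proxy]) -> List[Proxy]:
    """Combine proxy lists from several sites, dropping duplicates but keeping order."""
    seen = set()
    merged = []
    for source in sources:
        for proxy in source:
            if proxy not in seen:
                seen.add(proxy)
                merged.append(proxy)
    return merged


def save_proxy_list(path: str | Path, proxies: Iterable[Proxy]) -> int:
    """Write one ip:port per line and return how many proxies were written."""
    lines = [f"{ip}:{port}" for ip, port in proxies]
    Path(path).write_text("\n".join(lines) + ("\n" if lines else ""), encoding="utf-8")
    return len(lines)
```

Keeping first-seen order means proxies from the source you trust most (listed first) stay at the top of the saved file.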

Future Trends in Proxy Scraping

As internet regulations evolve and web scraping becomes more widespread, proxy scraping solutions are expected to advance significantly. Automation will become increasingly sophisticated, enabling users to collect and verify proxy lists quickly without extensive manual effort. Better algorithms will improve the efficiency and precision of proxy scrapers, making it easier to find high-quality proxies suited to purposes such as web scraping and information retrieval.

Privacy and safety concerns will drive the need for more powerful proxy verification solutions and anonymity testing. Users will need to evaluate whether proxies can effectively conceal their identity and protect sensitive data. Future improvements may lead to better incorporation of machine learning techniques, which can help distinguish between reliable and questionable proxies based on historical performance and behavioral data.
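Machine-learning models are beyond a short example, but a simple statistical baseline already captures the idea of scoring proxies on historical performance: an exponentially weighted success rate, in which recent checks count more than old ones. This is a swapped-in technique, not an ML method, and the class name, the `alpha` smoothing factor, the starting score, and the 0.7 reliability threshold are all arbitrary assumptions.

```python
class ReliabilityScore:
    """Exponentially weighted success rate for one proxy; recent checks count more."""

    def __init__(self, alpha: float = 0.3, initial: float = 0.5) -> None:
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha  # weight given to the newest check
        self.score = initial  # neutral prior before any checks

    def update(self, success: bool) -> float:
        """Fold in one check result (True = proxy worked) and return the new score."""
        self.score = self.alpha * float(success) + (1 - self.alpha) * self.score
        return self.score

    def is_reliable(self, threshold: float = 0.7) -> bool:
        return self.score >= threshold
```

A larger `alpha` reacts faster to a proxy going bad but is also more easily swayed by a single unlucky timeout.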

Additionally, the growth of IoT devices and mobile applications will create new avenues for proxy scraping and checking. As more devices connect to the internet, the need for effective proxy management tools will grow. This change may result in specialized proxy systems tailored for specific applications, such as SEO tools and automated tasks, further expanding the proxy ecosystem in the coming years.