Web Scraping Like a Pro: Harnessing Free Proxy Tools

In the digital age, data scraping has become a vital way to gather information for analysis. Whether you are collecting insights for market research, competitor analysis, or content aggregation, proxy servers can significantly enhance your scraping capabilities. However, navigating the world of proxy solutions can be tricky, given the sheer number of options available. That is where free proxy resources come into play, giving you essential tools for extracting information without incurring high costs.

A proxy scraper or checker not only helps you find suitable proxy servers but also confirms that they work well for your needs. With the right tools, you can quickly build a reliable proxy list, check proxy speeds, and test for anonymity. As you set out to scrape the web like a pro, we will explore the best free proxy scraping tools, along with tips on getting the most out of them. Whether you are a beginner or a seasoned pro, knowing how to use these tools can elevate your data scraping.

# Understanding Proxies: HTTP vs SOCKS

Proxies act as intermediaries between your computer and the internet: your web requests are routed through the proxy, which forwards them on your behalf. Two popular types are HTTP and SOCKS proxies, each with its own capabilities and uses. HTTP proxies are designed primarily for web traffic: they handle requests for web pages, can cache content, and can tunnel HTTPS connections using the CONNECT method. They are often used for web scraping, particularly for sites that expect standard web requests.

SOCKS proxies, by contrast, are more versatile and can carry many kinds of internet traffic, including HTTP, FTP, and others. They operate at a lower level of the OSI model than HTTP proxies, which means they relay data without interpreting it. This makes SOCKS proxies suitable for tasks that require anonymity or need to get around network restrictions, such as peer-to-peer file sharing or online gaming. When it comes to content scraping, SOCKS proxies can be helpful for advanced workflows that involve several kinds of data.

Choosing between HTTP and SOCKS proxies depends on your specific needs. If your work is mainly web scraping and you want good performance at lower request volumes, HTTP proxies may be the better choice. If you handle a range of traffic types or need extra privacy, SOCKS proxies may be preferable. Understanding these distinctions helps you choose the right type of proxy for your web scraping.
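In code, the choice between HTTP and SOCKS mostly comes down to the scheme in the proxy URL. The sketch below assumes you are using the Python `requests` library. It builds the `proxies` mapping that library accepts, sending both HTTP and HTTPS target URLs through one proxy. The helper name `proxy_config` and the sample addresses are hypothetical. SOCKS URLs only work with `requests` once its optional SOCKS extra is installed. The `socks5h` scheme asks the proxy, rather than your machine, to resolve hostnames.

```python
from urllib.parse import urlsplit

# Proxy URL schemes that requests understands (the SOCKS ones need requests[socks]).
SUPPORTED_SCHEMES = {"http", "https", "socks4", "socks4a", "socks5", "socks5h"}


def proxy_config(proxy_url: str) -> dict:
    """Return a requests-style proxies mapping that routes both HTTP and
    HTTPS target URLs through one proxy. Hypothetical helper."""
    parts = urlsplit(proxy_url)
    if parts.scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {parts.scheme!r}")
    if parts.hostname is None or parts.port is None:
        raise ValueError("proxy URL must include a host and a port")
    # Keys name the scheme of the *target* URL; values name the proxy to use.
    return {"http": proxy_url, "https": proxy_url}


# Example (needs network access; SOCKS also needs `pip install requests[socks]`):
# import requests
# requests.get("https://example.com/", proxies=proxy_config("http://203.0.113.5:8080"), timeout=10)
# requests.get("https://example.com/", proxies=proxy_config("socks5h://203.0.113.5:1080"), timeout=10)
```

Swapping one scheme for the other is usually the only change needed to move a scraper from an HTTP proxy to a SOCKS proxy.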

# Finding Free Proxy Sources

When it comes to web scraping, access to trustworthy proxies can significantly improve your data gathering. One of the best ways to find free proxy sources is to explore online forums and communities dedicated to data extraction. Sites like Reddit, Stack Overflow, and specialized scraping forums often have threads where users share lists of free proxies they have used. Engaging with these communities can also give you insight into which proxies are most reliable and how best to use them.

Another effective way to source free proxies is through dedicated websites that publish proxy lists. These sites curate and regularly update lists of working proxies, letting users sort by type, speed, and anonymity level. Popular free proxy sites often include tools to check proxy status and performance, so you can be sure you are working with the best options. Check these lists regularly, as the availability of free proxies can change quickly.

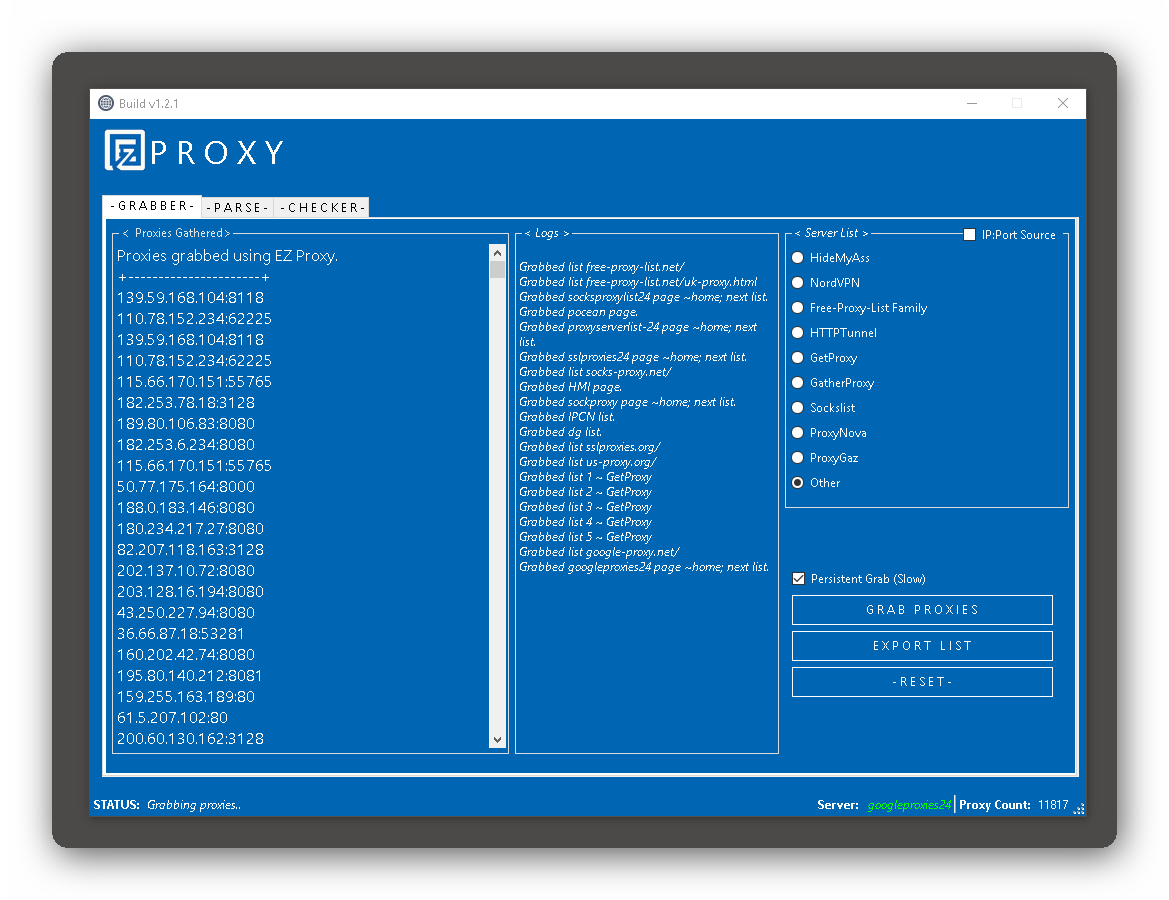

Lastly, software designed for proxy gathering can streamline the search for good free sources. Tools like ProxyStorm.com not only help scrape proxies from the web but also provide verification services to ensure that the proxies remain working and fast. By combining these resources with your own research, you can build a robust proxy list that supports your scraping efficiently.
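At its core, a proxy scraper searches downloaded pages for address patterns. The sketch below assumes the page lists proxies in `host:port` form. That is a common layout, but some sites put the host and port in separate table cells, which this regex would miss. It extracts IPv4 `host:port` pairs, rejects impossible octets and ports, and removes duplicates. The names `PROXY_PATTERN` and `extract_proxies` are hypothetical.

```python
import ipaddress
import re

# Matches "IPv4:port" candidates such as "203.0.113.5:8080" anywhere in text.
PROXY_PATTERN = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):(\d{1,5})\b")


def extract_proxies(text: str) -> list:
    """Pull unique, well-formed IPv4 host:port pairs out of raw page text,
    preserving the order in which they first appear."""
    seen = set()
    found = []
    for host, port in PROXY_PATTERN.findall(text):
        try:
            ipaddress.IPv4Address(host)  # rejects octets above 255
        except ValueError:
            continue
        if not 1 <= int(port) <= 65535:  # rejects impossible port numbers
            continue
        proxy = f"{host}:{port}"
        if proxy not in seen:
            seen.add(proxy)
            found.append(proxy)
    return found


sample = "<li>203.0.113.5:8080</li><li>198.51.100.7:3128</li> 203.0.113.5:8080 999.1.1.1:80"
print(extract_proxies(sample))
```

Running the extractor over several sources and concatenating the results gives a raw list. That list still needs testing before you use it.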

# Scraping and Testing Proxy Servers

In web scraping, using proxies properly is essential for maintaining privacy and working around the usage limits that websites may impose. A reliable proxy scraper lets you quickly collect proxies from many sources. This usually involves free proxy scraping tools that can extract many proxies at once, so you can build a comprehensive proxy list tailored to your scraping needs. Maintaining a varied pool of proxies, including both HTTP and SOCKS types, makes your scraping operation more resilient and efficient.

Once you have collected a list of proxies, the next critical step is to test whether they work and how reliable they are. A good proxy checker can verify whether each proxy is live, measure its response time, and determine its anonymity level. These tests matter because dead or slow proxies can severely hurt your scraping efficiency. Look for tools that check proxies quickly and can test many of them in parallel. Knowing how to check proxy speed and test for anonymity ensures that you only use proxies that meet your needs.
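A first-pass liveness check can test many proxies in parallel using only the standard library. The sketch below has one limitation: it only confirms that the proxy's port accepts a TCP connection and records how long that took. It does not prove that the proxy forwards traffic. A fuller check sends a real request through the proxy. The function names, the 3-second timeout, and the worker count are assumptions chosen for illustration.

```python
import socket
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Optional


def tcp_latency(proxy: str, timeout: float = 3.0) -> Optional[float]:
    """Return the seconds needed to open a TCP connection to host:port,
    or None if the address is malformed, refused, or times out."""
    host, _, port = proxy.rpartition(":")
    start = time.perf_counter()
    try:
        with socket.create_connection((host, int(port)), timeout=timeout):
            return time.perf_counter() - start
    except (OSError, ValueError):
        return None


def check_all(proxies: list, timeout: float = 3.0, workers: int = 50) -> dict:
    """Check many proxies concurrently and keep only reachable ones,
    mapped to their connect latency in seconds."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(lambda p: tcp_latency(p, timeout), proxies)
        return {p: t for p, t in zip(proxies, results) if t is not None}
```

Threads suit this job because each check spends nearly all its time waiting on the network.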

Finding high-quality proxies is an ongoing challenge for any web scraper. Free proxies can be helpful, but they come with drawbacks, including frequent downtime and shared usage that can compromise your privacy. It is important to distinguish between private and public proxies, as public proxies may not deliver reliable performance. By pairing trustworthy proxy sources with capable scraping and testing tools, you can streamline your web scraping, reduce the likelihood of detection, and improve your overall data extraction strategy.

# Best Proxy Tools for Web Scraping

In web scraping, having the right proxy tools can make a substantial difference in efficiency and performance. One popular choice is ProxyStorm, which offers a robust platform for accessing premium proxies suited to scraping tasks. With support for both HTTP and SOCKS protocols, ProxyStorm caters to diverse scraping needs while ensuring high speed and reliability. It also offers a user-friendly interface that simplifies setting up proxies for different scraping applications.

Another key tool is a free proxy scraper, which lets users gather proxies from many sources quickly. These tools can generate large proxy lists that can be sorted by latency and anonymity, making it easier to find suitable options for your scraping projects. A fast proxy scraper helps you find the most responsive proxies, which can significantly affect the overall performance of your data extraction.

To confirm that the gathered proxies actually work, a reliable proxy checker is crucial. A good checker not only tests whether each proxy is up but also measures its speed and anonymity. This lets users filter their proxy lists and identify the most effective proxies for web scraping. By combining a proxy scraper with a robust checker, you can streamline your scraping operations and make sure you are always using high-quality proxies.

# Assessing Proxy Anonymity and Speed

When using proxies for web scraping, it is important to check both their anonymity and their speed. An anonymous proxy hides your real IP address from the target website, so you can browse without revealing your identity. To confirm that a proxy provides anonymity, you can use tools designed to assess anonymity levels. These tools typically label proxies as elite, anonymous, or transparent, indicating how much information leaks while you use the proxy.
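Anonymity checkers usually send a request through the proxy to a "judge" page that echoes back the headers it received, then inspect those headers. The sketch below applies one common heuristic for the three labels. The labels are a widely used convention rather than a formal standard. The list of revealing header names is an assumption, and the function name `classify_anonymity` is hypothetical. You would capture `received_headers` from a judge endpoint you control.

```python
import re

# Header names that commonly reveal a proxy or the client behind it (assumed list).
PROXY_HEADERS = ("via", "x-forwarded-for", "forwarded", "x-real-ip",
                 "proxy-connection", "client-ip")


def classify_anonymity(received_headers: dict, real_ip: str) -> str:
    """Label a proxy 'transparent', 'anonymous', or 'elite' from the headers
    a judge server saw when the request arrived through that proxy."""
    headers = {name.lower(): value for name, value in received_headers.items()}
    # Split values like "198.51.100.23, 203.0.113.9" or "for=198.51.100.23;proto=http"
    # into tokens so one IP is never mistaken for a prefix of another.
    tokens = {tok.strip('"[]')
              for value in headers.values()
              for tok in re.split(r"[\s,;=]+", value)}
    if real_ip in tokens:
        return "transparent"  # your real address leaked to the target
    if any(name in headers for name in PROXY_HEADERS):
        return "anonymous"    # your IP is hidden, but the proxy announces itself
    return "elite"            # no trace of either the proxy or your IP
```

Tokenizing the header values, rather than doing a plain substring search, avoids false matches such as `198.51.100.23` inside `198.51.100.230`.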

Speed is another critical factor when choosing proxies for web scraping. A slow proxy can stall your scraping process, causing long delays and potential data loss. To measure proxy speed, you can use dedicated proxy checkers that compare the response times of many proxies. This information is essential when building a dependable proxy list for consistent scraping performance.
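Response time is best measured with a complete request sent through the proxy, not just a connection attempt. The sketch below uses only the standard library and covers HTTP proxies given as `host:port`; SOCKS would need a third-party library. It times a full GET, including the response body, over several attempts and reports the median. Using the median keeps a single slow attempt from skewing the result. The default target `http://example.com/` and the function name `response_time` are assumptions. In practice you would time requests against the site you plan to scrape.

```python
import http.client
import statistics
import time
import urllib.request
from typing import Optional


def response_time(proxy: str, url: str = "http://example.com/",
                  attempts: int = 3, timeout: float = 5.0) -> Optional[float]:
    """Median seconds for a full HTTP GET routed through an HTTP proxy
    given as host:port, or None if any attempt fails."""
    # Passing an explicit ProxyHandler makes urllib ignore proxy env variables.
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": f"http://{proxy}"}))
    timings = []
    for _ in range(attempts):
        start = time.perf_counter()
        try:
            with opener.open(url, timeout=timeout) as resp:
                resp.read()  # include the body transfer in the timing
        except (OSError, http.client.HTTPException):
            return None  # refused, timed out, HTTP error, or malformed reply
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)
```

Treating any failed attempt as disqualifying is deliberately strict. For free proxies, intermittent failures are usually a reason to drop the proxy.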

Combining anonymity checks with speed tests helps users find high-quality proxies suited to their needs. Many free proxy scrapers include filters that let users narrow down proxies by speed and anonymity. With these filters, web scrapers can make sure they are using the most efficient proxies, balancing speed against the level of anonymity their tasks require. Testing proxies up front in this way can significantly improve the efficiency of web scraping operations.
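The filtering step itself is straightforward once each proxy has been measured. The sketch below assumes your checker produced a latency in seconds and one of the three anonymity labels for each proxy. It keeps the proxies that pass both thresholds and sorts the survivors fastest first. The `ProxyResult` record, the `shortlist` function, and the default thresholds are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable

# Higher rank = less information leaked (a labeling convention, not a standard).
ANONYMITY_RANK = {"transparent": 0, "anonymous": 1, "elite": 2}


@dataclass(frozen=True)
class ProxyResult:
    address: str     # "host:port"
    latency: float   # seconds, as measured by your own checker
    anonymity: str   # "transparent", "anonymous", or "elite"


def shortlist(results: Iterable[ProxyResult], max_latency: float = 1.0,
              min_anonymity: str = "anonymous") -> list:
    """Keep proxies that are fast enough and anonymous enough, fastest first."""
    floor = ANONYMITY_RANK[min_anonymity]
    keep = [r for r in results
            if r.latency <= max_latency
            and ANONYMITY_RANK.get(r.anonymity, -1) >= floor]  # unknown labels fail
    return sorted(keep, key=lambda r: r.latency)
```

Raising `min_anonymity` to `"elite"` trades a smaller pool for less leakage. Loosening `max_latency` does the reverse for speed.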

# Automation and SEO Tools with Proxies

Proxies play a vital role in automating tasks and making SEO tools more effective. When used with data extraction software or automation scripts, proxy servers help you send large numbers of requests without getting banned. By routing traffic through different IP addresses, users can run searches or extract information from many sites without triggering alerts. This capability is essential for companies that rely on data collection and analysis to inform their marketing strategy.
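Routing traffic through different IP addresses usually means rotating through a proxy pool. The sketch below uses round-robin rotation, which is only one simple policy; random choice or weighting by measured latency are common alternatives. It skips proxies you have marked as banned and is safe to share across threads. The class name `ProxyRotator` and its `get`/`ban` methods are hypothetical.

```python
import itertools
import threading


class ProxyRotator:
    """Hand out proxies round-robin, skipping any marked as banned."""

    def __init__(self, proxies):
        self._pool = list(dict.fromkeys(proxies))  # drop duplicates, keep order
        self._banned = set()
        self._cycle = itertools.cycle(self._pool)
        self._lock = threading.Lock()  # shared safely between worker threads

    def get(self) -> str:
        with self._lock:
            # At most one full lap around the pool looking for a usable proxy.
            for _ in range(len(self._pool)):
                proxy = next(self._cycle)
                if proxy not in self._banned:
                    return proxy
        raise RuntimeError("no usable proxies left")

    def ban(self, proxy: str) -> None:
        """Stop handing out a proxy, e.g. after it gets blocked or times out."""
        with self._lock:
            self._banned.add(proxy)
```

A scraper would call `get()` before each request and `ban()` whenever a proxy returns a block page or keeps failing.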

Many SEO tools support proxies to help ensure accurate data and a complete picture. For example, rank tracking and keyword research tools often use proxies to fetch search engine results from different locations, so the results are not limited by the user's own region. This lets marketers assess their website's performance worldwide and study competitors' strategies effectively. Building proxies into these tools not only saves time but also provides a competitive edge in a crowded digital marketplace.

When evaluating proxies for automation and SEO, it is crucial to choose between private and public proxies based on your needs. Private proxies offer better reliability and performance, making them suitable for high-volume operations, while shared proxies are often slower and can be less secure. Understanding the differences between HTTP, SOCKS4, and SOCKS5 proxies also helps users pick the most appropriate type for their automated tasks, optimizing both efficiency and anonymity.
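One concrete difference between SOCKS4 and SOCKS5 shows up in the CONNECT request a client sends. A SOCKS4 request carries the destination only as an IPv4 address plus an optional user ID. A SOCKS5 request, as defined in RFC 1928, can carry an IPv4 address, an IPv6 address, or a hostname for the proxy to resolve. The sketch below only builds the request bytes and does not perform a full handshake. In SOCKS5 the greeting is sent first, and the CONNECT request goes out only after the proxy replies. Reading replies and username/password authentication (RFC 1929) are left out. The function names are hypothetical.

```python
import ipaddress


def socks4_connect(ip: str, port: int, user_id: bytes = b"") -> bytes:
    """SOCKS4 CONNECT request; the destination must be an IPv4 address."""
    addr = ipaddress.IPv4Address(ip)  # raises ValueError for hostnames
    # VN=4, CD=1 (connect), 2-byte port, 4-byte IP, user ID, NUL terminator.
    return b"\x04\x01" + port.to_bytes(2, "big") + addr.packed + user_id + b"\x00"


# SOCKS5 greeting: version 5, one auth method offered, method 0x00 (no auth).
SOCKS5_GREETING_NO_AUTH = b"\x05\x01\x00"


def socks5_connect(host: str, port: int) -> bytes:
    """SOCKS5 CONNECT request (RFC 1928) for an IPv4/IPv6 address or hostname."""
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        addr = None
    if addr is None:
        name = host.encode("idna")
        if len(name) > 255:
            raise ValueError("hostname too long for SOCKS5")
        dst = b"\x03" + bytes([len(name)]) + name  # ATYP 3: the proxy resolves it
    elif addr.version == 4:
        dst = b"\x01" + addr.packed                # ATYP 1: IPv4
    else:
        dst = b"\x04" + addr.packed                # ATYP 4: IPv6
    # VER=5, CMD=1 (connect), RSV=0, then address and 2-byte port.
    return b"\x05\x01\x00" + dst + port.to_bytes(2, "big")
```

The hostname form (`ATYP 3`) is what lets SOCKS5 proxies resolve DNS on your behalf, a capability SOCKS4 lacks without the SOCKS4a extension.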

# Private vs Public Proxies: Advantages and Disadvantages

Private proxies are dedicated to a single user, providing better security and confidentiality. They typically offer better performance, faster speeds, and greater reliability than public proxies. Because only one user has access to the proxy, the likelihood of it being flagged or blocked by websites is significantly reduced. In addition, private proxies often come with customer support, making it easier to troubleshoot any issues that arise.
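Many private proxy providers authenticate clients with a username and password embedded in the proxy URL; some use IP allow-listing instead. The sketch below assumes credential-based access. It builds such a URL and percent-encodes the credentials, so that characters like `@` or `:` in a password do not break URL parsing. The function name `authenticated_proxy_url` and the sample credentials are hypothetical.

```python
from urllib.parse import quote, unquote, urlsplit


def authenticated_proxy_url(scheme: str, host: str, port: int,
                            user: str, password: str) -> str:
    """Build scheme://user:password@host:port with percent-encoded credentials."""
    # safe="" makes quote() encode '/', '@', ':' and other reserved characters.
    return f"{scheme}://{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}"


url = authenticated_proxy_url("http", "203.0.113.5", 8080, "alice", "p@ss:word")
print(url)                               # http://alice:p%40ss%3Aword@203.0.113.5:8080
print(unquote(urlsplit(url).password))   # p@ss:word
```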

Public (shared) proxies, on the other hand, are free and open to everyone, which makes them a common choice for users who want to extract data without incurring costs. However, this openness comes with substantial downsides. Public proxies are frequently congested, resulting in slower speeds and more downtime. They also pose a greater risk to user safety, as malicious actors can exploit them, potentially leading to data breaches. Furthermore, the anonymity they offer can be questionable, which undermines web scraping effectiveness.

Choosing between private and public proxies ultimately depends on your needs and budget. If you need consistent performance and strong security for sensitive tasks, private proxies are the better option. If you are just experimenting with web scraping or have minimal requirements, public proxies can meet your needs without any financial investment. Understanding these trade-offs is crucial for making an informed decision that fits your web scraping goals.