Understanding Proxy Types: Getting the Most Out of SOCKS4 and SOCKS5

In today's digital landscape, browsing efficiently often requires proxy services that improve anonymity, performance, and convenience. Among the many types of proxies available, SOCKS4 and SOCKS5 stand out for their versatility and effectiveness. Understanding these proxy types can make a big difference in your online work, whether you are scraping data, doing SEO research, or simply trying to improve your online anonymity.

As more people turn to proxy tools, terms like proxy scraper, proxy checker, and proxy verification tool become central to the search for dependable proxies. With so many free and paid options, understanding the differences between HTTP, SOCKS4, and SOCKS5 proxies is essential for choosing the right solution for web scraping and automation. In this article, we will look at how SOCKS proxies work, explain how to scrape and validate proxies effectively, and share suggestions for some of the best tools currently available.

Overview of Proxy Types

Proxy servers act as intermediaries between clients and web servers, letting users hide their IP addresses and access information more privately. Among the different types of proxies available, SOCKS4 and SOCKS5 have become especially popular because they can carry many kinds of network traffic. SOCKS proxies operate at a lower level than HTTP proxies: instead of interpreting web requests, they simply relay TCP connections (and, with SOCKS5, UDP datagrams), so they work with almost any application protocol. This gives users a great deal of flexibility in what they can do online.

The main difference between SOCKS4 and SOCKS5 is the extra functionality that SOCKS5 provides. SOCKS5 supports authentication, which lets the proxy operator restrict who can use it. It also supports UDP traffic, which benefits applications such as online gaming and video streaming. These additions make SOCKS5 the more attractive option for users who need both speed and anonymity, particularly in data-intensive tasks such as web scraping.
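To make the authentication difference concrete, here is a minimal Python sketch of the first exchange in a SOCKS5 session, as described in RFC 1928. The client lists the authentication methods it supports, and the server picks one. The function names are illustrative and are not part of any library.

```python
from __future__ import annotations

# Authentication method codes from RFC 1928, section 3.
NO_AUTH = 0x00
USERNAME_PASSWORD = 0x02
NO_ACCEPTABLE_METHODS = 0xFF


def build_greeting(methods: list[int]) -> bytes:
    """Client greeting: VER=5, NMETHODS, then one byte per offered method."""
    if not 1 <= len(methods) <= 255:
        raise ValueError("offer between 1 and 255 methods")
    return bytes([0x05, len(methods), *methods])


def parse_method_selection(reply: bytes) -> int:
    """Server reply: VER=5 followed by the single method the server chose."""
    if len(reply) != 2 or reply[0] != 0x05:
        raise ValueError("not a SOCKS5 method-selection reply")
    if reply[1] == NO_ACCEPTABLE_METHODS:
        raise ConnectionError("proxy rejected every offered method")
    return reply[1]
```

For example, `build_greeting([NO_AUTH, USERNAME_PASSWORD])` produces the four bytes `05 02 00 02`, which offer both "no authentication" and username/password. A SOCKS4 client has no equivalent step.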

When using proxies for data extraction, it's important to evaluate where they come from and how good they are. Free proxies may seem attractive because they cost nothing, but they are often unreliable and slower than paid options. Premium private proxies typically offer better speed and anonymity. That makes them the preferred choice for dedicated web scrapers and for businesses that want to automate data gathering.

Features of SOCKS4 vs. SOCKS5

SOCKS4 and SOCKS5 are both protocols for relaying network traffic through a proxy, but they have distinct features that suit different needs. SOCKS4 handles only TCP (Transmission Control Protocol) connections and is relatively simple in design. It has no password authentication, only an optional user ID field, so anyone who can reach the proxy can typically use it without credentials. This makes it easy to use but poses a serious security risk, especially where data privacy matters.
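The sketch below builds a SOCKS4 CONNECT request using the request layout from the original SOCKS4 protocol description. It assumes Python 3. The destination must already be a numeric IPv4 address, because hostname support only arrived with the later SOCKS4a extension. The user ID is a plain identifier, not a password. Both helper names are hypothetical.

```python
import ipaddress
import struct


def build_socks4_connect(ip: str, port: int, user_id: str = "") -> bytes:
    """SOCKS4 CONNECT: VN=4, CD=1, DSTPORT, DSTIP, USERID, then a NUL byte."""
    addr = ipaddress.IPv4Address(ip)  # SOCKS4 can only carry a numeric IPv4 address
    header = struct.pack(">BBH", 4, 1, port)  # version, command, big-endian port
    return header + addr.packed + user_id.encode("ascii") + b"\x00"


def socks4_granted(reply: bytes) -> bool:
    """The 8-byte reply starts with VN=0; CD=90 means granted, 91-93 mean rejected."""
    return len(reply) == 8 and reply[0] == 0 and reply[1] == 90
```

For example, a request to 203.0.113.10:80 with an empty user ID is nine bytes long: `04 01 00 50 cb 00 71 0a 00`. None of those bytes carries a credential.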

SOCKS5, on the other hand, supports both TCP and UDP (User Datagram Protocol), so it can handle a wider range of internet traffic. It also supports authentication methods such as username and password, which lets operators control who can connect. This makes SOCKS5 the safer choice for users who need to keep their proxy access private, although the protocol itself does not encrypt the traffic it relays.
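After a SOCKS5 server selects method 0x02, the client sends its credentials using the sub-negotiation defined in RFC 1929. The minimal sketch below assumes UTF-8 encoding for the credentials, since the RFC only specifies byte lengths. The helper names are hypothetical. Note that the username and password travel in cleartext.

```python
def build_userpass_request(username: str, password: str) -> bytes:
    """RFC 1929 request: VER=1, ULEN, UNAME, PLEN, PASSWD."""
    user = username.encode("utf-8")  # assumption: UTF-8; the RFC only fixes lengths
    pwd = password.encode("utf-8")
    if not (1 <= len(user) <= 255 and 1 <= len(pwd) <= 255):
        raise ValueError("username and password must each be 1-255 bytes")
    return bytes([0x01, len(user)]) + user + bytes([len(pwd)]) + pwd


def userpass_accepted(reply: bytes) -> bool:
    """Server reply: VER=1 and STATUS, where 0x00 means success."""
    return len(reply) == 2 and reply[0] == 0x01 and reply[1] == 0x00
```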

SOCKS5 also supports IPv6, which makes it more future-proof as the internet continues to evolve. Clients can connect to IPv4 addresses, IPv6 addresses, or domain names (which the proxy resolves), and this improves compatibility with modern web applications. These improvements make SOCKS5 a popular choice for demanding applications such as data extraction and file sharing, where speed and security are paramount.
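IPv6 and hostname support come from the address-type (ATYP) byte in the SOCKS5 request. The following sketch encodes a CONNECT request for an IPv4 address, an IPv6 address, or a domain name, following the request and addressing formats in RFC 1928. The helper names are hypothetical.

```python
import ipaddress
import struct


def encode_address(host: str) -> bytes:
    """Return ATYP followed by DST.ADDR for an IPv4, IPv6, or domain-name host."""
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        name = host.encode("idna")
        if not 1 <= len(name) <= 255:
            raise ValueError("domain name must be 1-255 bytes")
        # ATYP 3: length-prefixed name, resolved by the proxy rather than the client
        return b"\x03" + bytes([len(name)]) + name
    if ip.version == 4:
        return b"\x01" + ip.packed  # ATYP 1: 4-byte IPv4 address
    return b"\x04" + ip.packed  # ATYP 4: 16-byte IPv6 address


def build_socks5_connect(host: str, port: int) -> bytes:
    """VER=5, CMD=1 (CONNECT), RSV=0, then the address and a big-endian port."""
    return b"\x05\x01\x00" + encode_address(host) + struct.pack(">H", port)
```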

Proxy Scraping Strategies

Proxy scraping is a key step for anyone who needs reliable proxies for purposes such as web scraping and automation. The most common method is to use dedicated proxy scraper software that collects proxy addresses from sources across the internet. These tools often let users filter by speed, anonymity level, and proxy type, which makes it easier to find proxies that fit specific requirements. A free proxy scraper, for example, can sometimes return thousands of proxies. Users should still be careful, because the reliability and speed of these proxies vary widely.
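If you write your own scraper, the core step is pulling `ip:port` pairs out of downloaded pages or text lists. The sketch below assumes the source lists plain IPv4 proxies in that form. It validates each address and port and removes duplicates while keeping the original order. The pattern and function name are illustrative.

```python
from __future__ import annotations

import ipaddress
import re

# Matches "a.b.c.d:port"; octet and port ranges are validated afterwards.
PROXY_PATTERN = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):(\d{1,5})\b")


def extract_proxies(text: str) -> list[tuple[str, int]]:
    """Return unique, valid (ip, port) pairs in the order they first appear."""
    seen: set[tuple[str, int]] = set()
    found: list[tuple[str, int]] = []
    for ip, port_text in PROXY_PATTERN.findall(text):
        port = int(port_text)
        try:
            ipaddress.IPv4Address(ip)
        except ValueError:
            continue  # e.g. "999.1.1.1" is not a real address
        if not 1 <= port <= 65535 or (ip, port) in seen:
            continue
        seen.add((ip, port))
        found.append((ip, port))
    return found
```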

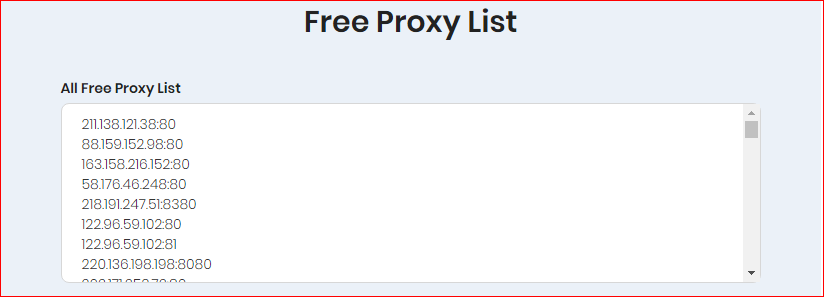

When scraping proxies, focus on sources that consistently provide high-quality lists. Websites that publish free or premium proxy lists can be good starting points, especially if they include user reviews or ratings. Fast proxy scrapers can streamline the process by filtering out slow or unreliable proxies, so you can focus on the best ones available. An online proxy list generator can also simplify building lists tailored to specific needs.

Another important part of proxy scraping is validation. Once you have collected proxies, test them with a reputable proxy checker to confirm that they work as expected. This means checking their speed, their anonymity level, and whether they are alive or dead. Tools that provide detailed validation, such as a SOCKS proxy checker or a general proxy verification tool, can help identify the best-performing proxies for scraping tasks. They can also distinguish between public and private proxies based on how well they perform.
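A minimal liveness check for a SOCKS5 proxy opens a TCP connection and tests whether the server completes the method-selection handshake from RFC 1928. The sketch below assumes the proxy allows unauthenticated access. It only proves that the server speaks SOCKS5. A fuller checker would also request a connection to a known target and verify the response. The function names are hypothetical.

```python
import socket


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read up to n bytes, stopping early only if the peer closes the connection."""
    data = b""
    while len(data) < n:
        chunk = sock.recv(n - len(data))
        if not chunk:
            break
        data += chunk
    return data


def handshake_ok(sock: socket.socket) -> bool:
    """Offer only 'no authentication' and check that the server accepts it."""
    sock.sendall(b"\x05\x01\x00")  # VER=5, NMETHODS=1, METHOD=0x00
    return recv_exact(sock, 2) == b"\x05\x00"


def socks5_alive(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if host:port answers as an open SOCKS5 proxy within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            return handshake_ok(sock)
    except OSError:  # refused, unreachable, reset, or timed out
        return False
```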

Verifying Proxy Performance

When you use proxies for web scraping or automation, verify their performance to make sure they meet your requirements for speed, reliability, and anonymity. A proxy's performance directly affects how efficiently your tasks run, so it is critical to test the proxies you use regularly. A reliable proxy checker lets you measure the response time and quality of your proxies and weed out those that perform poorly.

To check proxy speed, you can use web-based tools or write your own script in a language such as Python or JavaScript. These tools measure each proxy's response time and help you identify which proxies perform best for your particular needs. Measuring throughput also tells you how much data a proxy can transfer, which matters for heavy scraping tasks. Fast proxy scrapers and checkers are especially useful here, because they can test many proxies at once.
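Checking many proxies at once mostly comes down to running the checks concurrently and timing each one. In the sketch below, `check` is a function you supply (hypothetical here) that returns `True` when a proxy works. It could, for example, perform a SOCKS handshake or fetch a test page. The 2,000 ms cut-off and the 50 worker threads are arbitrary defaults.

```python
from __future__ import annotations

import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable


def time_check(proxy: str, check: Callable[[str], bool]) -> tuple[str, float | None]:
    """Run check(proxy); return elapsed milliseconds, or None if the check failed."""
    start = time.perf_counter()
    try:
        ok = bool(check(proxy))
    except Exception:  # any error counts as a failed proxy
        ok = False
    elapsed_ms = (time.perf_counter() - start) * 1000
    return proxy, (elapsed_ms if ok else None)


def rank_proxies(proxies: Iterable[str], check: Callable[[str], bool],
                 max_ms: float = 2000.0, workers: int = 50) -> list[tuple[str, float]]:
    """Check proxies concurrently; keep working ones within max_ms, fastest first."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda p: time_check(p, check), proxies))
    working = [(p, ms) for p, ms in results if ms is not None and ms <= max_ms]
    return sorted(working, key=lambda item: item[1])
```

Threads suit this job because each check spends most of its time waiting on the network. The cut-off is applied only after each check finishes, so `check` should enforce its own network timeout.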

Anonymity is another aspect of performance to consider. Testing how anonymous each proxy is ensures that your scraping does not expose your real IP address to the target sites. A SOCKS proxy checker can determine whether a proxy reliably masks your identity. Understanding the differences between SOCKS4 and SOCKS5 helps you choose the right option for your use case and get the most out of your proxies.
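Anonymity is usually judged by requesting a page you control through the proxy and comparing what that page sees with your real connection. The sketch below assumes you already have three inputs: your real IP, the IP the test page observed, and the request headers it received. The three labels (transparent, anonymous, elite) are a convention used by many proxy checkers, not a formal standard. SOCKS proxies relay bytes without rewriting HTTP headers, so for them the IP comparison is what matters. The function name is hypothetical.

```python
from __future__ import annotations

import re

# Headers that HTTP proxies commonly add; they reveal proxy use and sometimes your IP.
REVEALING_HEADERS = ("via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection")


def classify_anonymity(real_ip: str, seen_ip: str, seen_headers: dict[str, str]) -> str:
    """Label a proxy as 'transparent', 'anonymous', or 'elite'."""
    headers = {name.lower(): value for name, value in seen_headers.items()}
    # Split header values into tokens so "198.51.100.1" does not match "198.51.100.10".
    tokens = {tok for value in headers.values() for tok in re.split(r'[\s,;="]+', value)}
    if seen_ip == real_ip or real_ip in tokens:
        return "transparent"  # the target can see your real address
    if any(name in headers for name in REVEALING_HEADERS):
        return "anonymous"  # your IP is hidden, but the request is marked as proxied
    return "elite"  # no trace of your IP or of the proxy
```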

Choosing the Right Proxy Provider

When choosing a proxy provider, evaluate the quality and trustworthiness of the proxies on offer. Premium proxies generally deliver faster speeds, better anonymity, and a lower risk of bans while scraping. It's wise to look for providers that specialize in dedicated and semi-dedicated proxies, because these generally perform better for demanding tasks such as web scraping and data extraction.

Another important factor is the type of proxies available. A particular project might call for HTTP, SOCKS4, or SOCKS5 proxies, since each type has different capabilities. SOCKS5 proxies, for example, are often preferred for their authentication support and their ability to relay any TCP or UDP traffic. HTTP proxies, by contrast, may be enough for simpler tasks. Make sure the provider you choose offers the type that matches your needs and use cases.

Lastly, consider the cost and availability of each proxy source. There are both free and paid options, and each has its pros and cons. Free proxies may seem appealing, but they often come with drawbacks such as slower speeds, more frequent downtime, and poor reliability. Paid proxies, in contrast, usually offer better performance and customer support, which makes them a worthwhile investment for serious web scraping and automation work.

Automating with Proxy Servers

Proxies play a crucial role in automating many web-based tasks by letting users route their traffic through different IP addresses. This helps preserve anonymity and bypass restrictions imposed by websites. Automated tools can send requests through proxies to gather data from many sources quickly, which makes proxies an integral part of web scraping, data extraction, and SEO work. By building proxies into these automated workflows, users can spread requests across many IP addresses and work more efficiently.
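Round-robin rotation is a simple way to spread requests across IP addresses. The sketch below uses a hypothetical `ProxyRotator` class that cycles through a fixed list. A lock lets several worker threads share one rotator safely.

```python
import threading
from itertools import cycle


class ProxyRotator:
    """Return proxies in a fixed round-robin order, safe to share between threads."""

    def __init__(self, proxies):
        proxies = list(proxies)
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = cycle(proxies)
        # Advancing the shared iterator under a lock avoids relying on
        # undocumented thread-safety guarantees of itertools.cycle.
        self._lock = threading.Lock()

    def get(self) -> str:
        with self._lock:
            return next(self._cycle)
```

Each worker calls `rotator.get()` before sending a request. With the list `["a", "b", "c"]`, five calls return `a`, `b`, `c`, `a`, `b`.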

For automation, it's important to choose the right type of proxy for the task. SOCKS4 and SOCKS5 proxies are popular choices because they can relay many different protocols. SOCKS5 in particular supports authentication and handles both TCP and UDP traffic, which makes it suitable for more advanced automation. A fast proxy checker helps ensure that the proxies in use are reliable and can handle the workload without slowing down the automation.
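Many HTTP clients accept proxies as URLs whose scheme names the proxy type. For instance, the Python `requests` library, when installed with its SOCKS extra (`requests[socks]`), accepts `socks4://`, `socks5://`, and `socks5h://` URLs. The `socks5h` scheme asks the proxy to resolve hostnames. The hypothetical helper below builds such URLs. It percent-encodes credentials and brackets IPv6 addresses.

```python
from __future__ import annotations

from urllib.parse import quote

# Scheme per proxy type; "socks5h" makes the proxy resolve hostnames (no local DNS lookup).
SCHEMES = {"http": "http", "socks4": "socks4", "socks5": "socks5h"}


def proxy_url(kind: str, host: str, port: int,
              username: str | None = None, password: str | None = None) -> str:
    """Build a proxy URL such as socks5h://user:pass@203.0.113.10:1080."""
    scheme = SCHEMES[kind]  # KeyError for unsupported proxy types
    if ":" in host:
        host = f"[{host}]"  # IPv6 literals must be bracketed inside URLs
    auth = ""
    if username is not None:
        if kind == "socks4":
            raise ValueError("SOCKS4 has no password authentication")
        # Percent-encode so characters like '@' or ':' cannot break the URL.
        auth = f"{quote(username, safe='')}:{quote(password or '', safe='')}@"
    return f"{scheme}://{auth}{host}:{port}"
```

With `requests`, the resulting URL would typically be passed as `proxies={"http": url, "https": url}`.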

Finally, a robust proxy management system is vital for effective automation. Tools like ProxyStorm can help manage proxy lists, check proxy speed, and make sure only reliable proxies are in use at any time. Knowing how to test proxy anonymity also matters, since automated processes often need to avoid detection and blocking by target websites. By understanding and applying best practices for using proxies in automation, users can significantly improve their web scraping and data extraction strategies.
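At the core of such a system is a pool that retires proxies once they start failing. The sketch below uses a hypothetical `ProxyPool` class. It drops a proxy after three consecutive failures, an arbitrary assumption you would tune for your workload.

```python
from __future__ import annotations

import threading


class ProxyPool:
    """Round-robin pool that drops a proxy after too many consecutive failures."""

    def __init__(self, proxies: list[str], max_failures: int = 3) -> None:
        self._failures = {p: 0 for p in proxies}  # consecutive failures per proxy
        self._order = list(self._failures)  # de-duplicated, original order kept
        self._next = 0
        self._max_failures = max_failures
        self._lock = threading.Lock()

    def get(self) -> str | None:
        """Return the next proxy to use, or None once every proxy has been dropped."""
        with self._lock:
            if not self._order:
                return None
            proxy = self._order[self._next % len(self._order)]
            self._next += 1
            return proxy

    def report(self, proxy: str, ok: bool) -> None:
        """Record the outcome of a request made through `proxy`."""
        with self._lock:
            if proxy not in self._failures:
                return  # already dropped
            if ok:
                self._failures[proxy] = 0  # a success resets the failure streak
                return
            self._failures[proxy] += 1
            if self._failures[proxy] >= self._max_failures:
                del self._failures[proxy]
                self._order.remove(proxy)

    def __len__(self) -> int:
        with self._lock:
            return len(self._order)
```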

Common Proxy Tools for Data Extraction

When extracting data, having the right tools is vital for efficient proxy management. Proxy scrapers let users collect lists of proxies from many sources. Many tools specialize in this job; some offer free tiers, while others are premium paid services. Free proxy scrapers can be useful for beginners or for anyone who wants to try out features without spending money. For more reliable and faster results, however, a paid proxy scraping tool may provide higher-quality proxies.

Once you have a collection of proxies, it is essential to check that they work and how well they perform. Proxy checkers do exactly this: they show whether proxies are alive, how fast they are, and what level of anonymity they offer. Some of the best proxy checkers can even detect the proxy type (HTTP, SOCKS4, or SOCKS5) and assess how well each proxy suits different scraping tasks. Tools like ProxyStorm can help streamline this process, making it easier to manage many proxies at once.
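Type detection can be sketched by sending each protocol's opening message on a fresh connection and checking the shape of the reply. This sketch rests on two assumptions. The first is the probe order: SOCKS5, then SOCKS4, then HTTP CONNECT. The second is the placeholder destination 203.0.113.10:80, which comes from a documentation-only address range. A SOCKS4 rejection reply still identifies the protocol. A server that speaks several protocols is reported as the first one that answers. `tcp_transport` is a hypothetical helper that opens the connections.

```python
import socket
from typing import Callable, Optional

# Sends one probe on a fresh connection and returns the start of the reply.
Transport = Callable[[bytes], bytes]

# SOCKS5 greeting: VER=5, one method, 0x00 (no authentication).
SOCKS5_PROBE = b"\x05\x01\x00"
# SOCKS4 CONNECT to the placeholder 203.0.113.10:80 with an empty user ID.
SOCKS4_PROBE = b"\x04\x01\x00\x50\xcb\x00\x71\x0a\x00"
# HTTP CONNECT request for a placeholder host.
HTTP_PROBE = b"CONNECT example.com:443 HTTP/1.1\r\nHost: example.com:443\r\n\r\n"


def looks_socks5(reply: bytes) -> bool:
    return len(reply) >= 2 and reply[0] == 0x05


def looks_socks4(reply: bytes) -> bool:
    # Byte 0 is 0; byte 1 is 90 (granted) or 91-93 (rejected).
    return len(reply) >= 2 and reply[0] == 0x00 and 90 <= reply[1] <= 93


def looks_http(reply: bytes) -> bool:
    return reply.startswith(b"HTTP/")


PROBES = [
    ("socks5", SOCKS5_PROBE, looks_socks5),
    ("socks4", SOCKS4_PROBE, looks_socks4),
    ("http", HTTP_PROBE, looks_http),
]


def detect_proxy_type(transport: Transport) -> Optional[str]:
    """Return the first protocol whose probe gets a well-formed reply, else None."""
    for name, probe, matches in PROBES:
        try:
            reply = transport(probe)
        except OSError:  # refused, reset, or timed out: try the next protocol
            continue
        if matches(reply):
            return name
    return None


def tcp_transport(host: str, port: int, timeout: float = 5.0) -> Transport:
    """Build a transport that opens a new TCP connection for every probe."""
    def send(probe: bytes) -> bytes:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(probe)
            return sock.recv(64)
    return send
```

A call such as `detect_proxy_type(tcp_transport("203.0.113.20", 1080))` returns `"socks5"`, `"socks4"`, `"http"`, or `None`.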

Users should also think about where they source their proxies. Reliable proxy sources can significantly affect how well data extraction goes. Premium proxy lists, whether compiled manually or generated with online tools, can make a real difference in accessing blocked content and staying anonymous. Combining good scraping practices with the best available tools makes for a smooth process, whether you use free or paid proxies for automation and data gathering.