Dive into Data Extraction: Using Proxy Servers Effectively

In this age of big data, the ability to collect and process information quickly is essential. As businesses and individuals increasingly rely on web scraping for market research, understanding the role of proxies becomes necessary. Proxies let users browse without exposing their own IP address and reach content that would otherwise be restricted by geography or per-IP rate limits. However, with so many proxy options available, knowing how to select and use them wisely can make all the difference to the effectiveness of your data extraction efforts.

This piece covers the details of proxy usage, from acquiring free proxies to using proxy checkers for verification. We will look at tools for scraping and managing proxies, including features such as latency measurement and anonymity tests. We will also outline the distinction between the main types of proxies, such as HTTP, SOCKS4, and SOCKS5, as well as the differences between shared and dedicated proxies. By the end of this resource, you will have the information you need to use proxies so that your data gathering is both efficient and dependable.

Understanding Proxy Servers: Types and Uses

Proxy servers act as intermediaries between a user's device and the target server, and what they can do depends on their type. One common type is the HTTP proxy, which is designed for web traffic and can support features such as content filtering and caching. These proxies are commonly used for web scraping and private browsing. SOCKS proxies, on the other hand, are more flexible: they relay traffic without interpreting it, so they work for applications beyond web browsing. SOCKS4 relays TCP connections only, while SOCKS5 can also relay UDP.

The choice between proxy types also depends on the level of anonymity needed. HTTP proxies may offer limited anonymity, because some of them pass the source IP address to the target in request headers. SOCKS4 and SOCKS5 proxies do not modify application traffic, so they do not add such headers. SOCKS5, in particular, supports authentication and UDP, making it a common choice for use cases that need both anonymity and performance, such as online gaming or streaming platforms.

When using proxy servers, understanding their specific use cases is critical for getting the desired results. For instance, web scraping projects usually benefit from fast proxies that can get past IP-based blocks and keep access to target websites consistent. Automated tasks, in turn, often demand reliable proxy providers that can handle many requests without compromising speed or data integrity. Selecting the right kind of proxy for these needs can greatly improve the efficiency of a data extraction project.
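As a rough illustration of how the proxy type shows up in practice, the sketch below builds the kind of `proxies` mapping that the Python `requests` library accepts. The helper name `build_proxies`, the scheme whitelist, and the example addresses are assumptions made for this example. SOCKS schemes only work with `requests` if its optional SOCKS support (`requests[socks]`) is installed.

```python
from urllib.parse import quote

# Schemes this sketch accepts. "socks5h" asks the proxy to resolve DNS names,
# so hostname lookups do not leave the client machine.
SUPPORTED_SCHEMES = {"http", "https", "socks4", "socks5", "socks5h"}


def build_proxies(scheme, host, port, username=None, password=None):
    """Return a mapping that routes both HTTP and HTTPS requests via one proxy."""
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {scheme!r}")
    auth = ""
    if username:
        # Percent-encode credentials so characters like "@" or ":" stay unambiguous.
        auth = f"{quote(username, safe='')}:{quote(password or '', safe='')}@"
    url = f"{scheme}://{auth}{host}:{port}"
    return {"http": url, "https": url}


# Hypothetical usage (addresses come from documentation-only IP ranges):
#   import requests
#   requests.get("https://example.com", timeout=10,
#                proxies=build_proxies("socks5h", "203.0.113.10", 1080))
```

Keeping the scheme as an explicit argument makes it easy to switch one job between an HTTP proxy and a SOCKS5 proxy without touching the request code.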

Proxy Harvesting: Tools and Techniques

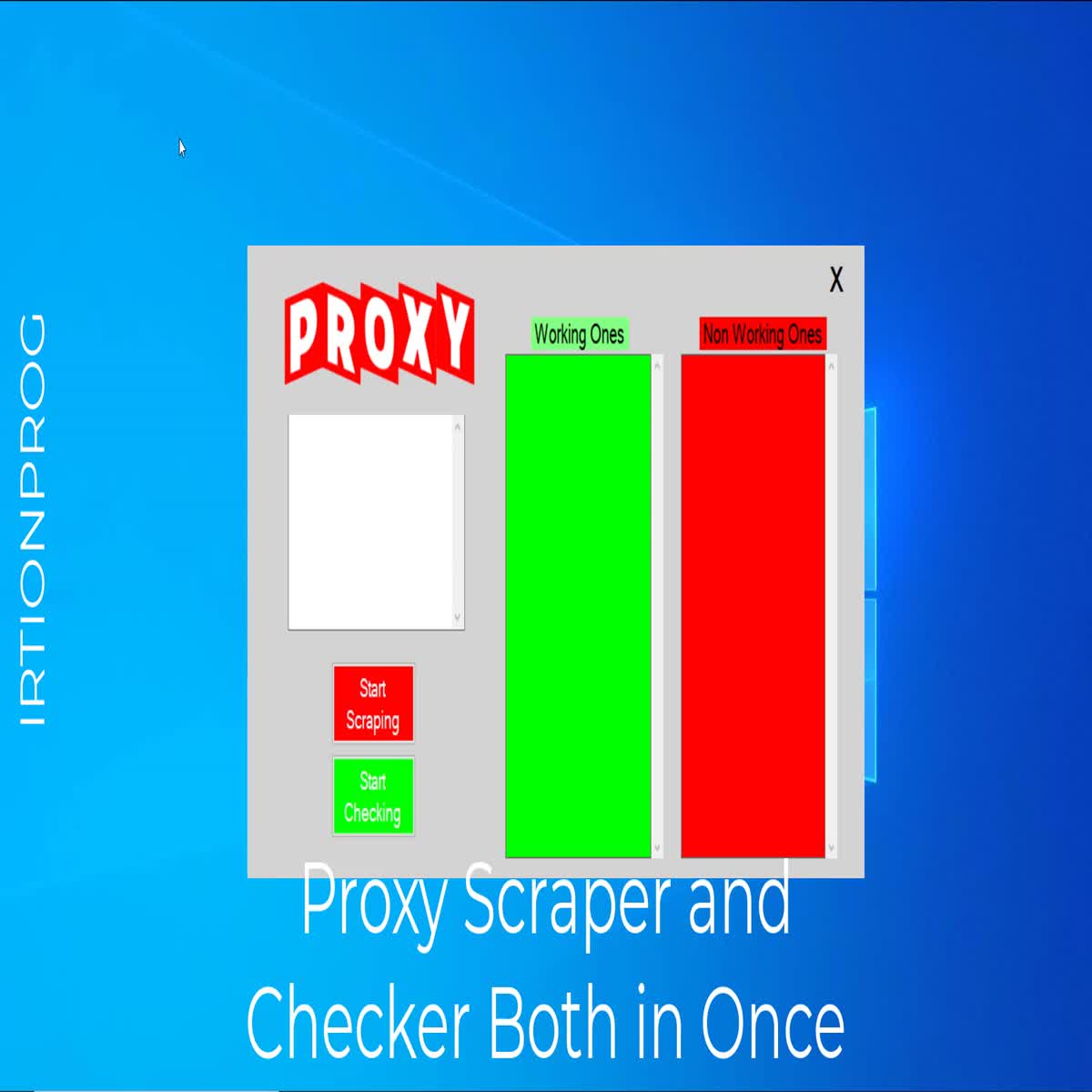

When getting started with proxy scraping, choosing the right tools is essential for efficient data harvesting. Proxy scrapers collect lists of proxies, and several options cover different needs. Free proxy scrapers are a reasonable starting point for beginners, while fast proxy scrapers help users work without noticeable delays. Applications like ProxyStorm offer a simplified way to gather proxies and verify that they work, making them useful for web scraping projects.

Once proxies are acquired, confirming their performance is equally important. Good proxy checkers run thorough tests to verify that proxies work as expected. These tools commonly assess parameters like speed and anonymity, helping users avoid slow or flaky proxies. Some checkers, such as dedicated SOCKS proxy checkers, target a specific protocol and suit scraping scenarios that depend on it.

To get the most out of proxies, it helps to understand how the types differ. HTTP, SOCKS4, and SOCKS5 proxies serve different purposes in web scraping. HTTP proxies are commonly used for simple tasks, while SOCKS proxies provide more flexibility for complex automation. By using the right tools to scrape proxies and understanding their characteristics, you can greatly improve your data extraction efforts.
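The core of a proxy scraper is pulling `ip:port` pairs out of whatever text a source provides. The sketch below is a minimal, assumed implementation of that step using only the standard library. The function name `extract_proxies` and the sample text are illustrative and are not taken from any particular tool.

```python
import ipaddress
import re

# Candidate pattern: four dot-separated groups of digits, a colon, and a port.
_CANDIDATE = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):(\d{1,5})\b")


def extract_proxies(text):
    """Return unique, syntactically valid "ip:port" strings in order of appearance."""
    seen = set()
    found = []
    for host, port_text in _CANDIDATE.findall(text):
        try:
            ipaddress.IPv4Address(host)  # rejects octets above 255
        except ValueError:
            continue
        port = int(port_text)
        if not 1 <= port <= 65535:
            continue
        entry = f"{host}:{port}"
        if entry not in seen:
            seen.add(entry)
            found.append(entry)
    return found


sample = "203.0.113.5:8080 junk 999.1.1.1:80 198.51.100.7:3128, 203.0.113.5:8080"
print(extract_proxies(sample))
```

This only validates the format. Whether a harvested address is actually a working proxy still has to be checked separately.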

Paid versus Free Proxies: Which to Opt For

When considering proxies for data extraction and web scraping, one of the primary decisions is whether to use free or paid proxies. Free proxies are readily available and cost nothing, making them an appealing option for occasional users or those new to the field. However, they frequently come with limitations such as lower speeds, more downtime, and less reliability. Free proxies are also often shared among many users, which can hurt both speed and anonymity and reduce the effectiveness of your web scraping.

Paid proxies, by contrast, are typically more reliable and perform better. They often come with dedicated IP addresses, which improve both speed and anonymity. This reliability is crucial for businesses or users who depend on data extraction to operate. Paid proxy services usually offer additional features such as geographic targeting, stronger security, and customer support, making them a suitable choice for focused data extraction tasks and automation.

In the end, the decision between free and paid proxies depends on your specific needs and usage scenario. If you are doing casual browsing or low-stakes scraping, free proxies might be sufficient. For high-volume web scraping, automation, or tasks that require reliable operation and security, investing in a quality paid proxy service is usually the wiser choice.

Testing and Verifying Proxies

When using proxies, testing and verification are essential to confirm that they work properly and meet your requirements. A solid proxy verification tool can save you time by showing which proxies in your list are operational and which are down. Tools such as Proxy Checker let you assess many proxies at the same time, checking their latency, anonymity level, and protocol. This keeps your web scraping tasks from being held up by unresponsive or low-quality proxies.

Another crucial aspect is measuring proxy performance. High-speed proxies matter for efficient data extraction, especially when scraping websites that apply rate limits or other defenses against excessive requests. Tools that measure proxy speed can help you find the proxies that deliver fast and reliable connections. Understanding the difference between HTTP, SOCKS4, and SOCKS5 proxies also helps you select proxies based on the particular needs of your scraping project.

Finally, testing proxy anonymity is vital for maintaining confidentiality and avoiding detection. Anonymity levels vary between proxies, and using a tool to determine whether a proxy is transparent, anonymous, or elite will show you how much protection you have. This is particularly important when scraping competitive data or confidential information, where being detected can lead to blocking or legal issues. Thorough proxy testing and validation help your data extraction tasks perform well.
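Checking many proxies at once is mostly a matter of running the same probe concurrently and sorting the results. The following is a minimal sketch under stated assumptions: `check_proxies`, `http_probe`, and the test URL `http://example.com/` are names chosen for this example, and the default probe only covers plain HTTP proxies through the standard library's `urllib.request.ProxyHandler`.

```python
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def http_probe(proxy, test_url="http://example.com/", timeout=5.0):
    """Return round-trip time in seconds through an HTTP proxy; raise on failure."""
    handler = urllib.request.ProxyHandler(
        {"http": f"http://{proxy}", "https": f"http://{proxy}"}
    )
    opener = urllib.request.build_opener(handler)
    start = time.perf_counter()
    with opener.open(test_url, timeout=timeout) as response:
        response.read(1)  # reading one byte is enough to confirm a response
    return time.perf_counter() - start


def check_proxies(proxies, probe=http_probe, max_workers=20):
    """Probe proxies in parallel; return (alive sorted by latency, dead)."""

    def run(proxy):
        try:
            return proxy, probe(proxy)
        except Exception:
            # Any failure (timeout, refused connection, bad reply) marks it dead.
            return proxy, None

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(run, proxies))
    alive = sorted(
        ((p, latency) for p, latency in results if latency is not None),
        key=lambda item: item[1],
    )
    dead = [p for p, latency in results if latency is None]
    return alive, dead
```

Passing the probe in as a parameter keeps the concurrency logic independent of the protocol, so a SOCKS-specific probe could be swapped in without changing `check_proxies`.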
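One common convention for grading anonymity is to send a request through the proxy to an endpoint that echoes back the headers it received, then inspect those headers. The sketch below assumes that setup. The function `classify_anonymity`, the header list, and the three labels follow widespread usage rather than any formal standard, and the echo endpoint itself is not shown.

```python
import re

# Headers that proxies commonly add; seeing any of them suggests a proxy is in use.
PROXY_HEADERS = ("via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection")


def classify_anonymity(real_ip, seen_headers):
    """Grade a proxy from the headers the target saw.

    transparent: the client's real IP leaked in some header value
    anonymous:   proxy headers are present, but the real IP is not
    elite:       no proxy-related headers at all
    """
    normalized = {name.lower(): str(value) for name, value in seen_headers.items()}
    for value in normalized.values():
        # Split on separators so "1.2.3.4" does not falsely match "11.2.3.45".
        if real_ip in re.split(r'[\s,;="]+', value):
            return "transparent"
    if any(name in normalized for name in PROXY_HEADERS):
        return "anonymous"
    return "elite"


print(classify_anonymity("203.0.113.9", {"Via": "1.1 squid"}))
```

A target site can use other signals than headers, so an "elite" result here only means the proxy did not announce itself in the request.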

Proxy Management for Web Scraping

Effective proxy management is vital for efficient web scraping. It helps keep your scraping activities inconspicuous and productive. By using a proxy scraper, you can obtain a diverse set of proxies to distribute your requests across. Spreading requests over multiple IP addresses not only reduces the chance of being blocked but also speeds up data extraction. A well-managed proxy list lets you rotate proxies frequently, which is important when scraping websites that monitor and restrict per-IP usage.

In addition to a proxy scraper, you should use a reliable proxy checker to validate the health and performance of your proxies. This tool can test speed, anonymity level, and reliability, confirming that the proxies in use are suitable for your scraping tasks. With the right proxy verification tool, you can filter out slow or poor-quality proxies and keep your web scraping process efficient. Regularly testing and updating your proxy list helps keep your operations running without interruption.

When selecting proxies for web scraping, take into account the differences between private and public proxies. Private proxies offer better speed and security, making them a good choice for demanding scraping jobs, while public proxies are generally slower and less reliable but can be used for small, less intensive tasks. Knowing how to find high-quality proxies and manage them effectively will make a significant difference in the quality and quantity of data you can extract.
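Rotation can be as simple as cycling through the list and dropping proxies that keep failing. The class below is a minimal sketch of that idea. `ProxyRotator`, its method names, and the `max_failures` threshold are assumptions for illustration, not the design of any specific tool.

```python
from collections import deque


class ProxyRotator:
    """Round-robin rotation that evicts proxies after repeated failures."""

    def __init__(self, proxies, max_failures=3):
        # dict.fromkeys removes duplicates while preserving the original order.
        self._pool = deque(dict.fromkeys(proxies))
        self._failures = {}
        self._max_failures = max_failures

    def next(self):
        """Return the next proxy and move it to the back of the queue."""
        if not self._pool:
            raise LookupError("no healthy proxies left")
        proxy = self._pool[0]
        self._pool.rotate(-1)
        return proxy

    def report_failure(self, proxy):
        """Count a failure; evict the proxy once it reaches the threshold."""
        count = self._failures.get(proxy, 0) + 1
        self._failures[proxy] = count
        if count >= self._max_failures and proxy in self._pool:
            self._pool.remove(proxy)

    def report_success(self, proxy):
        """Reset the failure count after a successful request."""
        self._failures.pop(proxy, None)

    def __len__(self):
        return len(self._pool)
```

A scraping loop would call `next()` before each request and report the outcome afterwards, so the pool shrinks to the proxies that still work.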

Best Practices for Using Proxies

When using proxies for data extraction, it is essential to choose a dependable proxy source. Free proxies may seem appealing, but they often come with issues such as slow speeds, frequent downtime, and possible security vulnerabilities. A paid proxy service can offer more reliability, higher-quality proxies, and better anonymity. Look for services that offer both HTTP and SOCKS proxies and have a good reputation among web scraping communities.

Regularly testing and validating your proxies is vital to keeping them effective. Use a reliable proxy checker to assess the speed, reliability, and anonymity of your proxies. This way, you can identify which proxies are performing well and remove those that do not meet your standards. Running speed tests and verifying geographic location can also help you tailor your proxy usage to your specific scraping needs.

Finally, understand the types of proxies that exist and their respective uses. HTTP, SOCKS4, and SOCKS5 proxies serve different purposes, and knowing the differences is critical for successful web scraping. For example, while SOCKS5 proxies support a wider range of protocols and offer more flexibility, they may not be necessary for every task. Knowing your specific requirements will help you get the most from your proxies and keep your data extraction productive.

Automation and Proxy Usage: Boosting Productivity

In today's fast-paced online landscape, smooth automation is essential to data extraction. Proxies play a key role by letting users send many requests simultaneously without raising red flags. With a trustworthy proxy scraper, you can obtain a wide range of IP addresses to spread your web scraping tasks across, significantly reducing the chance of being blocked by target websites. This approach not only speeds up data gathering but also helps your scraping activities stay under the radar.

Adopting a solid proxy verification tool is crucial to the effectiveness of your automation efforts. A good proxy checker lets you filter out unusable proxies quickly, so that only reliable IPs are in your rotation. The verification process should include checking proxy speed, anonymity level, and response times. By frequently testing your proxies and discarding low-performing ones, you can get quicker and more reliable results from your scraping tasks.

To boost efficiency even more, consider integrating SEO tools with proxy support into your automation workflows. This can extend your data extraction capabilities and provide information that is essential for competitive analysis. Tools that scrape proxies for free can be useful as budget-friendly solutions, while private proxies may yield better performance. Balancing private and public proxies and continuously monitoring their effectiveness will help your automation succeed and improve the quality and speed of your data extraction.