DSR technology paper

Representation forms of knowledge

- The multiple uses of Adjacency Matrices

- The environment Γ: Cayley Graphs

- Graphs in programming

- Wolfgang Stegmüller / Gotthard Günther - philo papers

- The irrational implications of the word 'is'

- Encoding facts: RDF - "Semantic Triples"

- Summary of the dimensionality of language

- Traversal of Knowledge Graphs

- The important Kleene Star operator

The multiple uses of Adjacency Matrices

The Applications of Matrices | What I wish my teachers told me way earlier

https://youtube.com/watch?v=rowWM-MijXU&t=15m42s

The environment Γ: Cayley Graphs

With Cayley graphs I design my environment Γ, the world of what can happen:

Common overview: https://duckduckgo.com/?q=cayley+graphs&iax=images

Rubik's Cube: https://en.wikipedia.org/wiki/Optimal_solutions_for_the_Rubik's_Cube

Here somebody found "symmetry groups" within the Rubik's Cayley graph and could prove that 22 moves suffice to solve any Rubik's Cube position:

https://www.researchgate.net/publication/226585368_Twenty-Two_Moves_Suffice_for_Rubik's_CubeR

"Symmetry groups" are mental points (called "nodes" in graphs) of equal state. The "edges" (connecting lines) represent the transformations that lead from one state to another. For example, robot arms with 2, 3, 4 or 5 axes differ completely in what they can do.

https://duckduckgo.com/?q=cayley+graph+axis+robot+arm&iar=images

Here I can find, for example, the "shortest path" for a robot to move from one position to another. God's algorithm for solving the Rubik's Cube: https://yetanothermathblog.com/permutation-puzzles/lecture-notes-on-the-rubiks-cube/rubiks-cube-notes-cayley-graphs-and-gods-algorithm/
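A minimal sketch of such a shortest-path search on a very small Cayley graph. It assumes the symmetric group S3 with two generators named "s" and "r"; the group, the generator names and the function names are illustrative assumptions, not taken from the linked notes. Nodes are states, edges are generator moves, and breadth-first search returns a shortest move sequence.

```python
from collections import deque

# Generators of the symmetric group S3, written as permutations of (0, 1, 2).
# These two generators are an illustrative assumption.
GENERATORS = {
    "s": (1, 0, 2),  # swap the first two positions
    "r": (1, 2, 0),  # rotate the positions cyclically
}

def apply(state, perm):
    """Rearrange `state` according to `perm` (one edge of the Cayley graph)."""
    return tuple(state[i] for i in perm)

def shortest_moves(start, goal):
    """Breadth-first search: a shortest generator sequence from start to goal."""
    queue = deque([(start, "")])
    seen = {start}
    while queue:
        state, path = queue.popleft()
        if state == goal:
            return path
        for name, perm in GENERATORS.items():
            nxt = apply(state, perm)
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + name))
    return None  # goal not reachable with these generators

print(shortest_moves((0, 1, 2), (2, 0, 1)))  # rr
```

In this tiny graph every state is at most 2 moves away from the start. The same idea, at a vastly larger scale and with symmetry reductions, is what lies behind the Rubik's Cube bound.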

The Nim game graph

https://duckduckgo.com/?q=nim+game+graph&iar=images

You can easily show that n%4+1 is an invariant.
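A minimal sketch to check such an invariant by brute force. It assumes the single-pile variant in which a move removes 1, 2 or 3 objects; this rule, the constant MAX_TAKE and the function names are assumptions, since the text does not fix the variant. The sketch covers both conventions (taking the last object wins, or taking it loses).

```python
from functools import lru_cache

MAX_TAKE = 3  # assumed rule: a move removes 1, 2 or 3 objects

@lru_cache(maxsize=None)
def wins(n, last_taker_wins=True):
    """True if the player to move can force a win with n objects left."""
    if n == 0:
        # No move left: the previous player took the last object.
        return not last_taker_wins
    return any(not wins(n - k, last_taker_wins)
               for k in range(1, min(MAX_TAKE, n) + 1))

def losing_positions(limit, last_taker_wins=True):
    """Pile sizes from 1 to limit at which the player to move loses."""
    return [n for n in range(1, limit + 1) if not wins(n, last_taker_wins)]

print(losing_positions(16))                         # [4, 8, 12, 16]
print(losing_positions(16, last_taker_wins=False))  # [1, 5, 9, 13]
```

Under these assumptions the losing positions are those with n % 4 == 0 when taking the last object wins, and those with n % 4 == 1 when taking the last object loses. The modulus 4 is one more than the largest allowed move.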

Graphs in programming

The so-called "syntax tree", in fact, is a "call graph":

The type dependencies ("type inference") also build a graph:

The math behind: https://rentry.co/DSRsecuritycoursepart13

https://github.com/milesbarr/hindley-milner-in-python

https://duckduckgo.com/?q=hindley+milner+type+dependency+graph&iax=images&ia=images

https://rentry.co/DSRsecuritycourse#the-secure-eiffel-programming-language-p-c-p

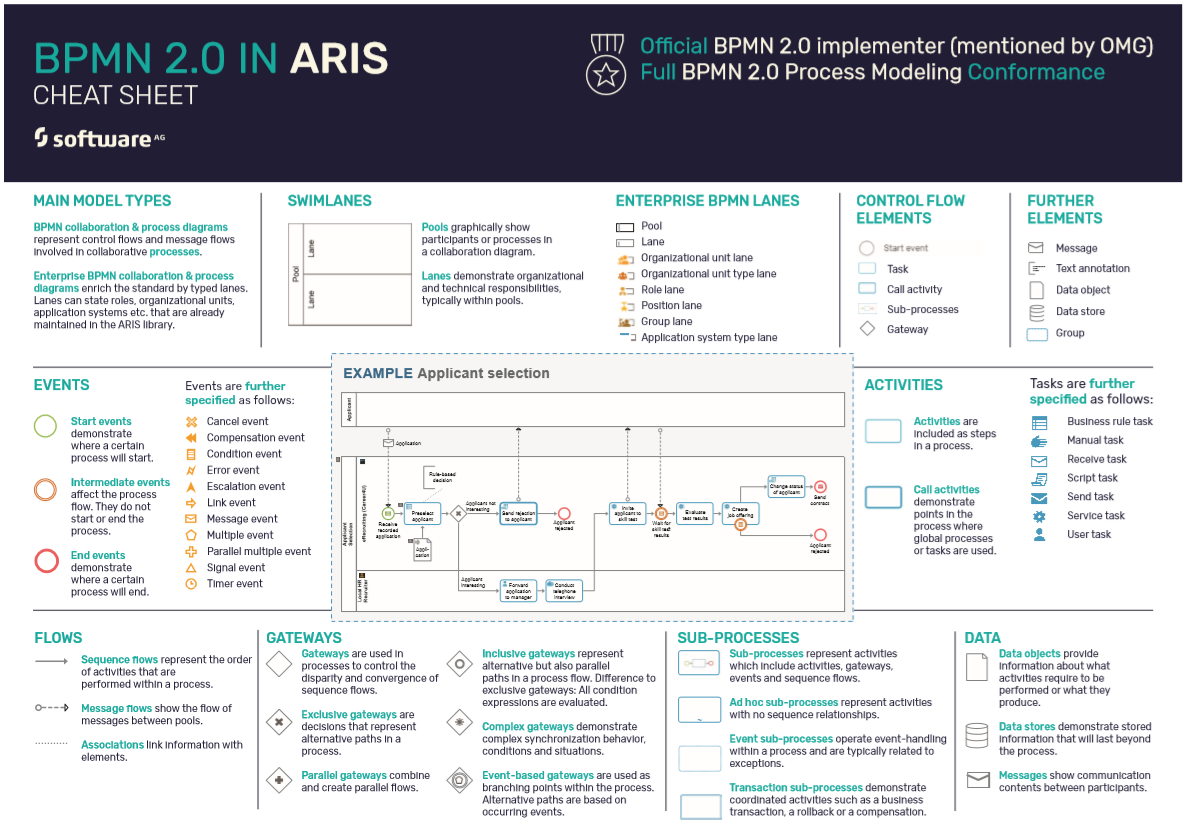

BPMN is a graph

Generate BPMN diagrams from Python:

https://bpmn-python.readthedocs.io/

https://github.com/csgoh/processpiper

Snap! Build Your Own Blocks: https://snap.berkeley.edu/

https://en.wikipedia.org/wiki/Functional_flow_block_diagram

Wolfgang Stegmüller / Gotthard Günther - philo papers

Wolfgang Stegmüller - The functions of the 'is'

Ana Maria Guzmán on "Günther’s critique of bivalent logic"

The irrational implications of the word 'is'

The word "is" is probably the most frequently used word in our language. It is equally versatile in terms of logic and semantics. The reason for this is that on the one hand it has a number of very different functions, but on the other hand it was the reason for the formulation of the basic ontological problem, the so-called "problem of being". It easily leads thinking into abstract connections that can no longer be resolved logically.

Let us consider the following cases:

a) "God is" (Note: "God" is the identifier of something non-existent, a paradox)

b) "Berlin is the capital of Germany" (capital is a category)

c) "Timbuktu was a trading center" (trading is a logistical process, 'was' is the past tense)

d) "The whale is a mammal" (implies: giving milk)

e) "8+3 is 11" (talking about equality)

f) "Heinz is clever" (describing a thoughtful, adaptive behaviour)

g) "This is ochre!" (naming an observation)

h) "That's how it is" (confirmation of a situational context)

Summary: The meanings of the word "is"

The three possible interpretations of the predicative "is":

a) The "is" represents a dependent <language symbol> that appears in sentences and sentence fragments. Sentence fragments are open sentences with an individual variable that can be supplemented in two different ways to make meaningful statements in themselves: replacing the variable with an individual designation or placing an "all" or "there is" in front of it: The "nominalist" interpretation.

b) The predicate expressions in predications are class names. The "is" accordingly expresses an element-class relation, called "extensional Platonism".

c) Predicates can be property names, and the "is" - in a predication - accordingly expresses the possible relationships between a thing and the property that this thing possesses, which is called "intensional Platonism".

Encoding facts: RDF - "Semantic Triples"

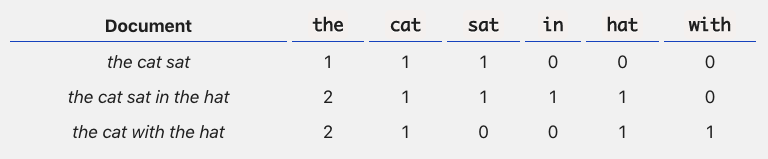

Compare with the word vector encoding in AI:

2D view of the adjacency graph

3D isometric view

The 3D adjacency graph

Allen's algebra: Temporal aspects of RDF triples

Allen classified the possible relations between two processes (intervals) on the time axis into 13 base relations:

In fact, language describing processes is much more complicated. Consider the words and phrases is, was, has been, had been, will be, might be, have, had, has had, had had, will have, might have, and their passive forms becomes, became, has become, had become, will become, might become, might have become (irrealis future, active and passive). They indicate state, ownership, transformation, time, duration and likelihood.

But in principle they are expressible by Allen's interval algebra with <anteriority>, <simultaneity> and <posteriority>.
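A minimal sketch of the interval algebra. It assumes each process is given as a (start, end) pair with start < end and uses the customary English names of the 13 base relations; the function name allen_relation is my own. "before" corresponds to anteriority, "after" to posteriority, and the remaining eleven relations describe some form of simultaneity.

```python
def allen_relation(a, b):
    """Name the Allen base relation of interval a relative to interval b.

    Both intervals are (start, end) tuples with start < end (assumption).
    """
    (a1, a2), (b1, b2) = a, b
    if a2 < b1:
        return "before"
    if b2 < a1:
        return "after"
    if a2 == b1:
        return "meets"
    if b2 == a1:
        return "met-by"
    if a1 == b1 and a2 == b2:
        return "equals"
    if a1 == b1:
        return "starts" if a2 < b2 else "started-by"
    if a2 == b2:
        return "finishes" if a1 > b1 else "finished-by"
    if b1 < a1 and a2 < b2:
        return "during"
    if a1 < b1 and b2 < a2:
        return "contains"
    # Only partial overlap with four distinct end points remains.
    return "overlaps" if a1 < b1 else "overlapped-by"

print(allen_relation((1, 4), (3, 6)))  # overlaps
print(allen_relation((3, 4), (1, 6)))  # during
```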

Advanced time forms describing processes

Planning has much to do with the probability of events happening or resources being available. Planning, of course, always means thinking or reflecting about future events:

- Beginning in the past, ending in the past

- Beginning in the past and recently ended

- Beginning in the past, still ongoing

- Beginning in the past, ongoing, (probably) ending soon

- Beginning in the past, ongoing, (probably) ending (undetermined) in the future

- Beginning in the past, ongoing, (certainly) ending in the (near or far) future

Same for:

Beginning (probably) now ...

Beginning (probably) soon ...

Beginning (probably) in the near future ...

Beginning (probably) in the far future ...

Note: Learn Babylonian / Assyrian language with its many cases and time forms!!

Python and its timeout decorator

Python offers a convenient way to put time limits on such time-dependent processes: https://pypi.org/project/timeout-decorator/
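A minimal sketch of the idea using only the standard library. It is not the API of the timeout-decorator package; the helper run_with_timeout and its return convention are assumptions for illustration. Note that the worker thread is not killed on timeout, the caller merely stops waiting for it.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout

def run_with_timeout(func, seconds, *args):
    """Run func(*args); return ("done", result) or ("timeout", None)."""
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(func, *args)
    try:
        return ("done", future.result(timeout=seconds))
    except FutureTimeout:
        return ("timeout", None)
    finally:
        # Do not block here; the worker finishes in the background.
        pool.shutdown(wait=False)

print(run_with_timeout(lambda: 42, 1.0))        # ('done', 42)
print(run_with_timeout(time.sleep, 0.05, 0.5))  # ('timeout', None)
```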

Unix and C have time functions by default

In Unix and C, time handling is part of the POSIX standard:

https://man7.org/linux/man-pages/man1/at.1p.html

The 'Maybe Monad' in functional programming

Functional programming languages have a "fall through" mechanism for the case that conditions do not match. No transformation is applied then:

https://en.wikibooks.org/wiki/Haskell/Understanding_monads/Maybe
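A minimal sketch of this "fall through" behaviour in Python, where None plays the role of Haskell's Nothing. The helper bind (named after Haskell's >>=) and the example functions are illustrative assumptions, not a library API.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")
U = TypeVar("U")

def bind(value: Optional[T], func: Callable[[T], Optional[U]]) -> Optional[U]:
    """Apply func only if a value is present; otherwise pass None through."""
    return None if value is None else func(value)

def parse_int(text: str) -> Optional[int]:
    """Return the parsed integer, or None if text is not a number."""
    try:
        return int(text)
    except ValueError:
        return None

def reciprocal(n: int) -> Optional[float]:
    """Return 1/n, or None for n == 0."""
    return None if n == 0 else 1 / n

def safe_reciprocal(text: str) -> Optional[float]:
    # Each step runs only if the previous one produced a value.
    return bind(bind(text, parse_int), reciprocal)

print(safe_reciprocal("4"))    # 0.25
print(safe_reciprocal("abc"))  # None
```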

Overlapping Markup - Multi Contextual

RDF semantic triples typically refer to (or are defined in) only one context. With overlapping markup the same content can be assigned to several contexts:

https://en.wikipedia.org/wiki/Overlapping_markup

https://en.wikipedia.org/wiki/Zettelkasten

"Luhmann's Zettelkasten" and Gotthard Günther's "Multicontextual Logic" provide the fundamental logic behind this and are the main drivers for general encodings in the world.

Treating implications

"The whale is a mammal" implies "motherhood" with its processes: childbearing, giving milk, caring, play, educating, training, protecting. These then have to be encoded in dependent (or associated sub-) RDF triples, which builds up a hierarchy.

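A minimal sketch of such a hierarchy of dependent triples. It uses plain (subject, predicate, object) tuples rather than a real RDF library; the predicate names is_a, implies and has_process and the helper functions are assumptions for illustration only.

```python
# Triples are plain (subject, predicate, object) tuples; all names are illustrative.
TRIPLES = {
    ("whale", "is_a", "mammal"),
    ("mammal", "implies", "motherhood"),
    ("motherhood", "has_process", "childbearing"),
    ("motherhood", "has_process", "giving milk"),
    ("motherhood", "has_process", "protecting"),
}

def objects(subject, predicate):
    """All objects o for which (subject, predicate, o) is a stored triple."""
    return {o for s, p, o in TRIPLES if s == subject and p == predicate}

def implied_processes(subject):
    """Follow is_a and implies edges, collecting every has_process object."""
    found, todo, seen = set(), [subject], set()
    while todo:
        node = todo.pop()
        if node in seen:
            continue
        seen.add(node)
        found |= objects(node, "has_process")
        todo.extend(objects(node, "is_a") | objects(node, "implies"))
    return found

print(sorted(implied_processes("whale")))
# ['childbearing', 'giving milk', 'protecting']
```

The query starts at "whale" and reaches the processes only through the dependent triples, so the implication does not have to be stored for every single animal.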
Summary of the dimensionality of language

a) relationships

b) assignment of properties

c) behaviours (active, passive or reacting)

d) assigning events to the timeline

e) has and describes hierarchies, priorities

f) probability or likelihood of events or processes occurring

g) events triggering / stopping processes, happening at the same time

h) the state somebody or something is in

i) implications, excluding something happening on the timeline

j) describing environments or contexts with rules, conditions, limits, possibilities

k) connotations, implications

l) scope

m) parametrising the world and its processes, behaviours, probabilities

Traversal of Knowledge Graphs

Stored knowledge, of course, must be processed. Functional programming languages here have built-in algorithms not only to traverse knowledge graphs, but also to do functional composition based on (knowledge) graphs.

Syntax Trees and Decision graphs

In programming, the so-called "syntax tree" describes the (probable) paths that a piece of information, e.g. one encoded in an RDF semantic triple, can take while being processed. This is the so-called "call graph":

At first glance, syntax trees seem to be DAGs (Directed Acyclic Graphs), but keywords such as for, while, map, zip, goto ... introduce loops and cross connections. Local variables, closures, traits, objects and namespaces introduce a <scope> or <context>, an "environment to be", with limits, allowances, disallowances and predefined states. Implicit here are <types>, see Hindley-Milner "Type Inference".

Functional languages typically have the Kleene Star operator built-in by default: fold, foldr, foldl ...

Example: Encoding the Python world with RDF Graphs

How important it is to store or encode source code in graphs you might get an idea here: https://wala.github.io/graph4code/

GitHub or $git technology with its trees is clearly at its limit here!

The important Kleene Star operator

The Kleene star operator steers how functional programs operate on a complex tree or graph, searching and permuting through not only all combinations of parameters but also all possible functional compositions, similar to e.g. the linear span. Software here builds itself from graphs.

Example of Kleene star applied to a set of strings:

{"ab","c"}* = { ε, "ab", "c", "abab", "abc", "cab", "cc", "ababab", "ababc", "abcab", "abcc", "cabab", "cabc", "ccab", "ccc", ...}.

Example of Kleene plus applied to a set of characters:

{"a", "b", "c"}+ = { "a", "b", "c", "aa", "ab", "ac", "ba", "bb", "bc", "ca", "cb", "cc", "aaa", "aab", ...}.

Kleene star applied to the same character set:

{"a", "b", "c"}* = { ε, "a", "b", "c", "aa", "ab", "ac", "ba", "bb", "bc", "ca", "cb", "cc", "aaa", "aab", ...}.

Example of Kleene star applied to the empty set:

∅* = {ε}.

Example of Kleene plus applied to the empty set:

∅+ = ∅ ∅* = { } = ∅, where concatenation is an associative and noncommutative product.
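The examples above can be reproduced with a small sketch. Since the star of a non-empty set is infinite, the enumeration is cut off at a maximum string length; the parameter max_len and both function names are assumptions for illustration.

```python
def kleene_star(words, max_len):
    """All concatenations of elements of `words` with total length <= max_len."""
    result = {""}    # the empty string ε is always included
    frontier = {""}
    while frontier:
        # Extend every newly found string by one more element of `words`.
        frontier = {prefix + w for prefix in frontier for w in words
                    if len(prefix + w) <= max_len} - result
        result |= frontier
    return result

def kleene_plus(words, max_len):
    """Like kleene_star, but at least one element of `words` must be used."""
    star = kleene_star(words, max_len)
    return {w + rest for w in words for rest in star
            if len(w + rest) <= max_len}

print(sorted(kleene_star({"ab", "c"}, 3), key=lambda s: (len(s), s)))
# ['', 'c', 'ab', 'cc', 'abc', 'cab', 'ccc']
print(kleene_star(set(), 3))  # {''}
print(kleene_plus(set(), 3))  # set()
```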

Use of Kleene Star Operator in Proof Systems

When you try to prove a formula or a theorem mathematically, or want to prove that your software works according to the specs, doing exactly what it is supposed to do and not more (hackers typically look for additional functionality to exploit), you have to search or permute through all possible branches of your program with its typically endless nested if-then-else-if ... constructs.

If you can't verify that you have covered all required variations, and only these, then you're lost! You then can't say anything about the "security" of your system.

This is where the Kleene Star operator comes into play: it permutes through all possibilities that might occur. A huge advantage in functional programming languages! See: https://rentry.co/DSRcourse-Formal-Verification

Impact of Kleene Star on BFS, DFS tree traversal

Kleene Star has an impact on how trees are parsed, searched, traversed, composed: BFS or DFS - see: https://www.baeldung.com/cs/dfs-vs-bfs
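A minimal sketch of the two traversal orders on a small tree. The tree and its node names are illustrative assumptions. Both functions visit the same nodes; BFS lists them level by level (comparable to listing a Kleene star by word length), DFS follows one branch to its end first.

```python
from collections import deque

# A small example tree as an adjacency mapping; the node names are illustrative.
TREE = {"root": ["a", "b"], "a": ["a1", "a2"], "b": ["b1"],
        "a1": [], "a2": [], "b1": []}

def bfs(graph, start):
    """Breadth-first order; assumes a tree, so no visited set is needed."""
    order, queue = [], deque([start])
    while queue:
        node = queue.popleft()
        order.append(node)
        queue.extend(graph[node])
    return order

def dfs(graph, start):
    """Depth-first (pre-order) traversal; assumes a tree as well."""
    order, stack = [], [start]
    while stack:
        node = stack.pop()
        order.append(node)
        # Push children in reverse so the leftmost child is visited first.
        stack.extend(reversed(graph[node]))
    return order

print(bfs(TREE, "root"))  # ['root', 'a', 'b', 'a1', 'a2', 'b1']
print(dfs(TREE, "root"))  # ['root', 'a', 'a1', 'a2', 'b', 'b1']
```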

Kleene Star impact on parser combinators

The Kleene Star is always behind the more sophisticated parsing techniques, such as PEG parsers, Earley parsers and, more generally, parser combinators:

Graham Hutton on functional parsing: https://youtube.com/watch?v=dDtZLm7HIJs

Overview: https://en.wikipedia.org/wiki/Comparison_of_parser_generators

Earley parser paper: https://link.springer.com/content/pdf/10.1007%2F3-540-61053-7_68.pdf

https://python-course.eu/advanced-python/function-composition-in-python.php
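A minimal sketch of how the Kleene star shows up in parser combinators, usually under the name many. A parser here is assumed to be a function taking (text, pos) and returning (value, new_pos) or None on failure; this convention and the names char, choice and many are illustrative, not the API of a particular library.

```python
def char(c):
    """Parser that accepts exactly the character c."""
    def parse(text, pos):
        if pos < len(text) and text[pos] == c:
            return c, pos + 1
        return None
    return parse

def choice(*parsers):
    """Try each parser in turn; return the first success."""
    def parse(text, pos):
        for p in parsers:
            result = p(text, pos)
            if result is not None:
                return result
        return None
    return parse

def many(parser):
    """Kleene star: apply parser zero or more times; never fails."""
    def parse(text, pos):
        values = []
        while True:
            result = parser(text, pos)
            # Stop on failure, or if the parser made no progress.
            if result is None or result[1] == pos:
                return values, pos
            value, pos = result
            values.append(value)
    return parse

ab_star = many(choice(char("a"), char("b")))  # corresponds to {"a", "b"}*
print(ab_star("abba!", 0))  # (['a', 'b', 'b', 'a'], 4)
print(ab_star("xyz", 0))    # ([], 0)
```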

Have fun!