DSR enhanced security and "formal verification" course

One single line that made functional programming languages popular:

tr -cs A-Za-z '\n' | tr A-Z a-z | sort | uniq -c | sort -rn | sed 10q (more in the text)

Introduction to formal proof systems

We're living in a multidimensional world with many constraints and conditions that make our life more complex.

We have invented plenty of methods to find (or exclude) solutions.

Learn: "Positive people find solutions. Negative people find reasons"

To fully understand this course I highly recommend reading Wittgenstein's Tractatus Logico-Philosophicus, Frege's Begriffsschrift and Principia Mathematica by Whitehead and Russell.

https://en.wikipedia.org/wiki/Ludwig_Wittgenstein

https://en.wikipedia.org/wiki/Tractatus_Logico-Philosophicus

https://en.wikipedia.org/wiki/Begriffsschrift

https://en.wikipedia.org/wiki/Principia_Mathematica

- What's the semantic triple or "RDF triple"

- Why formal verification of algorithms is essential

- Geoff Sutcliffe: "Who killed Aunt Agatha"

- From simple gates to higher order functions (From NAND to TETRIS)

- Truth tables, Domain Driven Design, Panes, Impedance Mismatch

- Essential: What matrices have to do with graphs?

- Essential: What are co- and contravariant types?

- The inventor of category theory: Aristotle's 10 categories

- Essential: Law of excluded middle - "Tertium non datur!"

- The "Tertium non datur!" (and other findings) in Agda:

- The famous Y-combinator

- The need for reducing dependencies in Source Code

- Best solver: Picat - written in pure C!

- The 4 color proof

- The Coq/OCaml verification process

- The process of seL4 verification with Haskell and Isabelle

- Wat! - The JavaScript / Microsoft TypeScript nightmare

- SAT "satisfiable" Solver in pure Python

- The cDMN "constraints tables" - compare with "State Machines"

- The Extensionality Axiom - Zermelo-Fraenkel

- Math logic fallacy: Proof that: 2=1

- Proof that 29 is prime?

- The Zermelo-Fraenkel "extensionality axiom"

- What's a "formal spec" that I have to "verify"

- The 'environment' - Strange observations with Rust

- The general problem with math formulas

- Find invariants - things that are true for all times (and under all conditions)

- Dependent Types and Sound Types

- The formal verification process of the seL4 kernel

- The formal verification process of CompCert C compiler

- Word frequency count

- Learning OCaml functional language

- The Hoare triple - Hoare calculus

- Fully Homomorphic Encryption

- How to build your own "formally verifiable" functional language

What's the semantic triple or "RDF triple"

It describes the world in categories of "What is the case?" in Wittgenstein's sense:

1 The world is all that is the case.

1.1 The world is the totality of facts, not of things.

1.11 The world is determined by the facts, and by their being all the facts.

1.12 For the totality of facts determines what is the case, and also whatever is not the case.

1.13 The facts in logical space are the world.

https://en.wikipedia.org/wiki/Semantic_triple

Biggest databases on earth are RDF style:

https://www.w3.org/wiki/LargeTripleStores

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3965039/

That's how we mostly encode the "data" that we type into our computers.
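
As a minimal sketch of the idea (the facts and the helper name `match` are made up for illustration and are not part of any RDF library), a triple store can be modelled as a set of (subject, predicate, object) tuples that is queried by pattern:

```python
# Each fact is one (subject, predicate, object) triple.
TRIPLES = {
    ("car", "has_color", "red"),
    ("car", "has_wheels", "4"),
    ("banana", "has_color", "yellow"),
}


def match(triples, s=None, p=None, o=None):
    """Return all triples that agree with the given parts; None is a wildcard."""
    return sorted(
        t for t in triples
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    )
```

`match(TRIPLES, p="has_color")` then asks "what has a color?", which is the kind of question real triple stores answer with SPARQL.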

Why formal verification of algorithms is essential

Algorithms are all about <how> data is processed. In 2015 researchers noticed that the "Timsort" algorithm, written in 2002 and used for years in Java, Android and Python, breaks in very rare cases: for certain large inputs an internal invariant no longer holds and the Java implementation crashes. Nobody had noticed, since typically all tests passed and nobody even thought that there could be a bug in it. Until one day ...

https://ercim-news.ercim.eu/en102/r-i/fixing-the-sorting-algorithm-for-android-java-and-python

The typical test scenario at that time only verified that the result was correctly sorted in ascending or descending order. Nobody even thought about checking the internal invariants, or about counting the numbers to make sure that none went missing. :-O
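
A small sketch of what a more complete test could look like (the function name `is_correct_sort` is hypothetical): besides the ordering it also checks that the output is a permutation of the input, so a lost or duplicated element is detected.

```python
from collections import Counter


def is_correct_sort(original, result):
    """True only if result is ordered AND contains exactly the original elements."""
    ordered = all(a <= b for a, b in zip(result, result[1:]))
    same_elements = Counter(original) == Counter(result)
    return ordered and same_elements
```

Note that even this is only a test of single cases. A formal proof has to cover all possible inputs.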

The researchers found the bug with the KeY verification tool. You can also use e.g. TLA+ by Leslie Lamport or Agda:

https://old.learntla.com/introduction/ (time related)

https://github.com/agda/agda (non time related)

Note that purely mathematical proofs only work for /time unrelated/ algorithms. To prove e.g. the Paxos or Raft algorithm, you need temporal logic as in TLA+.

I also highly recommend Leslie Lamport's papers on the importance of programming everything as human-verifiable state machines:

https://github.com/gbmhunter/FunctionPointerStateMachineExample/

State machines in TLA+ https://www.learntla.com/topics/state-machines.html

Short version (7 pages): https://www.microsoft.com/en-us/research/publication/computer-science-state-machines/?from=https://research.microsoft.com/en-us/um/people/lamport/pubs/deroever-festschrift.pdf

Long version (27 pages): https://www.microsoft.com/en-us/research/publication/computation-state-machines/

https://www.hyrumslaw.com/

Be aware that every assembler instruction we have seen earlier is in itself a state machine or, better, a "state transformer": It has <entry states> that go through some <transformation> and result in <output states>. On top of that, this time in software, there are logical <entry states> and (a series of) commands that trigger some <state transformation> in the underlying hardware. Together they build a huge <state transformation system>.

Q: What's Java or Python bytecode then? A: Abstract transformers! They work independently of the processor's ISA (Instruction Set Architecture).

Quick jump to Temporal logic: https://rentry.co/DSRsecuritycoursepart9

Geoff Sutcliffe: "Who killed Aunt Agatha"

It's about claims (predicates) and restrictions (constraints) and finding (or excluding) solutions.

It's a very old standard problem every solver had to solve in the past:

Someone who lives in Dreadbury Mansion killed Aunt Agatha.

Agatha, the butler, and Charles live in Dreadbury Mansion,

and are the only people who live therein. A killer always

hates his victim, and is never richer than his victim.

Charles hates no one that Aunt Agatha hates. Agatha hates

everyone except the butler. The butler hates everyone not

richer than Aunt Agatha. The butler hates everyone Aunt

Agatha hates. No one hates everyone. Agatha is not the

butler. Therefore : Agatha killed herself.

https://tptp.org/cgi-bin/SeeTPTP?Category=Problems&Domain=PUZ&File=PUZ001+1.p
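
To make the "finding (or excluding) solutions" idea concrete, here is a brute-force sketch in Python. It is an illustration under my own encoding of the puzzle text (the names `PEOPLE`, `PAIRS` and `possible_killers` are made up, and "richer" is only tracked relative to Agatha), not how a real theorem prover works: it enumerates every possible hates/richer assignment, throws away those that violate a premise, and collects who could be the killer in the remaining ones.

```python
from itertools import product

PEOPLE = ("agatha", "butler", "charles")
PAIRS = [(x, y) for x in PEOPLE for y in PEOPLE]


def possible_killers():
    """Enumerate every hates/richer assignment and collect all consistent killers."""
    killers = set()
    for hate_bits in product([False, True], repeat=len(PAIRS)):
        hates = dict(zip(PAIRS, hate_bits))  # hates[x, y]: x hates y
        # Charles hates no one that Aunt Agatha hates.
        if any(hates["agatha", x] and hates["charles", x] for x in PEOPLE):
            continue
        # Agatha hates everyone except the butler.
        if not all(hates["agatha", x] for x in PEOPLE if x != "butler"):
            continue
        # The butler hates everyone Aunt Agatha hates.
        if not all(hates["butler", x] for x in PEOPLE if hates["agatha", x]):
            continue
        # No one hates everyone.
        if any(all(hates[x, y] for y in PEOPLE) for x in PEOPLE):
            continue
        for rich_bits in product([False, True], repeat=len(PEOPLE)):
            richer = dict(zip(PEOPLE, rich_bits))  # richer[x]: x is richer than Agatha
            # The butler hates everyone not richer than Aunt Agatha.
            if not all(hates["butler", x] for x in PEOPLE if not richer[x]):
                continue
            # A killer hates the victim and is never richer than the victim.
            for k in PEOPLE:
                if hates[k, "agatha"] and not richer[k]:
                    killers.add(k)
    return killers
```

Only a few thousand states have to be visited here. Real solvers prune this search instead of enumerating everything.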

Mathematically this problem is related to linear inequalities:

https://byjus.com/maths/linear-inequalities-in-two-variables/

These are typically solved with Simplex Tableaus: https://en.wikipedia.org/wiki/Simplex_algorithm

*Linear programming itself was in fact pioneered earlier by: https://en.wikipedia.org/wiki/Leonid_Witaljewitsch_Kantorowitsch

(Stupid cowboys continuously rewrite whole Wikipedia for political reasons, http://www.ned.org with their ~88 billion dollar black budget)

Where's the difference? Linear inequalities work on floats, the Agatha problem has to be solved with booleans in the same manner as "truth tables", used in electric circuits:

https://en.wikipedia.org/wiki/NAND_logic

They all can be operated in a matrix, e.g. with GraphBLAS or OpenBLAS:

https://www.openblas.net/ - supported by the German Sovereign Tech Fund!

Q: What is SUDOKU then? A: It's a disjoint states game. Distributing a set { ... } of states over every row, every column and every 3x3 box.

From simple gates to higher order functions (From NAND to TETRIS)

Interestingly, NAND is not only built from simple logic gates (NOT AND); you can in turn express AND, OR, NOT and XOR by NAND circuits alone:

Q = A OR B = ( A NAND A ) NAND ( B NAND B )
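
The following sketch checks such NAND constructions by walking through the complete truth table. The helper names (`nand`, `not_`, `and_`, `or_`, `xor_`, `verify`) are my own; the XOR wiring with four NAND gates is the common textbook construction.

```python
from itertools import product


def nand(a, b):
    return not (a and b)


def not_(a):
    return nand(a, a)


def and_(a, b):
    return nand(nand(a, b), nand(a, b))


def or_(a, b):
    return nand(nand(a, a), nand(b, b))


def xor_(a, b):
    c = nand(a, b)
    return nand(nand(a, c), nand(b, c))


def verify():
    """Compare every NAND construction with Python's own operators on all inputs."""
    for a, b in product([False, True], repeat=2):
        if not_(a) != (not a):
            return False
        if and_(a, b) != (a and b) or or_(a, b) != (a or b):
            return False
        if xor_(a, b) != (a != b):
            return False
    return True
```

With two inputs the state space has only 4 rows, so the exhaustive check is trivial. With n inputs it has 2^n rows.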

Typically, you prove this formula with truth tables. Here you go through the complete state space, which is generated by combinatorics. For example: "How many possibilities are there to spread 4 set bits over a field of 8 bits (one byte)?"

The simple formula is: f(n, r) = n!/(r!(n-r)!)

https://www.calculatorsoup.com/calculators/discretemathematics/combinations.php

Answer is 70. Always keep in mind that to prove or to disprove something, you always have to go over the complete state space!!!

Note: Try other values: 3 and 5 both give 56, 2 and 6 both give 28, 1 and 7 both give 8. You'll notice that the counts follow the binomial distribution, which approximates a Gauss curve: https://en.wikipedia.org/wiki/Gaussian_function
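
A quick way to check these numbers is Python's standard `math.comb`. The helper `combinations_count` below is only there to show the factorial formula itself, and `ROW` is a name I made up for the counts of 0 to 8 set bits in one byte.

```python
from math import comb, factorial


def combinations_count(n, r):
    """n! / (r! * (n - r)!), written out explicitly."""
    return factorial(n) // (factorial(r) * factorial(n - r))


# Number of bytes with exactly r bits set, for r = 0 .. 8.
ROW = [comb(8, r) for r in range(9)]
```

The row is symmetric and peaks in the middle, and its sum is 2^8 = 256, i.e. the complete state space of one byte.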

https://en.wikipedia.org/wiki/NAND_logic

In general: Here you can see that I can express very simple things with more complex bricks. Obviously I can climb the complexity ladder (compare with the famous Wittgenstein Tractatus Logico-Philosophicus, TLP) without losing the ability to do or to calculate even the simplest things with very complex machines. That's what you can observe using Mico$oft Windoof. It has become far too complex!

Also see the famous "From NAND to TETRIS" course by Noam Nisan and Shimon Schocken. It teaches you to build your own computer, capable of running Tetris, starting from NAND gates (= transistors) alone. Back to the roots!

Do that fucking course!!! https://www.nand2tetris.org/

Truth tables, Domain Driven Design, Panes, Impedance Mismatch

Simple truth tables like in this example here: https://en.wikipedia.org/wiki/NAND_logic can be combined into very large truth tables, as e.g. needed to verify computer chips. Interestingly you can split those up and insert logical intermediate layers, where complex states are evaluated and represented by something like "[a complex fact] is the case" to reflect upon. These are called "logical panes". Like summaries at school or Blinkist. The essence of whole books squeezed into 10 sentences.

- Checkbox 1 (size of a hand, longish, curved, yellow?)

- Checkbox 2 (the car is red) RDF triple!

What does that mean: "The car is red"? Under what conditions can I make such a claim?

This process of splitting up complex relationships (e.g. a huge matrix) into human understandable parts, connected by some "glue logic" (the panes), is called "Domain Driven Design".

The more incompatible the models are, the more "glue logic" is needed. That's called "impedance mismatch". See the [forever:] [[move 10 steps] -> [turn 15 degrees] ] example: https://snap.berkeley.edu/ . Can you bring it back to sine and cosine?

At impedance mismatch, the cost of projects explodes.
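
One possible answer to the sine/cosine question, as a sketch (the function name `walk` and its parameters are my own, not Snap! API): each "move" adds a vector given by the cosine and sine of the current heading, each "turn" adds 15 degrees to the heading.

```python
import math


def walk(steps, step_len=10.0, turn_deg=15.0):
    """Return the points visited by 'move step_len, turn turn_deg' repeated."""
    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y)]
    for _ in range(steps):
        x += step_len * math.cos(math.radians(heading))
        y += step_len * math.sin(math.radians(heading))
        heading += turn_deg
        points.append((x, y))
    return points
```

After 24 steps the heading has turned 24 * 15 = 360 degrees and the path closes (up to floating point error): the turtle has drawn a regular 24-gon, which looks like a circle.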

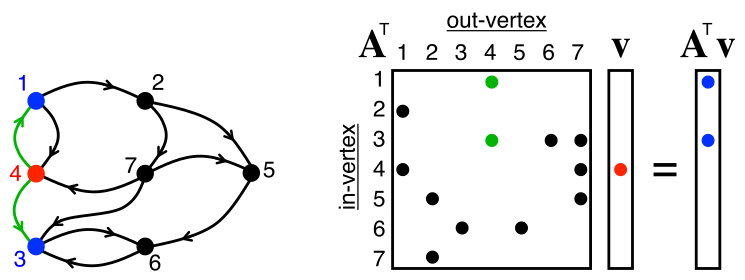

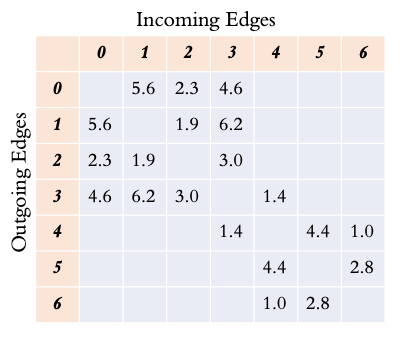

Essential: What matrices have to do with graphs?

Highly recommended to watch these videos first:

The Applications of Matrices | What I wish my teachers told me way earlier

https://youtube.com/watch?v=rowWM-MijXU&t=15m48s

Introduction to GraphBLAS with Python

https://youtube.com/watch?v=JUbXW_f03W0

GraphBLAS discovers hidden relationships within big data

GraphBLAS operations all are operations on mathematical semirings that fulfill special properties. Also compare with: 'abelian group', 'rings', 'semifields', 'idempotency'

To the official homepage: https://graphblas.org/

Giving matrix elements a weight - heavily used in AI!!!

https://python-graphblas.readthedocs.io/en/stable/getting_started/primer.html

The commercial end of Redis-Graph

https://redis.io/blog/redisgraph-eol/

This thesis from a student in St. Petersburg was the reason: https://dspace.spbu.ru/bitstream/11701/39638/2/Master_sThesisV_2.pdf

US CISA intervened, stopped that project: https://www.cisa.gov/about/leadership

Practical use of GraphBLAS or OpenBLAS

What building A² reveals

In the above videos you could see that multiplying an adjacency matrix by itself, i.e. building A², reveals something about its inner structure: hidden logical connections that cannot be seen otherwise. In the case of A² you see who is connected with whom over one indirection.

Watch! https://en.wikipedia.org/wiki/Adjacency_matrix

Calculating A³ reveals clusters in community structures or social networks

By computing A³ you see who is connected with whom over 2 indirections. With this you slowly begin to see e.g. community structures in your enterprise, only by looking at e.g. the internal mail metadata (logs of who communicates with whom), clearly showing who is relevant (or can be laid off). Extract the mail logs, give every employee a number (1-500) and then build the adjacency matrix.

Or use metadata logs provided by Twitter/X or Telegram, which by the way become highly relevant for our secret services finding "terrorist clusters" in social networks (or just a harmless community of people interested in ornithology, i.e. birds).
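
A tiny sketch in plain Python (no GraphBLAS needed; the 4-person chain graph and the names `matmul`, `A`, `A2`, `A3` are invented for illustration). Entry [i][j] of A^k counts the walks of length k from person i to person j.

```python
def matmul(a, b):
    """Multiply two square matrices given as lists of lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


# Undirected chain 0 - 1 - 2 - 3: who mails with whom directly.
A = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]

A2 = matmul(A, A)   # connections over one indirection
A3 = matmul(A2, A)  # connections over two indirections
```

Here `A2[0][2]` is 1 (person 0 reaches person 2 via person 1), while person 3 only shows up for person 0 in `A3`. The diagonal of `A2` gives the number of direct contacts of each person.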

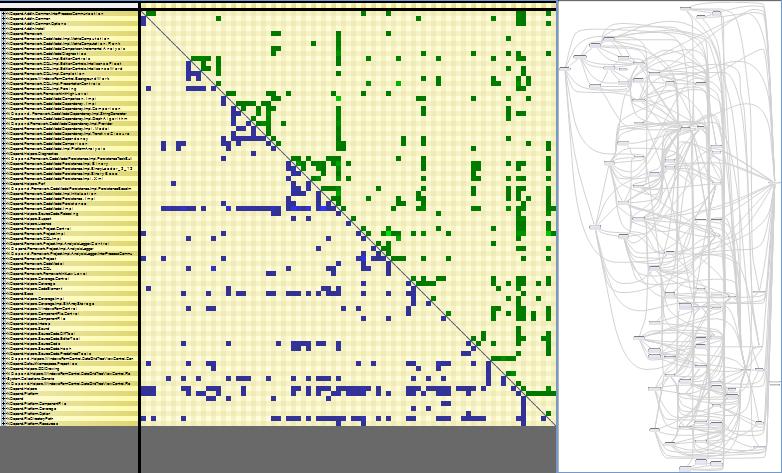

The inner structures of source code

You can discover the inner structures of source code over time, e.g. with Gource:

https://youtube.com/watch?v=9mput42uZsQ

There you sometimes see blobs getting bigger and bigger, building "god classes", which then have to be smashed by refactoring to keep your code maintainable. "God classes" are a clear indicator for "technical debt". That's why e.g. Microsoft had to completely rewrite .NET 3x now. Oracle hasn't even started yet with its Java libraries.

Reason: That would break backward compatibility. But programming with Java becomes more and more of a pain. Most programming patterns are simply outdated and do not fit the new age of distributed computing with many cores. See e.g. the Disruptor pattern.

Highly recommended: New age of HPC algorithms: https://en.algorithmica.org/hpc/

GTK3 GUI library - written in pure C - also had a problem with one god class (the GObject) attracting more and more functionality: https://youtube.com/watch?v=aZf2g__6DkY

The Girvan Newman algorithm

Instead of building A², A³ of the Adjacency Matrix you also can use the Girvan Newman algorithm to discover community structures in metadata of communication or within software:

https://flosshub.org/sites/flosshub.org/files/gonzalezBarahona44-48.pdf

Enabling massive deep neural networks with the GraphBLAS

This here is the burner: https://arxiv.org/abs/1708.02937

https://dspace.spbu.ru/bitstream/11701/39638/2/Master_sThesisV_2.pdf

The Hindley - Milner type inference

Cython necessarily needs type hints, while CPython typically can figure out on its own of what type the variables are. Interestingly, Cython can internally autocomplete missing type hints. This typically is done with the Hindley-Milner algorithm, especially in functional languages. But it also can be done with GraphBLAS.

Essential: What are co- and contravariant types?

Co- and contravariant types differ in the preservation (keeping unchanged) of certain properties. E.g. in a gravitational, spherical world, everything should be on curved coordinates, but for simplicity we keep it Euclidean: Our span is given by the unit vectors {0,0,1}, {0,1,0}, {1,0,0}, which are always perpendicular to each other. This means: I may vary the x, y or z coordinates independently of each other.

Applied to compilers, I always have problems with transforming from decimal to binary "coordinates". 0.3 in decimal poses a serious problem in binary format:

The binary representation of 0.3 is 0.0100110011001100110011001100110011... where the sequence 0011 repeats infinitely. This means that in binary, 0.3 is a non-terminating, repeating fraction. Note: Precision is not equal to accuracy!!!

The same goes for the algorithms themselves. In binary format they differ vastly from what we know from math at school, see: https://graphics.stanford.edu/~seander/bithacks.html
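
This can be reproduced with Python's standard `fractions` and `decimal` modules. The helper `binary_digits` is a hypothetical name; it produces the bits after the binary point by repeated doubling, using exact rational arithmetic.

```python
from decimal import Decimal
from fractions import Fraction


def binary_digits(frac, n):
    """First n binary digits after the point of a fraction 0 <= frac < 1."""
    bits = []
    for _ in range(n):
        frac *= 2
        bit = int(frac)      # integer part is the next binary digit
        bits.append(str(bit))
        frac -= bit
    return "".join(bits)


# What the machine really stores when you write 0.3 (a 64-bit double):
STORED = Decimal(0.3)
```

`binary_digits(Fraction(3, 10), 10)` shows the repeating 0011 pattern, and `STORED` differs from `Decimal("0.3")` because the infinite pattern has to be cut off somewhere. That is also why `0.1 + 0.2 == 0.3` is false for doubles.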

So when Xavier Leroy reports about the "formal verification" of the CompCert C compiler, you might think of this model when you read of "covariant" and "contravariant"

https://xavierleroy.org/publi/compcert-CACM.pdf

The inventor of category theory: Aristotle's 10 categories

When e.g. Bartosz Milewski is fantasising about his categories, he's in fact citing Aristotle from his Organon, chapter IV: https://en.wikisource.org/w/index.php?title=Organon_(Owen)/Categories#Chapter_4

https://github.com/hmemcpy/milewski-ctfp-pdf

https://en.wikipedia.org/wiki/Categories_(Aristotle)

He's the true originator of all that "category stuff" in functional programming:

Aristotle at his time (~350 BC) considered 10 categories to be sufficient:

- (1) substance

- (2) quantity

- (3) quality

- (4) relatives

- (5) somewhere

- (6) sometime

- (7) being in a position

- (8) having

- (9) acting (protagonist)

- (10) being acted upon (antagonist). (Organon, chapter IV)

Q: Living today in a modern civilization: What "qualities" had to be added?

Essential: Law of excluded middle - "Tertium non datur!"

Aristotle: "It is impossible, then, that "being a man" should mean precisely "not being a man", if "man" not only signifies something about one subject but also has one significance. … And it will not be possible to be and not to be the same thing, except in virtue of an ambiguity, just as if one whom we call "man", and others were to call "not-man"; but the point in question is not this, whether the same thing can at the same time be and not be a man in name, but whether it can be in fact." (Metaphysics 4.4)

https://en.wikipedia.org/wiki/Law_of_excluded_middle

In normal human logic the opposite of e.g. "blue" is not "red". It can be brown, green, yellow or whatever. And the opposite of those will not necessarily be "blue" again either.

But in mathematics we need this "narrowing down" to make reasoning about the world in logical, closed models (groups, rings) with algebraic notation possible at all.

So mathematicians are all a little bit, let's say, "small-minded". But in return they can be very precise in their description of the world in physics, chemistry, biology, genetics, philosophy (see Wittgenstein's TLP) ... Also see Gotthard Günther - https://www.vordenker.de/ggphilosophy/ggphilo.htm and Wolfgang Stegmüller - https://www.sgipt.org/wisms/analogik/ist-ws.htm

Be aware that, as a programmer, you're parametrizing the world into computable states. You're the interface between the physical world with its (Wittgenstein) "facts", according to your interpretation, and some stupid transistors doing some logical things for you with it: Nonsense in - nonsense out!

The "Tertium non datur!" (and other findings) in Agda:

The "Tertium non datur": https://github.com/agda/agda-stdlib/blob/master/src/Axiom/DoubleNegationElimination.agda

The "Extensionality Axiom": https://github.com/agda/agda-stdlib/blob/master/src/Axiom/Extensionality/Heterogeneous.agda

Peano / Zermelo Fraenkel Axioms: https://boarders.github.io/posts/peano.html

When you have to prove an algorithm purely mathematically, you can use Agda. For time-related proofs you have to use TLA+.

The famous Y-combinator

It is a so-called higher-order function that replicates itself when put into the λ calculus: λf.(λx.f(x x))(λx.f(x x)) or in simple form: y f = f (y f)

This discovery made the λ calculus useful for programming loops (while true do ... done) and finally helped to prove its equivalence with the Turing machine:

https://en.wikipedia.org/wiki/Church-Turing_thesis
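
A sketch of the idea in Python. Because Python evaluates arguments eagerly, the plain Y-combinator would recurse forever, so this uses the strict variant usually called the Z-combinator (the names `Z` and `factorial` are mine). Note that `factorial` never refers to itself by name; the recursion comes from the combinator alone.

```python
# Z-combinator: the Y-combinator with the self-application wrapped in a lambda,
# so that x(x) is only evaluated when the recursive call is actually made.
Z = lambda f: (lambda x: f(lambda v: x(x)(v)))(lambda x: f(lambda v: x(x)(v)))

# 'self' is supplied by Z; the lambda itself is anonymous and not recursive.
factorial = Z(lambda self: lambda n: 1 if n == 0 else n * self(n - 1))
```

`factorial(5)` evaluates to 120: a loop built from nothing but function application.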

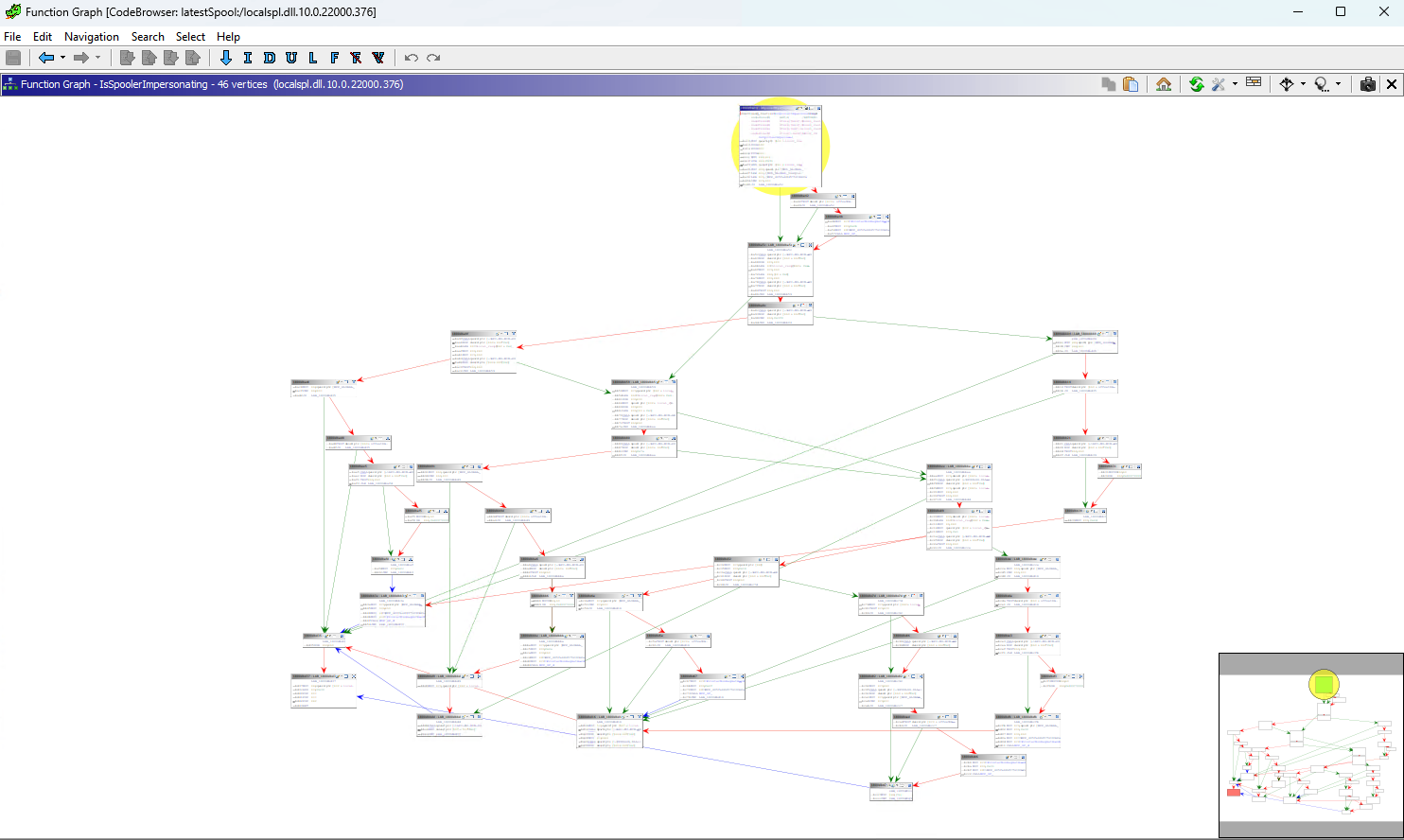

The need for reducing dependencies in Source Code

Two examples:

https://blog.ndepend.com/identify-net-code-structure-patterns-with-no-effort/

and:

Source: https://clearbluejar.github.io/posts/callgraphs-with-ghidra-pyhidra-and-jpype/

Going functional programming languages

One single line that made functional programming languages popular:

tr -cs A-Za-z '\n' | tr A-Z a-z | sort | uniq -c | sort -rn | sed 10q (more in the text)

Functional programming languages significantly reduce cross dependencies between modules and so not only lower long-term maintenance cost, but also make "formal verification" possible. As the example impressively shows, you can replace thousands of lines of code by a simple one-liner in bash.
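
For comparison, roughly the same pipeline as a Python sketch (the function name `top_words` is made up): split on everything that is not a letter, lowercase, count, and return the n most frequent words. Each stage of the shell pipeline maps to one step.

```python
import re
from collections import Counter


def top_words(text, n=10):
    """Return the n most frequent words as (word, count) pairs."""
    words = re.findall(r"[A-Za-z]+", text)       # tr -cs A-Za-z '\n'
    counts = Counter(w.lower() for w in words)   # tr A-Z a-z | sort | uniq -c
    return counts.most_common(n)                 # sort -rn | sed 10q
```

The order of words with equal counts may differ from the shell version, since `sort -rn` and `most_common` break ties differently.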

https://onceupon.github.io/Bash-Oneliner

Best solver: Picat - written in pure C!

The biggest advantage of this solver is that it seamlessly integrates into $ bash shell programs to make even $ bash scripts "intelligent".

Best tutorial so far: http://retina.inf.ufsc.br/picat_guide/

Beginner's intro: https://www.linuxjournal.com/content/introduction-tabled-logic-programming-picat

Picat is very B-Prolog like: https://en.wikipedia.org/wiki/B-Prolog

http://picat-lang.org/download/picat_compared_to_prolog_haskell_python.html

Homepage and docs: http://picat-lang.org/ and http://hakank.org/picat/

1000 examples: https://github.com/hakank/hakank/tree/master/picat

Picat tutorial: https://www.hillelwayne.com/post/picat/

From: http://picat-lang.org/p99/constraints.html

8 Queens problem:

IMHO: Don't look any further. It's the best solver of all times!

Always keep in mind that a solver has to go through all possible states. So it permutes through all possibilities. In the inner for loop you see the constraints, the "rules of the game". This is also called "the model".
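
The Picat listing is not reproduced here. As a stand-in, this Python sketch (not Picat, and far less clever than a real constraint solver; `queens` is a hypothetical name) shows the same split between "go through all states" and "the model": the permutations are the state space, the two diagonal checks are the constraints.

```python
from itertools import permutations


def queens(n=8):
    """Return all placements as tuples: cols[row] is the queen's column."""
    solutions = []
    # A permutation already guarantees one queen per row and per column.
    for cols in permutations(range(n)):
        # The model: no two queens share a diagonal.
        if (len({cols[r] + r for r in range(n)}) == n
                and len({cols[r] - r for r in range(n)}) == n):
            solutions.append(cols)
    return solutions
```

For n = 8 this visits 8! = 40320 states and keeps the 92 known solutions.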

The 4 color proof

A mixture between mathematical, formal and computational proof:

https://youtube.com/watch?v=yBXGdJw1xBI

The Coq/OCaml verification process

https://www.google.com/search?q=ocaml+verification+with+coq+isabelle

Introduction to the Coq/Isabelle proof assistants: https://www.lri.fr/~paulin/LASER/course-notes.pdf

Online proof assistant: https://coq.vercel.app/

Equivalence of imperative (state based) and functional (transformer based) languages:

https://en.wikipedia.org/wiki/Church-Turing_thesis

The long process of proving correctness of the CompCert C compiler:

https://xavierleroy.org/publi/compcert-CACM.pdf

The process of seL4 verification with Haskell and Isabelle

https://www21.in.tum.de/teaching/proof21/SS18/files/14-final.pdf

http://web1.cs.columbia.edu/~junfeng/09fa-e6998/papers/sel4.pdf

https://sel4.systems/Foundation/Board/

https://sel4.systems/About/

Rust on top of seL4? https://sel4.systems/Foundation/Board/Minutes/230928-minutes-unconfirmed.pdf

People do not know what they're doing! US money involved?

Wat! - The JavaScript / Microsoft TypeScript nightmare

https://youtube.com/watch?v=et8xNAc2ic8

The original talk by Gary Bernhardt:

https://www.destroyallsoftware.com/talks/wat

Compare with https://bellard.org/quickjs/ unique features!

SAT "satisfiable" Solver in pure Python

PyRes, a simple resolution-based prover written in pure Python: https://github.com/eprover/PyRes/blob/master/saturation.py
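
To show what "satisfiable" means, here is a deliberately naive sketch that is unrelated to the code in the linked repository. Clauses are lists of integers in the usual DIMACS style (3 means "variable 3 is true", -3 means "variable 3 is false"); the function name `solve` is my own. It simply tries all 2^n assignments.

```python
from itertools import product


def solve(clauses, num_vars):
    """Return a satisfying assignment {var: bool} or None if unsatisfiable."""
    for bits in product([False, True], repeat=num_vars):
        # A clause holds if at least one of its literals holds.
        if all(any(bits[abs(lit) - 1] == (lit > 0) for lit in clause)
               for clause in clauses):
            return {i + 1: bits[i] for i in range(num_vars)}
    return None
```

Real SAT solvers avoid this exponential walk as far as possible by propagating consequences and learning from conflicts.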

The cDMN "constraints tables" - compare with "State Machines"

https://dmcommunity.org/wp-content/uploads/2014/10/who-killed-aunt-agatha-corticon.pdf

The cDMN tables notation: https://cdmn.readthedocs.io/en/latest/notation.html

The Extensionality Axiom - Zermelo-Fraenkel

https://en.wikipedia.org/wiki/Zermelo-Fraenkel_set_theory

To find f(n), add 5 to n and then multiply with 2.

To find g(n), multiply n by 2 and then add 10.

Interestingly, those two functions obviously are not identical in how they compute, but extensionally they deliver the same values for every n:

f(3)=16; f(8)=26;

g(3)=16; g(8)=26; Surprising, isn't it?

Obviously two different operations, but the same outcome! Can you prove their equivalence?
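
A sketch of the two functions in Python. Checking a range of inputs, as done here, is only a test and not a proof; the proof is the distributive law, 2(n+5) = 2n + 10, which holds for every n.

```python
def f(n):
    """Add 5 to n, then multiply by 2."""
    return (n + 5) * 2


def g(n):
    """Multiply n by 2, then add 10."""
    return n * 2 + 10


def agree_on(values):
    """True if f and g return the same result for every given value."""
    return all(f(n) == g(n) for n in values)
```

`agree_on(range(-1000, 1001))` is true, but only the algebraic argument covers the infinitely many remaining inputs.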

Math logic fallacy: Proof that: 2=1

Gödel says that a sufficiently powerful logical system (math) cannot prove its own consistency from within itself. The "proof" here looks like such an inconsistency, but it only hides an illegal step.

Mathematical proofs all have their own "quirks and flaws". While differentiation of math formulas is always straightforward, following simple rules, the opposite, integration, can be quite challenging. And quite often things are overlooked, as in this example:

This kind of logical error is typical for kids at school. They always forget that "dividing by zero" will be heavily punished! But the opposite, "multiplication by 0", is problematic too when you go through the steps "bottom up" in reverse order.
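
The example itself is not reproduced in these notes. A classic instance of such a fallacy, assumed here for illustration only, starts from a = b:

```latex
\begin{align*}
a &= b \\
a^2 &= ab \\
a^2 - b^2 &= ab - b^2 \\
(a+b)(a-b) &= b(a-b) \\
a + b &= b \quad \text{(illegal: division by } a - b = 0\text{)} \\
2b &= b \\
2 &= 1
\end{align*}
```

Every line is fine except the fifth one, where both sides are divided by a - b, which is zero.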

Proof that 29 is prime?

https://en.wikipedia.org/wiki/Primality_test

"Certain number-theoretic methods exist for testing whether a number is prime, such as the Lucas test and Proth's test. These tests typically require factorization of n + 1, n − 1, or a similar quantity, which means that they are not useful for general-purpose primality testing, but they are often quite powerful when the tested number n is known to have a special form

The Lucas test relies on the fact that the multiplicative order of a number a modulo n is n − 1 for a prime n when a is a primitive root modulo n. If we can show a is primitive for n, we can show n is prime."

29 as natural number is between 28 and 30 and we know their prime factors

28 < 29 < 30 we can rewrite to:

4*7 < 29 < 5*6 (imagine 4*7 and 5*6 as rectangles)

There obviously is no other decomposition possible between 4 and 5 and 7 and 6. Therefore 29 must be prime! q.e.d?

WTF is going on here?

Answer: https://en.wikipedia.org/wiki/Axiom_of_extensionality

The problem: There can be other decompositions. I have to make sure that 4*7 and 5*6 are the only decompositions of the neighbours, which they aren't! 2*2*7 and 5*2*3 are possible as well. And none of them says anything about the divisors of 29 itself.

Note: To prove something I have to go through all possible states!
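
For a primality claim, "all possible states" are the candidate divisors. A minimal trial-division sketch (the function name `is_prime` is mine): it is enough to test every d from 2 up to the integer square root of n, because any larger divisor would pair with a smaller one.

```python
import math


def is_prime(n):
    """Trial division over the complete set of relevant candidate divisors."""
    if n < 2:
        return False
    for d in range(2, math.isqrt(n) + 1):
        if n % d == 0:
            return False  # found a decomposition n = d * (n // d)
    return True
```

For 29 the candidates are 2, 3, 4 and 5. None of them divides 29, and only that exhaustive check makes the claim a proof.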

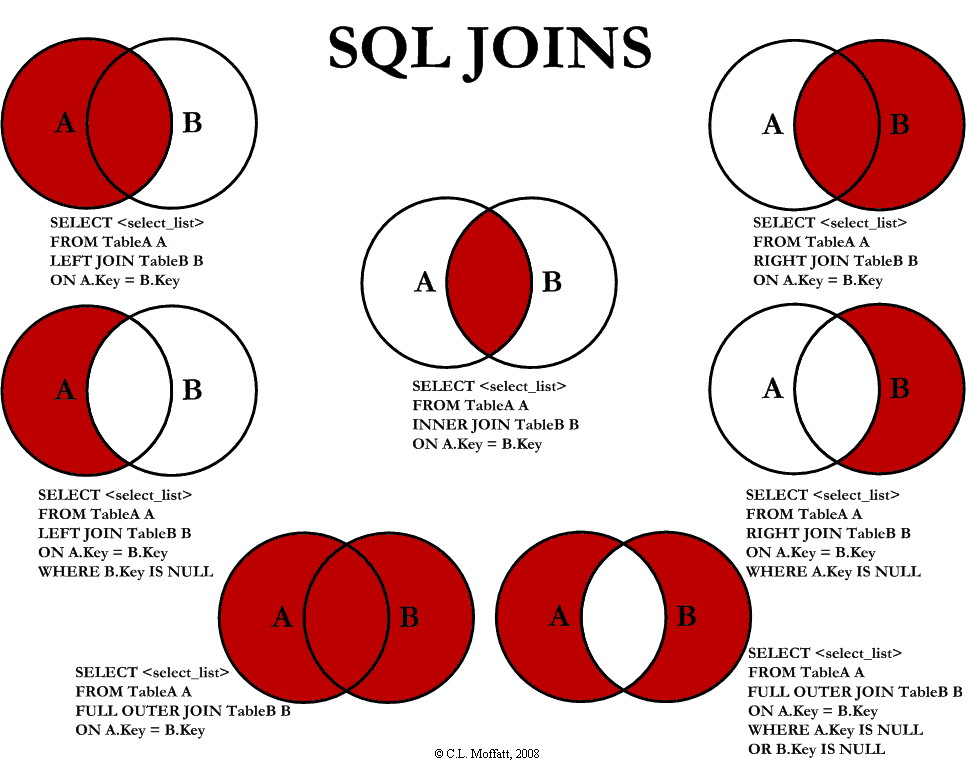

The Zermelo-Fraenkel "extensionality axiom"

In the formal language of the Zermelo–Fraenkel axioms, the axiom reads:

∀𝐴∀𝐵 (∀𝑋 (𝑋∈𝐴 ⟺ 𝑋∈𝐵) ⟹ 𝐴=𝐵)

or in words:

"Given any set A and any set B, if for every set X, X is a member of A if and only if X is a member of B, then A is equal to B."

"If it looks like a duck, swims like a duck, and quacks like a duck, then it probably is a duck." - The "duck" argument: https://en.wikipedia.org/wiki/Duck_test

Interestingly, Zermelo-Fraenkel axioms ("set theory") are the base of the SQL language. All other math above is based on the Peano-Axioms.

https://en.wikipedia.org/wiki/Zermelo–Fraenkel_set_theory

https://en.wikipedia.org/wiki/Peano_axioms

In mathematical proofs I thus have to go through all possibilities. Same for data flow in computer programs. I have to check every possible branch e.g. for dead ends.

What's a "formal spec" that I have to "verify"

It's a set of behaviours: Spec: Set { behaviour 1, behaviour 2 ... behaviour n }

Means: Things have to move so that you can watch whether they behave correctly. A cat that does not move any longer, e.g. because it's dead, no longer shows "a behaviour"!

So if the "behaviours" described in the specs and in the source code finally show up in the machine code, then your program and compiler - together - are "formally verified".

Note: The complete chain must be verified!!! (As described here: https://xavierleroy.org/publi/compcert-CACM.pdf)

The 'environment' - Strange observations with Rust

A Rust programmer pointed me to a strange observation: One of the environment variables in the Bash shell contained an invalid Unicode sequence, a "malformed character".

Result was: The Rust compiler as well as all programs compiled with Rust crashed.

Obviously the inner logic of Rust code and compiled Rust binaries depended on some strange characters which have nothing to do with either the Rust code or the generated binary. Or, at least, they shouldn't!

Why is Rust behaving in such an unpredictable way? Microsoft is heavily sponsoring Rust development, and Microsoft wanted to make sure that Rust runs on the Linux console as well as in the Windows GUI environment, or in an open PowerShell window.

The Rust 'println' function description here says:

Note: “User-friendly” printing is done using the Display trait, debug output (human-readable but targeted at developers) uses the Debug trait. You can find more information about the syntax you can use in println! in the documentation for the std::fmt module. Source: https://rust-cli.github.io/book/tutorial/output.html

What does that mean? Depending on its environment, a Rust binary shows different behaviours. In Windows, the Rust binary checks whether output in PowerShell or in the Windows GUI is wanted and behaves correspondingly. Unluckily, Windows natively uses 16-bit Unicode (UTF-16) only, while in Linux Unicode varies between UTF-8 (ASCII-like) and 16-bit and 32-bit versions. Linux is fully compatible with global Unicode specs, while Windows isn't. And here suddenly Rust had an obvious problem. Which leads us to the next problem:

The general problem with math formulas

In our above examples, the environment is not considered. In physical reality we have two baskets containing things, necessarily at visually distinguishable places. So the baskets cannot be the same, and neither can the items in them. In math, that fact is ignored. Not so in computing. Memory locations are distinguishable as well.

Another thing that is permanently ignored is ***time***. The instruction pointer IP within a CPU core cannot be at different places at the same time.

See the "ABA" and the "TOCTOU" problems:

ABA: https://en.wikipedia.org/wiki/ABA_problem

TOCTOU: https://en.wikipedia.org/wiki/Time-of-check_to_time-of-use

To address such race conditions, processors provide an atomic Compare-And-Swap (CAS) instruction (CMPXCHG on Intel x86). Note that the ABA problem can still occur with plain CAS: https://en.wikipedia.org/wiki/Compare-and-swap

Find invariants - things that are true for all times (and under all conditions)

Proof of the Gauß summation formula with induction

https://de.wikipedia.org/wiki/Gaußsche_Summenformel

What's the sum of e.g. 1 ... 10? We divide in 2 parts and reverse one part:

. 1 2 3 4 5

10 9 8 7 6

=========

11 11 ... 11 = 10/2 * (10+1) obviously. Replacing '10' by x gives me the formula:

½x(x+1) - can I prove that? For x=10 it was correct. And when I replace x by x+1?

½(x+1)(x+2) then should be the same as ½x(x+1)+(x+1), right?

½(x²+3x+2) == ½x² + ½x + (x + 1)

Interestingly, this also holds for an odd number of numbers like [1 ... 11]. Why? Because the step from x to x+1 never assumed that x is even: it did not matter when we added (x+1) to our example with 10. If you prefer the pairing picture, you can add a "0" in front to make the count even again.

Seems, the right duck always quacks like the left duck! q.e.d.
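
A small numeric cross-check of both parts of the induction argument, as a sketch (the names `gauss`, `base_case_holds` and `step_holds` are made up). It cannot replace the algebra above, since it only tests finitely many values, but it catches slips in the formula.

```python
def gauss(x):
    """Closed form for 1 + 2 + ... + x."""
    return x * (x + 1) // 2


def base_case_holds():
    # The sum of the first 1 number is 1.
    return gauss(1) == 1


def step_holds(limit=1000):
    # Induction step: gauss(x + 1) must equal gauss(x) + (x + 1).
    return all(gauss(x + 1) == gauss(x) + (x + 1) for x in range(limit))
```

`gauss(10)` gives 55 and `gauss(11)` gives 66, so even and odd counts behave the same.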

Seems, with ½x(x+1) we have found an "invariant" which is working for all sums of natural, positive numbers. Same proof in Agda:

https://github.com/jsiek/B522-PL-Foundations/blob/master/lecture-notes-part1.lagda.md

Finding and proving "invariants" - that's what mathematics is all about!

Sequences as invariants in programming

The Random Number in your head:

Choose a 2-digit number, say 23, your "seed". Form a new 2-digit number:

The 10's digit plus 6 times the units digit - gives you the next number.

The example sequence is: 23 - 20 - 02 - 12 - 13 - 19 - 55 - 35 ...

The whole cycle is - until it repeats:

01 06 36 39 57 47 46 40 04 24 26 38 51 11 07 42 16

37 45 34 27 44 28 50 05 30 03 18 49 58 53 23 20 02

12 13 19 55 35 33 21 08 48 52 17 43 22 14 25 32 15

31 09 54 29 56 41 10 01

https://groups.google.com/g/sci.math/c/6BIYd0cafQo/m/Ucipn_5T_TMJ?hl=en
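
The rule is easy to replay in Python (the names `step` and `orbit` are my own). `orbit` follows the sequence until a number shows up for the second time, so it also terminates for seeds that never return to themselves.

```python
def step(n):
    """Tens digit plus 6 times the units digit."""
    return n // 10 + 6 * (n % 10)


def orbit(seed):
    """All numbers visited from seed until the first repetition."""
    seen = []
    n = seed
    while n not in seen:
        seen.append(n)
        n = step(n)
    return seen
```

Starting from 01 the orbit has 58 members, exactly the cycle listed above. 59 maps to itself and is therefore a useless seed.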

These cycles are a huge problem in pseudo random number generators and thus in RSA and Diffie-Hellman key exchange (DHKE), as used in the https / ssh protocols.

Multiple XOR encryption

To prevent this you can use several different PRNGs, shifting and XOR'ing them against each other. So when you use 4 x 4096 bits of random numbers and shift them by some random offset, the first repeating sequence logically appears after 4096^4 bits. Enough to confuse your MITM.

So you virtually use a telescope-like mechanism. It's pretty hard to solve this equation system. You need to invert a square boolean matrix with 4096^4 (2.8147498e+14) elements. Here again, the state space explodes.

Another example would be the "Mandelbrot set" (of pixels):

https://colineberhardt.github.io/wasm-mandelbrot/

Can we find the invariants in the Nim game?

Let's play the Nim game: 16 matches

Two players take between 1-3 matches, the player who takes the last match, loses!

Finding the invariant (n%4)+1 saves you from building a tree at all! Why?

https://cgm.cs.mcgill.ca/~avis/Kyoto/courses/ia/2013/notes/luc_devroye.htm
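
The following sketch compares the full game tree with the shortcut. It assumes the invariant is read as "a position with n % 4 == 1 is lost for the player who has to move"; the function names `wins` and `best_move` are hypothetical.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def wins(n):
    """Game-tree search: can the player to move force a win with n matches left?"""
    if n == 0:
        return True  # the opponent just took the last match and lost
    return any(not wins(n - k) for k in (1, 2, 3) if k <= n)


def best_move(n):
    """Shortcut via the invariant: leave the opponent with n % 4 == 1."""
    take = (n - 1) % 4
    return take if take else None  # None: every move loses against perfect play
```

With 16 matches `best_move(16)` is 3, leaving 13. The tree search agrees with the invariant for every n tested, but the invariant answers in one step instead of exploring the tree.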

That's what AI in fact does: Finding the inner dependencies in high dimensional state information and reducing them to only a few relevant states, very much like a funnel.

Finally, genomes are nothing else than "State Space Reducers". They're shaping the world; they work against the second law of thermodynamics (entropy):

https://en.wikipedia.org/wiki/Folding_funnel

Second law of thermodynamics: https://fs.blog/entropy/

Dependent Types and Sound Types

Why Excel miserably fails

Excel has no "sound types". Here you can see what happens when your types are not "sound": https://exceloffthegrid.com/excel-calculate-wrong-results/

The need for a mathematical foundation

So one idea is that "types" in programming should also have a mathematical foundation. And, indeed, there are "Dependent types" and "Sound types", which do have exactly that kind of mathematical "duck argument" foundation!

https://en.wikipedia.org/wiki/Dependent_type

Sound types in Dart

https://dart.dev/language/type-system

"Dart has sound types, which means that it guarantees that the runtime type of a variable will match its static type. TypeScript has no such guarantee, and the added constraint means that any additions to Dart's type system must be very carefully designed and implemented to preserve soundness. In exchange though, you get much more confidence in the runtime behavior of your code, and there are many more opportunities for optimization and dead code elimination that helps Dart run faster and slimmer"

https://medium.com/dartlang/dart-and-the-performance-benefits-of-sound-types-6ceedd5b6cdc

Java has no sound types

https://hackernoon.com/java-is-unsound-28c84cb2b3f

But the Java-like ABS language does

From: https://abs-models.org/manual/

"ABS is a language for Abstract Behavioral Specification, which combines implementation-level specifications with verifiability, high-level design with executablity, and formal semantics with practical usability. ABS is a concurrent, object-oriented, modeling language that features functional data-types."

Fabrice Bellard's QuickJS has sound types!

And Fabrice Bellard's https://bellard.org/quickjs/ has BigNum and BigDecimal long arithmetic!!! These also contribute to "sound types" because, for example, multiplying two 64-bit integers cannot overflow!
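The difference between fixed-width and arbitrary-precision multiplication can be sketched in Python, whose built-in integers also grow as needed. This is a stand-in for illustration only and does not use QuickJS; `mul_wrapping_u64` is a hypothetical helper that imitates a 64-bit register:

```python
MASK64 = (1 << 64) - 1

def mul_wrapping_u64(a, b):
    """Multiply like an unsigned 64-bit register: the high bits are lost."""
    return (a * b) & MASK64

a = b = 2**63
exact = a * b                     # arbitrary precision: the full 2**126
wrapped = mul_wrapping_u64(a, b)  # fixed width: silently wraps around to 0

print(exact == 2**126)  # True
print(wrapped)          # 0
```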

12,000 lines of magic: https://github.com/bellard/quickjs/blob/master/quickjs.c

Smalltalk has sound types

Smalltalk is one of the oldest programming languages and also one of the safest. Not only does it automatically switch between BigDecimal, Fractional and BigNum arithmetic, its inner architecture is quite amazing too. Everything is an object floating around in a vivarium called "object space". Types are implicit. Buffer overflows, by design, do not exist. The only way for objects to communicate is to send messages to each other. If a message from a sender has the wrong format, the receiver simply answers with "message not understood": https://wiki.c2.com/?DoesNotUnderstand

Smalltalk fraction type: http://tpcg.io/_JZSTQ1
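For readers without a Smalltalk system at hand, the idea of an exact fraction type can be sketched with Python's standard `fractions` module. This is an analogy, not Smalltalk code:

```python
from fractions import Fraction

total = Fraction(1, 3) + Fraction(1, 6)        # stays exact: 1/2
float_sum = 0.1 + 0.2                          # binary floating point, rounded
exact_sum = Fraction(1, 10) + Fraction(2, 10)  # exact: 3/10

print(total)             # 1/2
print(float_sum == 0.3)  # False
print(exact_sum)         # 3/10
```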

The WAT! unsoundness of a browser JS engine

What can happen when you implement compilers without verification is shown in the "WAT!" talk by Gary Bernhardt mentioned above:

https://www.destroyallsoftware.com/talks/wat

The formal verification process of the seL4 kernel

https://gernot-heiser.org/

https://sel4.systems/news/#seL4-13.0.0

https://youtube.com/watch?v=E1hWlg-Ms9I&t=13m0s

The formal verification process of CompCert C compiler

42000 lines of Coq proof code had to be written to ensure that CompCert does precisely what it is supposed to do: translating to machine code. Here is the story:

https://xavierleroy.org/publi/compcert-CACM.pdf

On the other hand there is Fabrice Bellard's OTCC C (subset) compiler that fits onto one A4 page, into 2048 bytes, to be precise. Both do exactly the same!

Word frequency count

https://www.cs.tufts.edu/~nr/cs257/archive/don-knuth/pearls-2.pdf

Douglas McIlroy of Bell Labs criticized Knuth's solution as not even being able to process the full text of the Bible, and replied with a one-liner that is not as quick but gets the job done:

tr -cs A-Za-z '\n' | tr A-Z a-z | sort | uniq -c | sort -rn | sed 10q

Revolutionary: It's the way of thinking in "transformers"!!!
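The same "transformer" thinking can be sketched in Python: every stage is a small pure function that mirrors one stage of the pipeline. The function names and the alphabetical tie-breaking are illustrative choices, not part of McIlroy's script:

```python
import re
from collections import Counter

def split_words(text):   # tr -cs A-Za-z '\n'
    return re.findall(r"[A-Za-z]+", text)

def lowercase(words):    # tr A-Z a-z
    return [w.lower() for w in words]

def count(words):        # sort | uniq -c
    return Counter(words)

def top(counts, k=10):   # sort -rn | sed 10q
    # Highest count first; ties are broken alphabetically (an assumption).
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:k]

def word_frequencies(text, k=10):
    """Compose the stages; no stage shares state with any other."""
    return top(count(lowercase(split_words(text))), k)

print(word_frequencies("The cat and the dog and the bird", k=2))
# [('the', 3), ('and', 2)]
```

Each stage can be tested on its own, which is exactly the property the pipeline has.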

https://dev.to/kcdchennai/example-for-text-processing-shell-commands-tr-uniq-sort-sed-and-awk-335f

Examples to get the "mindset": https://www.grymoire.com/Unix/Sed.html

Primary goal is to reduce cross dependencies between functional modules!

It makes your language predictable: https://youtube.com/watch?v=KPa8Yw_Navk&t=34m30s

https://fsharpforfunandprofit.com/posts/is-your-language-unreasonable/

With that, "formal proofs" become possible.

A followup article about "Literate Programming":

https://web.archive.org/web/20210725060244/https://www.cs.upc.edu/~eipec/pdf/p583-van_wyk.pdf

Haskell solution: https://franklinchen.com/blog/2011/12/08/revisiting-knuth-and-mcilroys-word-count-programs/

Benchmarks: https://codegolf.stackexchange.com/questions/188133/bentleys-coding-challenge-k-most-frequent-words

Lessons learned from the Word Frequency Count example

tr -cs A-Za-z '\n' | tr A-Z a-z | sort | uniq -c | sort -rn | sed 10q

- Functional programs always can be split up into 'state independent' modules

- There is no 'state propagation' since everything is implemented as 'transformer'

- All operations follow mathematical laws. There always is an "inverse element" for "undoing" or reversing an operation: https://en.wikipedia.org/wiki/Inverse_element. SQL servers, for example, do not follow these mathematical principles

- These modules always can be independently verified (see http://justine.lol/lambda example)

- Functions are always pure - no side effects! (https://purescript.org/ and https://agraef.github.io/pure-lang/)

- Once a λ function is verified, it does exactly what it was meant for - and nothing else!

- λ functions never have any secret functionality, no "encapsulation", no "private" or "protected", unseen or unreachable states or functionalities

- Immutability is always guaranteed. Never change facts in databases backwards in time!!! Compare Datomic with e.g. SQL servers. Compare to blockchain.

- Functional integrity and correctness can only be guaranteed within the program. Any kind of storage or output therefore always is a 'maybe monad'. (You can never know whether the hard drive is working or the graphics card is there. The same holds for all kinds of input via keyboard, mouse, ...; see the evil Rust traits example.)

Learning OCaml functional language

The new 2024 OCaml learning book:

https://cs3110.github.io/textbook/ocaml_programming.pdf

The book: https://cs3110.github.io/textbook/cover.html

OCaml tutorials: https://youtube.com/playlist?list=PLre5AT9JnKShBOPeuiD9b-I4XROIJhkIU

Note: OCaml has no US dependencies like LLVM or GNU. It's French! Think European!

Also worth knowing: F# is closely modelled on OCaml. ReasonML is a JavaScript-like syntax built on the OCaml compiler; it is as if OCaml had morphed its syntax. With ReasonML you can now reimplement OCaml again, if you like. ML stands for Meta Language. Meta = (Greek) above, beside.

https://en.wikipedia.org/wiki/Reason_(programming_language)

https://en.wikipedia.org/wiki/Standard_ML

HINDLEY-MILNER TYPE INFERENCE: https://www.youtube.com/watch?v=_yDo9Q9EOHY

https://en.wikipedia.org/wiki/Hindley-Milner_type_system

The Hoare triple - Hoare calculus

The central feature of Hoare logic is the Hoare triple. A triple describes how the execution of a piece of code changes the state of the computation. A Hoare triple is of the form: {P} C {Q}

Here P and Q are assertions and C is a command. P is named the precondition and Q the postcondition: when the precondition is met, executing the command establishes the postcondition. Assertions are formulae in predicate logic.

Hoare logic provides axioms and inference rules for all the constructs of a simple imperative programming language. In addition to the rules for the simple language in Hoare's original paper, rules for other language constructs have been developed since then by Hoare and many other researchers. There are rules for concurrency, procedures, jumps, and pointers.
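A triple {P} C {Q} can at least be checked at run time, which is weaker than proving it with the inference rules but shows the roles of P, C and Q. A minimal Python sketch follows; `hoare`, `ContractViolation` and the division example are illustrative names, not part of any library:

```python
class ContractViolation(Exception):
    """Raised when P does not hold before, or Q does not hold after, C."""

def hoare(pre, command, post):
    """Wrap `command` so that P is checked before and Q after it runs."""
    def checked(state):
        if not pre(state):
            raise ContractViolation("precondition P does not hold")
        result = command(dict(state))  # run C on a copy, keep the old state
        if not post(state, result):
            raise ContractViolation("postcondition Q does not hold")
        return result
    return checked

def divide(s):
    """C: integer division by repeated subtraction."""
    q, r = 0, s["x"]
    while r >= s["y"]:
        q, r = q + 1, r - s["y"]
    return {**s, "q": q, "r": r}

checked_divide = hoare(
    pre=lambda s: s["x"] >= 0 and s["y"] > 0,                    # P
    command=divide,                                              # C
    post=lambda old, new: old["x"] == new["q"] * old["y"] + new["r"]
                          and 0 <= new["r"] < old["y"],          # Q
)

print(checked_divide({"x": 17, "y": 5}))  # {'x': 17, 'y': 5, 'q': 3, 'r': 2}
```

Calling `checked_divide({"x": 1, "y": 0})` is rejected by P before the loop can run forever. A proof in Hoare logic would establish Q for all states satisfying P, not only for the inputs that happen to be tried.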

https://en.wikipedia.org/wiki/Hoare_logic

https://www.eiffel.org/doc/eiffel/ET-Design_by_Contract%28tm%29%2C_Assertions_and_Exceptions#Preconditions

Eiffel language - Design by Contract™

https://www.eiffel.org/doc/eiffel/ET-Design_by_Contract(tm)%2C_Assertions_and_Exceptions#Design_by_Contract

Fully Homomorphic Encryption

The idea: End-to-End encryption and all calculations happen on encrypted data only!

Slideshow: https://www.zama.ai/introduction-to-homomorphic-encryption

Paper: https://thomas-plantard.github.io/pdf/Chen18.pdf

Lattice-based cryptography: points have tens of thousands of coordinates. Finding a combination of the basis vectors that comes close to a target point in all 10,000 coordinates simultaneously turns out to be quite hard.

https://medium.com/cryptoblog/what-is-lattice-based-cryptography-why-should-you-care-dbf9957ab717

There are many lattices possible to use. Let's take e.g. a logarithmic lattice:

The core idea: Instead of multiplying 2 numbers you can add their precomputed logarithms. For dividing, subtract their logarithms.

Addition works if you precompute 2^x or 10^x values: log(10^x) = x.

So log(10^x) + log(10^y) = x + y. The same holds for subtraction.

Only +, -, *, / operations are possible, no loops: https://github.com/google/fully-homomorphic-encryption/tree/main/transpiler

On a lattice, it's then just an affine jump - compute offset + (x,y)

On top you can use the "Vernam" XOR cipher to make it quantum safe:

https://en.wikipedia.org/wiki/XOR_cipher

https://en.wikipedia.org/wiki/Gilbert_Vernam

Interestingly you don't need to precompute so many numbers. You can do a stepwise approach:

log(10.0 + 0.1) = ?

This formula helps: ln(a + b) = ln(a) + ln(1 + b/a) = ln(b) + ln(1 + a/b)

So to reduce the amount of precomputed values on an endless lattice, you can compute the logarithms step-wise to finally reach the needed accuracy. That's why FHE typically is dog-slow (several seconds per operation).
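The logarithm arithmetic described above can be sketched in plain Python. This only illustrates the identities on unencrypted numbers and performs no encryption; the function names are illustrative:

```python
import math

def mul_via_logs(a, b):
    """a * b computed by adding logarithms (a, b > 0)."""
    return math.exp(math.log(a) + math.log(b))

def div_via_logs(a, b):
    """a / b computed by subtracting logarithms (a, b > 0)."""
    return math.exp(math.log(a) - math.log(b))

def log_of_sum(a, b):
    """ln(a + b) = ln(a) + ln(1 + b/a): only a small correction term is new."""
    return math.log(a) + math.log1p(b / a)

print(math.isclose(mul_via_logs(6, 7), 42))                   # True
print(math.isclose(log_of_sum(10.0, 0.1), math.log(10.1)))    # True
```

`math.log1p` is used for the correction term because it stays accurate when b/a is small, which is the stepwise case discussed above.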

To further protect the computation on Linux:

https://man7.org/linux/man-pages/man2/memfd_secret.2.html

Note: You need to activate this with a special boot parameter. It is not enabled by default.

How to build your own "formally verifiable" functional language

Justine Tunney is a brilliant programmer to learn from. She implemented her own lambda operator in C and x86 assembler to put her own small Lisp on top. It has everything modern functional programming languages have, such as a fully featured garbage collector, tail recursion, even (Haskell's) laziness.

Implemented in under 512 bytes: http://justine.lol/sectorlisp

Also see: http://justine.lol/lambda

Note: Only simple systems can be understood by a single human, verified, and finally be reliable and maintainable. Especially interpreters that fit on one A4 page!

You can, of course, easily extend the C version of her lambda interpreter with C libraries! See: https://rentry.co/DSRsecuritycoursepart11

Have fun!

Back to last lesson - https://rentry.co/DSRsecuritycoursepart5 Next lesson: https://rentry.co/DSRsecuritycoursepart7