Pygmalion H&Q FAQ because answering the same question over and over again sucks!

A lot of issues can simply be solved by updating your stuff, so update before reading any further.

These were written assuming you're using SillyTavern as a frontend, but they also apply to other easily customizable platforms like PygmalionAI.

Feel free to DM me on Discord (.trappu) if you have any more questions or think something is missing/needs further elaboration.

- Q: What are the best settings for RP/adventure/narration/chatting?

- Q: My character is outputting complete nonsense. How to fix?

- Q: How can I improve my character's responses?

- Q: Which model should I use?

- Q: How can I use a model? Which frontend? Which backend?

- Q: Where can I find characters?

- Q: What are lorebooks? How do I use them?

- Q: How to use models on mobile?

- Q: My character keeps talking for me. How can I stop that?

- Q: How can I stop my model from speedrunning the roleplay and talking about bonds all the time?

- Q: How to get shorter responses? (Pygsite/ST)

- Q: What's Author's/Character's Note?

- Q: How do I import settings?

- Q: How do I import/choose instruct/context templates?

Q: What are the best settings for RP/adventure/narration/chatting?

A: Sampler settings (Temperature, Repetition Penalty, etc...) are, most of the time, what make or break models, and very few people know how to set them up properly to make the experience as enjoyable as possible. I highly recommend you use this bad boy. If you don't know how to import settings presets, click here for a little guide.

The way this preset works is, every sampler is disabled except Temperature, Min P, and Repetition Penalty / DRY Rep Pen.

- Repetition Penalty: It's 99% of the time going to be fine at 1.05. Recommended values: 1.05 - 1.15. Anything higher than that and your gens are gonna be odd.

- DRY Rep Pen: Works kinda like Rep Pen except it penalizes tokens based on repeating sequences. I like it over Rep Pen because there's a possibility for the latter to catch good tokens in the crossfire. DRY Rep Pen is less likely to do so. Recommended values: Multiplier = 0.8, Base = 1.75, Allowed Length = 2, Penalty Range = 1024

- Temperature: Each token has a probability attached to it and Temperature is the sampler that affects that. Higher temperature will flatten the curve, meaning that there will be higher chances that the model picks a token that it otherwise wouldn't have. Recommended values: 1 - 5 (I'll elaborate on that later.)

- Min P: This is a relatively new sampler that's really great to filter out bad tokens. What it does is set a threshold where tokens that are under a minimum probability will simply be removed. This acts like a filter that gets rid of tokens you won't want to see. Recommended values: 0.05 - 0.2

Temperature and Min P are samplers that work really well together; Min P gets rid of bad tokens while Temperature increases the likelihood of seeing a wider variety of tokens. Min P makes it so that a lot of bad tokens are kicked out before Temperature increases their probability, which means it's possible to use very high Temperature values as long as you change Min P accordingly. For starters, I recommend 3 different combinations:

- Temperature 1.20 and Min P 0.1 | Very safe values, extremely unlikely to cause any problems.

- Temperature 1.40 and Min P 0.1 | Safe values, more fun than the former, safer than the latter.

- Temperature 1.80 and Min P 0.15 | A little risky, very fun values but not recommended on dumb models.

This is, of course, my personal preference so you'll want to experiment with them yourself. This preset is also very beginner-friendly and easy to tune.

Boring gens = increase Temperature or reduce Min P.

Unhinged gens = reduce Temperature or increase Min P.

Doing this will put you well above the rest and give you the optimal (afaik) LLM RP experience.
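To make the Min P and Temperature interplay more concrete, here's a minimal Python sketch. It isn't how any particular backend implements sampling. It assumes the usual Min P definition (the cutoff is the top token's probability multiplied by the Min P value) and that Min P runs before Temperature, as described above. The function names and the toy logits are made up for illustration.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature flattens the curve."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def min_p_then_temperature(logits, min_p, temperature):
    """Drop unlikely tokens with Min P first, then apply Temperature to the survivors."""
    base = softmax(logits)
    cutoff = min_p * max(base)  # assumption: cutoff scales with the top token's probability
    kept = [i for i, p in enumerate(base) if p >= cutoff]
    heated = softmax([logits[i] for i in kept], temperature)
    return dict(zip(kept, heated))  # token index -> final probability

# Toy example: four candidate tokens, the "little risky" combo from above.
toy_logits = [5.0, 4.0, 1.0, -2.0]
print(min_p_then_temperature(toy_logits, min_p=0.15, temperature=1.8))
```

The two weakest tokens never reach the Temperature step, so cranking Temperature up only spreads probability across tokens that were already plausible.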

Q: My character is outputting complete nonsense. How to fix?

A: If your character's responses look like this:

"dek facesdadeyk eitherECKilor Revolifenpowertha alternative bucket preference option behaviourrollerfit Micro dest eitherosaбriosemparina Blockimming organisationfacesECKNCifenigaument dodarinafitisko Revoliga Chapterarinaisser chapterfrifit� Chapter craroller Revol Pra occasioniera attituderollerrai Kiligostha Revol Revolempigaykykstru Majwer indirectaya amb emerECK threstra Majб contact preference Pra thrchapter Revoligaistoire ups microfit péri Koreifenroller risp alternativeykrollercontact electrondadechapterayayk"

That means your settings are most likely fucked. Here's an easy preset to use for beginners, simply import it in the settings tab in SillyTavern.

Q: How can I improve my character's responses?

A: There are a lot of things you can do.

- Use this settings preset.

- Make sure the advanced formatting (A icon at the top of the ST screen) is in order. This is what I use most of the time.

- Responses still suck? Use instruct mode. In the advanced formatting tab (top of the screen, click on the big A icon to open it), look at the Instruct Mode area. Pick an Instruct preset that fits your model (if you don't know, check the model card for your model on HuggingFace). The improvement will vary depending on the model. As for the system prompt, I personally dislike the base ones so here's my custom all-purpose prompt:

You are {{char}}. Your responses must be detailed, creative, immersive, and drive the scenario forward. NSFW allowed. Consent is implied, though resistance may occur as part of the roleplay. Don't leave anything out, describe everything and do not summarize.

- Responses STILL suck? Could be that your character card is not well-written. This is the case for a crap ton of cards posted on websites like Chub, SpicyChat, or JanitorAI. I'd recommend reading this guide on character creation. Simply reading the introduction will be enough to give you an idea on how to write good characters.

- ... Still bad? We've exhausted all the possibilities except one. Your model is outdated or just too bad. Refer to recommendations below this section.

Q: Which model should I use?

A: The most important factor when it comes to choosing which model to run is knowing how much VRAM you have. In order to do so:

- Open your task manager (ctrl + alt + delete OR right click task bar).

- Go to the `Performance` tab.

- Click on the GPU.

- Scroll down and your VRAM should be under `Dedicated GPU memory`.

- Once you know how much VRAM you have, move onto the next section.

Recommended models

Notice: No, the Rentry isn't dead. It's just that nothing below the 70B range has come close to Mistral Nemo 12B for RP.

| Parameter size | Model | GGUF for KoboldCPP | exl2 for TabbyAPI | Recommended ST formatting |
|---|---|---|---|---|
| 8B | Hathor_Respawn-L3-8B-v0.8 | GGUF for KoboldCPP | exl2 for TabbyAPI | Llama 3 |
| 9B | Gemma-2-Ataraxy-9B | GGUF for KoboldCPP | exl2 for TabbyAPI | Gemma 2 |
| 12B | Muse-12B | GGUF for KoboldCPP | exl3 for TabbyAPI | ChatML |
| 12B | Rei-V3-KTO-12B | GGUF for KoboldCPP | exl2 for TabbyAPI | ChatML |
| 12B | Dans-PersonalityEngine-V1.1.0-12b | GGUF for KoboldCPP | exl2 for TabbyAPI | ChatML |
| 12B | Bigger-Body-12b | GGUF for KoboldCPP | nada | ChatML |
| 12B | Magnum-Picaro-0.7-v2-12b | GGUF for KoboldCPP | exl3 for TabbyAPI | ChatML |
| 12B | MN-GRAND-Gutenberg-Lyra4-Lyra-12B-DARKNESS | GGUF for KoboldCPP | exl2 for TabbyAPI | Read the model page |
| 22B | UnslopSmall-22B-v1 | GGUF for KoboldCPP | exl2 for TabbyAPI | Minimalist or Mistral (might lead to more assistant-y behavior) |
| 27B | magnum-v4-27b | GGUF for KoboldCPP | exl2 for TabbyAPI | ChatML |
| 32B | Archaeo-32B | GGUF for KoboldCPP | exl2 for TabbyAPI | ChatML |
| 70B | Llama-3.3-70B-Instruct | GGUF for KoboldCPP | exl2 for TabbyAPI | Llama 3 |

Tip: Each of these models really wants the appropriate formatting (Instruct/Context templates). If you don't know how to import/pick instruct or context templates, click here.

Figuring out which quant and which model size you should run

The bigger the better (usually). Use this calculator to figure out which models you can run. It's not perfectly accurate but it gives you a good idea of what you can run.

When picking which quant to use, you should generally aim to run as big of a quant as your hardware allows, but Q5_K_M / 6.0bpw is the sweet spot where you get both speed and performance. Q4_K_M / 5.0bpw is a good alternative if Q5_K_M / 6.0bpw is too slow. Going below IQ4KS / 4.0bpw is not really recommended because the quality loss increases exponentially the smaller the quant is. Bigger models (>30B) are more forgiving when it comes to small quants, allowing you to get good outputs even at Q3_K_M / 3.75bpw.
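If you want a feel for the numbers before opening a calculator, here's a rough back-of-the-envelope sketch in Python. The only hard fact in it is that the weights take roughly parameters × bits per weight / 8 bytes. The `overhead_gb` allowance for context and buffers is a made-up placeholder, and the function names are hypothetical. The bpw figures map directly onto exl2 quants; GGUF quant names don't correspond to exact bit counts, so for those, go by the file size instead.

```python
def estimate_weights_gb(params_billions, bits_per_weight):
    """Approximate size of the quantized weights in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_weight / 8

def fits_in_vram(params_billions, bits_per_weight, vram_gb, overhead_gb=2.0):
    """Rough check; overhead_gb is an assumed allowance for context cache and buffers."""
    needed = estimate_weights_gb(params_billions, bits_per_weight) + overhead_gb
    return needed <= vram_gb

# A 12B model at the 6.0bpw "sweet spot" versus the 4.0bpw floor, on a 12GB card.
for bpw in (6.0, 5.0, 4.0):
    size = estimate_weights_gb(12, bpw)
    print(f"12B @ {bpw}bpw: ~{size:.1f} GB weights, fits in 12GB: {fits_in_vram(12, bpw, 12)}")
```

Treat the result as a sanity check only. Longer context sizes eat more VRAM than the flat allowance used here.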

Q: How can I use a model? Which frontend? Which backend?

A: To run a model, you need a frontend, and a backend. The backend is where the model will be running, and the frontend is there to help with prompt formatting and a bunch of annoying stuff.

Frontend

The undisputed best frontend atm is SillyTavern, specifically the Staging branch because it's honestly really stable and just allows you to get the cool features before the main branch people.

Backend

You have 3 options to pick from, depending on your hardware and the model you're attempting to run:

- KoboldCPP

KoboldCPP is currently the most beginner-friendly backend, as well as the best when it comes to running GGUF models, which are models that can be loaded using both your GPU and CPU. This is your best option if you want to run a model you can't load fully on your GPU due to a lack of VRAM, or if you want an easy-to-use backend. If you have no clue what to choose, then this is the one for you. If you have an NVIDIA GPU, make sure to select the CuBLAS preset before loading a model and set the GPU layers to however many your GPU can handle. Don't rely on the automatic estimation since it tends to underestimate the layers your GPU can handle by a looooot.

If you have an AMD GPU, get this version of KoboldCPP instead.

Tip: The default BLAS batch size is set to 512. I recommend lowering it to 256 if you struggle to fit every single layer of a model on your GPU.

- TabbyAPI

TabbyAPI is a backend that specializes in running exl2 models, which are currently considered the fastest and most VRAM-efficient type of quant. This is the backend I recommend if you have enough VRAM to be able to load a model FULLY on your GPU. Refer to this calculator if unsure.

- Colab

Colab's free tier allows the use of a T4 with 16GB of VRAM for a couple of hours. Once again, refer to this calculator to figure out what can fit on Google's T4. Do be careful, though. You'll eventually be disconnected if you use it for a long time, in which case, get another Google account and go again.

The model is loaded. Now what?

Once your model is loaded, a link will be given to you at the bottom of your backend's console. Copy that link, go to SillyTavern's API tab (2nd icon at the top of the screen), select Text Completion API, select either KoboldCPP or TabbyAPI or whichever backend you chose, then paste the link in the API URL box. Make sure your settings are in order:

- For generation settings, grab this preset and import it in ST.

- For advanced formatting (A icon), copy this screenshot. If you're using one of the models recommended above, I HIGHLY suggest enabling instruct mode and importing the Instruct presets I provided along with the model.

Q: Where can I find characters?

A: You'll find high quality, curated character cards on Pygmalion's website and Discord. If you couldn't find what you were looking for, feel free to check Chub's website. It's very hit or miss because there is absolutely 0 quality check.

I'm personally a firm proponent of writing your own character cards rather than getting them from unreliable sources if you don't really know what to look out for (chub, janitor, spicychat, etc...). Refer to this guide to learn how to write characters by hand. Alternatively, if you're feeling lazy and want an already-existing character with a wiki, refer to this portion of the guide on how to use ChatGPT to write your characters in less than 10 minutes.

You can also check out my Rentry if you need some inspiration or would like to see how character cards are meant to be written.

Q: What are lorebooks? How do I use them?

A: World Info/Lorebooks is a feature in SillyTavern that allows you to create some sort of folder filled with entries that each contain a piece of information. Each Lorebook entry is generally linked to a keyword. The moment the model sees a keyword, the entry's contents are thrown in the model's memory. There are lots of options available but here are the ones you mainly need to know about to get started:

Scan depth

Defines how many messages ST will scan for keywords. The higher the value, the longer each entry will remain in context. I like a value of 8.

Context%

Defines a limit on how many tokens worth of entries can be triggered. It's equal to a percentage of your context size.

Content

This is the prompt that gets injected in the context (model's memory) when at least 1 of the entry's keywords is encountered. These can be formatted in 3 ways (that I find good).

- PList: This is a trait list, useful for describing concepts while using as few tokens as possible. It follows this format: `[Thing: trait, trait, trait, etc...]`

- Plain text: Super basic, you just write a paragraph about the concept you're trying to introduce.

- Example Dialogue: A little harder for beginners. Recommended for character-specific lorebooks. Interview style is just the easiest, it's like this:

{{user}}: "Hey, tell me about X"

{{char}}: "Ah, yes, X," {{char}} said, "X is..."

This is just an example, you'd wanna write the character's response the same way you think your character would speak.

Primary keywords

A list of words that, when seen by the model, will trigger the entry and inject the contents of the related entry. When coming up with keywords, try to think about words you or the model could say naturally that would trigger the entry when appropriate. For example, an entry that describes "mana crystals" as a form of currency that can only be acquired by doing missions would have keywords like mana, crystal, currency, dungeon, money, coins, poor, rich, fortune, trade, buy, sell, well-off, gate, reward, quest, bounty, job.

Position

Defines where your lorebook entries are injected in the prompt.

- Before char: The default. Before char entries are injected above the description box's contents in the prompt. It's recommended that your entries in plain text are at this position if you're not sure what to do.

- @ D: This is basically like author's note and allows you to have the entries injected X messages away from the very last message sent. I recommend putting your PList lorebook entries @ D = 4-8. The lower, the stronger. If you have some sort of Jailbreak or instruction, you can put it @ D = 0.

Order / Priority

Defines which entries get priority when multiple are triggered at the same time. The more essential an entry is, the lower the order should be.

Status

3 options:

- Blue: Makes the entry permanent. It's always in context.

- Green: Makes the entry conditional. It needs one of the entry's keywords to be within scan range to be inserted into the prompt.

- Red: Disables the entry.

The above is a very surface-level explanation of World Info / Lorebooks. If you'd like to learn more about them, I highly suggest reading this in-depth guide on World Info.

Q: How to use models on mobile?

A: Just like on PC, you're gonna need both a frontend and backend, but the options are much narrower.

Android

- Frontend: SillyTavern is still your best option. Follow this guide on how to install and run SillyTavern on Android with Termux. Make sure to follow the instructions to install the staging branch. After it's done, all you need to do whenever you want to use SillyTavern is type `git pull` to update and `npm run start` to run it.

  - Getting an error message? Try `npm i` then run SillyTavern. Still getting an error message? Try `npm ci` then run it again. If that doesn't work, join the SillyTavern Discord for help. Make sure to read error messages thoroughly, sometimes they tell you what to do to solve your issue. Also copy paste the error in Google to see if others have had the same issue. The last thing you should do is ask a question that's been asked billions of times before in a support channel!

  - SillyTavern doesn't pop up when you do `npm run start`? Copy the URL in green at the bottom of Termux, which should look something like http://127.0.0.1:8000/, then paste it in your browser.

- Backend: Although it's possible to run models on your phone's CPU, let's not bother with that. Your best option is Colab. Make sure to check the `API` box before running it. Colab's free tier allows the use of a T4 that has 16GB VRAM for a couple of hours. Once again, refer to this calculator to figure out what can fit on Google's T4. Do be careful, though. You'll eventually be disconnected if you use it for a long time, in which case, get another Google account and go again.

iOS

- Frontend: Termux is unavailable for iOS so no SillyTavern. Instead, you have 2 alternatives:

- If you have a PC with SillyTavern, it's possible to remotely access your PC's ST instance on your phone. This guide covers how to do it better than I can so go read it! It's a really simple process.

- If you don't have a PC, or don't wanna bother setting up ST remote access, I recommend PygmalionAI. Although still in development, PygmalionAI has most of the features I consider necessary in order to have a pleasant experience with LLMs.

- Backend: Your best option is Colab. Make sure to check the `API` box before running it. Colab's free tier allows the use of a T4 that has 16GB VRAM for a couple of hours. Once again, refer to this calculator to figure out what can fit on Google's T4. Do be careful, though. You'll eventually be disconnected if you use it for a long time, in which case, get another Google account and go again.

Remote access

It's possible to run SillyTavern on your computer and remotely access it on your phone. This guide covers how to do it better than I can so go read it!

Q: My character keeps talking for me. How can I stop that?

A: Let's get a common misconception out of the way first. Telling the model `Do not write for {{user}}` in Author's Note is 99% of the time not going to work on small, open source models. Negative instructions, as a whole, are much less effective than positive instructions (e.g. `Do not write short responses` vs `Write long and detailed responses`). Heck, negative instructions tend to have the opposite effect most of the time. The best way to teach the model to do something is to set a pattern that it can then follow. This is, by the way, what example dialogues do, and why Ali:Chat is such a powerful way to write characters.

Okay, done rambling. There are many things that could cause impersonation and many possible fixes. Here are the ones that are the most likely to work:

- In the advanced formatting tab (big A icon, top of the screen), if `Instruct mode` is disabled, make sure the `Always add character's name to prompt` box is checked. If it wasn't, clear your chat and try again.

- If that didn't work, the next step is enabling `Instruct mode`, picking an Instruct Preset that fits your model and enabling `Include names`. You can find which instruct preset fits your model on the model's HuggingFace page, or, if you're using one of the models I recommend, back here.

- If you still get impersonation, then it's most likely an issue with the way your character card was written. Character cards, at the end of the day, exist to set a pattern that shows the model how the user speaks, and how the character speaks (now you understand why I rambled about pattern setting earlier). If the character's example dialogues have the character describe the user's actions, then that pretty much tells the model "Hey, it's okay to impersonate the user." This is the key reason I personally recommend people write their own character cards, because most of the cards you'll find on websites like Chub or SpicyChat were written by people who probably haven't used local models enough to know the key mistakes they must avoid making.

What it means is that if the previous steps didn't fix impersonation, your next step is going through the card's example dialogues and greeting message and getting rid of every bit where the character describes the user's actions. This usually occurs in the greeting message where people tend to write something like `{{user}} walks in and spots {{char}}`.

Q: How can I stop my model from speedrunning the roleplay and talking about bonds all the time?

A: This is a common issue with models when tasked to generate looooooong responses (400+ tokens) without guidance. They tend to converge towards a happy ending, talking about unbreakable bonds, doing time skips, etc... I can think of 3 ways to get around that:

- Handholding the model, basically instructing it on what to do in the chat OR making sure your own messages help keep the model in check. You could, at the end of your response, add an instruction in the form of `[Instruction: {{char}}'s next response must be detailed and bla bla bla]`. Completely up to you what kind of instruction you write in there as it's situational.

- Much less effective than the previous solution and not guaranteed to work perfectly, but it's possible to put an instruction formatted like the one above on how the bot's next response should be in Author's Note at Depth = 0. The pros are, you won't have to rewrite the instruction after every single message, and it'll greatly influence the character's responses. Cons are, what you'll want in there is gonna be situational and it's easy to forget there's something in Author's Note. It's also annoying to constantly have to go in and out of Author's Note to edit the instruction when needed. There are also points where you won't want the instruction to be active.

- Be more proactive. As stated earlier, models will converge towards slop the longer gens are. This can easily be avoided if you take an active role in the scenario to steer it in whichever direction you prefer. The more a model has to work with, the less likely it is to hit you with the really boring stuff.

Q: How to get shorter responses? (Pygsite/ST)

A: Getting a model to output shorter or longer responses isn't as straightforward as one might think, because doing so would require modifying the model's perspective on what you, the user, consider a "good" response, since models simply do what they interpret as being the "best" thing. There are a few ways to reach that outcome:

- Reducing `Max Tokens` and enabling `Trim Incomplete Sentences` or using `continue`

`Max Tokens` is a setting you can edit through your chosen sampler preset (Pygsite: Chat tab, Completion > Sampler > Editor). What `Max Tokens` does is put in place a hard cap on how many tokens the model is allowed to generate before it's interrupted. The interruption happens regardless of whether the model was in the middle of a sentence, which might lead to sentences being abruptly cut off. In order to remedy this, you have 2 possibilities:

  - a. Enable `Trim Incomplete Sentences`. This option makes it so that, if a model's output is interrupted mid-sentence, the website will trim the excess and leave you with a nice and complete output.

  - b. Use the `continue` feature. This will make the model continue the output where it left off and potentially complete it.

- Putting an instruction in the `Author's Note` field.

To keep it brief, `Author's Note` is a field that allows you to write something that's then injected near the bottom of the prompt. The closer to the bottom something is, the more impact it has on the next output. You can put a lot of things in `Author's Note`, but in this case, the goal is to decrease output length. My recommended `Author's Note` if that is your goal is `[ Instruction: Keep responses concise and conversational. Focus on direct dialogue with minimal narration. Actions should be brief and only included when essential to the scene. Let the conversation flow naturally through short exchanges rather than lengthy descriptions. Avoid internal monologues or detailed scene-setting unless specifically relevant to the immediate dialogue. ]`. For ST users, set the depth between 1 and 4. The lower, the stronger.

This is just what I personally use and you're free to write your own instruction based on the style of messages you'd like the model to output. VERY IMPORTANT THOUGH: make sure your instruction is wrapped in brackets like the one above or things won't go well.

- Use the `One Paragraph` sampler preset, or add `\n` as a `stop string` in your chosen sampler preset (Pygsite) or Advanced Formatting tab (ST).

The `One Paragraph` preset, as its name implies, forces outputs to be interrupted after 1 paragraph has been generated. This one is fairly straightforward and doesn't require much explaining. If you like your current preset and don't wish to switch to this one, the alternative is to edit your current preset / Advanced Formatting tab and add `\n` as a stopping string.

- Edit your character.

This one is the most effective method, but also the most tricky one that you should probably go for as a last ditch effort if the above didn't work.

As explained before, models simply generate what they think is what you, the user, would like to see. When you write example dialogues for a character card, the goal is to establish a pattern that the model will latch onto and want to follow. That means that your example dialogues, but most importantly, your character's greeting message will have a massive impact on what the model will want its outputs to look like. A long greeting message will lead to long outputs. Same thing for example dialogues. Models seek patterns and they will always feed on previously-established patterns.

Try editing the greeting message and making it shorter.

If that doesn't work, edit the example dialogues to make them shorter as well.

If you have no idea how to write characters, refer to my PLists + Ali:Chat character writing guide.

- Try another model.

Some models just really really like sticking to the data they were trained on. A model trained on a LOT of really long outputs will have a harder time adapting and writing shorter outputs. That's the reason why Pygmalion hosts multiple models, because people have different preferences when it comes to how they want a model to behave. Picaro and Magnum are more likely to give long outputs while Imp will be more "to the point." If using an instruction in `Author's Note` and editing the character card to include shorter examples doesn't work, then your next step should be to experiment with other models offered by the website.

If you're an ST user, you can try other models from the list.
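For the curious, trimming incomplete sentences and stopping at a stop string both boil down to simple string handling. Here's a rough Python sketch of the idea. The function names are made up and the sentence detection is deliberately naive, so don't take it as what Pygsite or ST actually run under the hood.

```python
import re

def apply_stop_string(text, stop="\n"):
    """Cut the output at the first occurrence of the stop string."""
    return text.split(stop, 1)[0]

def trim_incomplete_sentence(text):
    """Drop whatever trails after the last sentence-ending punctuation mark."""
    # A sentence end is ., ! or ?, optionally followed by closing quotes, asterisks or parentheses.
    endings = list(re.finditer(r"[.!?][\"'*)]*", text))
    if not endings:
        return text  # nothing that looks like a finished sentence, leave it alone
    return text[:endings[-1].end()]

# Pretend the model hit the Max Tokens cap in the middle of a sentence.
cut_off = 'She nodded. "Sure thing!" Then she turned and'
print(trim_incomplete_sentence(cut_off))

# Pretend the model wrote two paragraphs and "\n" is set as a stop string.
print(apply_stop_string("First paragraph.\nSecond paragraph."))
```

Note that a real backend checks stop strings while generating, so the model stops early instead of having its output cut after the fact.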

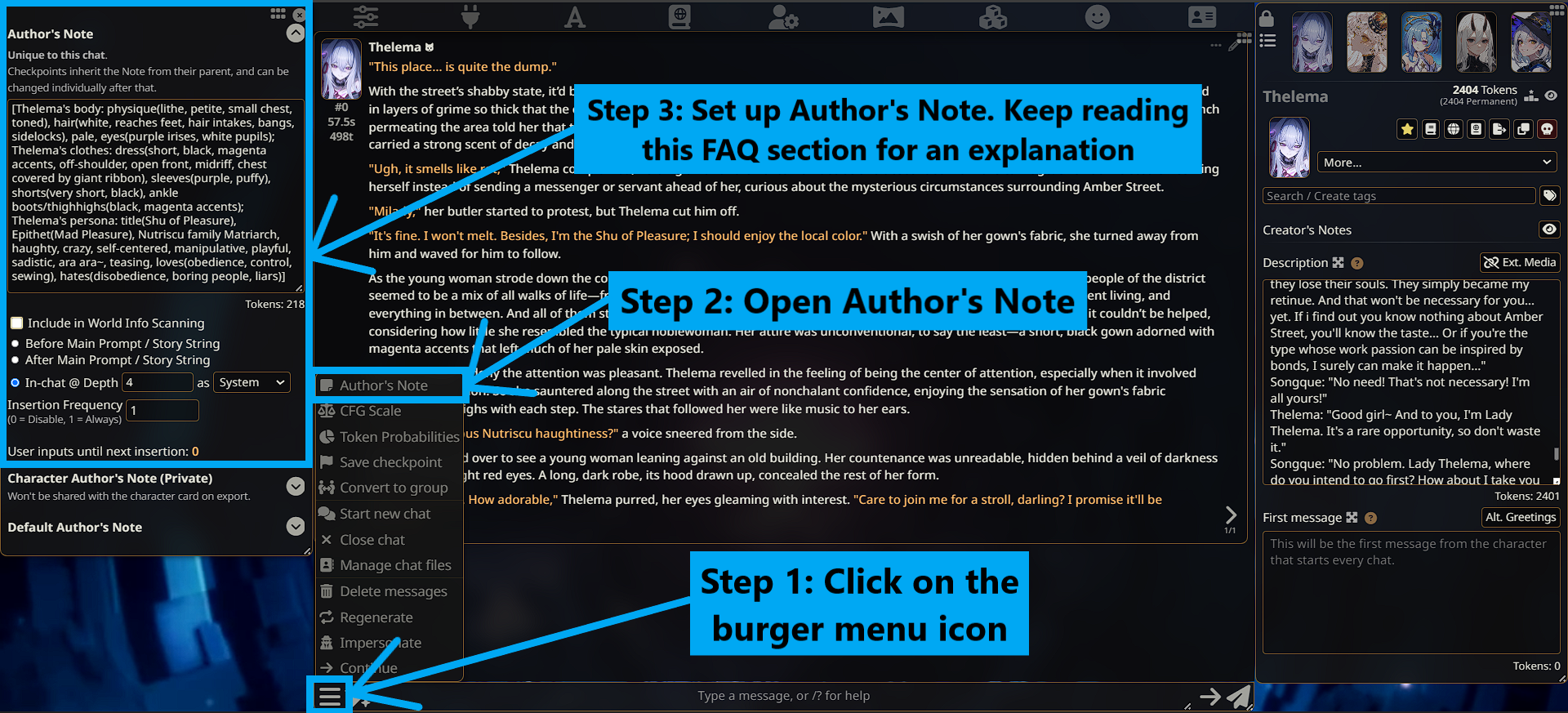

Q: What's Author's/Character's Note?

A: Here is how you access Author's/Character's Note:

Author's/Character's Note is one of the best and most underused features some frontends offer. What it does is allow the user to have a certain "note" injected at a set depth and frequency in the prompt. This note could contain anything: a PList (trait list), an instruction, a jailbreak, etc... Author's Note generally comes with 4 options:

Note

The Note is text that's injected in the prompt. It can be used in many ways but I personally only use it for two things:

- Instruction: It is possible to use Author's Note to instruct the model on how its outputs should be formatted or what they should contain. I personally like using depth = 0 and frequency = 1 for them. An example could be `[Instruction: {{char}}'s next response must include narration and dialogues. Be creative and make the scenario engaging.]`.

This is very basic. You could instruct it to use a more casual language, maybe more explicit words so as to avoid innuendos, etc...

- Character reinforcement: Author's Note can also be used as a tool to reinforce character traits and force the model to remain in character. If you've read the character creation guide, you should already know about it. For those who haven't, I really like having something called a PList in Author's note at depth = 4 and frequency = 1. Here's an example:

[Eden's appearance: hair(long, fiery), eyes(golden), dress(crimson, silk), sleeves(Juliet-style), bodice(tight), gold trim, skirts(pleated, full), tights/gloves(black), hairpin/earring(golden); Tags: fantasy, slice of life, romance; Scenario: Conversation between {{user}} and {{char}}; Eden's persona: calm, soft-spoken, generous, velvety-toned, brilliant, confident, extravagant, rich, title(brightest star of the era), loves(singing, performing, giving out precious items, wine, being drunk), wants to be admired, elegant, poetic, young, plays the harp].

Frequency

This defines the frequency at which the Author's Note is injected in the prompt. 1 means it's always there, 0 means it's disabled, 2 means once every 2 messages, etc... For the sake of simplicity, leave it as 1 unless you know what you're doing.

Depth

This defines the Author's Note's placement in the prompt. The lower the depth, the stronger the Note is.

- If you want the Note to heavily influence the outputs, have a depth of 0.

- If you want the Note to help guide the model, or if it contains a PList, have a depth of 4-8.

- If you want the Note to be a slight nudge, such as a scenario or minor point, have a depth of 9+.

The ideal depth is always going to depend on your goal, model, message length, etc... The values I provided are arbitrary and won't always fit what you're looking for, so I highly recommend you figure out your own preferred values.
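Here's a small Python sketch of what Depth and Frequency boil down to, if you think of the chat as a list of messages. This is only an illustration: `inject_note` is a made-up function, and counting frequency against the total number of messages is my simplifying assumption, not necessarily how your frontend counts it.

```python
def inject_note(messages, note, depth=4, frequency=1):
    """Insert `note` so that `depth` messages come after it; depth 0 = very bottom."""
    # Assumption: frequency N means the note is only present when the
    # message count is a multiple of N; 0 disables it entirely.
    if frequency <= 0 or len(messages) % frequency != 0:
        return list(messages)
    position = max(len(messages) - depth, 0)  # a depth past the chat start lands at the top
    return messages[:position] + [note] + messages[position:]

chat = ["msg 1", "msg 2", "msg 3", "msg 4", "msg 5", "msg 6"]
note = "[Instruction: {{char}}'s next response must include narration and dialogues.]"

for line in inject_note(chat, note, depth=0):
    print(line)
print("---")
for line in inject_note(chat, note, depth=4):
    print(line)
```

At depth 0 the note is the last thing the model reads before writing, which is why it hits the hardest. At depth 4 there are four messages between the note and the next response, so it guides more than it commands.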

Role

This option defines the "role" your Note has and is only relevant if you have Instruct Mode enabled.

- System: Your Note will count as a system message, and will therefore have the `system message prefix` and `suffix` defined in your instruct preset added to it.

- User: Your Note will count as a user message, and will therefore have the `user message prefix` and `suffix` defined in your instruct preset added to it.

- Assistant: Your Note will count as an assistant message, and will therefore have the `assistant message prefix` and `suffix` defined in your instruct preset added to it.
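To picture what "prefix and suffix added to it" means, here's a tiny Python sketch using ChatML's standard role markers. The `CHATML` dictionary and `wrap_note` function are hypothetical names for illustration, and the exact whitespace in your frontend's preset may differ.

```python
# ChatML role markers; other instruct formats use different prefixes and suffixes.
CHATML = {
    "system": ("<|im_start|>system\n", "<|im_end|>\n"),
    "user": ("<|im_start|>user\n", "<|im_end|>\n"),
    "assistant": ("<|im_start|>assistant\n", "<|im_end|>\n"),
}

def wrap_note(note, role, template=CHATML):
    """Surround the note with the prefix and suffix of the chosen role."""
    prefix, suffix = template[role]
    return f"{prefix}{note}{suffix}"

print(wrap_note("[Instruction: Keep responses concise and conversational.]", "system"))
```

The Note's text is the same in all three cases. Only the wrapper changes, which changes who the model thinks is "saying" it.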

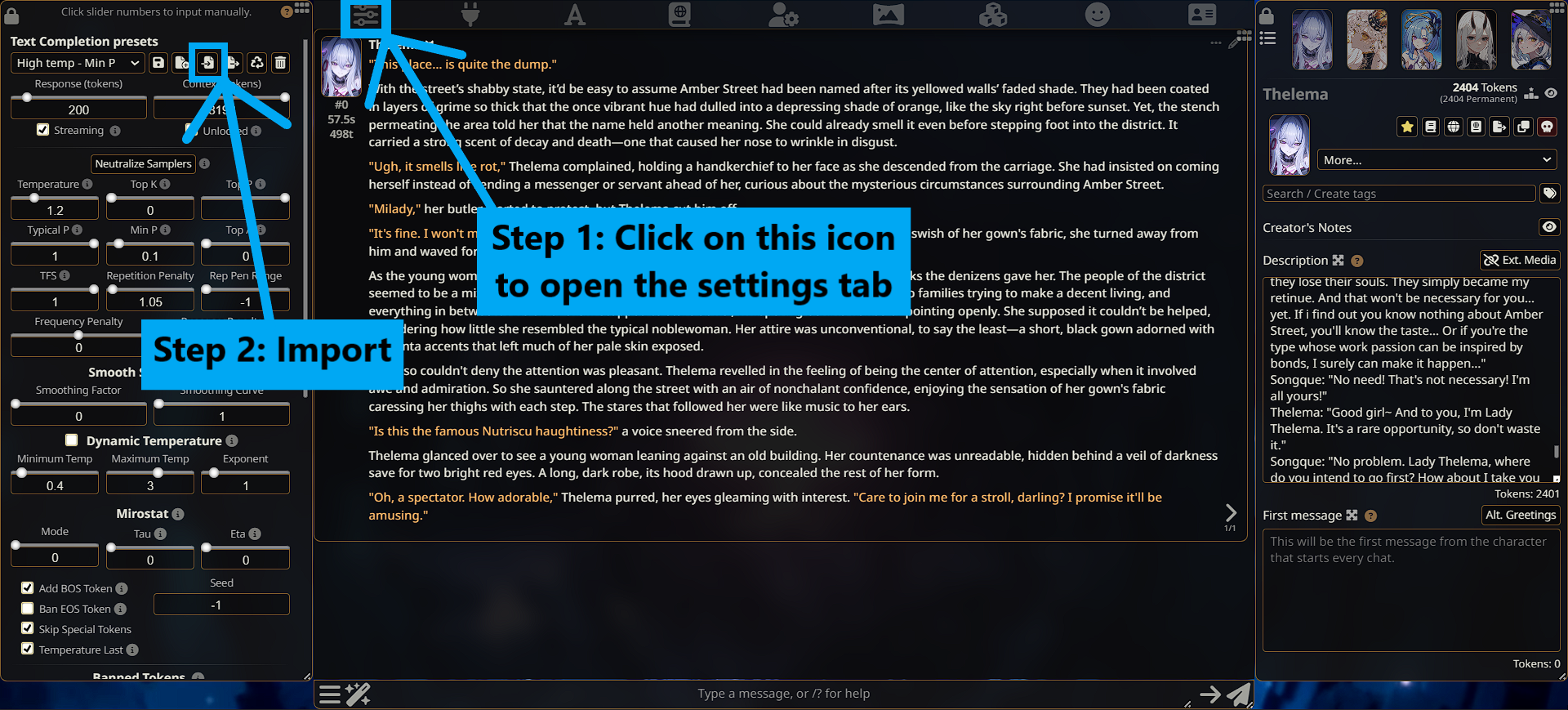

Q: How do I import settings?

A:

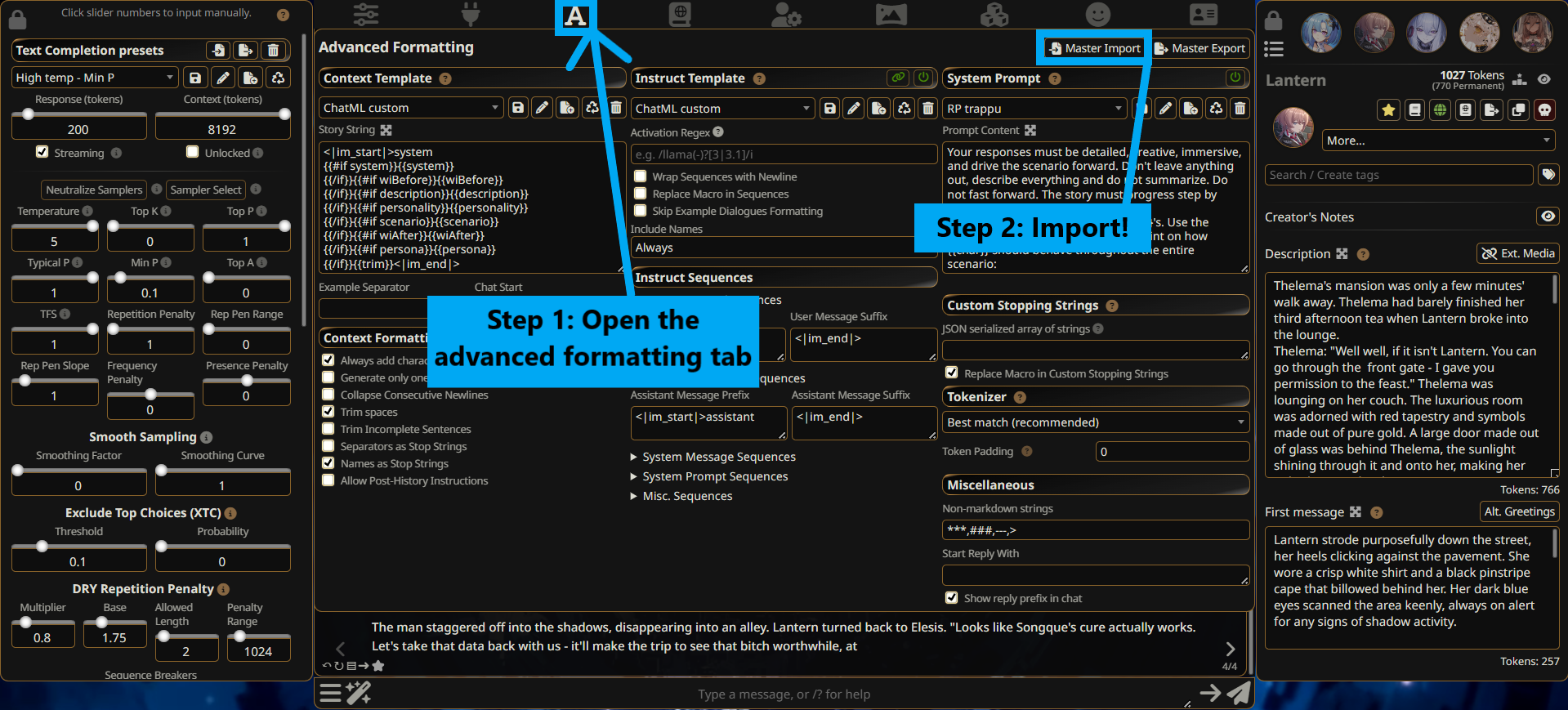

Q: How do I import/choose instruct/context templates?

A:

Note: If I didn't link a formatting template, that means ST already comes with it included (ChatML, llama 3, Gemma, etc...). You can simply select them in the advanced formatting tab seen below without importing anything.